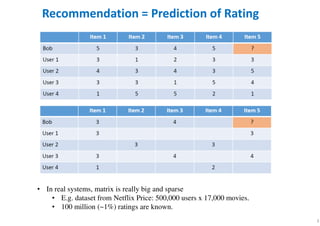

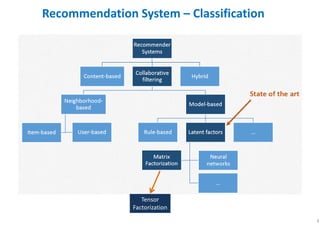

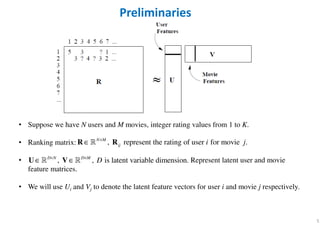

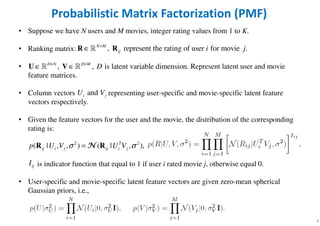

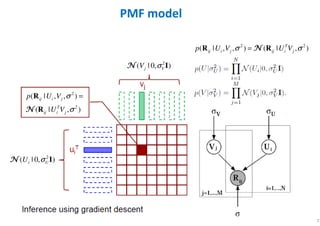

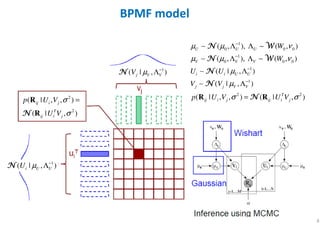

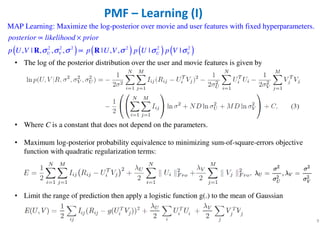

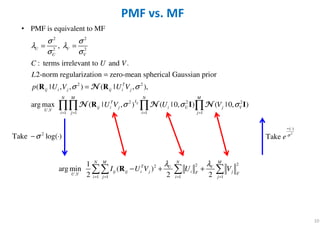

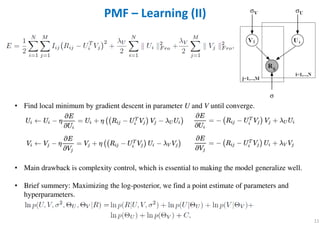

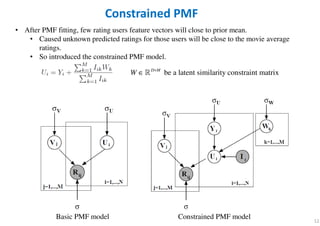

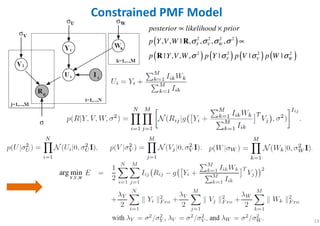

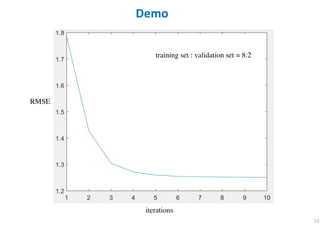

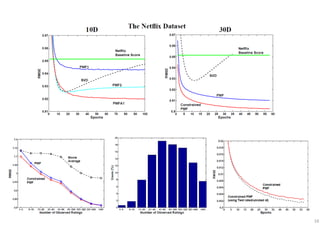

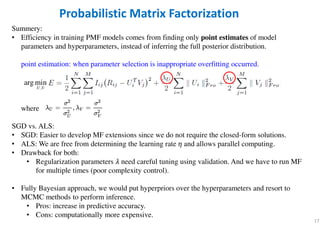

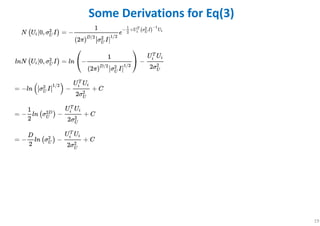

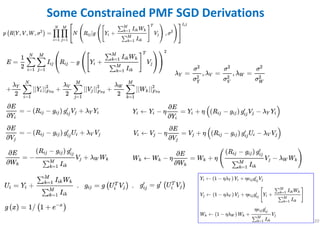

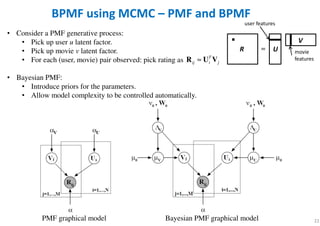

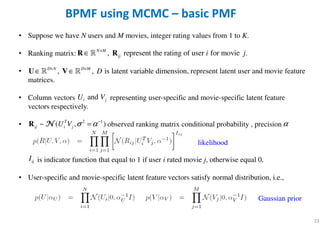

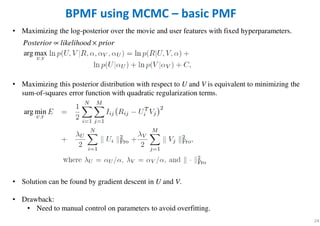

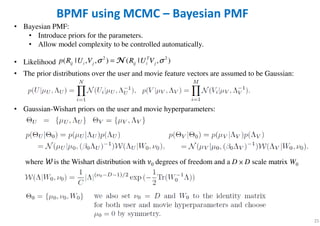

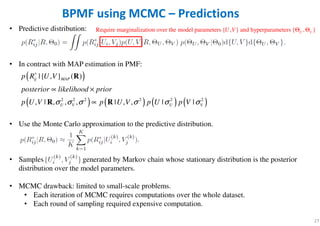

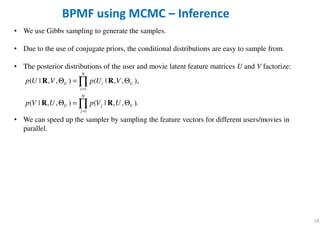

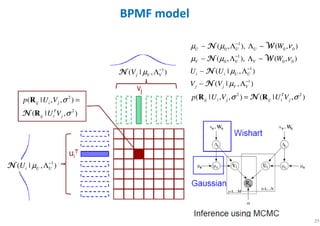

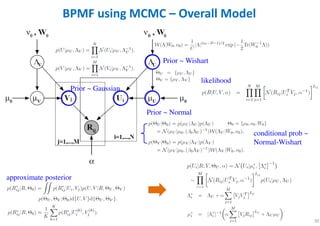

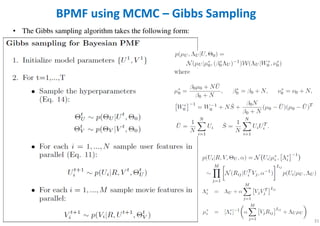

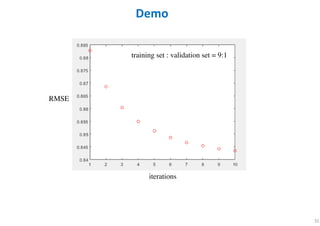

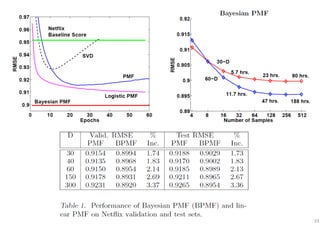

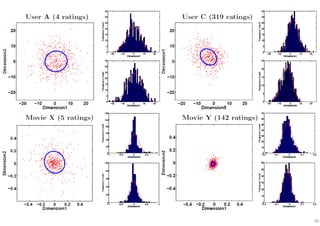

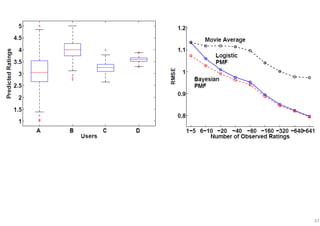

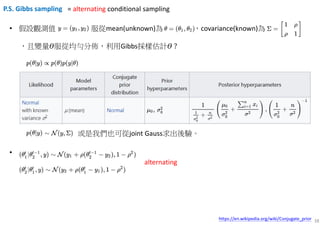

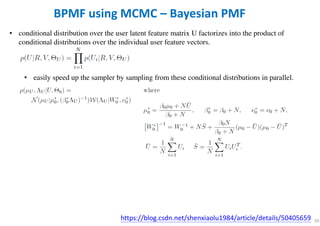

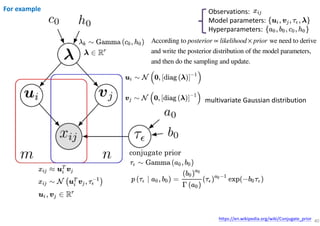

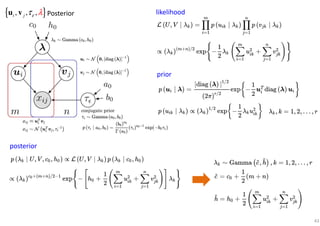

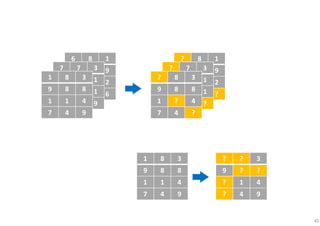

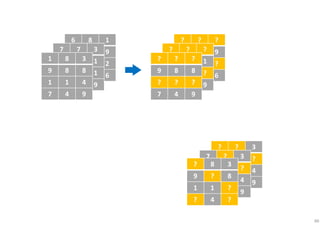

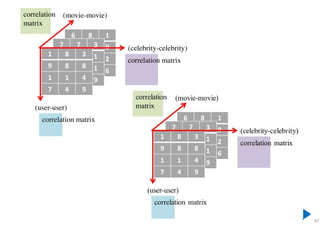

This document outlines probabilistic matrix factorization (PMF) and its application to recommendation systems, focusing on models such as Bayesian PMF (BPMF) and constrained PMF. It covers the mathematical foundations and learning procedures, compares several factorization methods, and emphasizes challenges such as computational complexity and parameter tuning. It also highlights how Bayesian approaches can improve predictive accuracy, and discusses their computational demands.
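
To make the basic PMF learning step concrete, here is a minimal sketch. It assumes the usual MAP formulation: a Gaussian likelihood on the observed ratings and zero-mean Gaussian priors on the user and item factors, which reduces to minimizing squared error plus L2 penalties. The function names (`pmf_fit`, `pmf_rmse`), the toy ratings, and the hyperparameter values are illustrative assumptions, not taken from the slides. It does not cover BPMF or constrained PMF.

```python
import numpy as np

def pmf_fit(ratings, n_users, n_items, rank=2, lam=0.05, lr=0.02,
            epochs=500, seed=0):
    """MAP estimate for PMF by full-batch gradient descent.

    ratings: list of (user, item, value) triples for observed entries only.
    lam plays the role of the prior-to-noise variance ratio (an assumption
    of this sketch; the same value is used for users and items).
    """
    rng = np.random.default_rng(seed)
    U = 0.1 * rng.standard_normal((n_users, rank))  # user factors
    V = 0.1 * rng.standard_normal((n_items, rank))  # item factors
    users = np.array([r[0] for r in ratings])
    items = np.array([r[1] for r in ratings])
    vals = np.array([r[2] for r in ratings], dtype=float)
    mean = vals.mean()           # global offset, removed before factorizing
    centered = vals - mean
    for _ in range(epochs):
        # Residuals on observed entries only.
        err = np.sum(U[users] * V[items], axis=1) - centered
        # Gradient of 0.5 * sum(err^2) + 0.5 * lam * (|U|^2 + |V|^2).
        grad_U = lam * U
        grad_V = lam * V
        np.add.at(grad_U, users, err[:, None] * V[items])
        np.add.at(grad_V, items, err[:, None] * U[users])
        U -= lr * grad_U
        V -= lr * grad_V
    return U, V, mean

def pmf_rmse(ratings, U, V, mean):
    """Root-mean-square error of the fitted model on the given triples."""
    sq = [(mean + U[u] @ V[i] - r) ** 2 for u, i, r in ratings]
    return float(np.sqrt(np.mean(sq)))

# Toy data: users 0-1 prefer items 0-1, users 2-3 prefer items 2-3.
toy = [(0, 0, 5), (0, 1, 4), (0, 2, 1), (1, 0, 4), (1, 1, 5), (1, 3, 1),
       (2, 2, 5), (2, 3, 4), (2, 0, 1), (3, 2, 4), (3, 3, 5), (3, 1, 1)]
U, V, mean = pmf_fit(toy, n_users=4, n_items=4)
print("training RMSE:", pmf_rmse(toy, U, V, mean))
```

The regularization weight `lam` and the rank are the kind of parameters that need tuning in this point-estimate setting. Fully Bayesian treatments such as BPMF integrate over them instead, typically at a higher computational cost.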