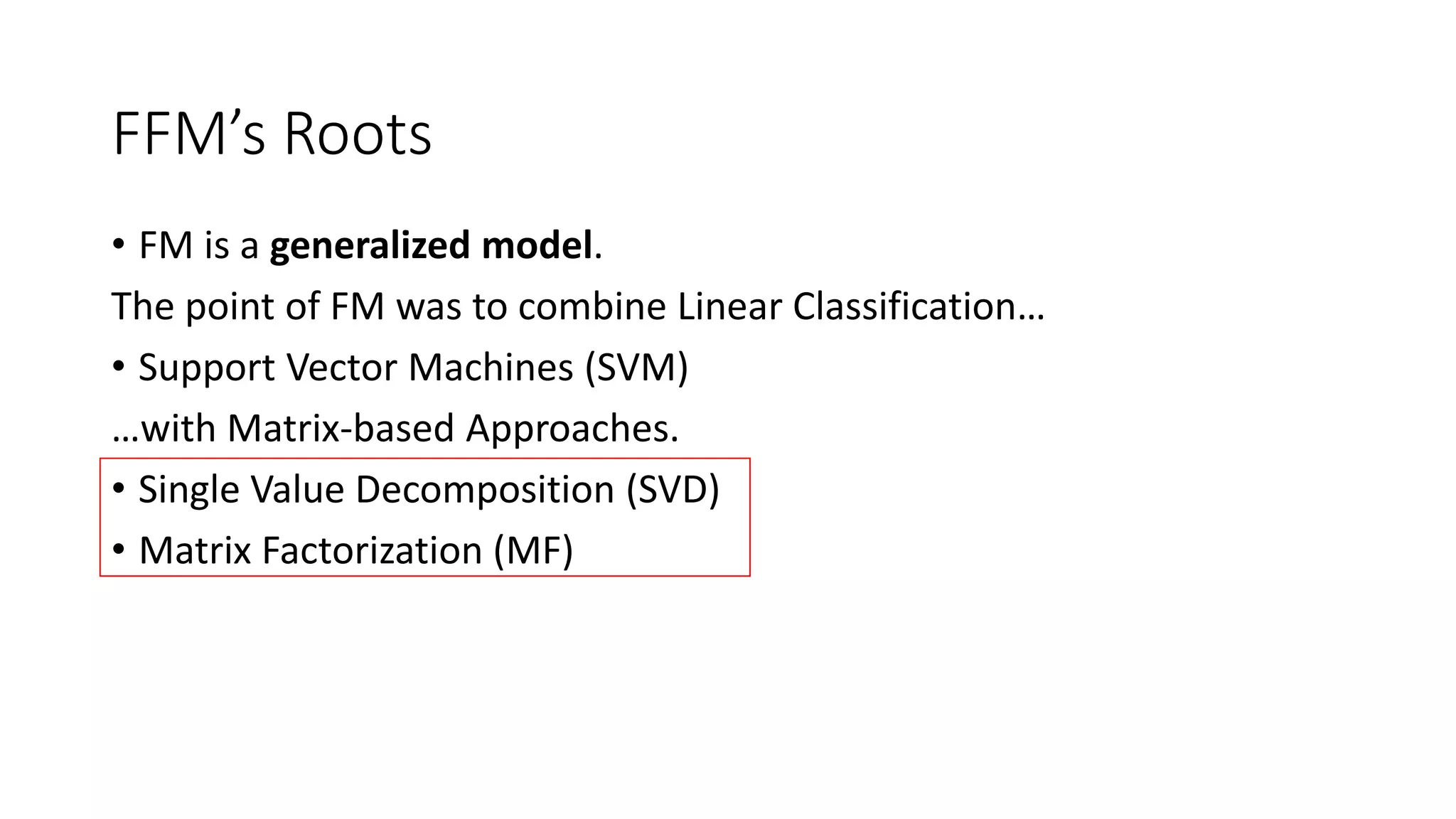

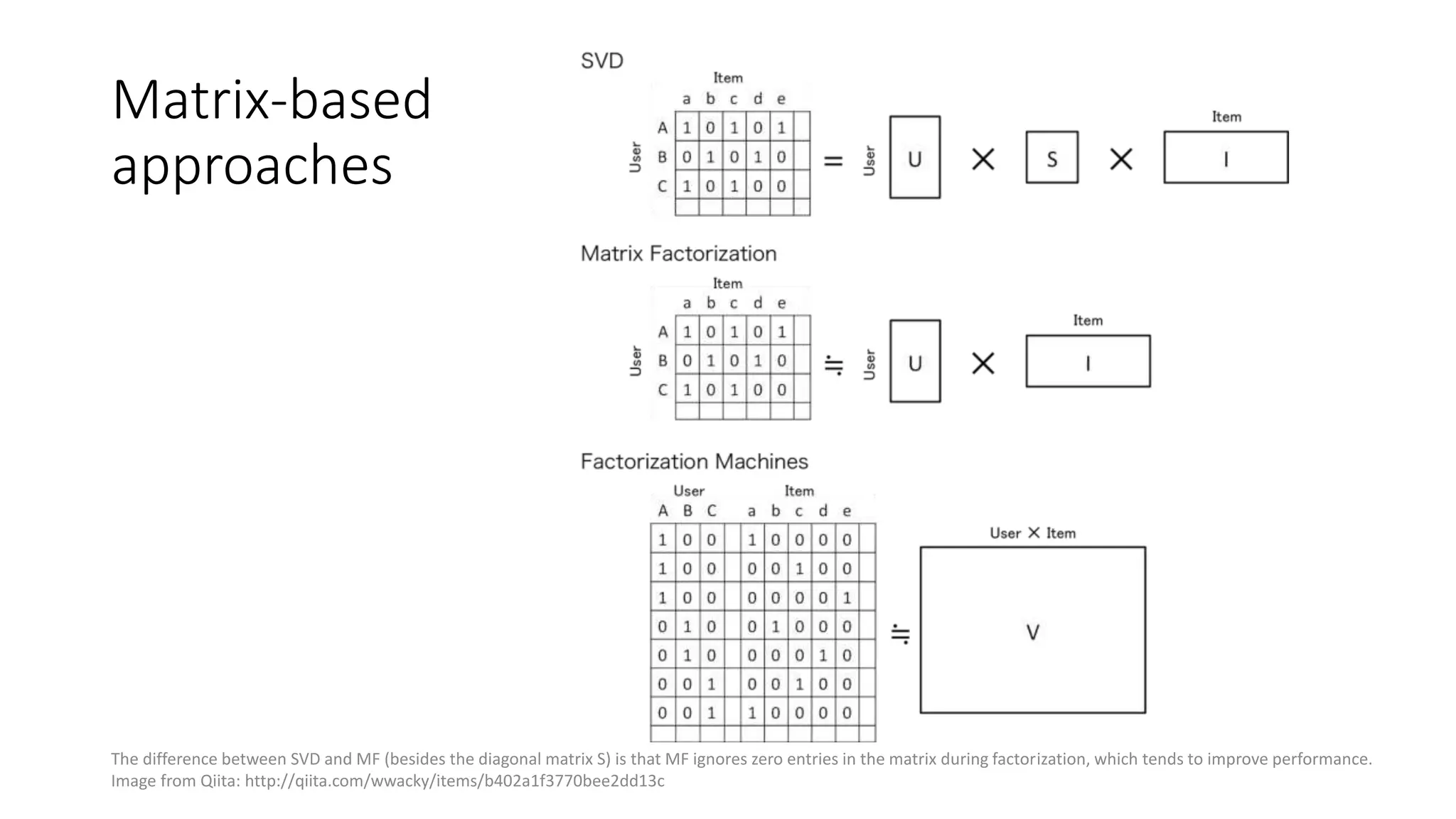

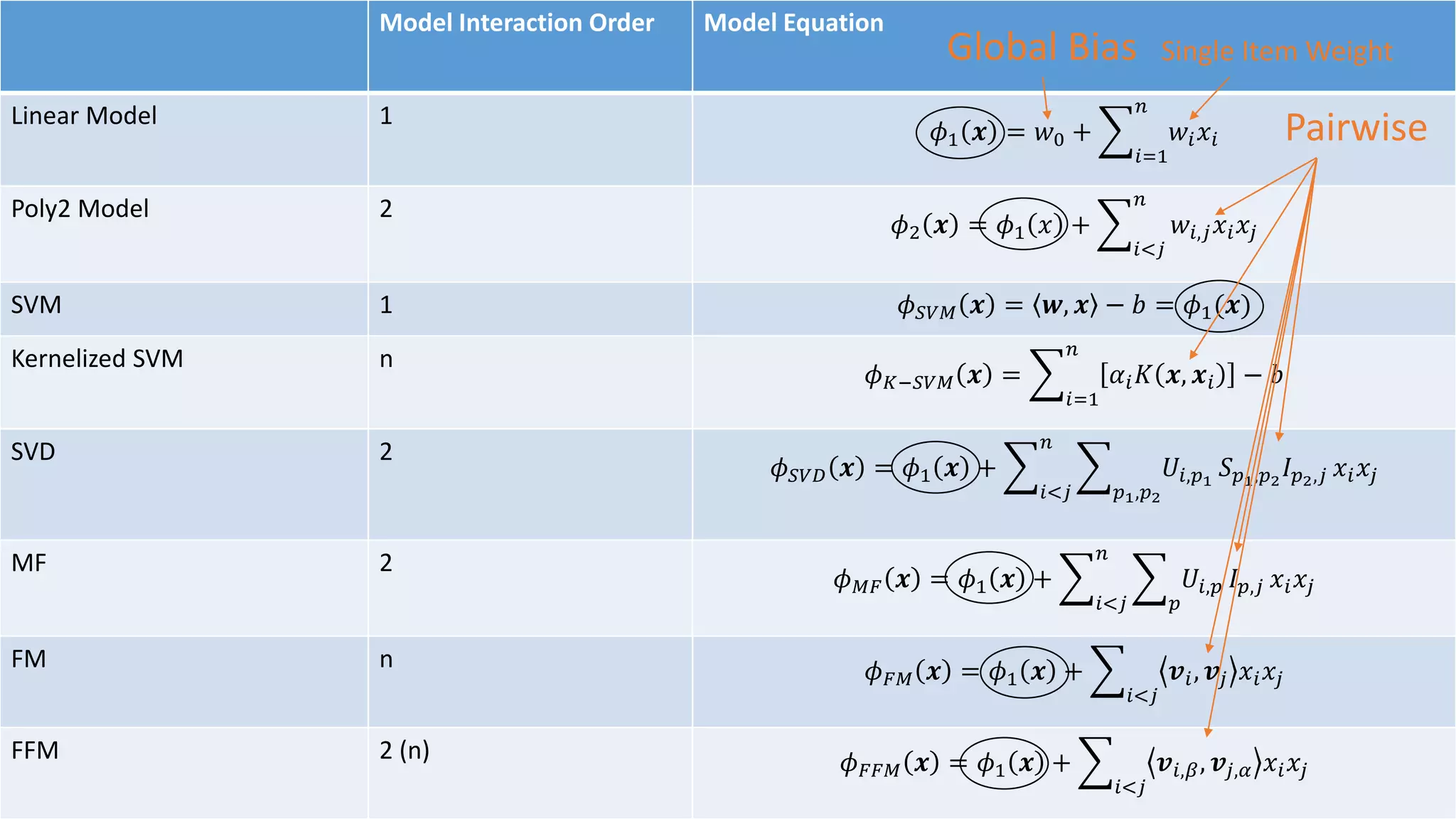

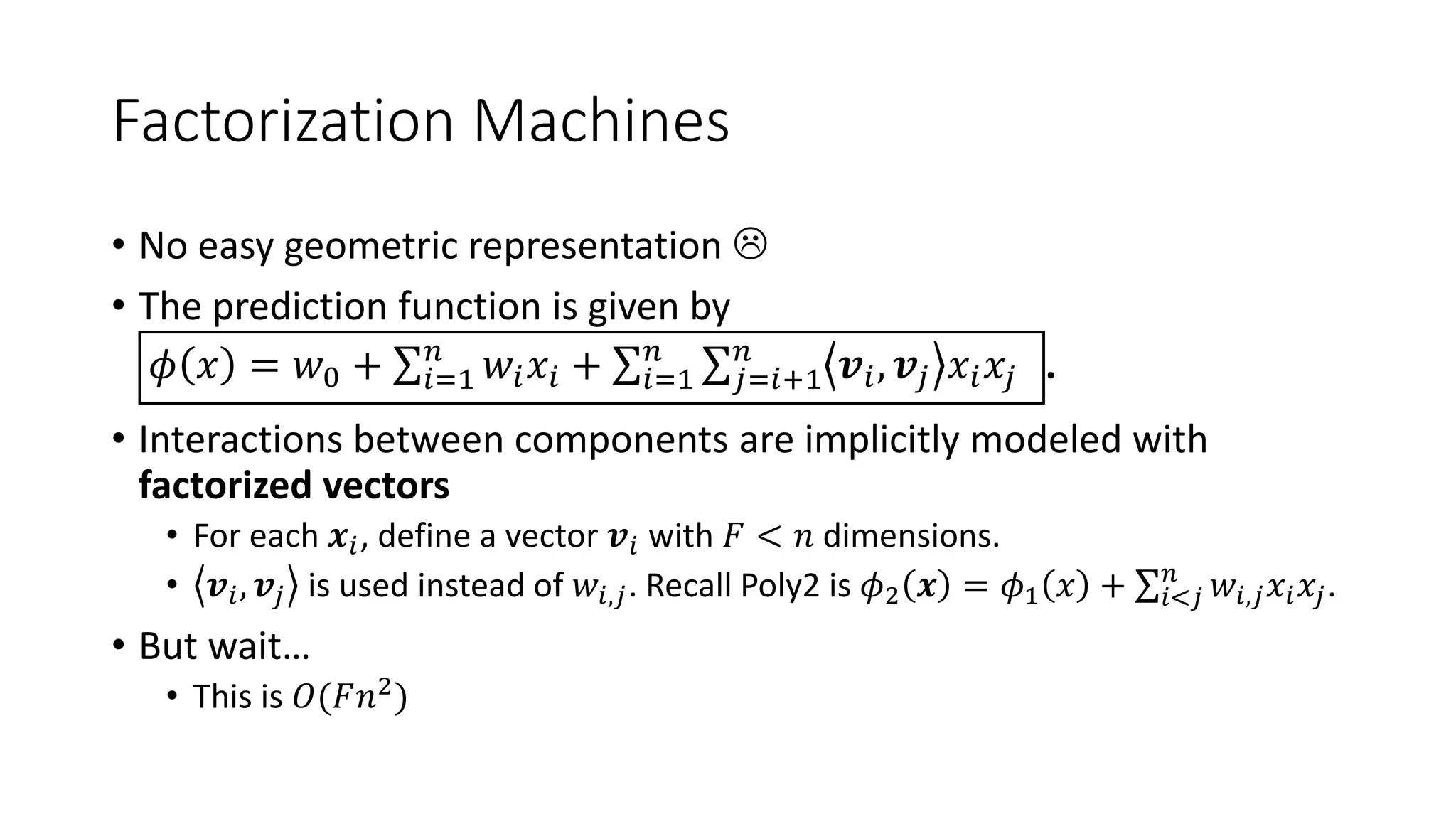

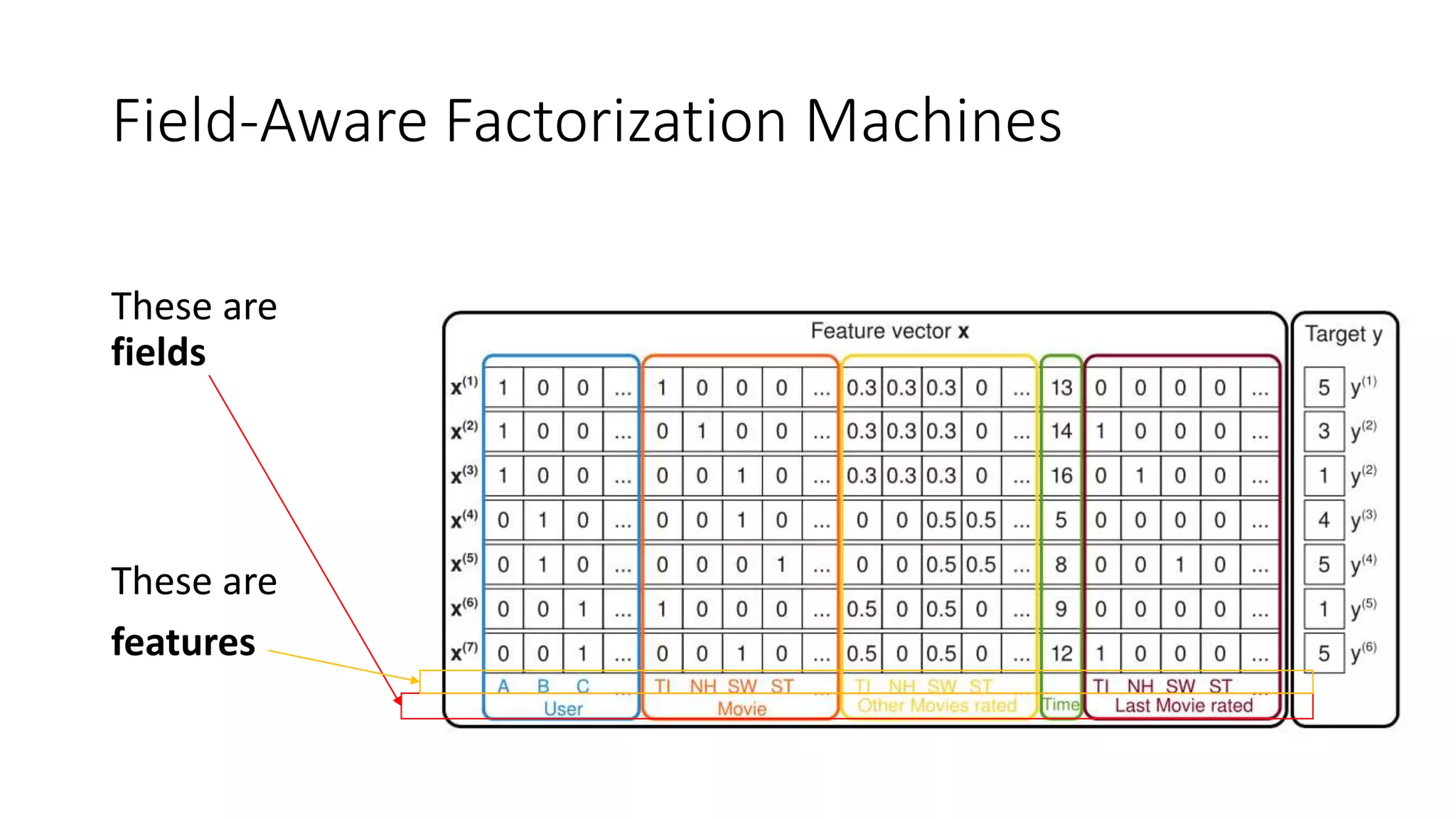

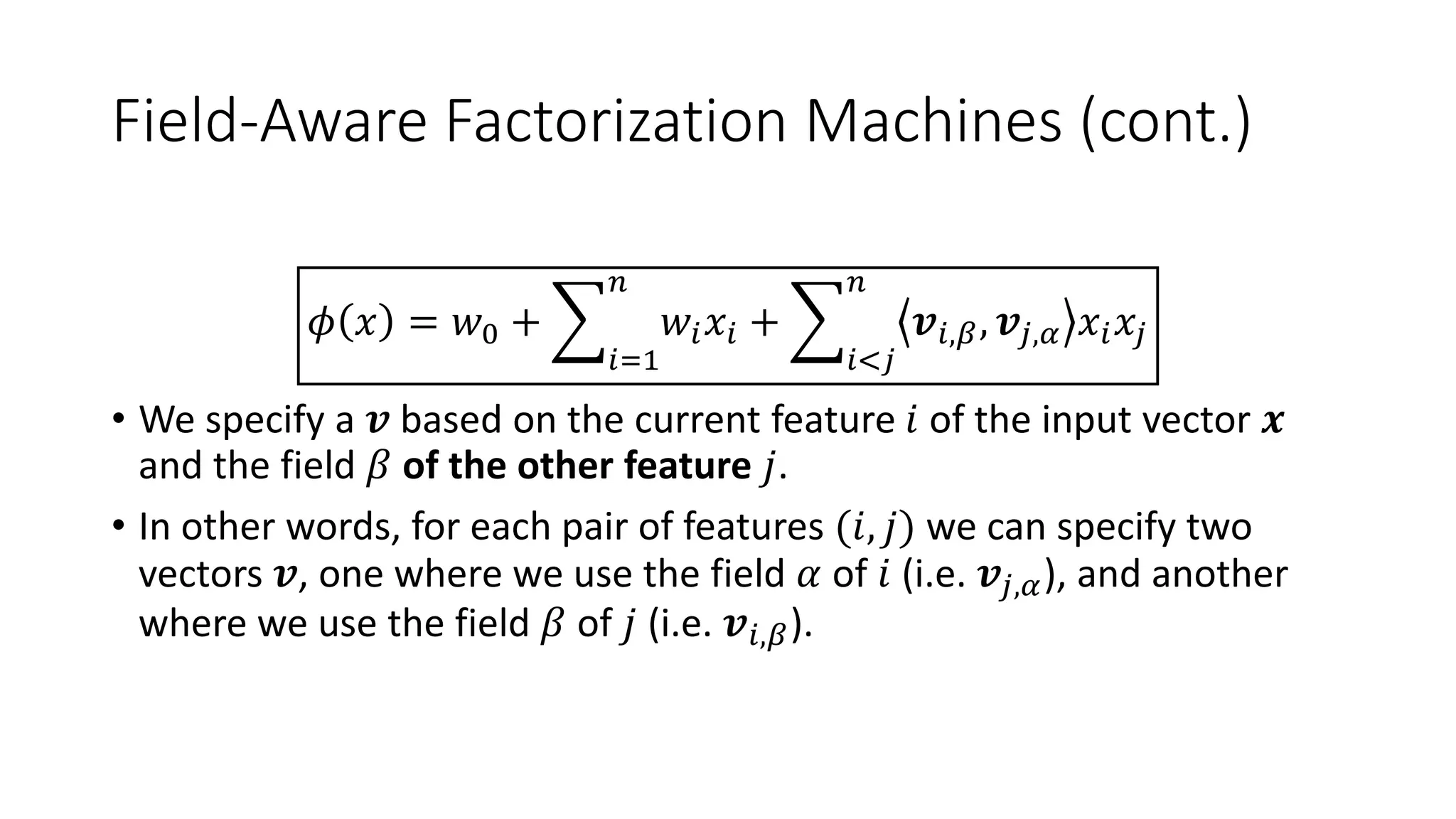

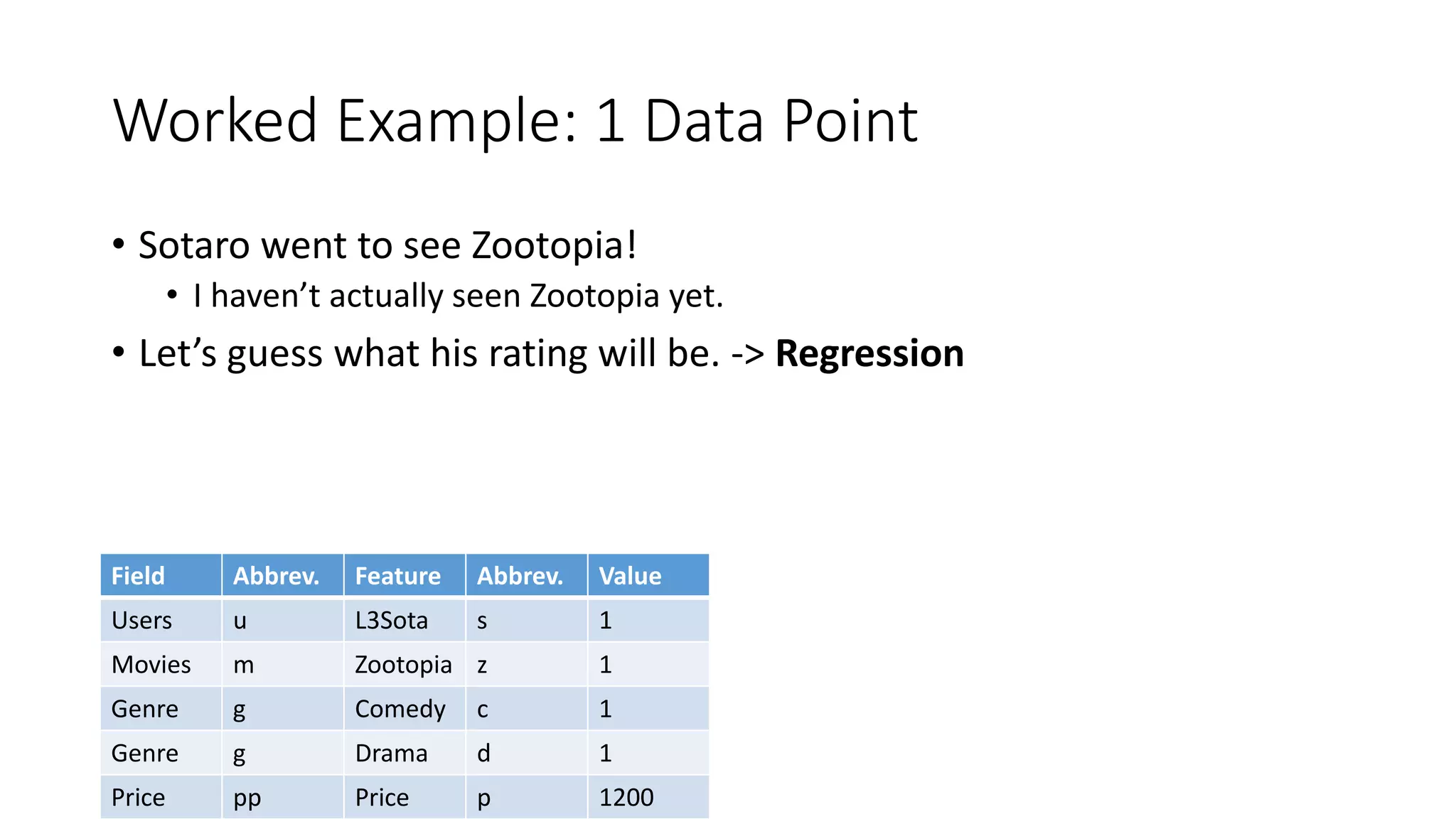

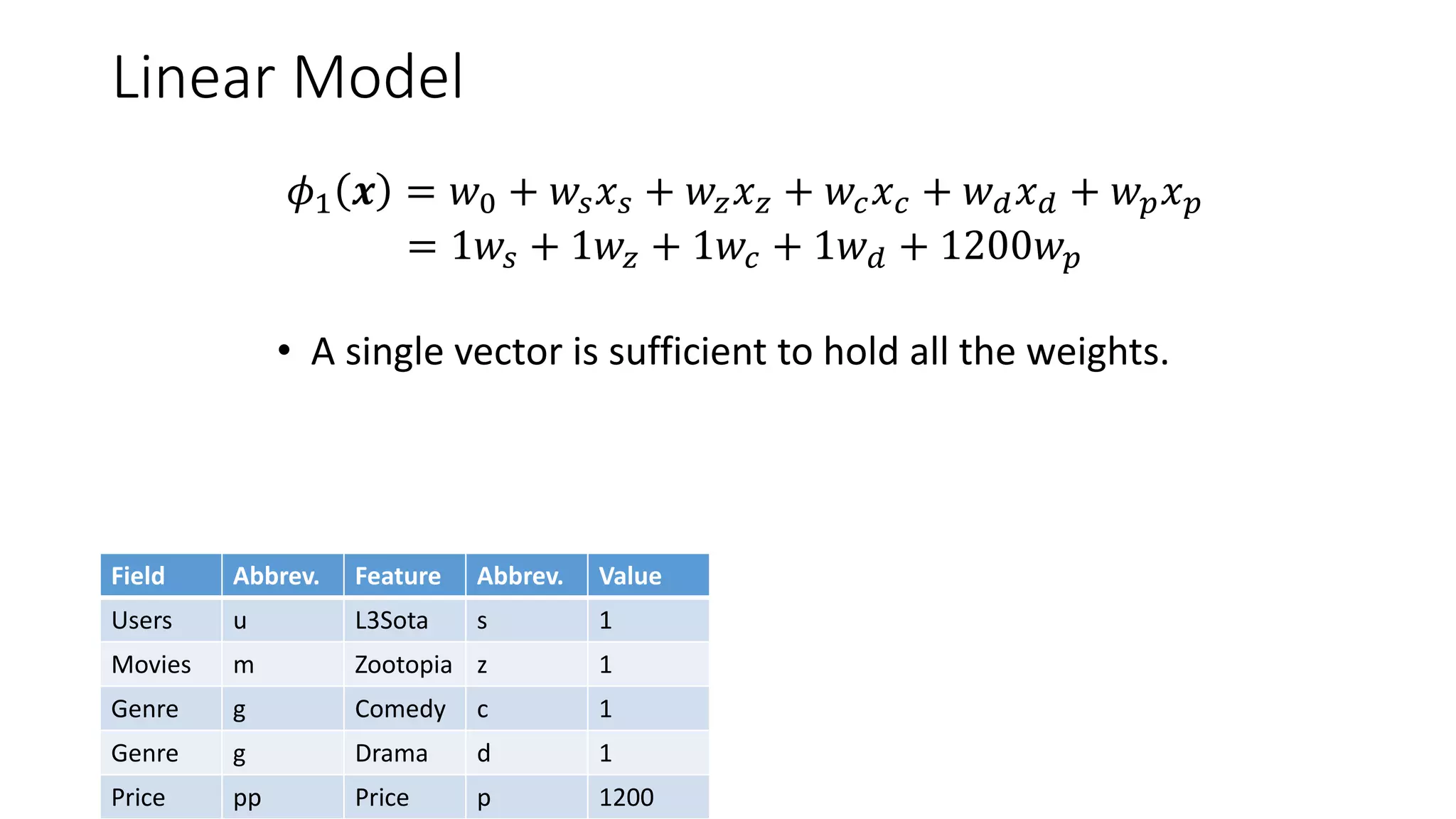

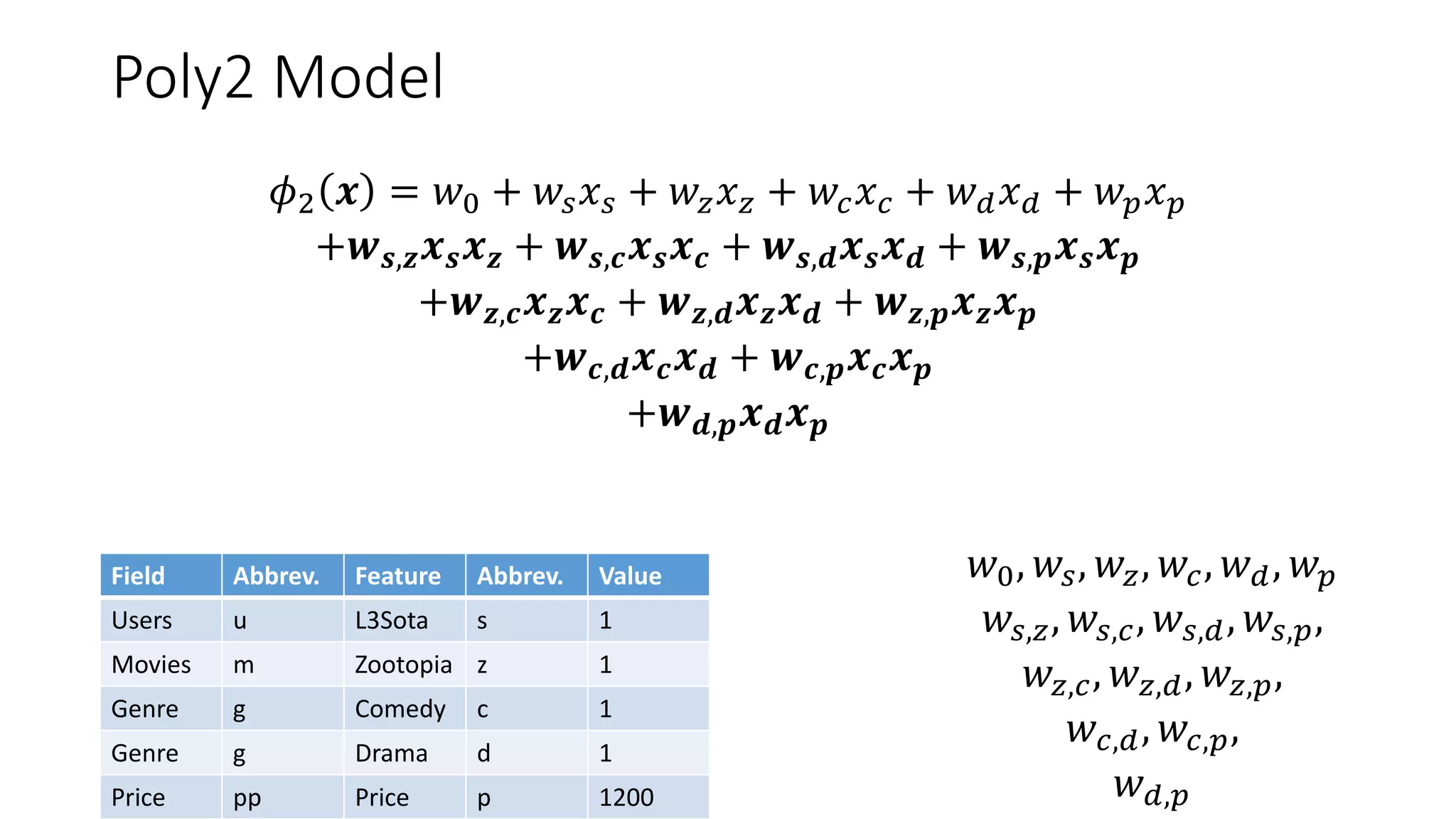

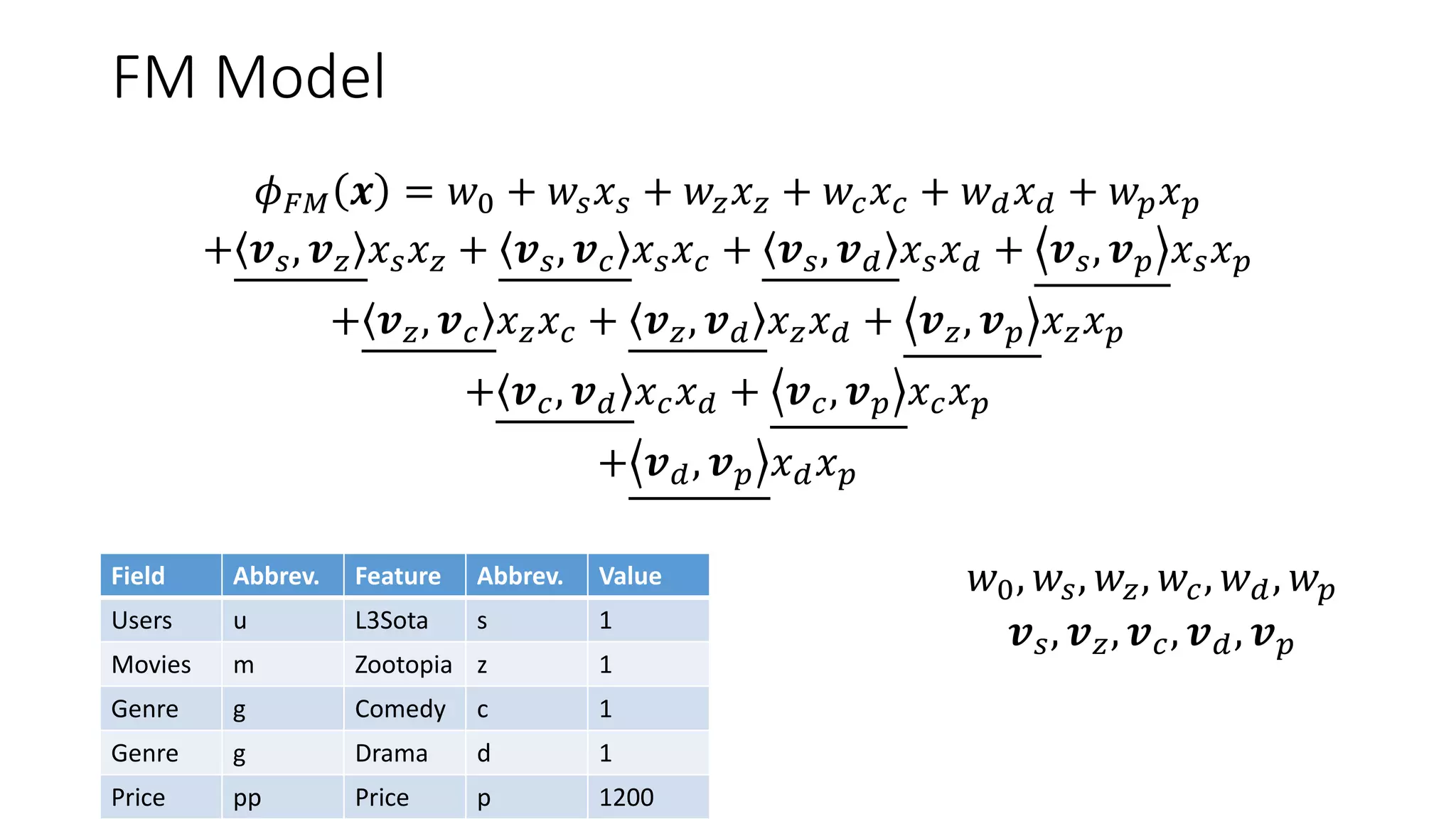

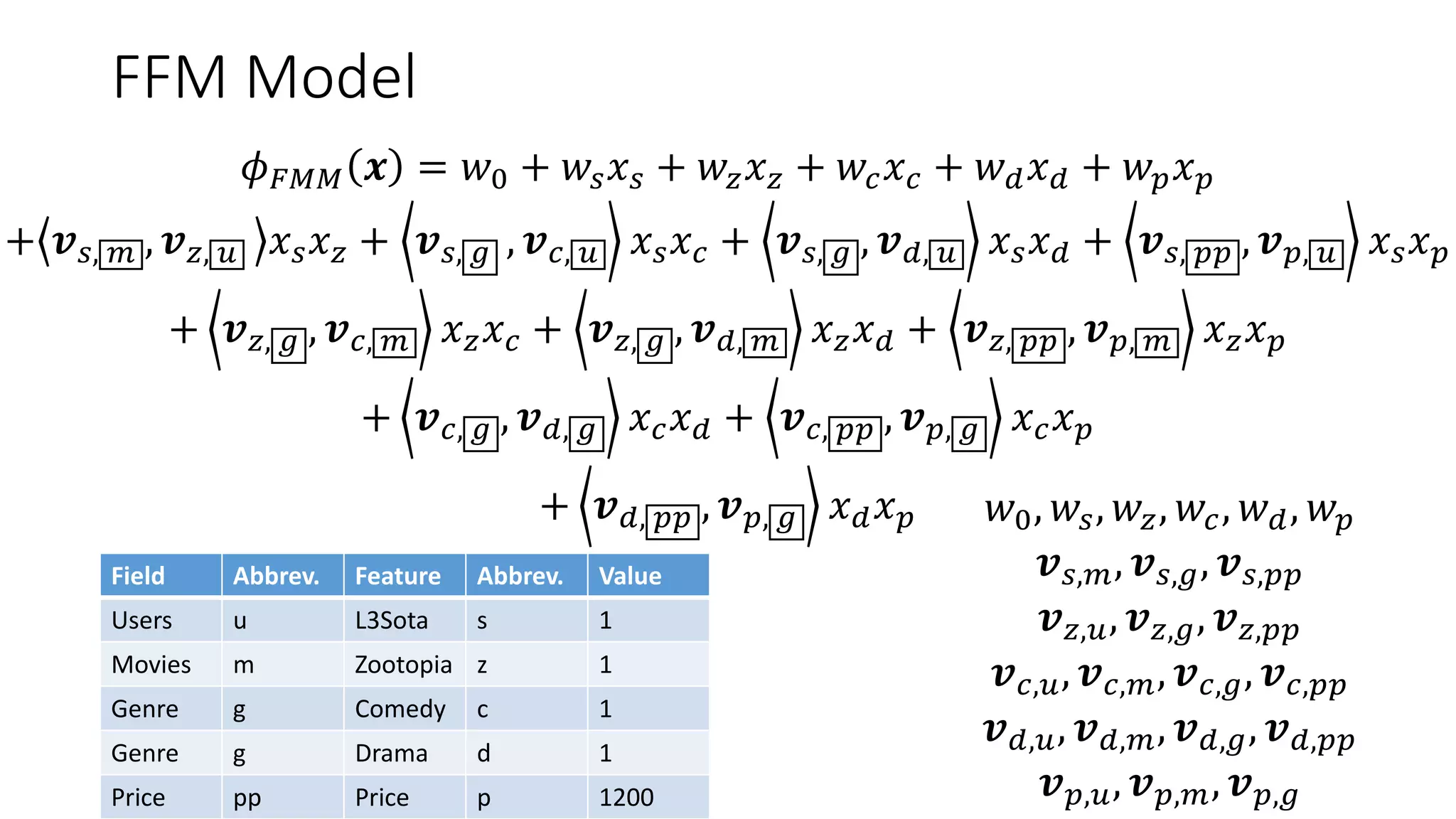

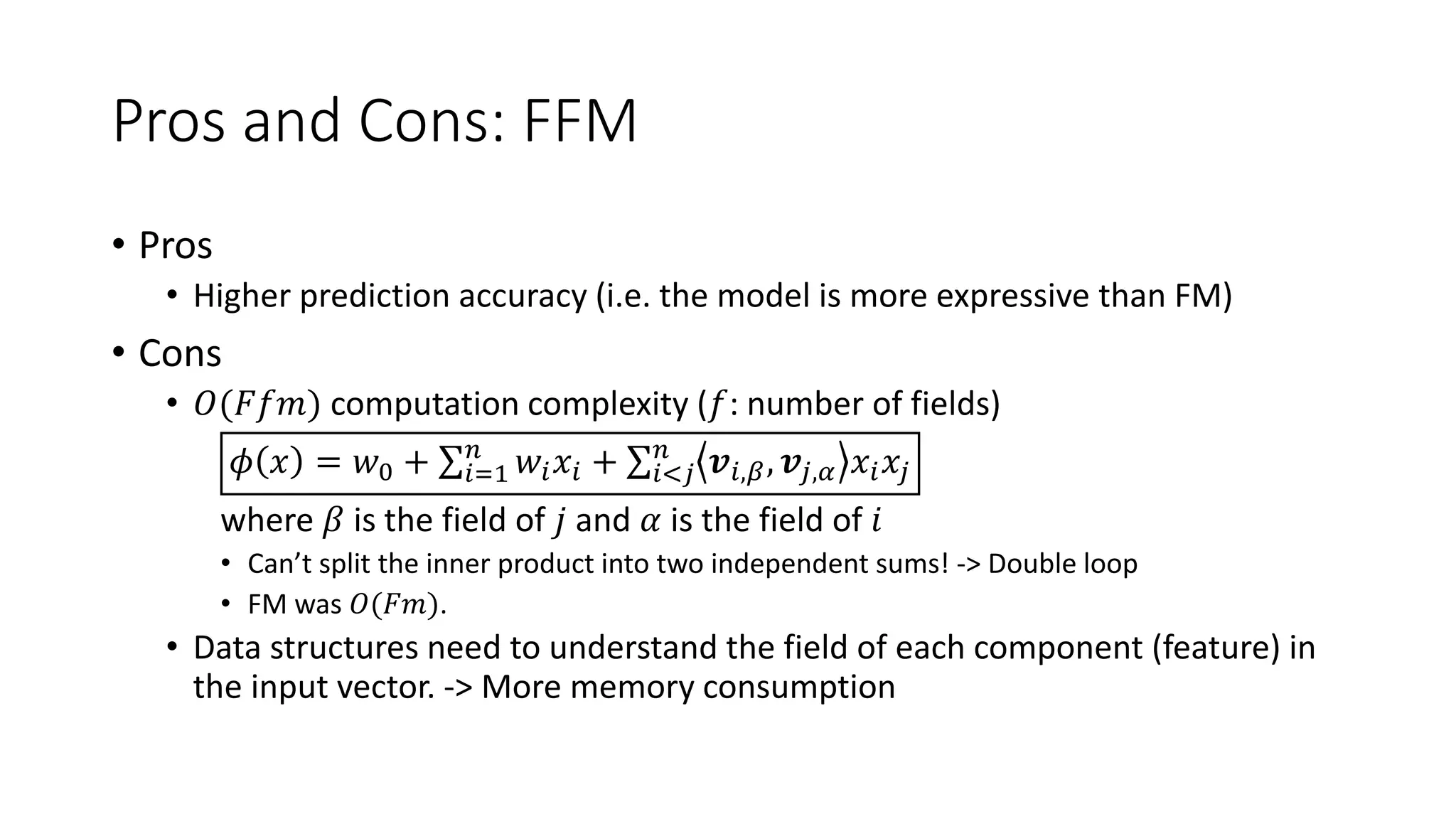

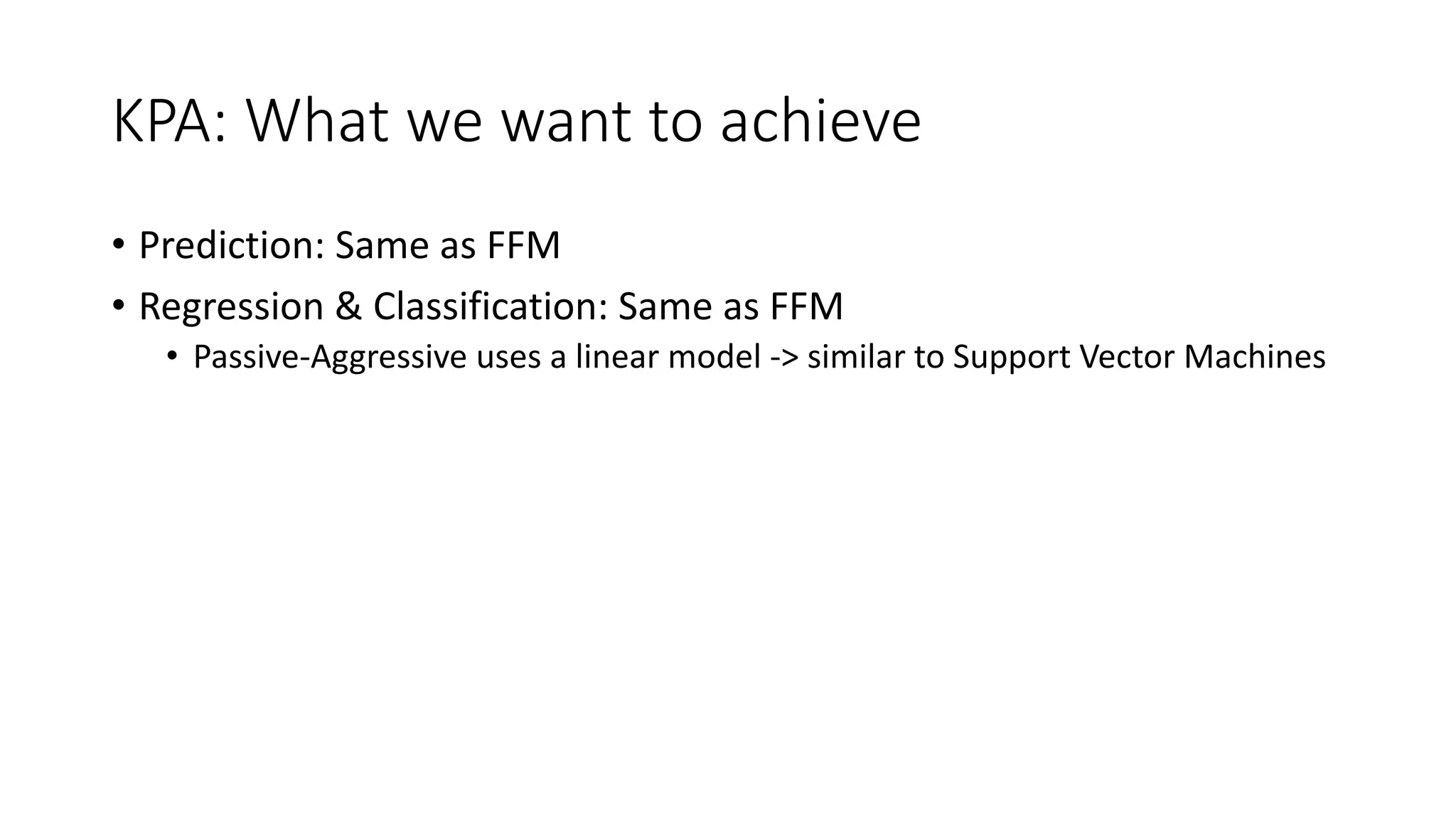

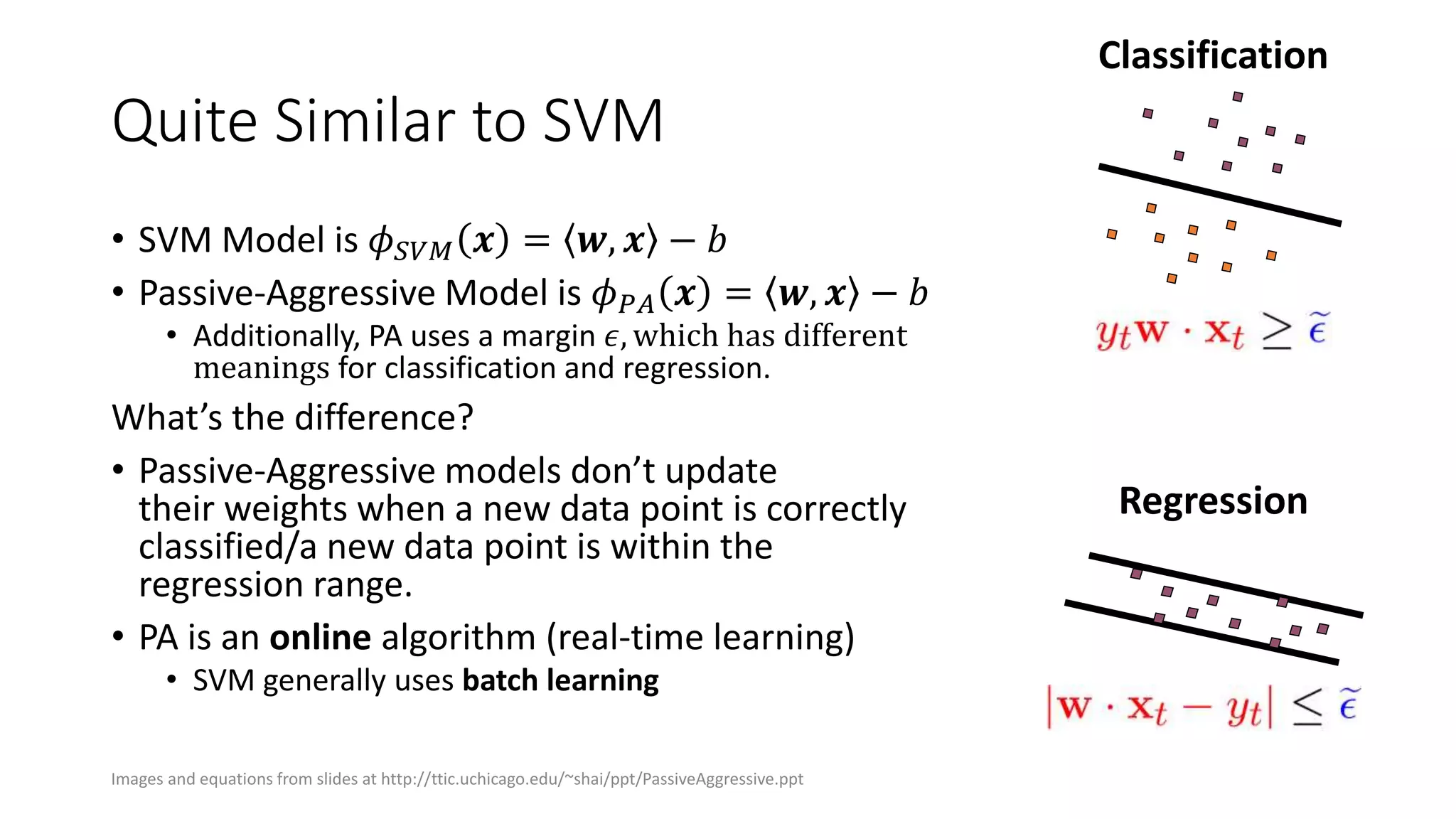

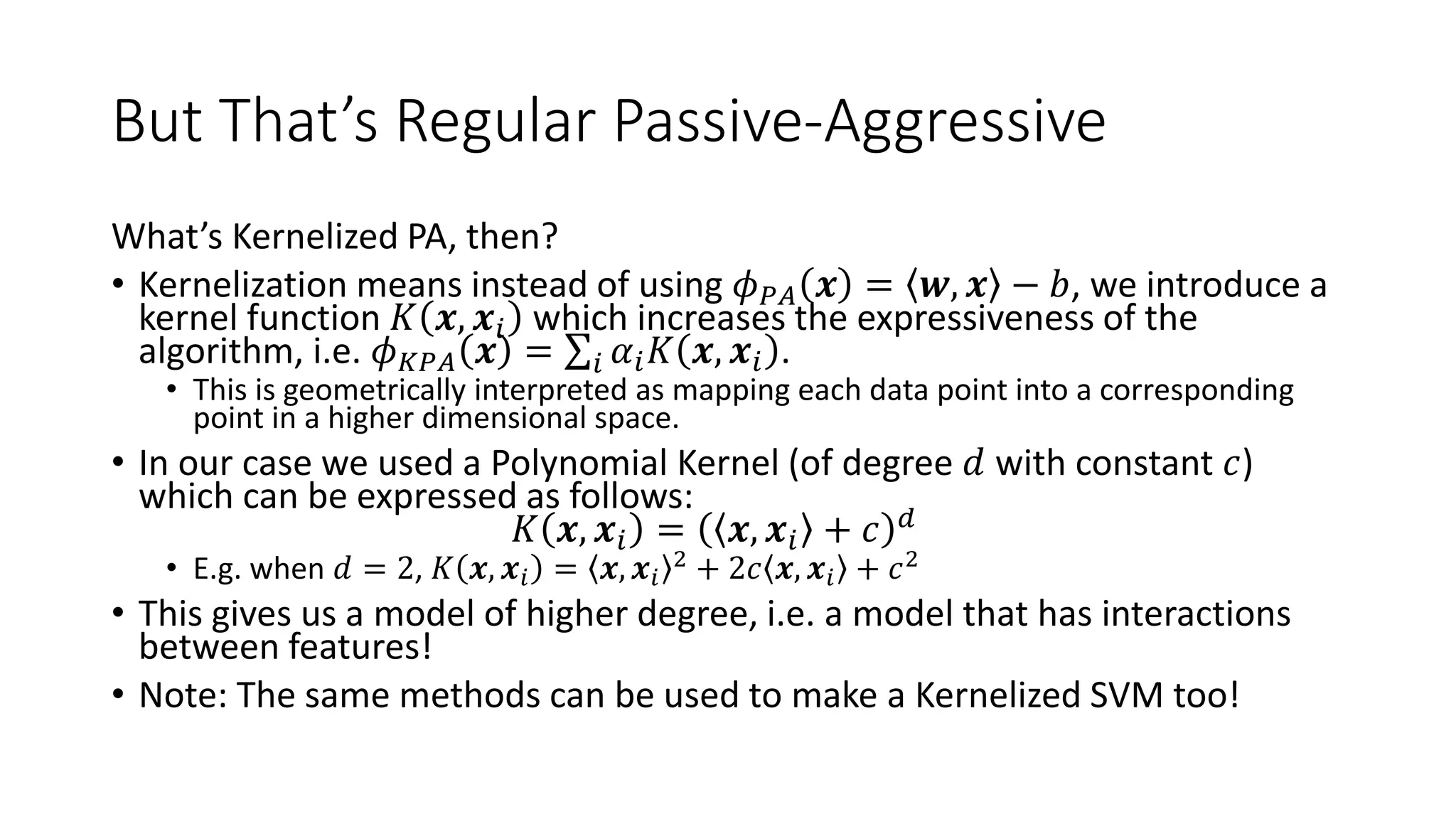

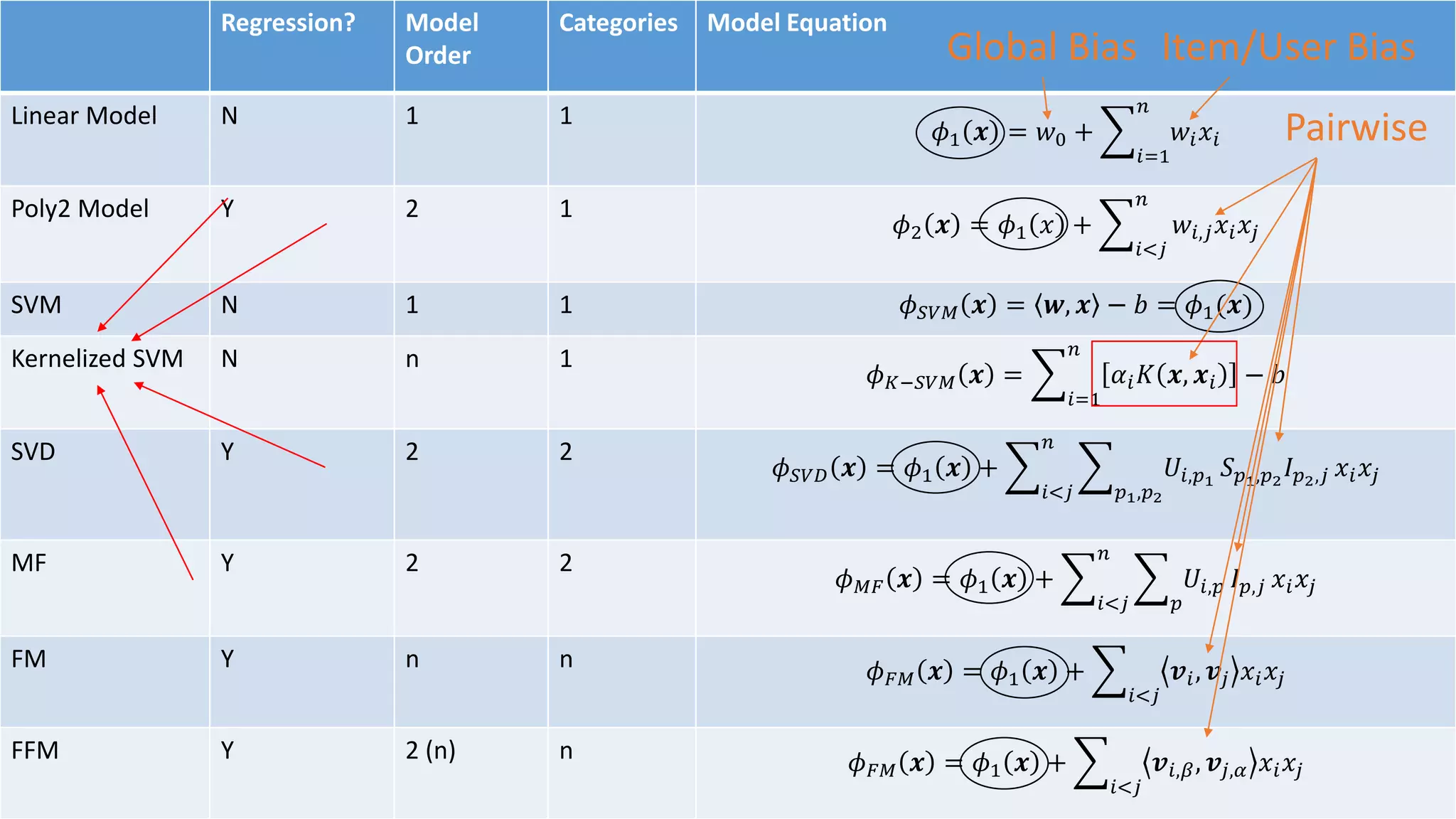

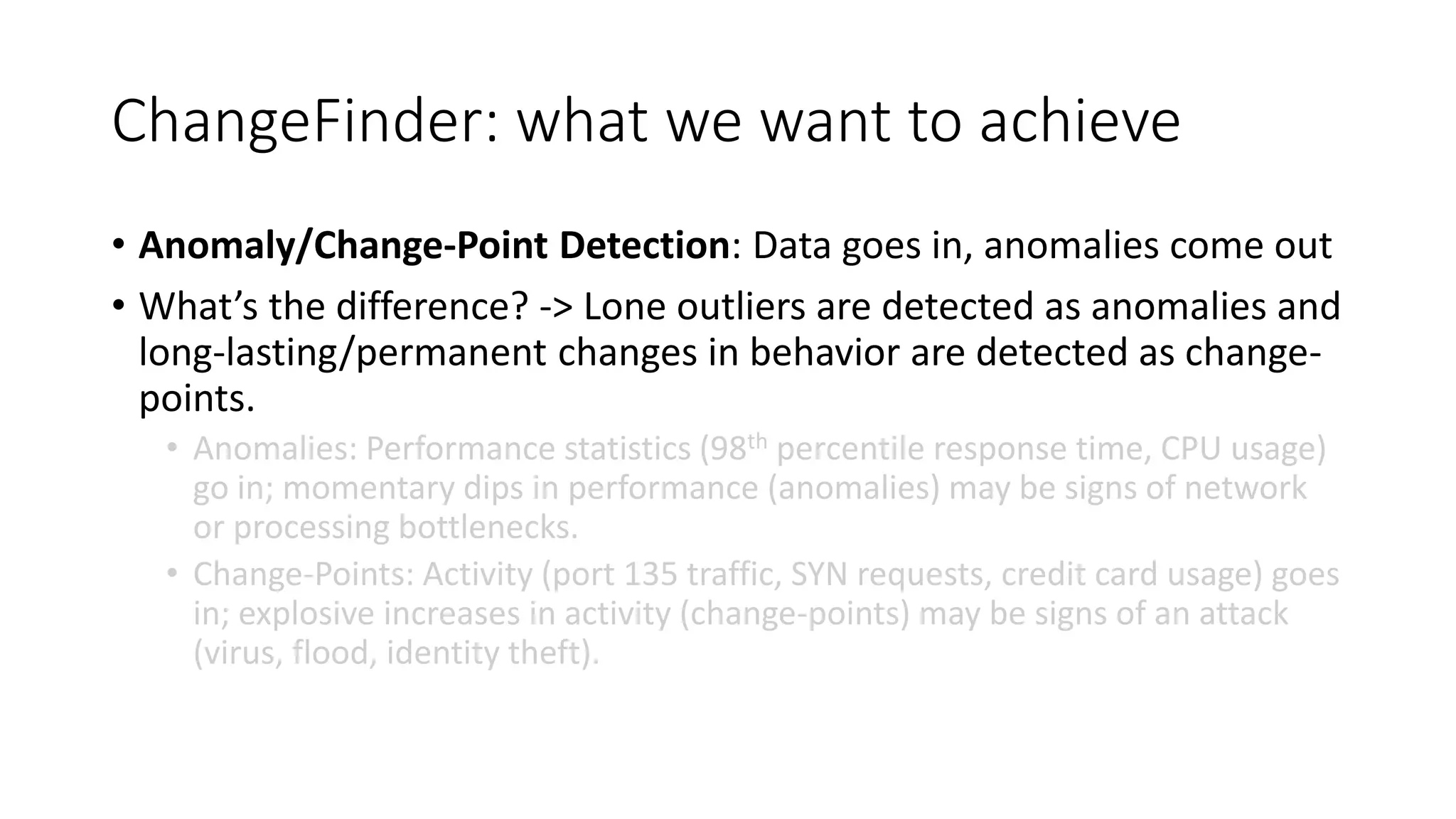

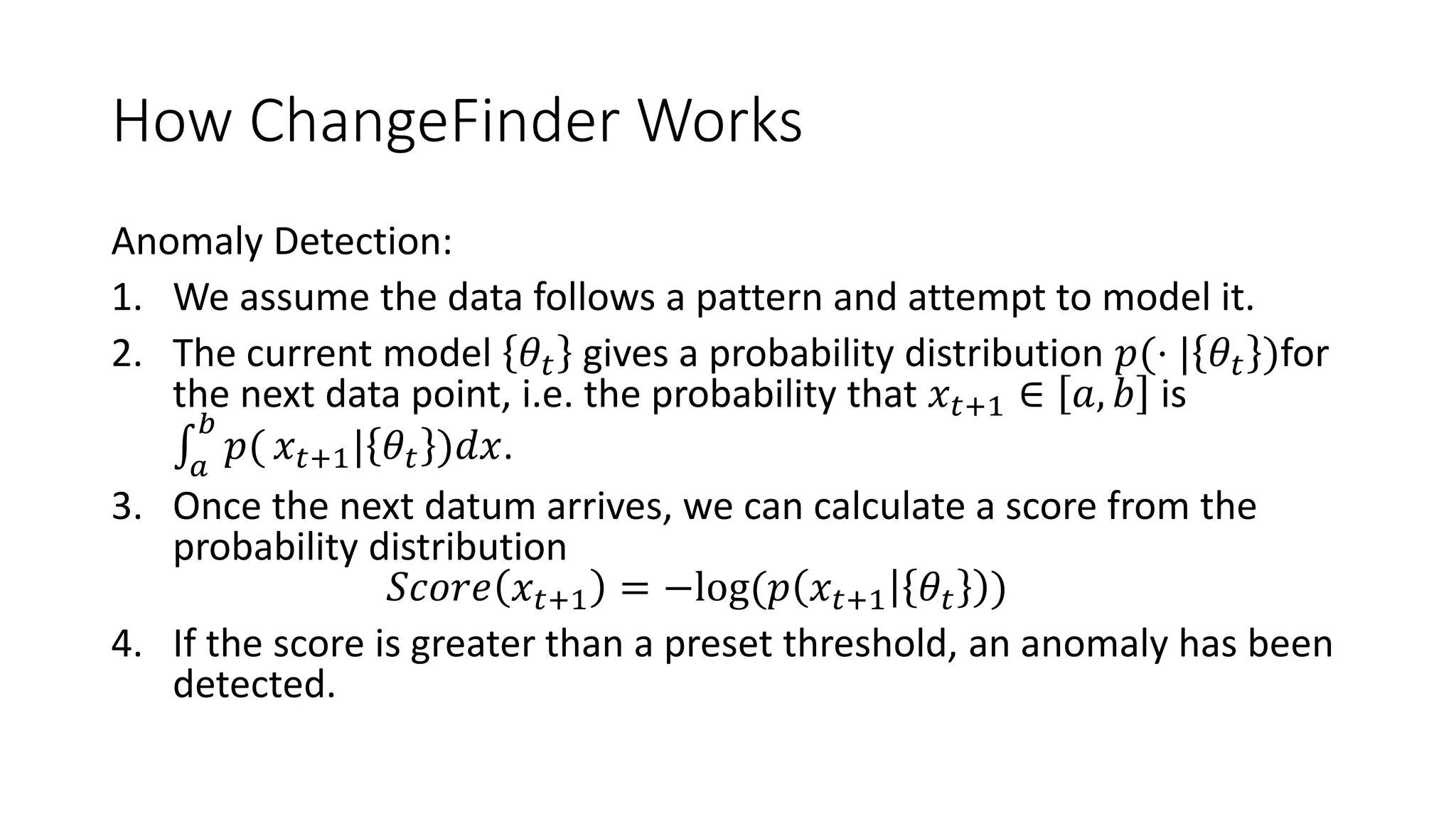

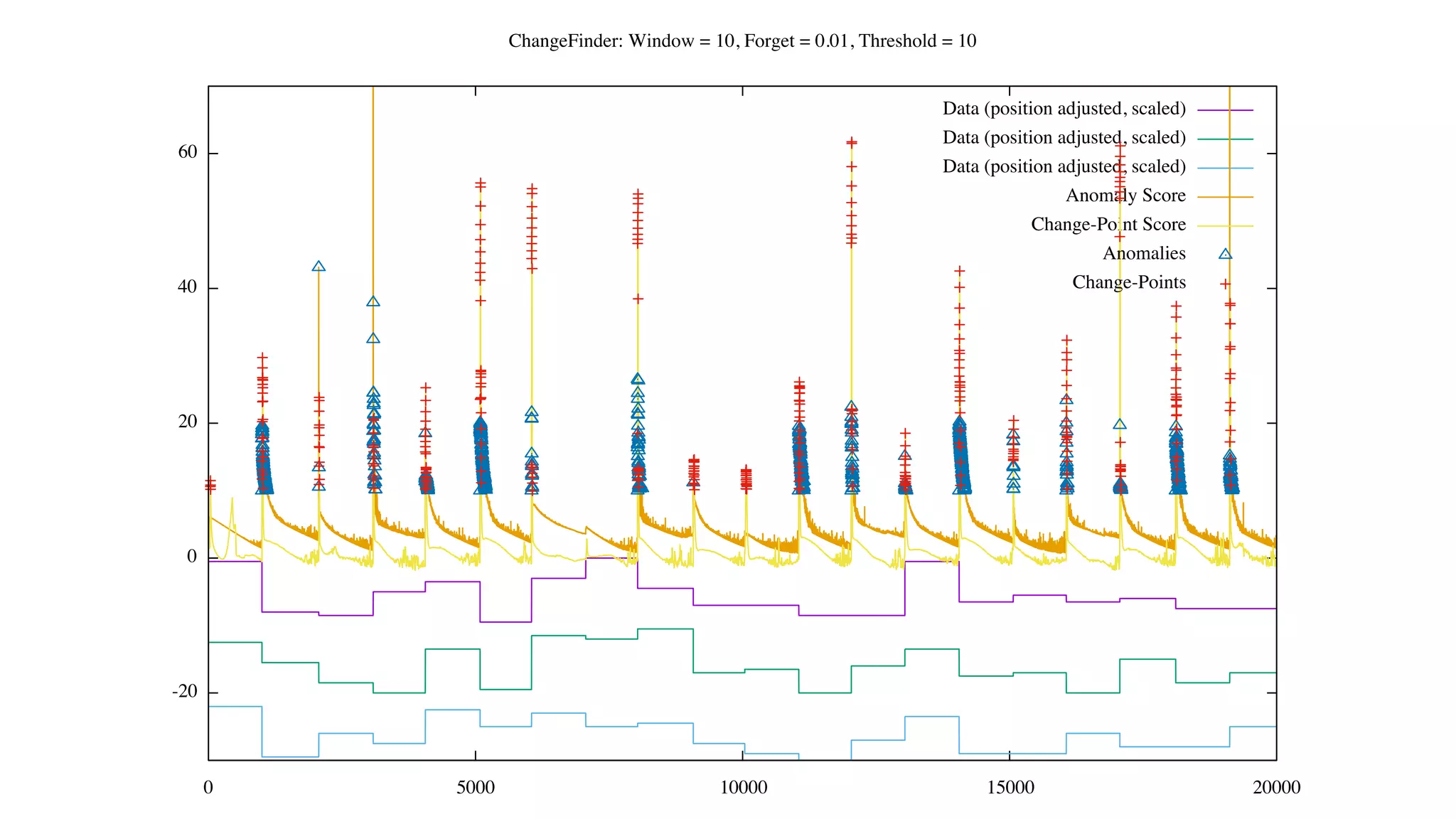

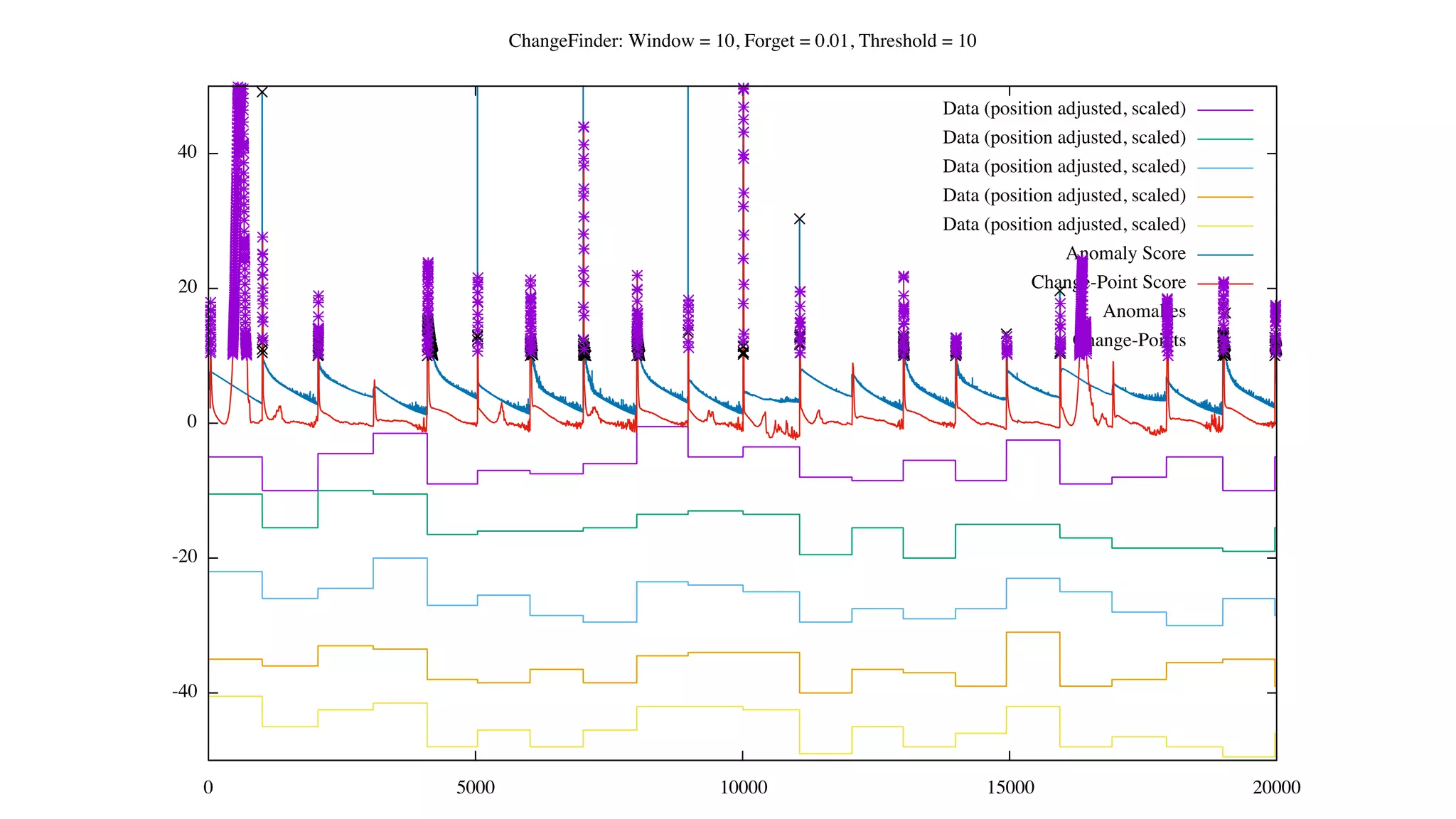

The document outlines an internship presentation by Sotaro Sugimoto at Treasure Data on machine learning techniques, including field-aware factorization machines (FFM), kernelized passive-aggressive models, and the ChangeFinder change-point detection algorithm. It discusses model-based predictors for tasks such as click-through rate prediction and shopping recommendations, and explains how these models work and what advantages they offer. The presentation also reports the implementation status of these algorithms in Hivemall, a machine learning library for Apache Hive.

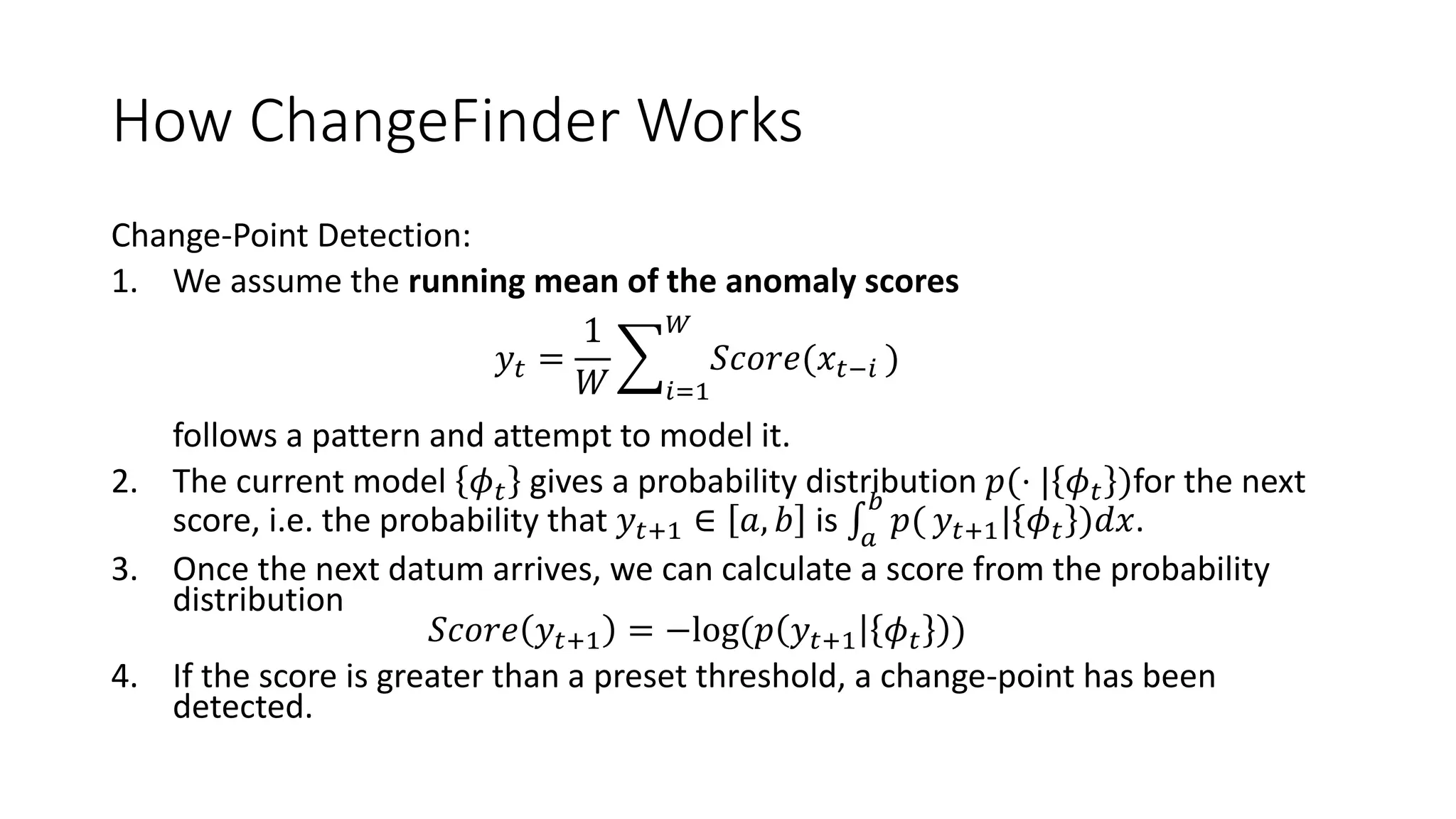

![Status of FFM within Hivemall](https://image.slidesharecdn.com/spring2016intern-160616080150/75/Spring-2016-Intern-at-Treasure-Data-28-2048.jpg)

Status of FFM within Hivemall:

- Pull request merged (#284): https://github.com/myui/hivemall/pull/284
  - Will probably be included in the next release (?)
- `train_ffm(array<string> x, double y[, const string options])`
  - Trains the internal FFM model on a (sparse) feature vector `x` and target `y`.
  - Training uses stochastic gradient descent (SGD).
- `ffm_predict(m.model_id, m.model, data.features)`
  - Calculates a prediction from the given FFM model and data vector.
  - The internal FFM model is referenced as `ffm_model m`.
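The slide lists only the UDF signatures. As a rough illustration of what `train_ffm` and `ffm_predict` compute, the following Python sketch implements a toy FFM trained by per-example SGD. It is not Hivemall's code: the `field:index:value` feature-string format, the logistic loss with labels in {-1, +1}, the global bias and linear terms, the hyperparameters `k`, `eta`, and `lam`, and the names `FFMSketch` and `parse_feature` are all assumptions made for this example. The idea it shows is that each feature j keeps a separate latent vector v[j, f] for every field f, and a pair of features (j1, j2) contributes ⟨v[j1, field(j2)], v[j2, field(j1)]⟩ · x_j1 · x_j2 to the score.

```python
# Toy sketch under stated assumptions; not Hivemall's implementation.
import math
import random
from collections import defaultdict


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def parse_feature(s):
    """Hypothetical helper: split a 'field:index:value' string into (field, index, value)."""
    field, index, value = s.split(":")
    return field, index, float(value)


class FFMSketch:
    """Hypothetical toy FFM trained by per-example SGD on logistic loss."""

    def __init__(self, k=4, eta=0.1, lam=1e-4, seed=0):
        self.k, self.eta, self.lam = k, eta, lam   # latent size, learning rate, L2 strength
        self.rng = random.Random(seed)
        self.w0 = 0.0                              # global bias
        self.w = defaultdict(float)                # linear weight per feature index
        self.v = {}                                # latent vector per (feature index, field)

    def _vec(self, j, f):
        # v[j, f]: the vector feature j uses when paired with a feature of field f,
        # created lazily with small random values.
        if (j, f) not in self.v:
            bound = 1.0 / math.sqrt(self.k)
            self.v[(j, f)] = [self.rng.uniform(0.0, bound) for _ in range(self.k)]
        return self.v[(j, f)]

    def _pairs(self, feats):
        # Every unordered feature pair, with the two field-aware vectors it uses.
        for a in range(len(feats)):
            fa, ja, xa = feats[a]
            for b in range(a + 1, len(feats)):
                fb, jb, xb = feats[b]
                yield self._vec(ja, fb), self._vec(jb, fa), xa * xb

    def predict_raw(self, x):
        feats = [parse_feature(s) for s in x]
        score = self.w0 + sum(self.w[j] * xv for _, j, xv in feats)
        for va, vb, xx in self._pairs(feats):
            score += sum(p * q for p, q in zip(va, vb)) * xx
        return score

    def predict(self, x):
        """Estimated probability that the label is +1 (e.g. a click)."""
        return sigmoid(self.predict_raw(x))

    def train(self, x, y):
        """One SGD step on a single example with label y in {-1, +1}."""
        feats = [parse_feature(s) for s in x]
        g = -y * sigmoid(-y * self.predict_raw(x))   # d/dz of log(1 + exp(-y * z))
        self.w0 -= self.eta * g
        for _, j, xv in feats:
            self.w[j] -= self.eta * (g * xv + self.lam * self.w[j])
        for va, vb, xx in self._pairs(feats):
            va_old, vb_old = list(va), list(vb)      # gradients use pre-step values
            for i in range(self.k):
                va[i] -= self.eta * (g * vb_old[i] * xx + self.lam * va_old[i])
                vb[i] -= self.eta * (g * va_old[i] * xx + self.lam * vb_old[i])


if __name__ == "__main__":
    model = FFMSketch()
    clicked = ["user:u1:1", "ad:a1:1", "site:s1:1"]
    ignored = ["user:u2:1", "ad:a2:1", "site:s1:1"]
    for _ in range(300):
        model.train(clicked, +1)
        model.train(ignored, -1)
    print(model.predict(clicked), model.predict(ignored))
```

Keeping one latent vector per (feature, field) pair is what distinguishes an FFM from a plain factorization machine, which uses a single vector per feature; the cost is k times the number of fields in latent parameters for each feature.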

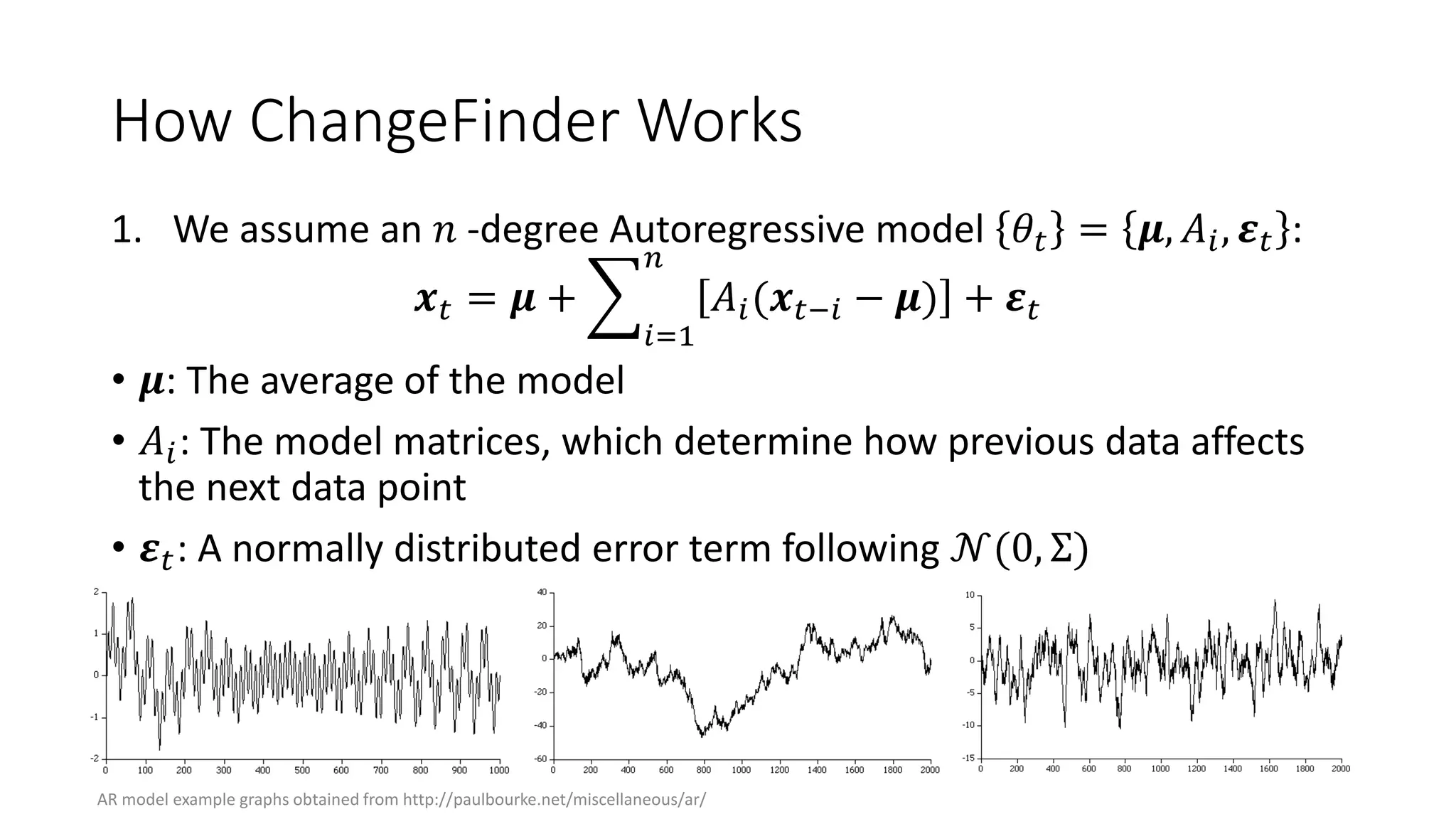

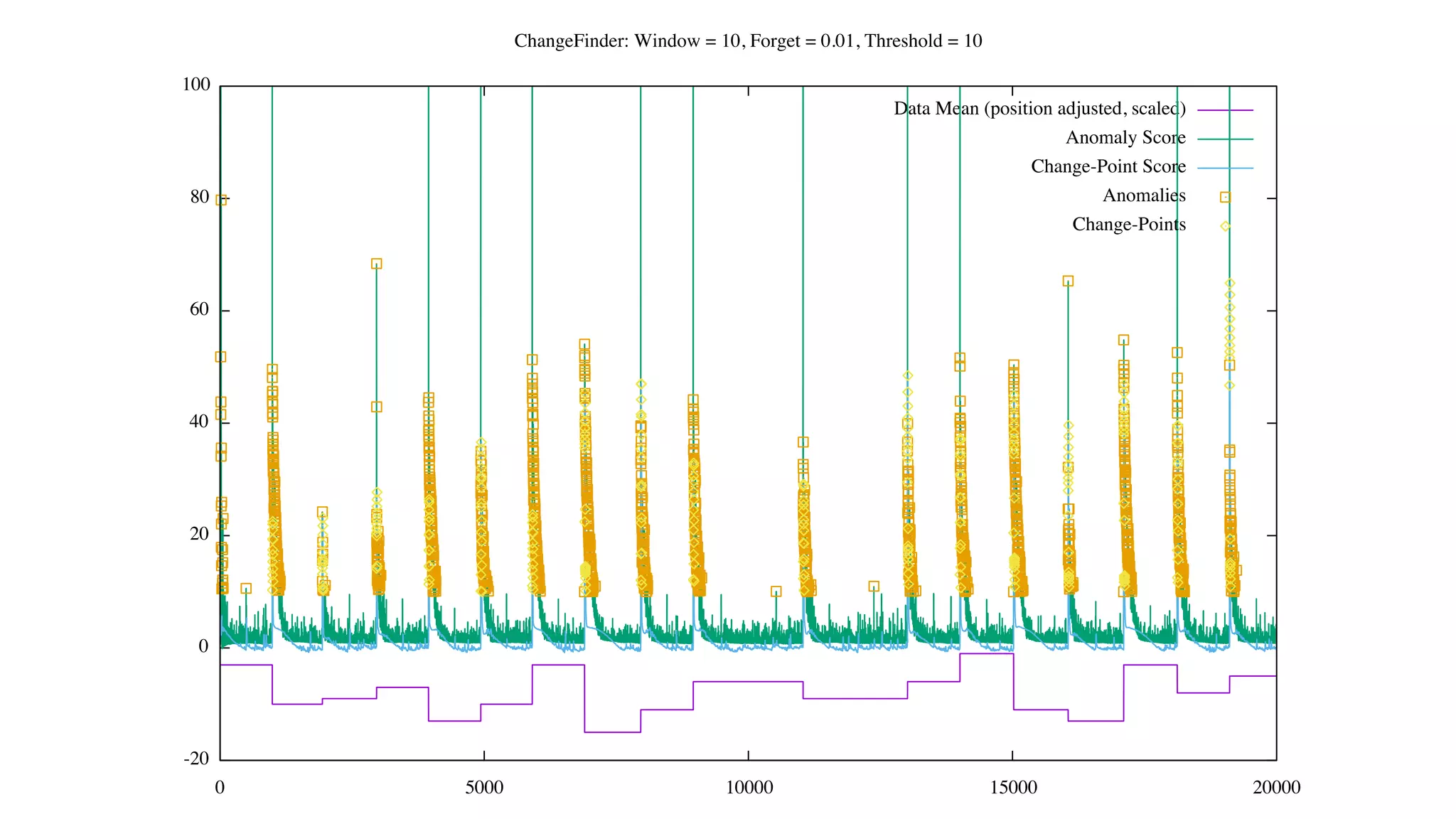

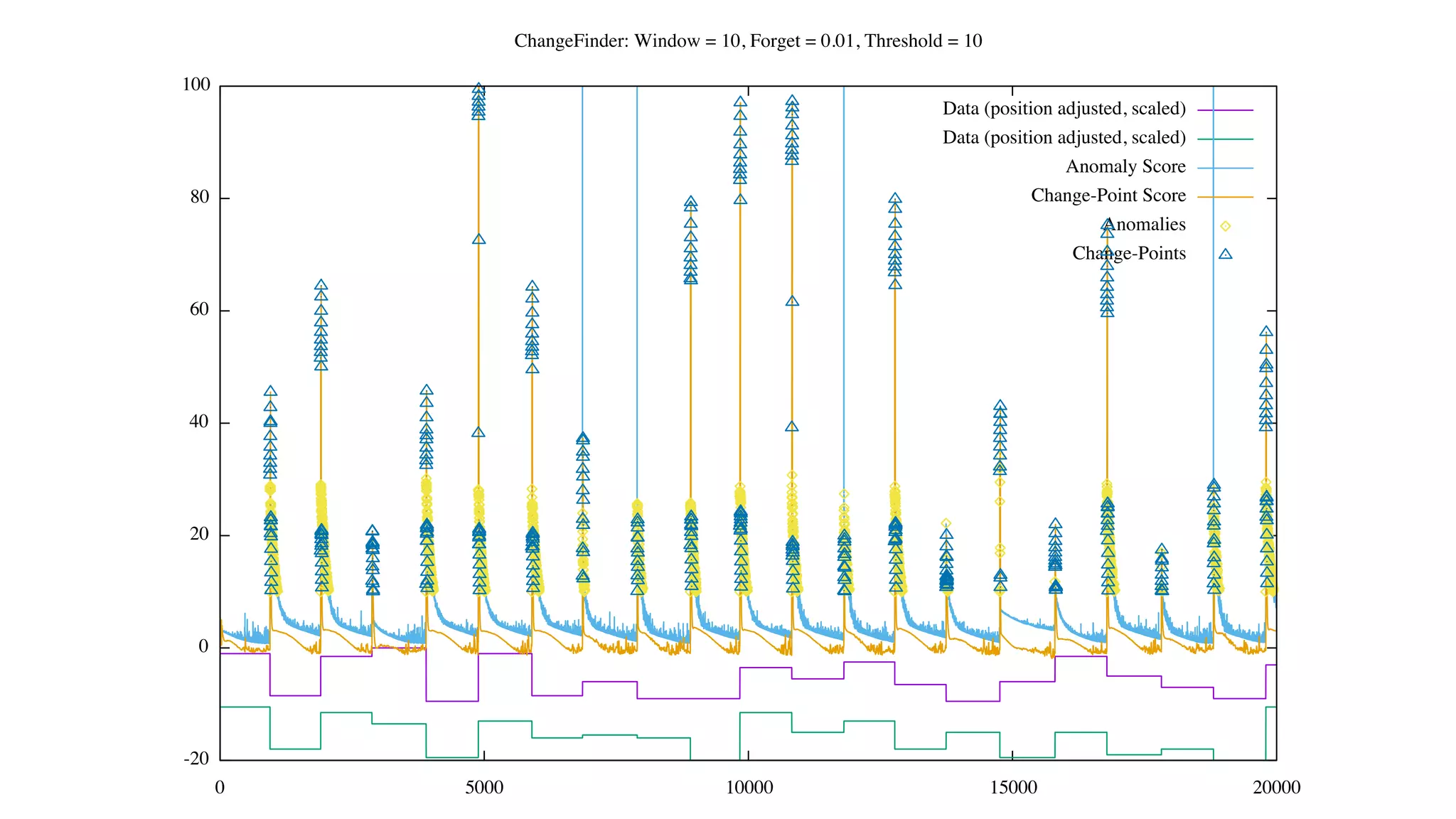

![Status of ChangeFinder within Hivemall](https://image.slidesharecdn.com/spring2016intern-160616080150/75/Spring-2016-Intern-at-Treasure-Data-56-2048.jpg)

Status of ChangeFinder within Hivemall:

- No pull request yet
  - Branch: https://github.com/L3Sota/hivemall/tree/feature/cf_sdar_focused
- Mostly complete, but some issues remain with detection accuracy, especially at higher dimensions.
- `cf_detect(array<double> x[, const string options])`
  - ChangeFinder expects its input one data point (one vector) at a time; it automatically learns from the data in the order provided while returning detection results.
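To illustrate the one-point-at-a-time contract of `cf_detect`, the sketch below implements a simplified, one-dimensional version of the two-stage ChangeFinder scheme in Python. A sequentially discounting AR (SDAR) model scores each point by its negative log-likelihood under the model learned so far; those scores are averaged over a sliding window; a second SDAR model scores the smoothed series; and a second moving average yields the change-point score. It is not the code on the linked branch: the class and function names, the parameters `r` (discounting rate), `k` (AR order), and `t` (smoothing window), their default values, the initial variance, and the restriction to scalar input (whereas `cf_detect` takes a vector) are all assumptions for illustration.

```python
# Simplified 1-D sketch under stated assumptions; not the code on the linked branch.
import math
import random
from collections import deque


def levinson_durbin(c, k):
    """Solve the Yule-Walker equations for AR(k) coefficients from autocovariances c[0..k].

    Stops early (keeping the lower-order fit) if a reflection coefficient reaches
    magnitude 1, since discounted estimates need not form a positive-definite matrix.
    """
    a = [0.0] * (k + 1)
    if c[0] <= 1e-12:
        return a[1:]
    err = c[0]
    for m in range(1, k + 1):
        ref = (c[m] - sum(a[i] * c[m - i] for i in range(1, m))) / err
        if abs(ref) >= 1.0:
            break
        new = a[:]
        new[m] = ref
        for i in range(1, m):
            new[i] = a[i] - ref * a[m - i]
        a = new
        err *= 1.0 - ref * ref
    return a[1:]


class SDAR1D:
    """Hypothetical one-dimensional sequentially discounting AR model."""

    def __init__(self, r=0.02, k=3):
        self.r, self.k = r, k            # discounting rate and AR order
        self.mu = None                   # discounted mean, seeded by the first point
        self.c = [0.0] * (k + 1)         # discounted autocovariances C_0..C_k
        self.var = 1.0                   # discounted residual variance (initial guess)
        self.past = deque(maxlen=k)      # past[0] = x_{t-1}, past[1] = x_{t-2}, ...

    def _predict(self):
        omega = levinson_durbin(self.c, self.k)
        return self.mu + sum(w * (p - self.mu) for w, p in zip(omega, self.past))

    def update(self, x):
        """Score x as -log p_{t-1}(x) under the current model, then learn from x."""
        if self.mu is None:
            self.mu = x
        pred = self._predict()
        score = 0.5 * math.log(2 * math.pi * self.var) + (x - pred) ** 2 / (2 * self.var)
        r = self.r
        self.mu = (1 - r) * self.mu + r * x
        for j, lagged in enumerate([x] + list(self.past)):   # lagged = x_{t-j}
            self.c[j] = (1 - r) * self.c[j] + r * (x - self.mu) * (lagged - self.mu)
        fitted = self._predict()                               # uses updated parameters
        self.var = max((1 - r) * self.var + r * (x - fitted) ** 2, 1e-6)
        self.past.appendleft(x)
        return score


class ChangeFinderSketch:
    """Hypothetical two-stage ChangeFinder-style detector for a scalar stream."""

    def __init__(self, r1=0.02, k1=3, t1=7, r2=0.02, k2=3, t2=7):
        self.stage1 = SDAR1D(r1, k1)     # models the raw series
        self.stage2 = SDAR1D(r2, k2)     # models the smoothed outlier scores
        self.win1 = deque(maxlen=t1)     # smoothing window for outlier scores
        self.win2 = deque(maxlen=t2)     # smoothing window for change-point scores

    def update(self, x):
        """Consume one point; return (outlier_score, change_point_score)."""
        outlier = self.stage1.update(x)
        self.win1.append(outlier)
        smoothed = sum(self.win1) / len(self.win1)
        self.win2.append(self.stage2.update(smoothed))
        return outlier, sum(self.win2) / len(self.win2)


if __name__ == "__main__":
    rng = random.Random(1)
    # Synthetic stream whose mean jumps from 0 to 5 at t = 200.
    stream = [rng.gauss(0.0, 0.5) for _ in range(200)] + [rng.gauss(5.0, 0.5) for _ in range(200)]
    cf = ChangeFinderSketch()
    scores = [cf.update(x)[1] for x in stream]
    print("largest change-point score at t =", max(range(len(scores)), key=scores.__getitem__))
```

Each update in this scalar version costs O(k²) and keeps only the last k points, so the detector can run over an unbounded stream; the multivariate SDAR model replaces the scalar autocovariances with d × d covariance matrices.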