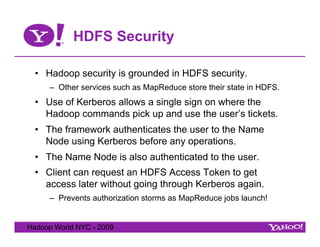

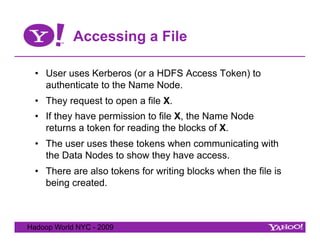

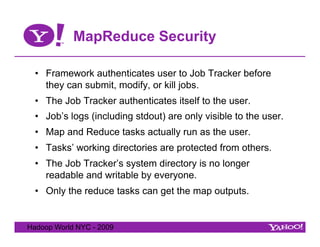

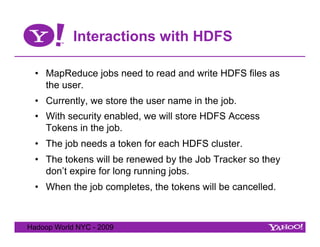

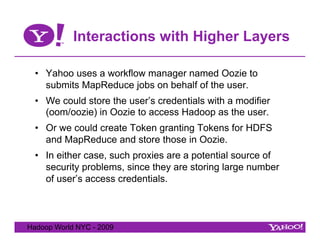

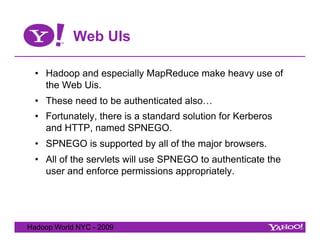

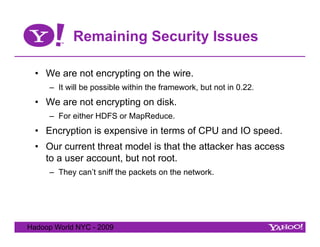

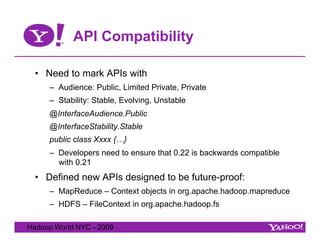

Owen O'Malley discusses security and compatibility challenges in Hadoop, emphasizing the need for authenticated access to shared clusters that store sensitive customer data. Key solutions include using Kerberos for authentication, managing HDFS security, and keeping APIs and protocols backwards compatible across new Hadoop versions. The presentation also highlights unresolved security issues, such as the lack of encryption for data in transit and at rest.