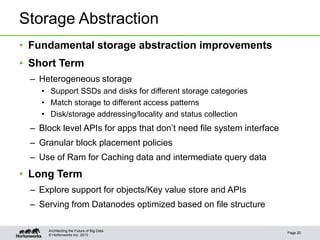

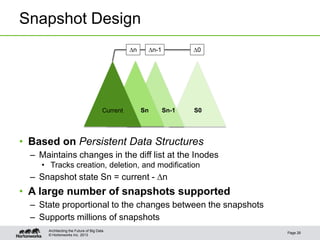

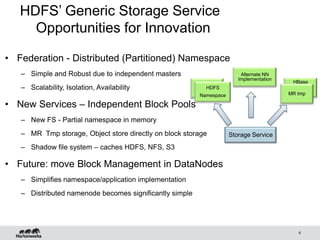

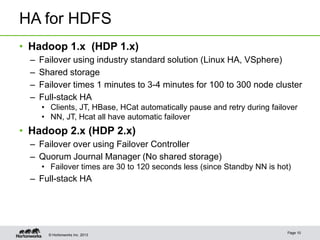

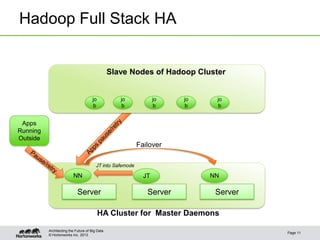

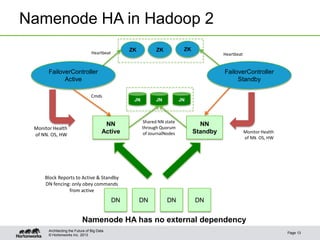

The document discusses enhancements in Hadoop 2.0, highlighting features like federation, high availability, and snapshots. It notes significant progress in reliability, operability, and scalability, with over 2192 commits and continuous improvements to performance and enterprise readiness. Future directions include enhanced storage abstraction and support for heterogeneous storage systems.

![© Hortonworks Inc. 2013

Snapshot – APIs and CLIs

• All regular commands & APIs can be used with snapshot path

– /<path>/.snapshot/snapshot_name/file.txt

– Copy /<path>/.snapshot/snap1/ImportantFile /<path>/

• CLIs

– Allow snapshots

• dfsadmin –allowSnapshots <dir>

• dfsadmin –disAllowSnapshots <dir>

– Create/delete/rename snapshots

• fs –createSnapshot<dir> [snapshot_name]

• fs –deleteSnapshot<dir> <snapshot_name>

• fs –renameSnapshot<dir> <old_name> <new_name>

– Tool to print diff between snapshots

– Admin tool to print all snapshottable directories and snapshots

Page 15

Architecting the Future of Big Data](https://image.slidesharecdn.com/radia-june26-255pm-hall1-v3-130709110628-phpapp01/85/HDFS-What-is-New-and-Future-15-320.jpg)