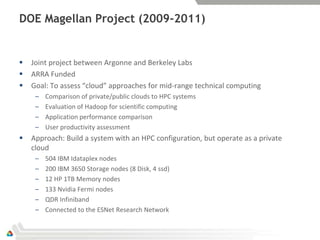

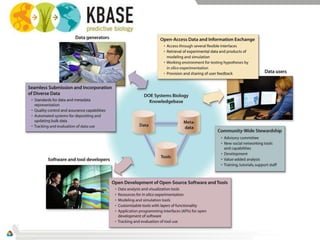

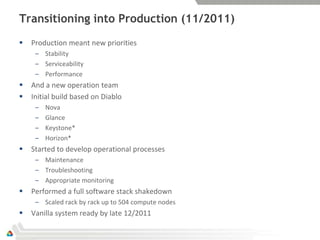

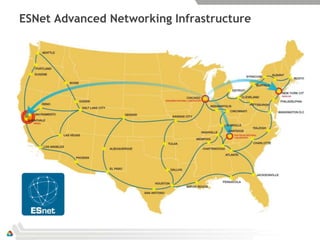

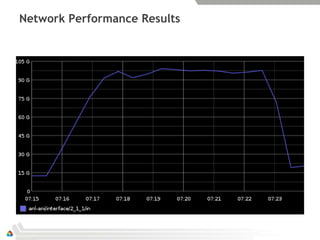

The document summarizes Magellan's experience using OpenStack as a cloud computing platform for high-performance computing (HPC) workloads. Initial testing revealed stability and scalability problems with Eucalyptus, whereas OpenStack performed much better. A production OpenStack deployment was then established to support scientific computing projects. Subsequent work optimized networking performance, achieving near-wire-speed wide-area data transfers of 95 Gbps with OpenStack. The results demonstrate OpenStack's potential for on-demand HPC and data-intensive workloads.