The document outlines the agenda for the May Triangle OpenStack Meetup, including:

- Welcome and introductions starting at 4:30pm

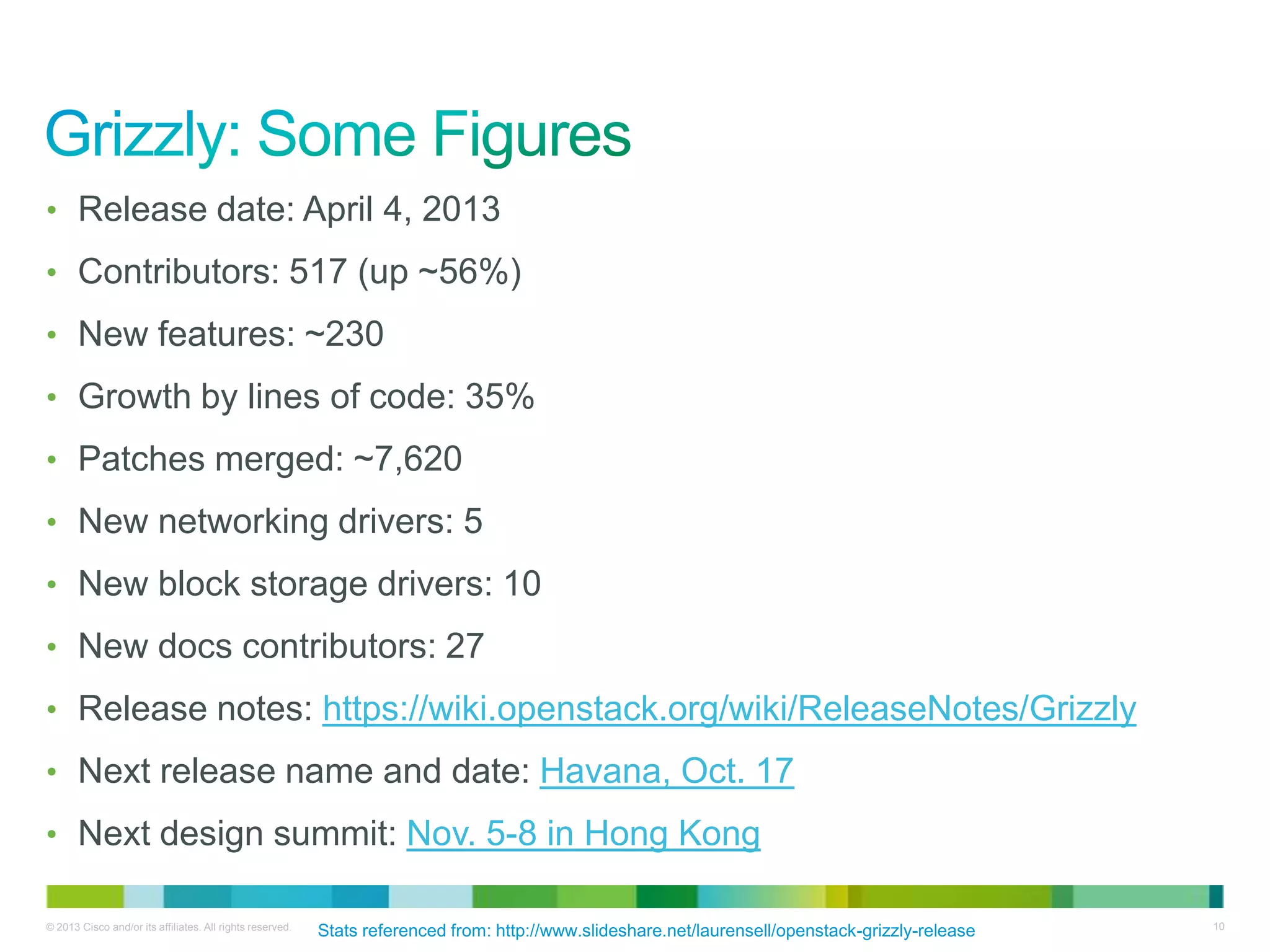

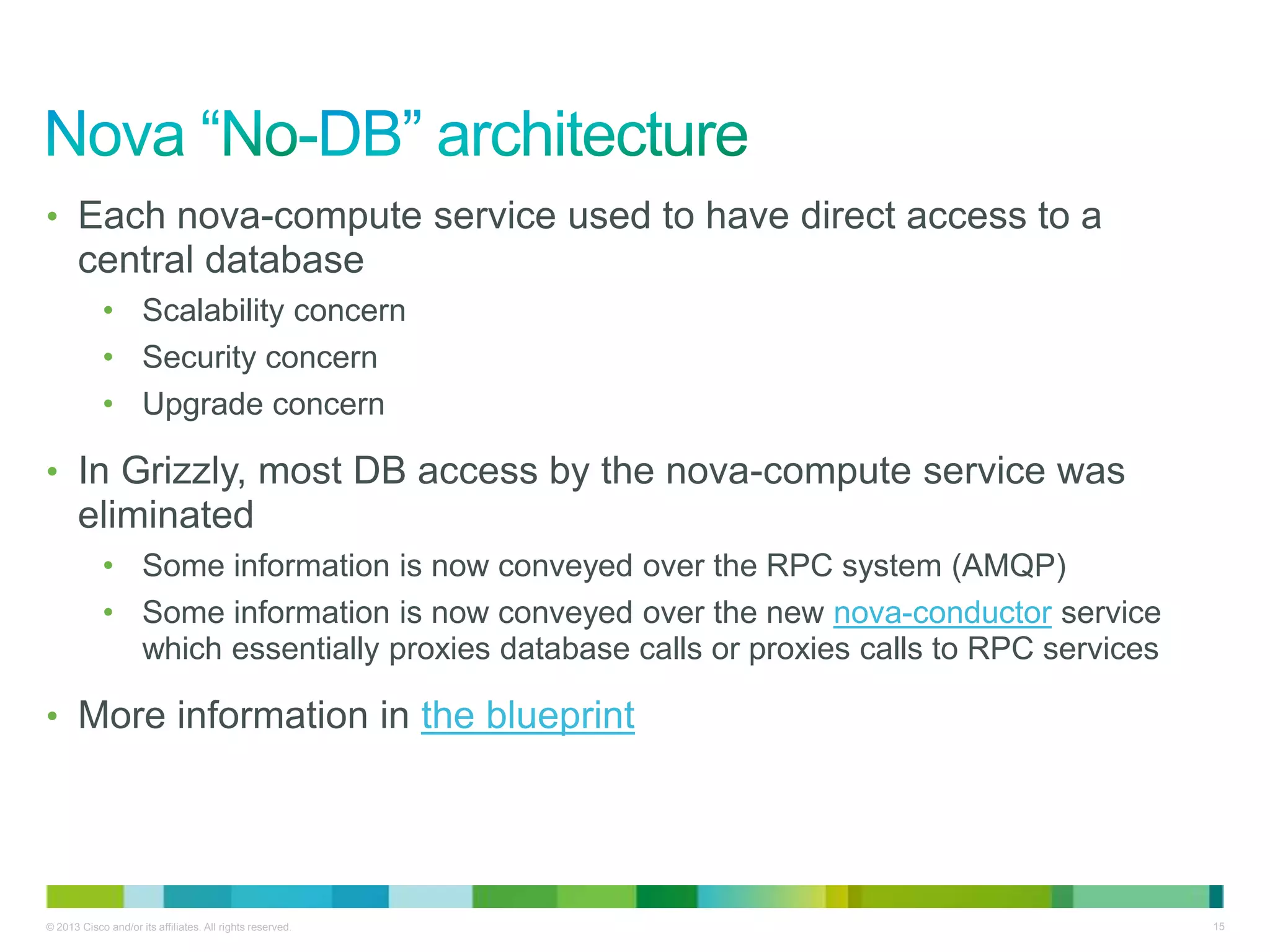

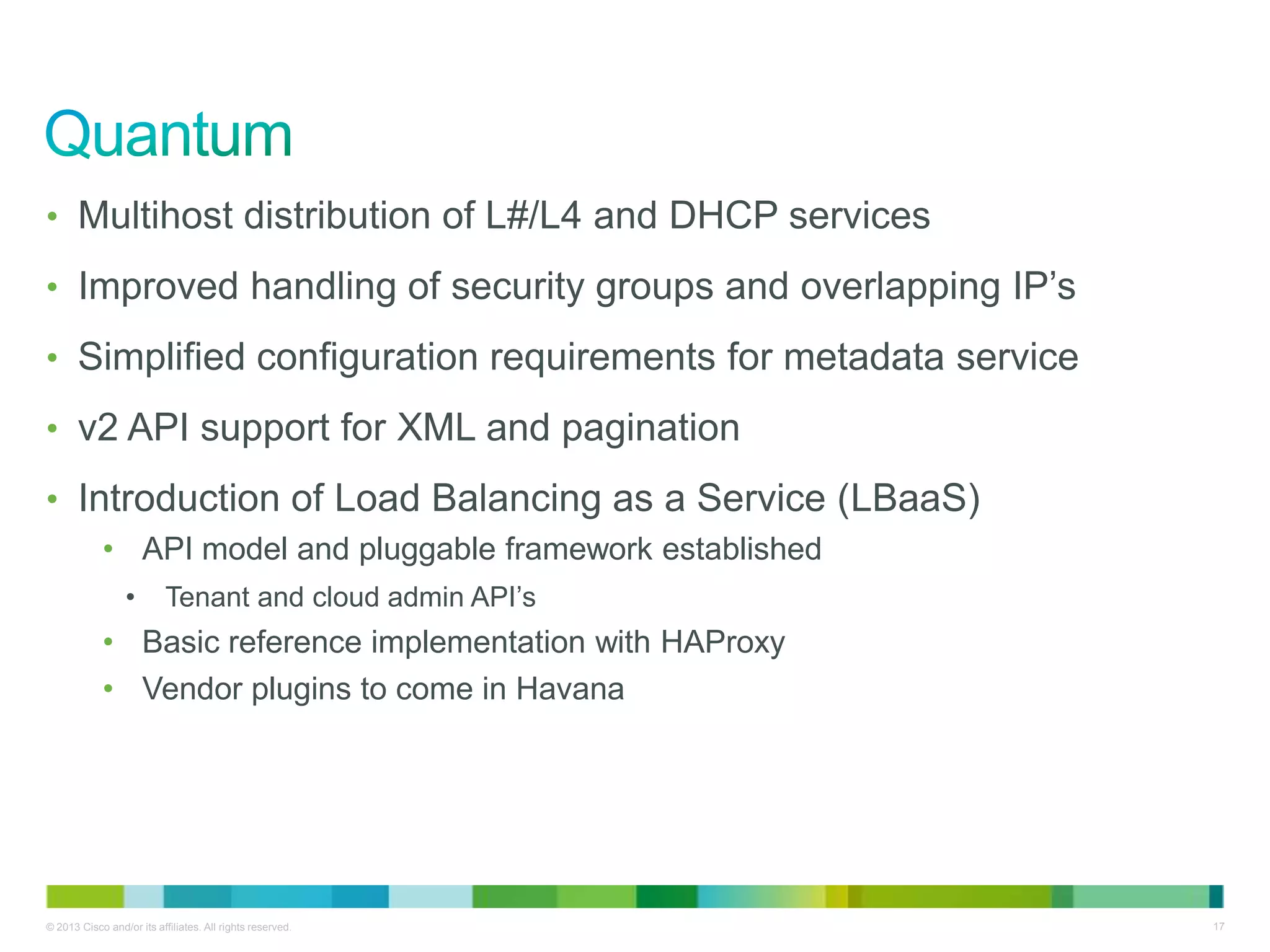

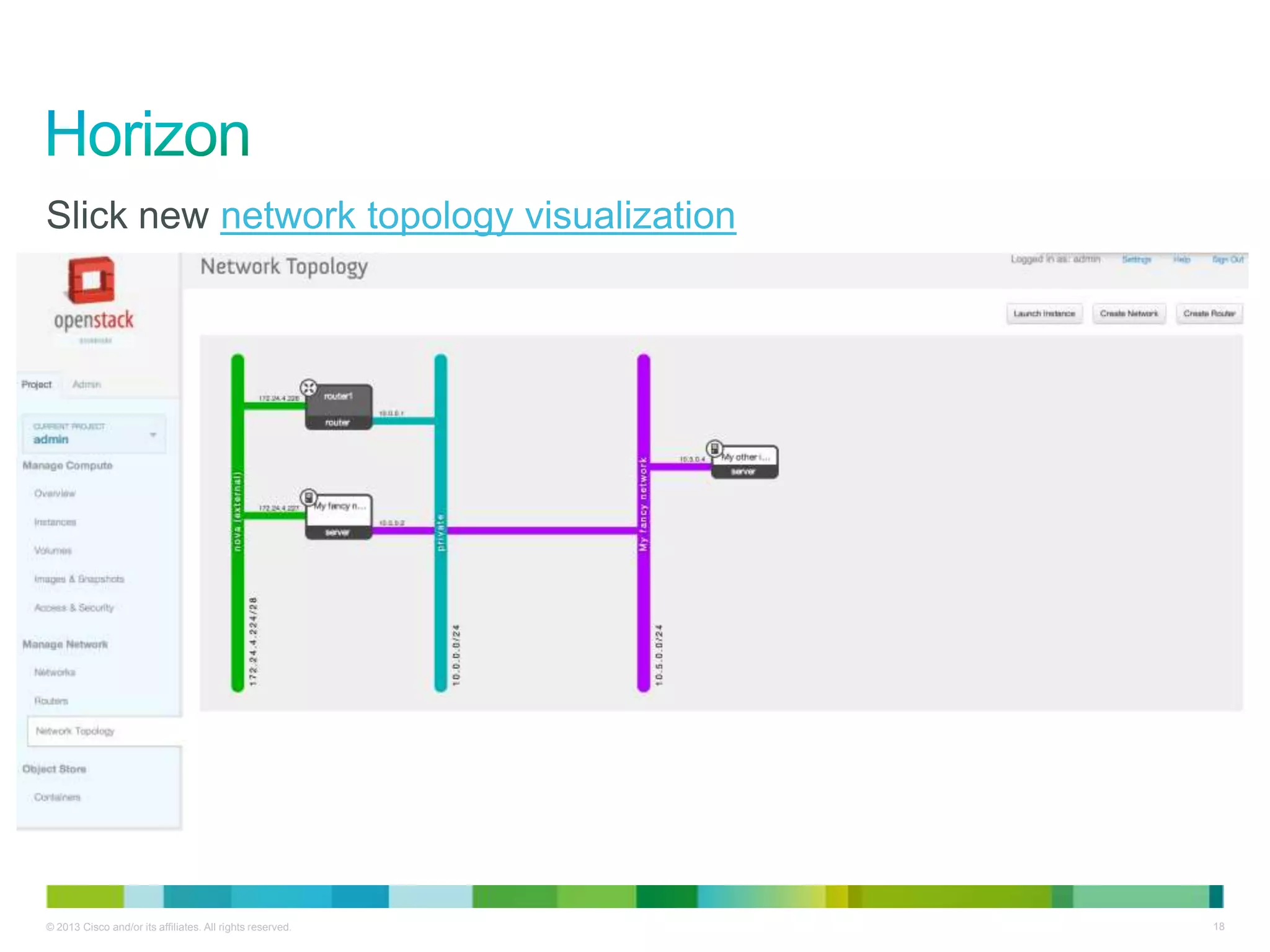

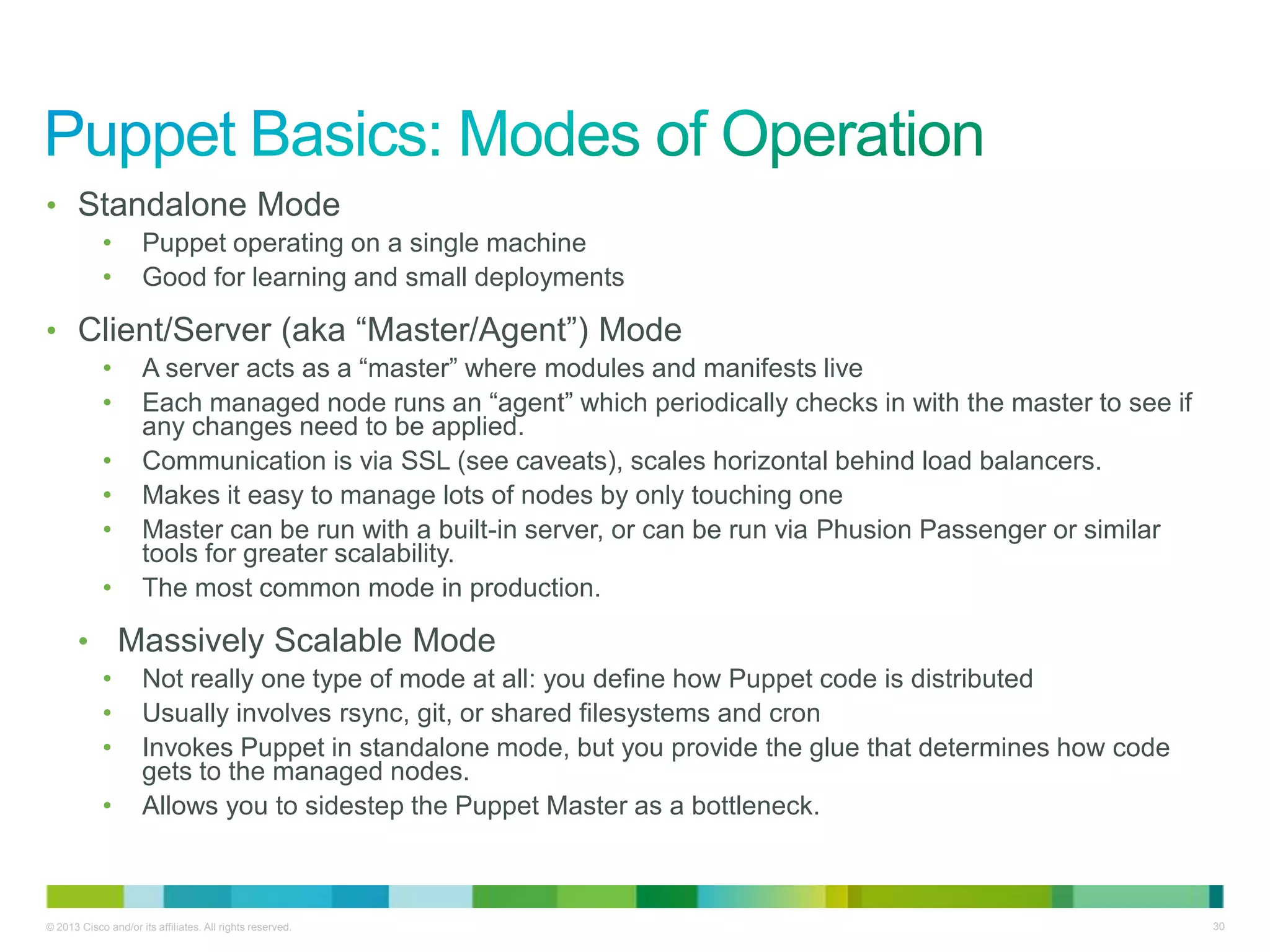

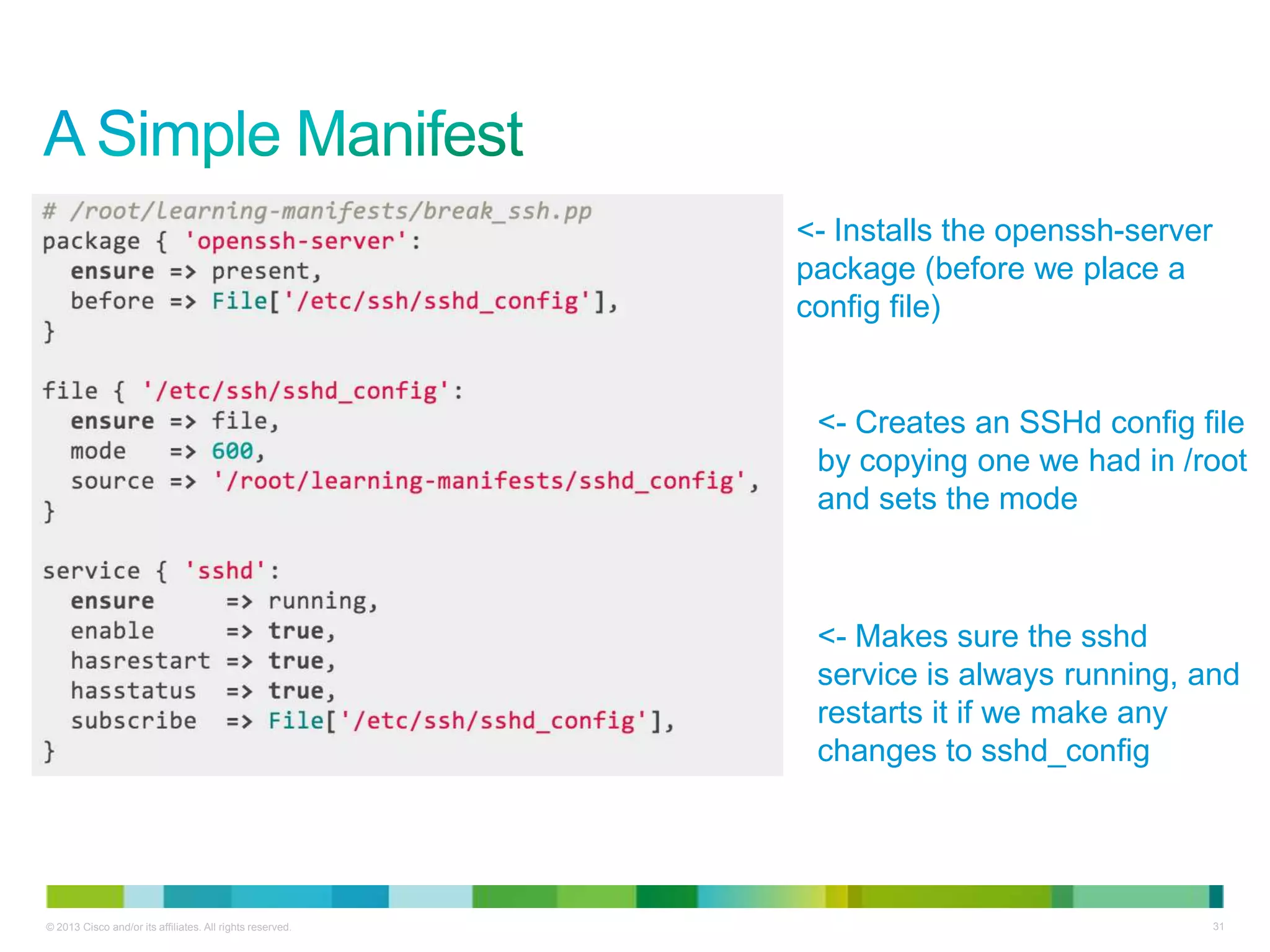

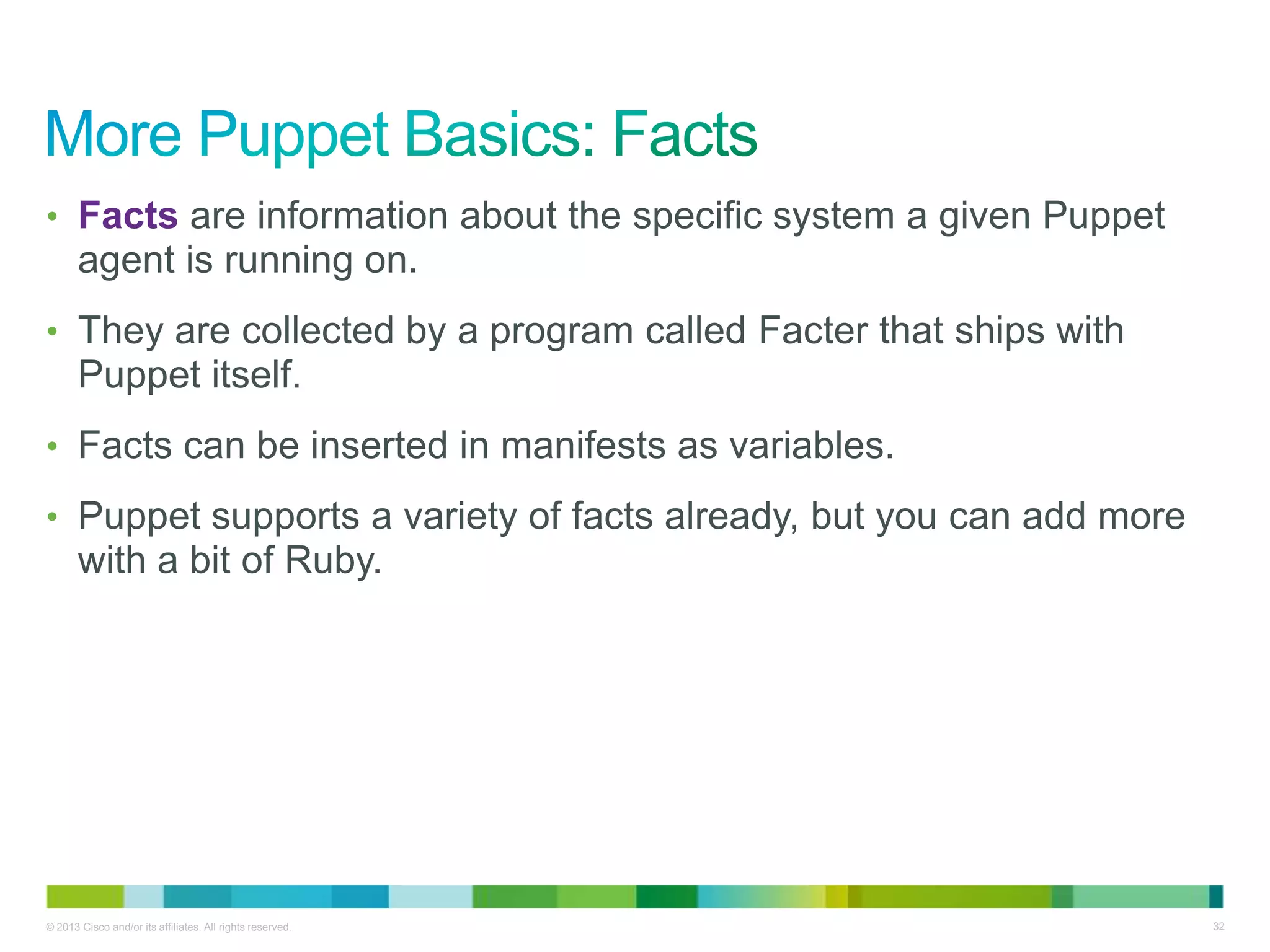

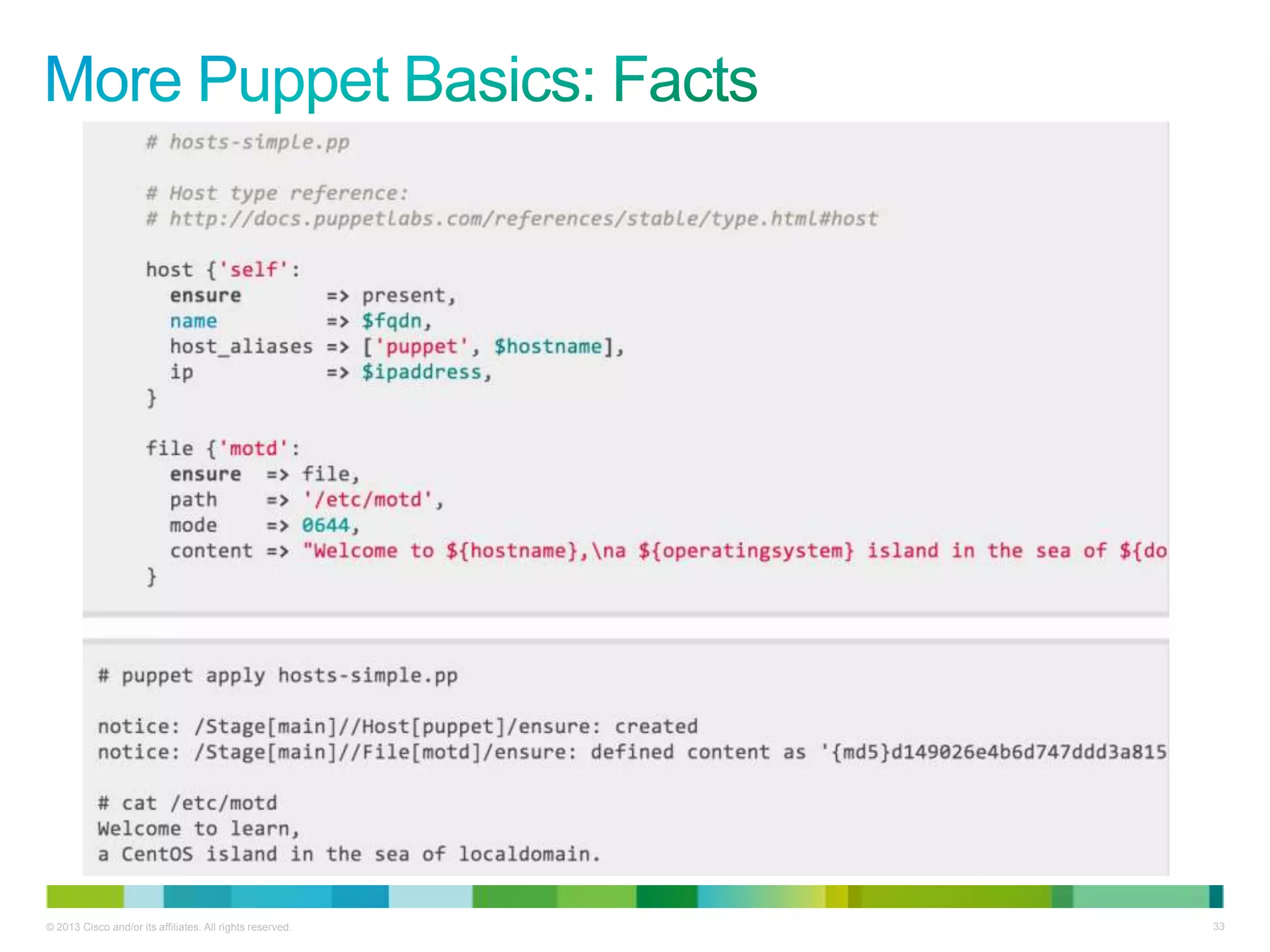

- Two technical talks from 4:45-5:30pm on new features in the Grizzly release of OpenStack and automating OpenStack with Puppet

- An open question and answer forum from 5:30-5:45pm

- Pizza will be served around 5:45pm

Bios of the three meetup organizers are also provided.