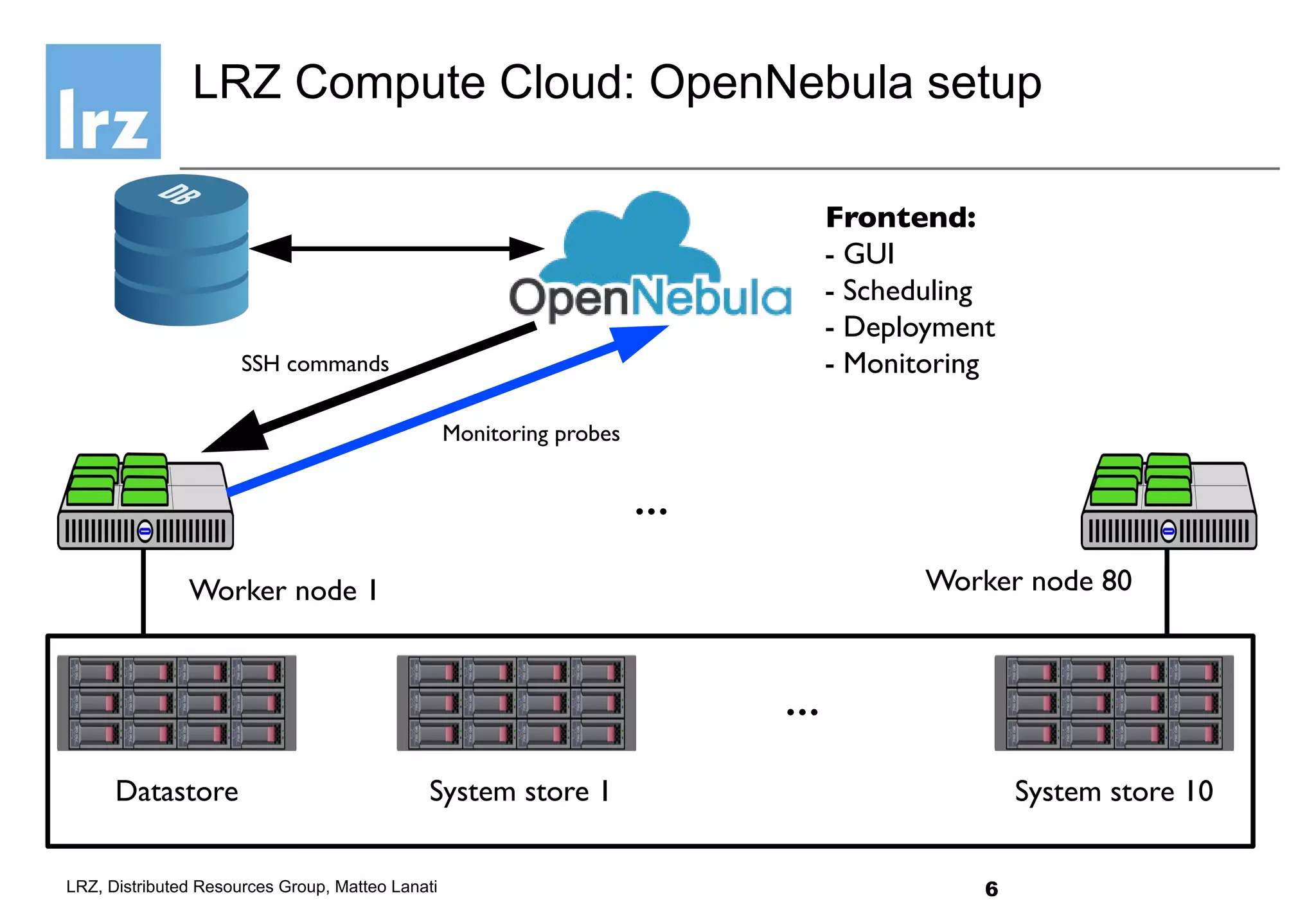

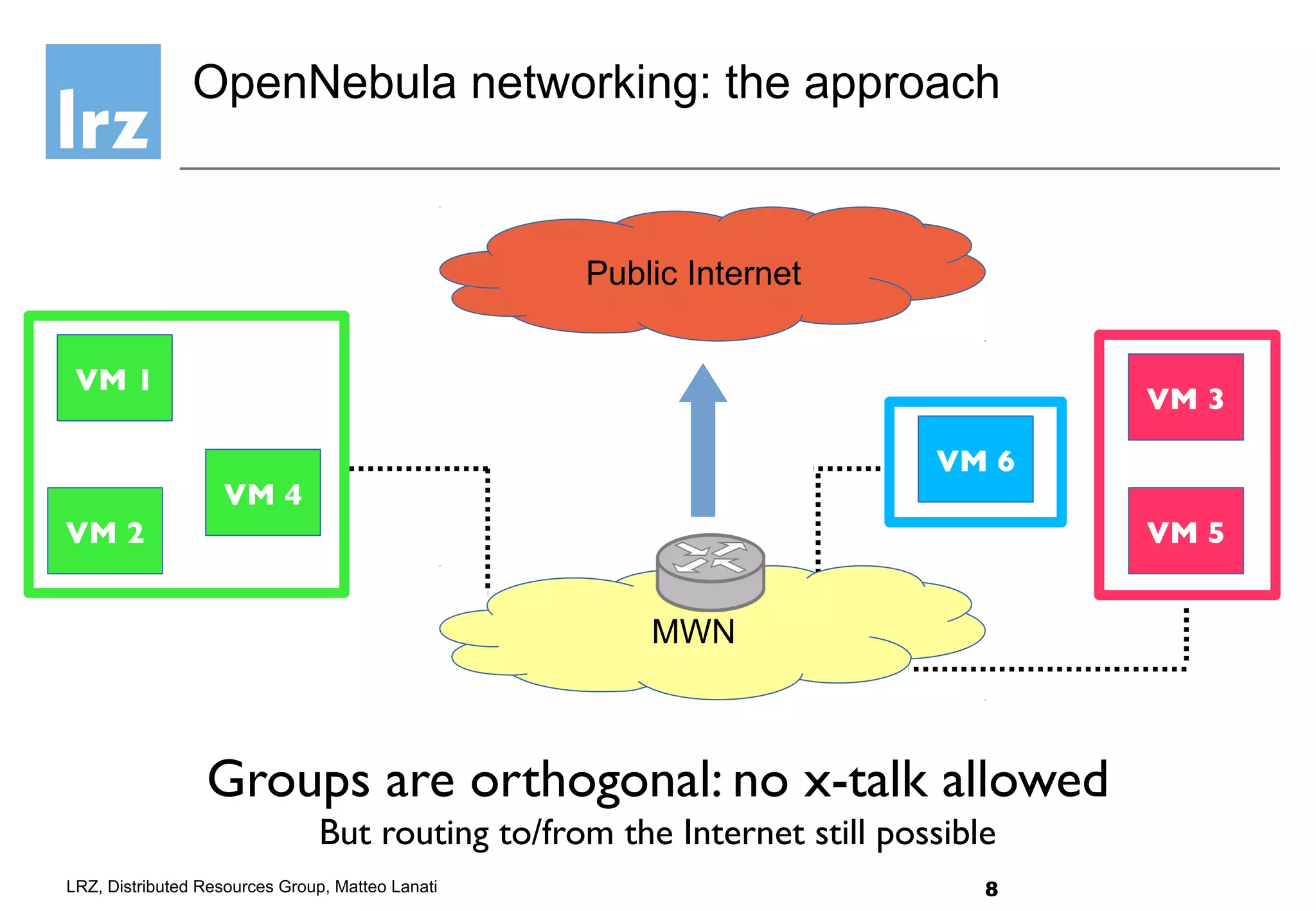

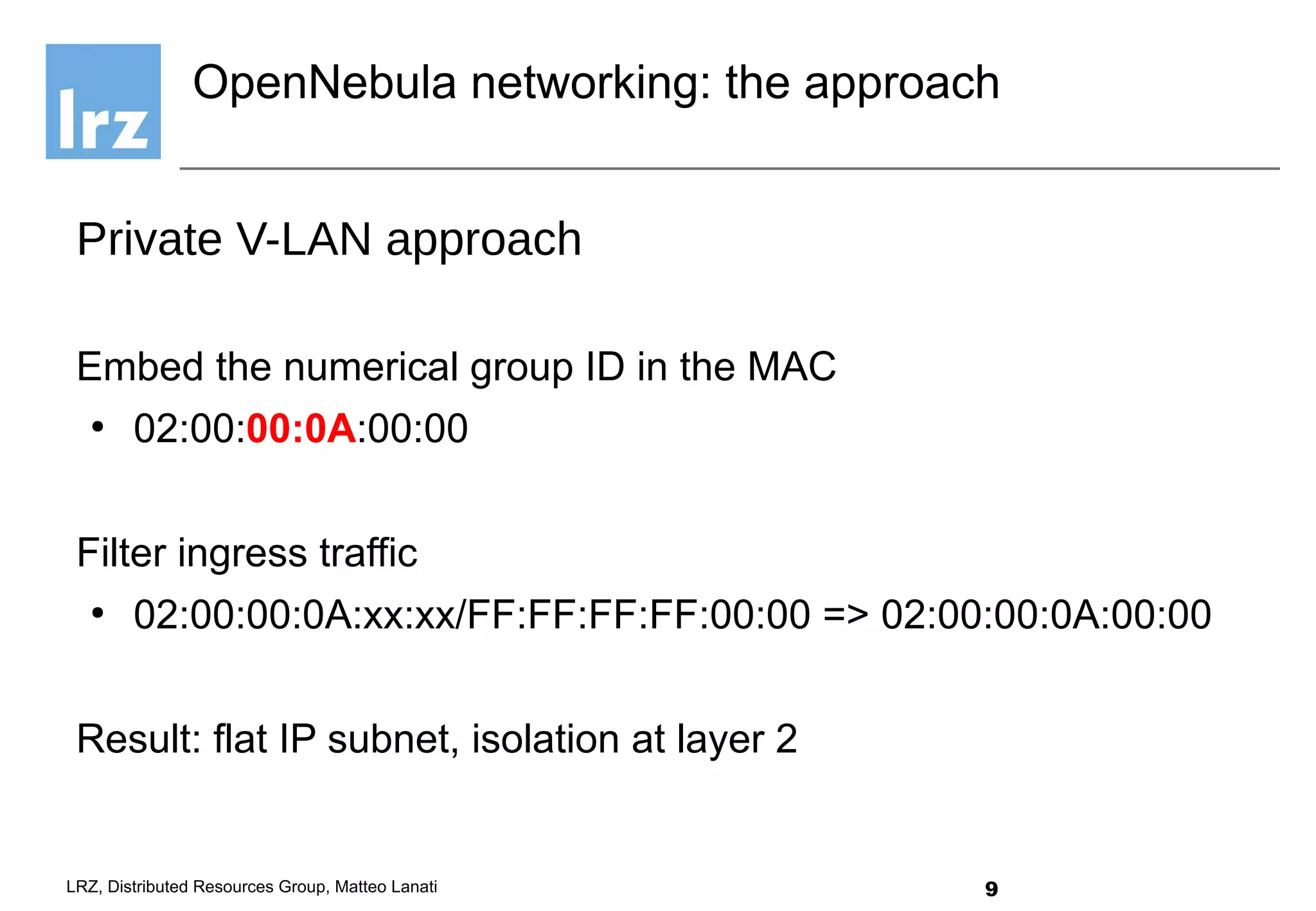

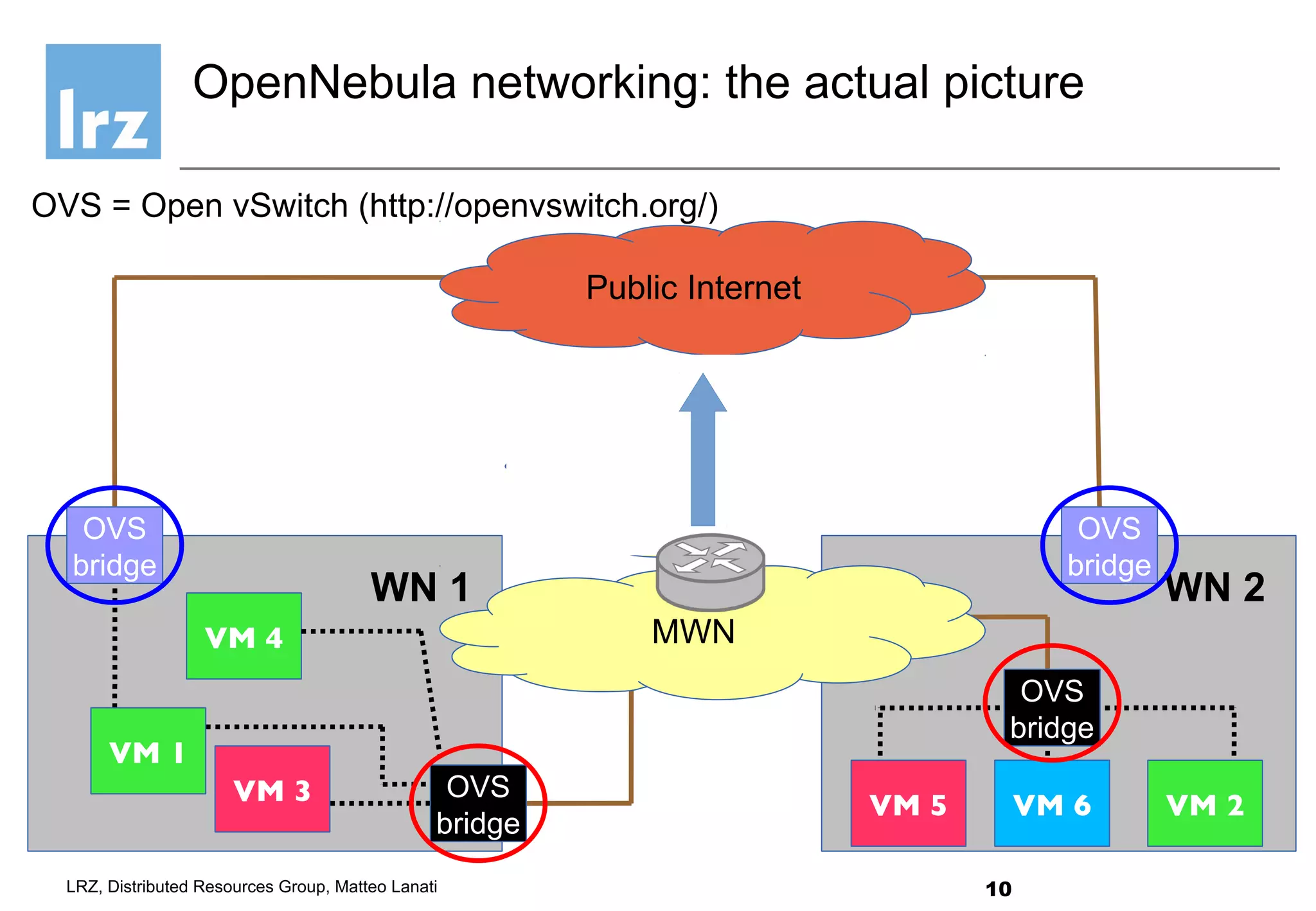

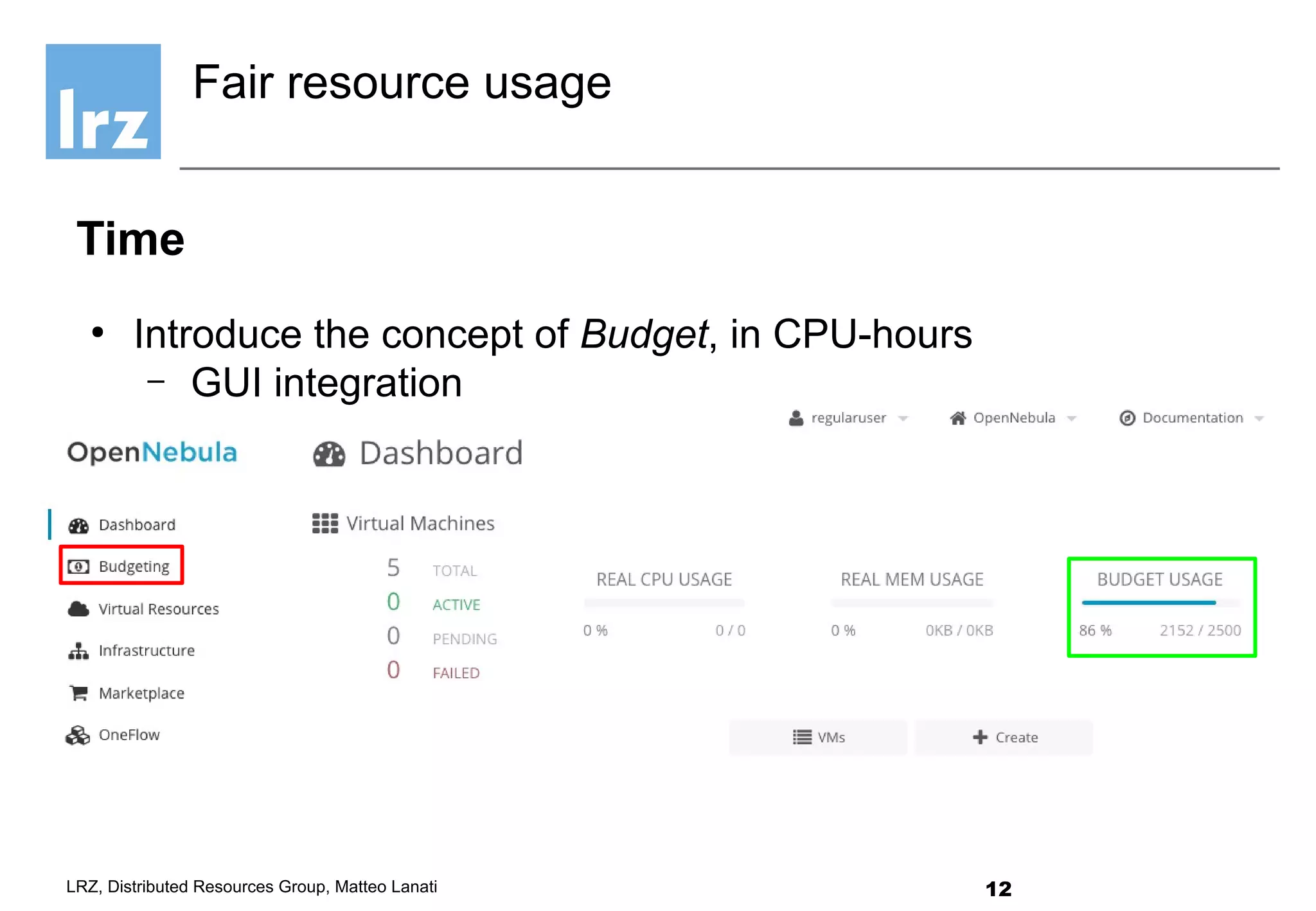

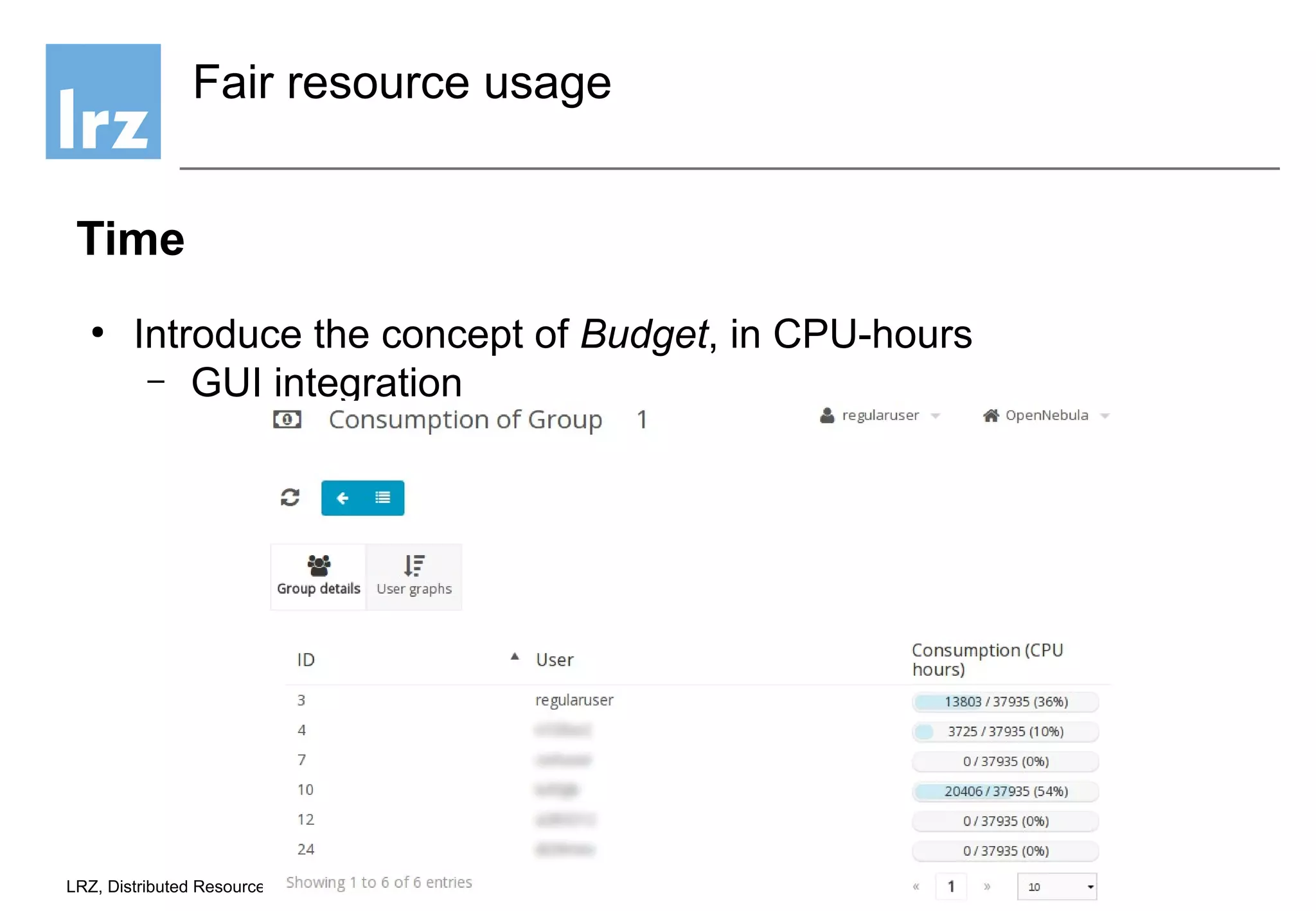

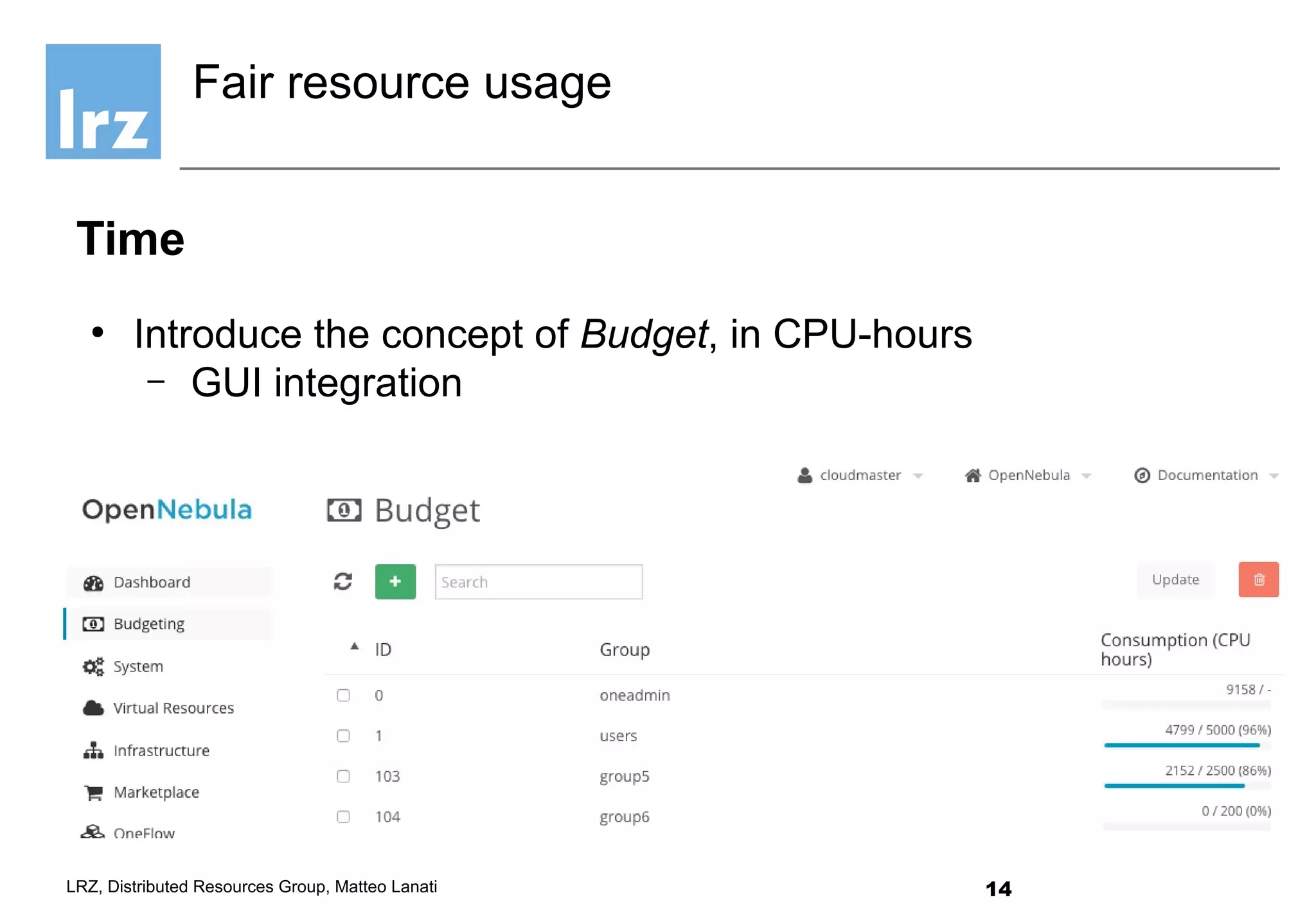

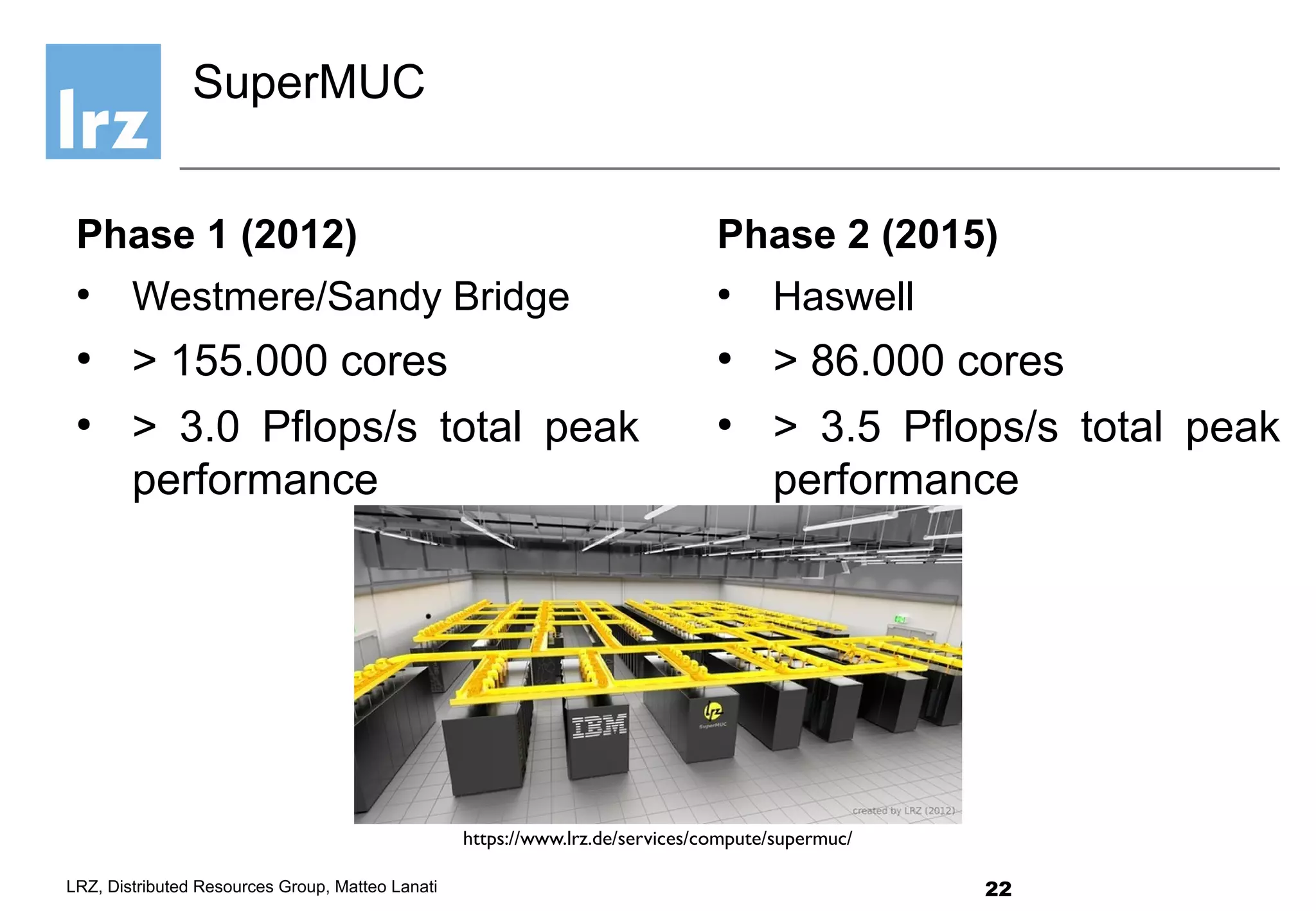

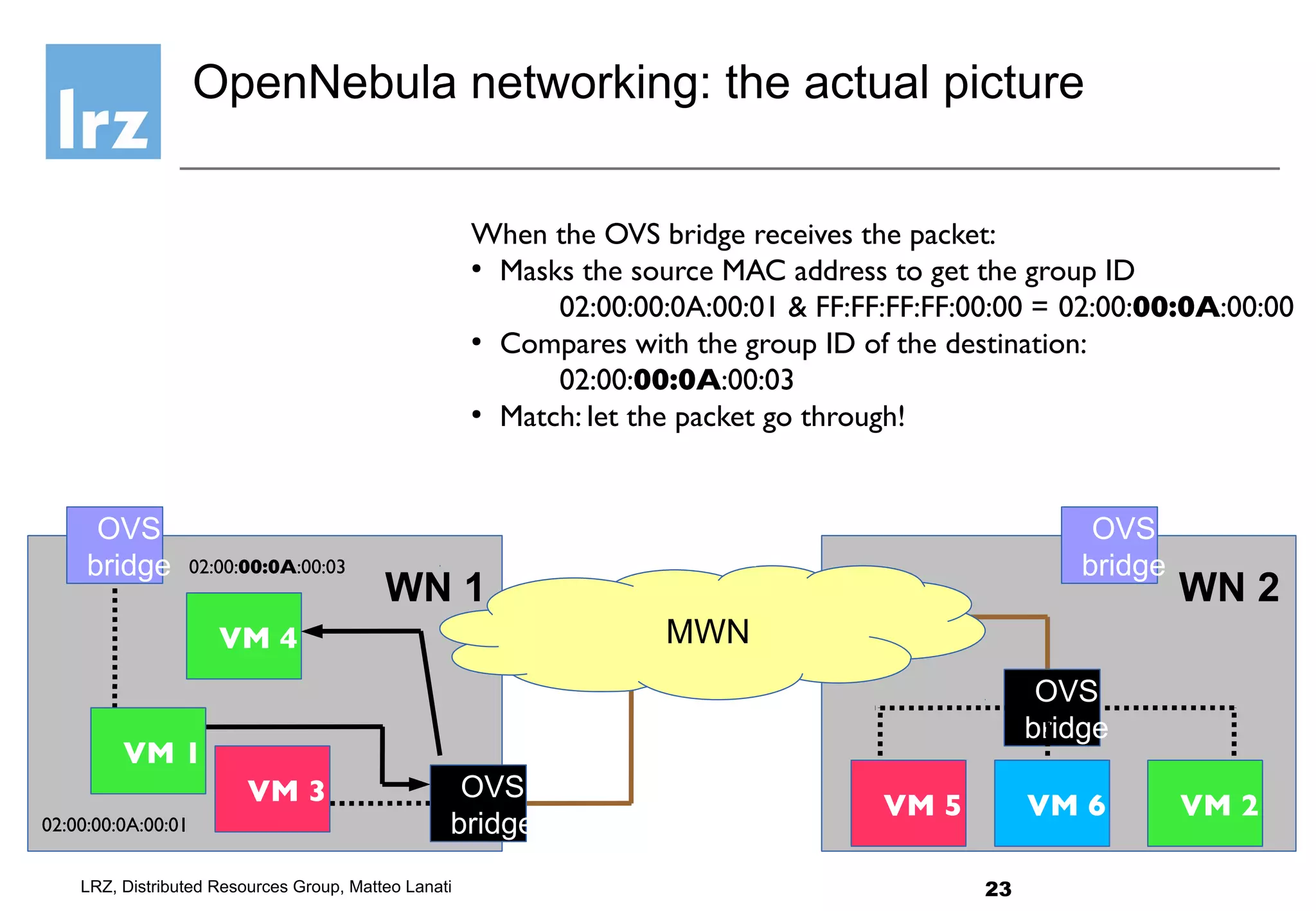

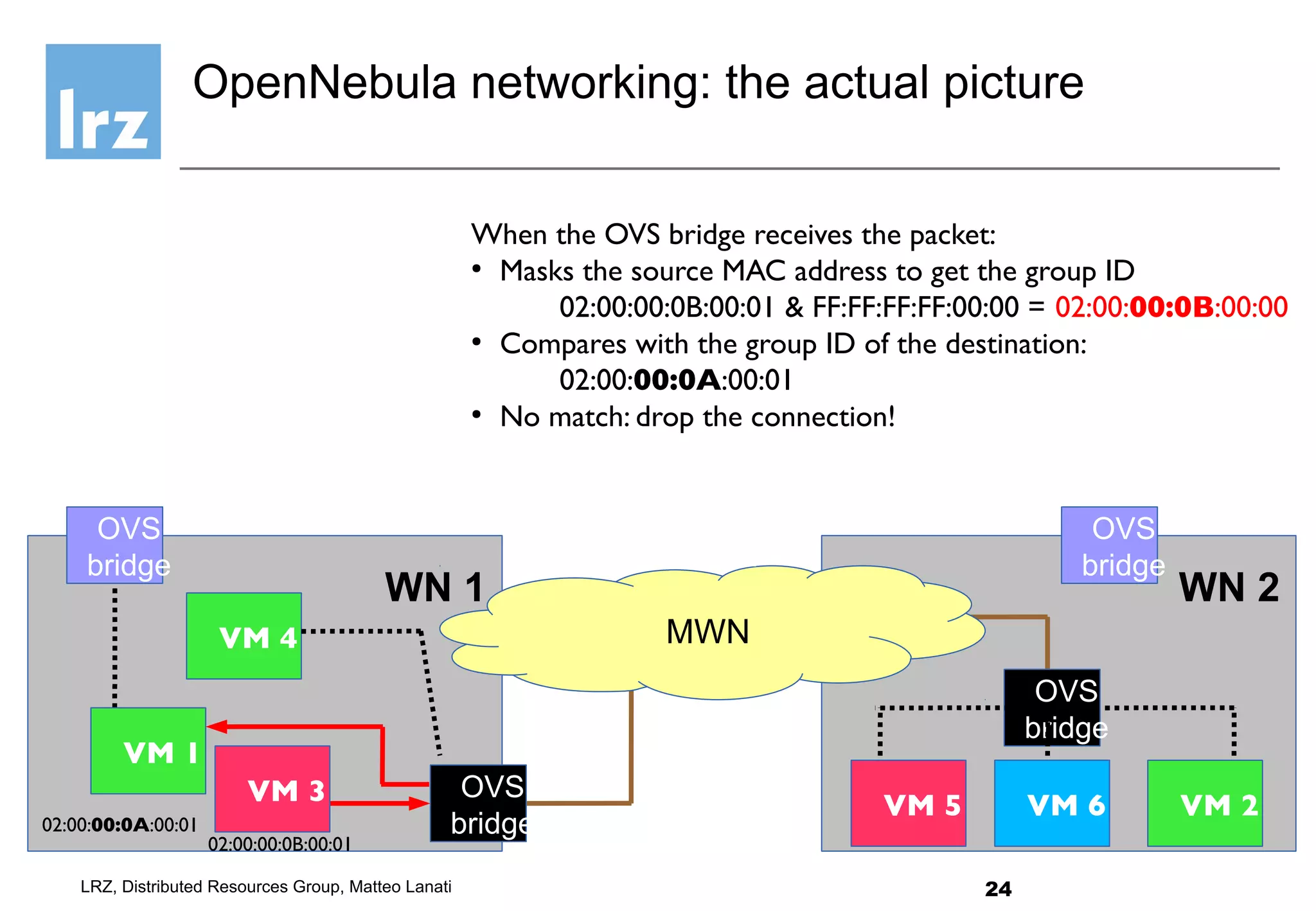

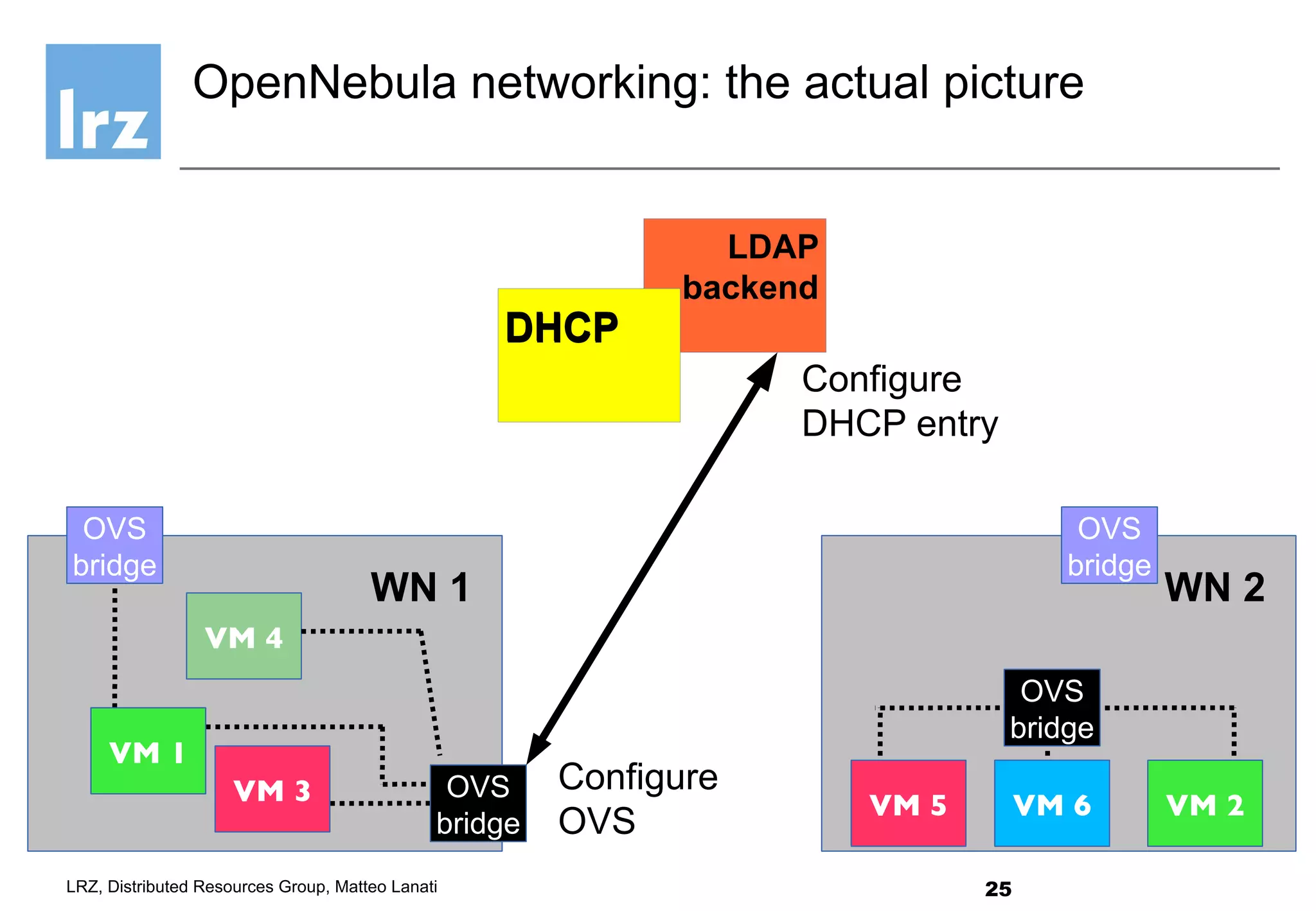

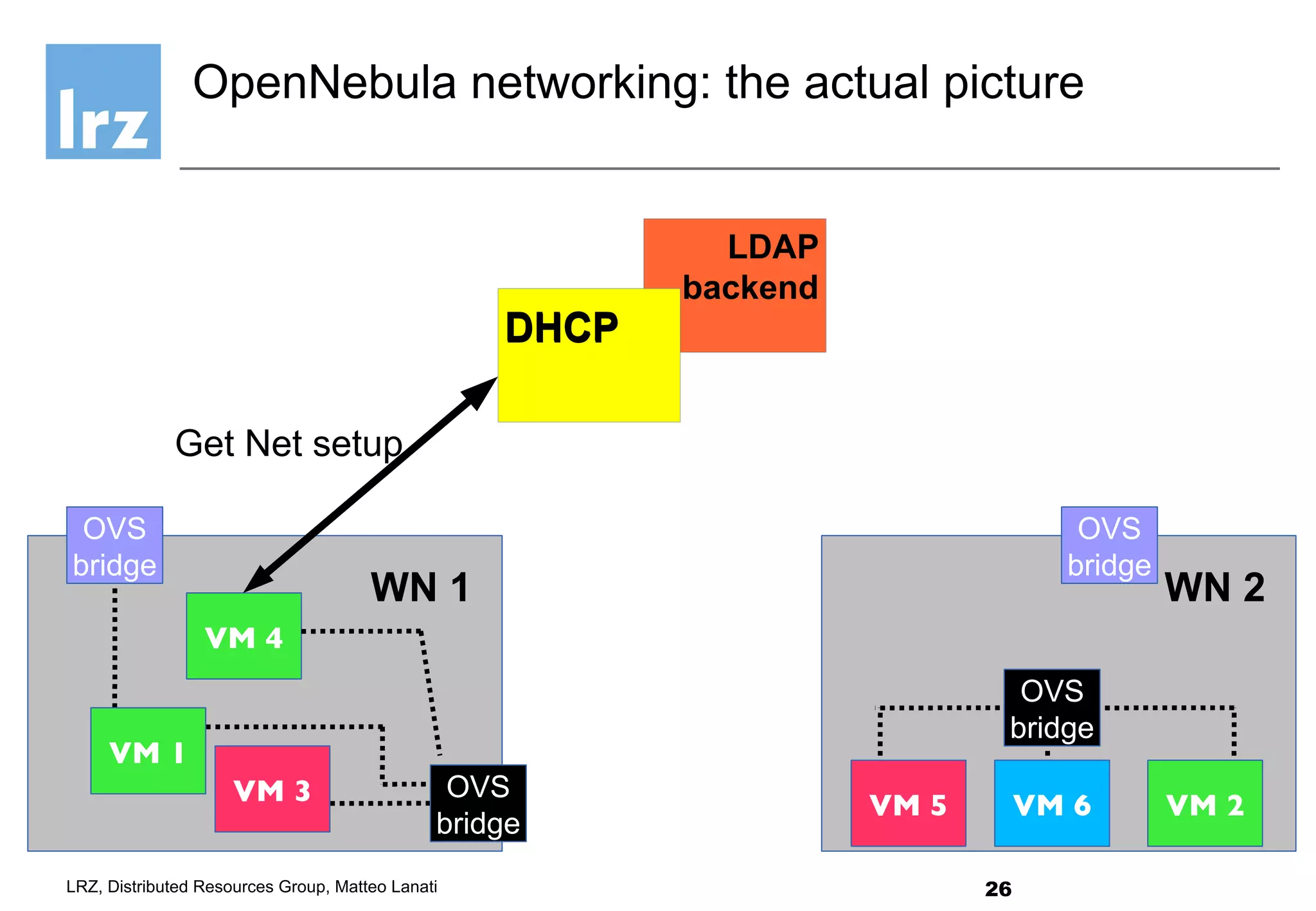

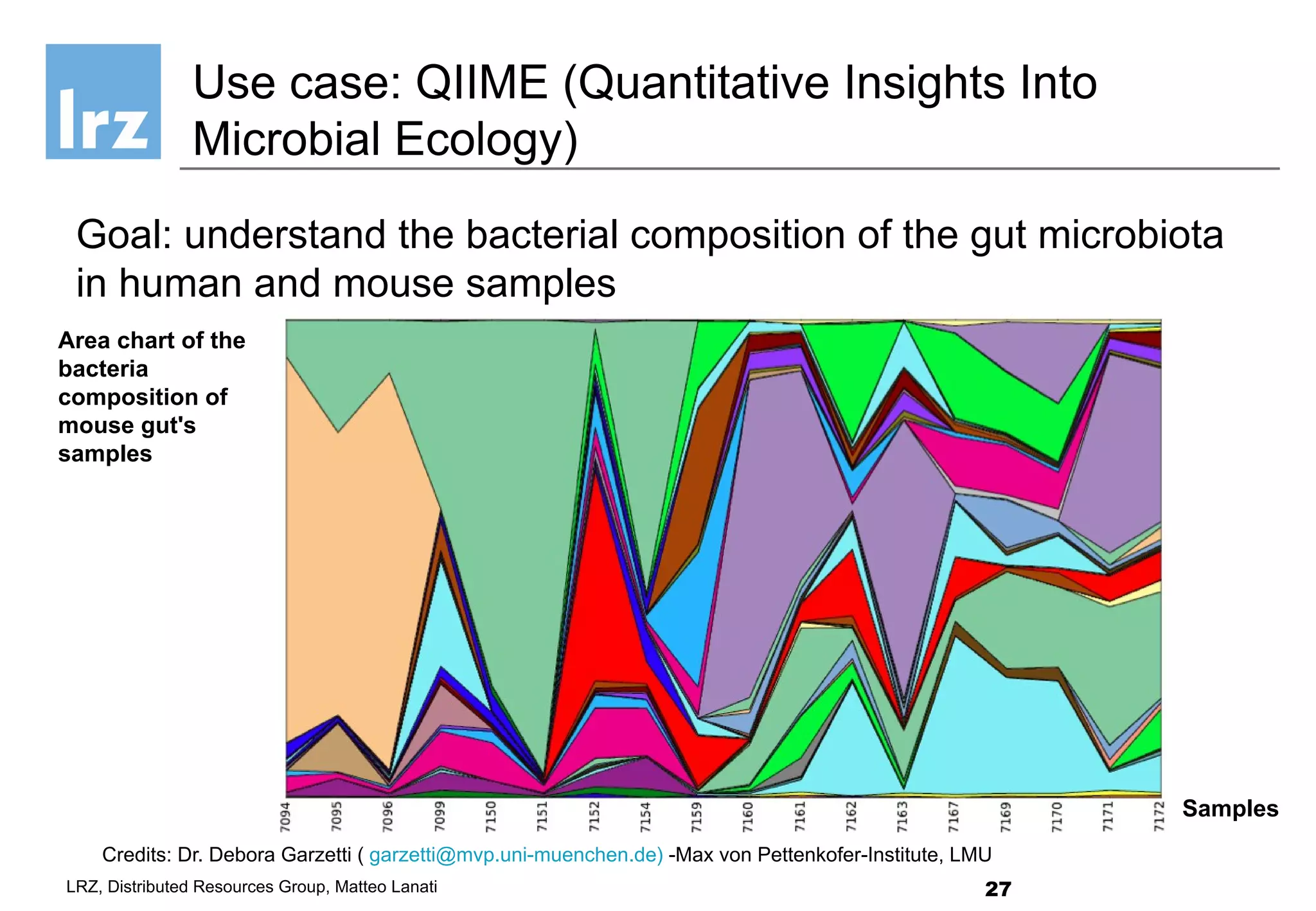

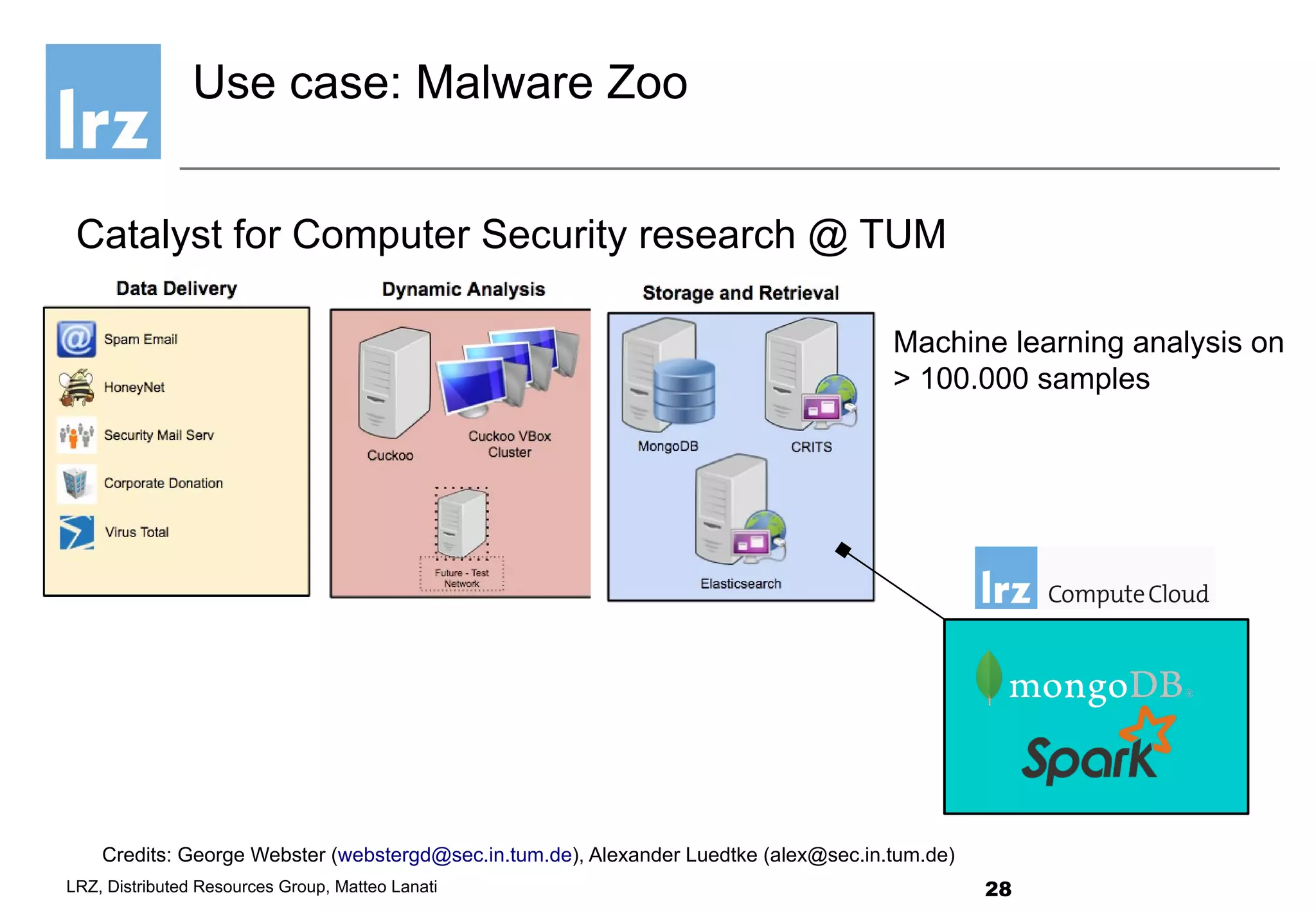

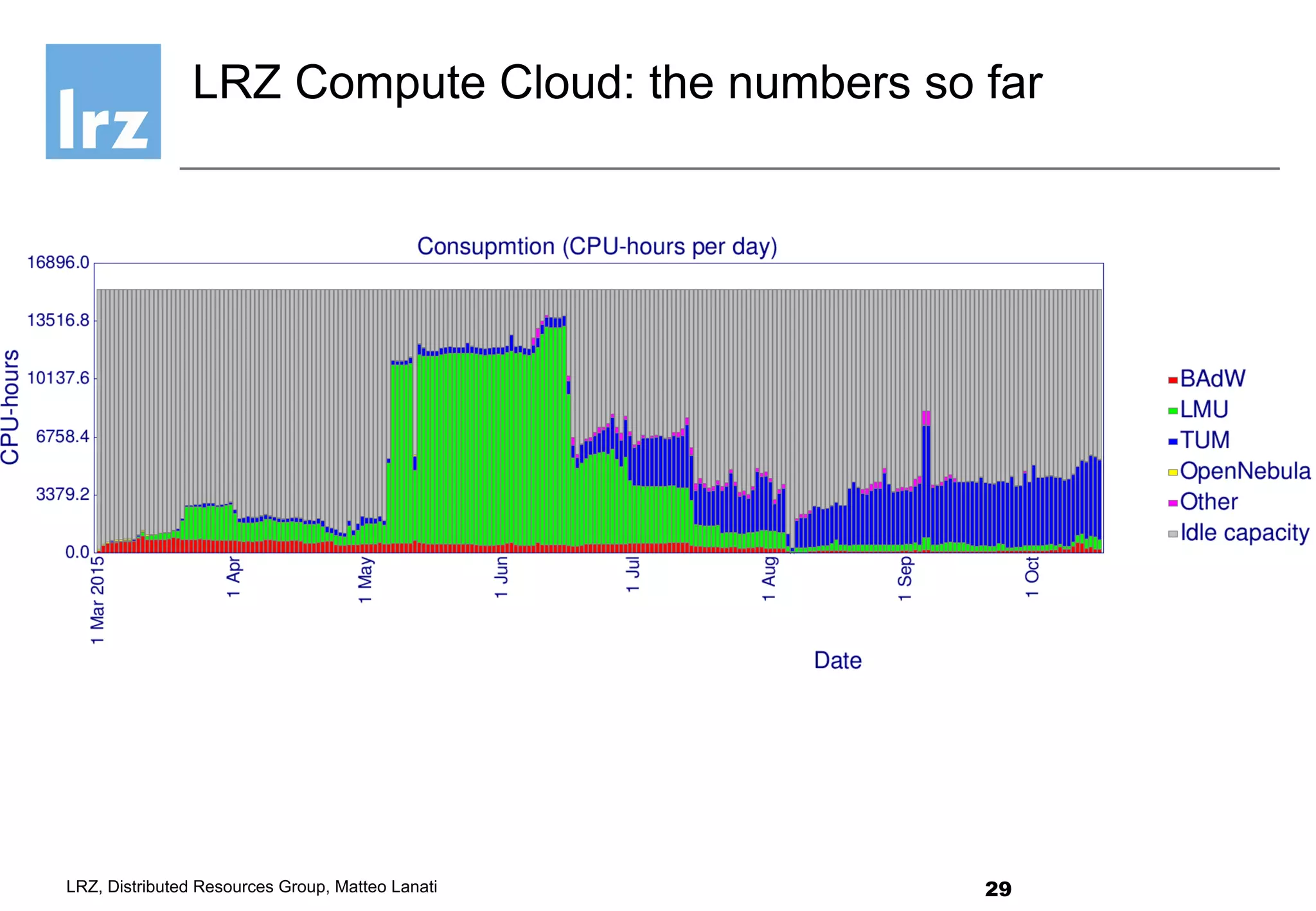

The document presents an overview of how OpenNebula is deployed and extended at the Leibniz Supercomputing Centre. It covers its use in high-performance computing environments and the challenges encountered there, such as resource management and network isolation. Key topics include budgeting of CPU resource usage, modifications to the handling of public IP pools, and techniques for grouping and isolating virtual machines. The author, Dr. Matteo Lanati, shares his experience with the project and outlines its future goals.