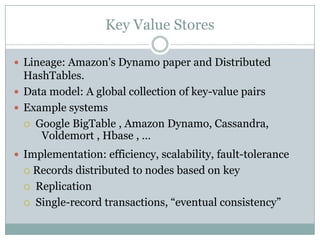

This document introduces NoSQL databases. It begins with the basic concepts of databases and database management systems (DBMS), then discusses SQL and relational databases. The main part defines NoSQL and explains why NoSQL databases were developed as an alternative to relational databases for handling large datasets. It gives examples of popular NoSQL databases, such as MongoDB, Cassandra, HBase, and CouchDB, and describes their key features and use cases.