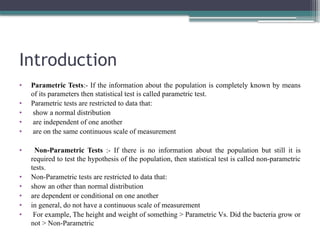

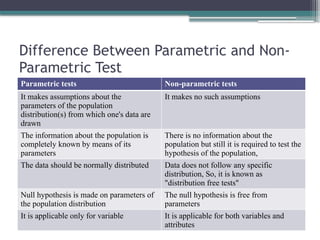

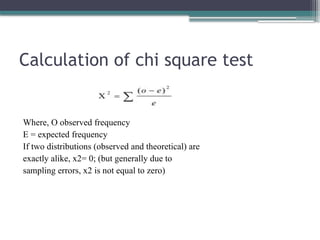

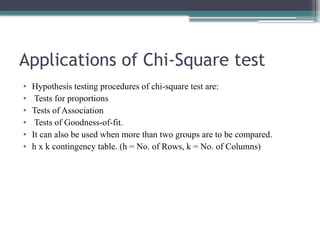

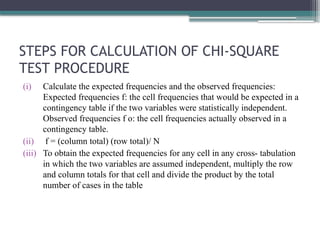

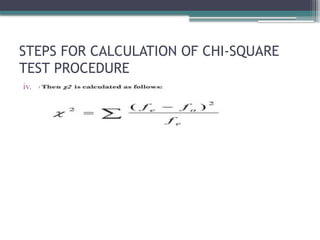

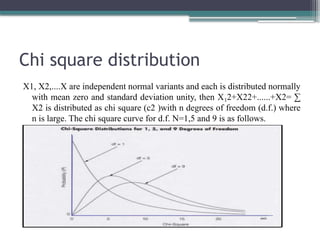

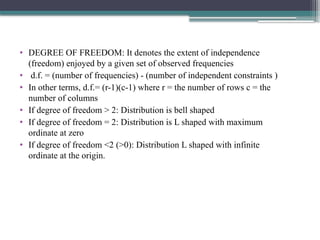

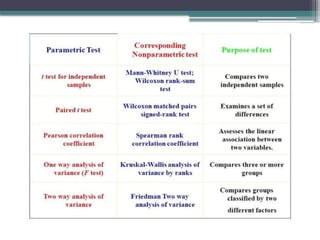

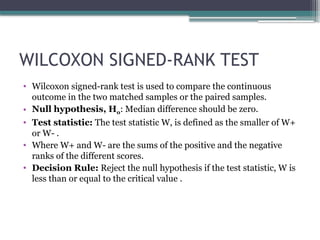

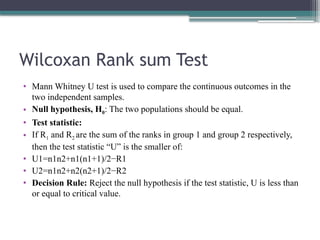

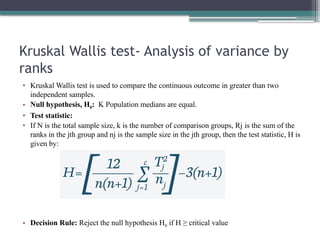

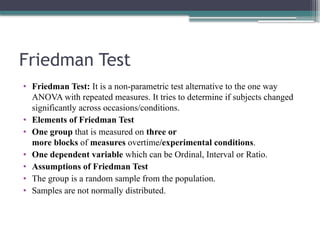

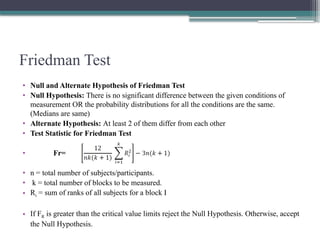

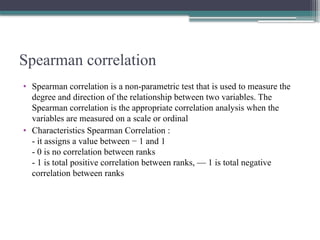

The document provides an overview of parametric and non-parametric statistical tests, outlining their definitions, assumptions, advantages, and disadvantages. It discusses tests such as the Wilcoxon rank test and the Chi-square test, along with their applications in hypothesis testing. It also highlights the conditions under which non-parametric tests are preferred over parametric tests.
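
As a rough illustration of how a test like the Chi-square test is used in hypothesis testing, the sketch below computes the Pearson Chi-square statistic for a test of independence on a small contingency table. The counts in `table` are hypothetical and do not come from the document, and the function name `chi_square_statistic` is an illustrative choice. The critical value 3.841 is the standard tabulated value for a 0.05 significance level with 1 degree of freedom.

```python
def chi_square_statistic(observed):
    """Return (statistic, degrees_of_freedom) for a contingency table.

    `observed` is a list of rows, each a list of non-negative counts.
    Expected counts assume the row and column variables are independent.
    """
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    grand_total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(observed):
        for j, count in enumerate(row):
            # Expected count under independence: (row total * column total) / grand total
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (count - expected) ** 2 / expected

    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return statistic, dof


# Hypothetical 2x2 table of counts (illustrative only, not from the document).
table = [[10, 20], [30, 40]]
stat, dof = chi_square_statistic(table)

# Tabulated critical value for alpha = 0.05 with 1 degree of freedom.
CRITICAL_0_05_DF1 = 3.841
reject_null = stat > CRITICAL_0_05_DF1

print(f"chi-square = {stat:.4f}, dof = {dof}, reject H0: {reject_null}")
```

With these made-up counts the statistic falls well below the critical value, so the null hypothesis of independence would not be rejected. The test makes no assumption that the data follow a normal distribution, which is one reason it is grouped with the non-parametric tests.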