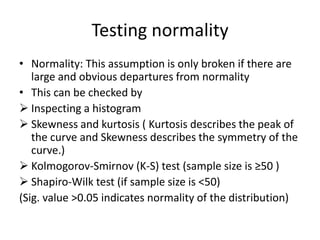

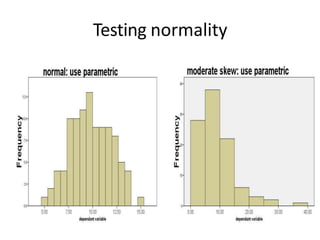

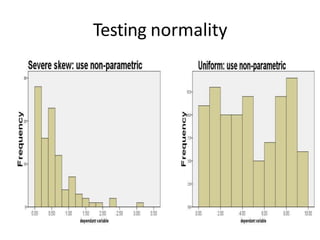

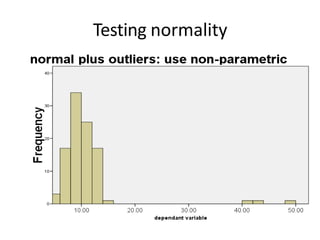

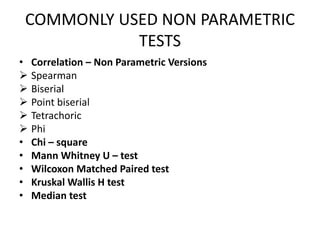

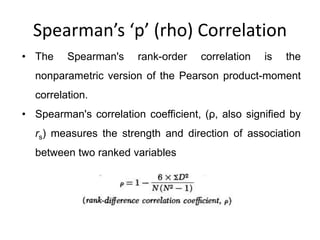

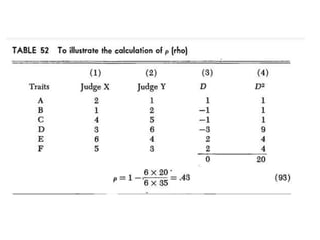

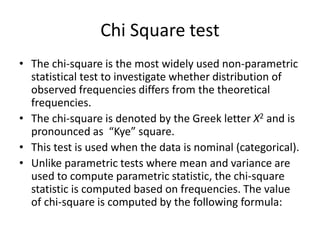

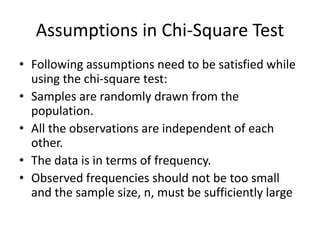

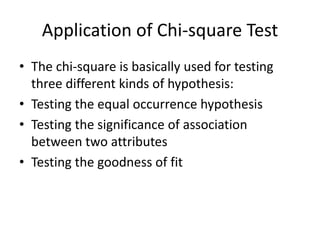

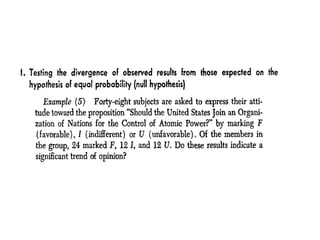

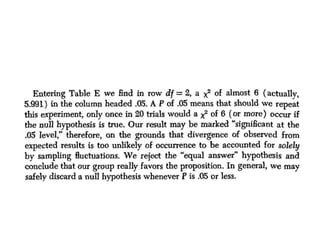

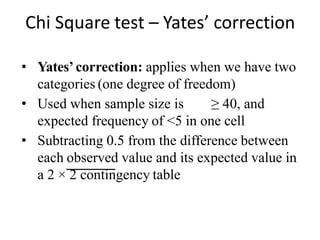

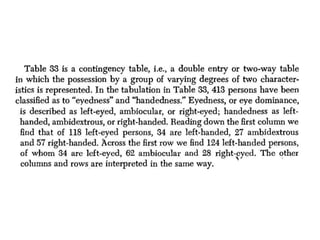

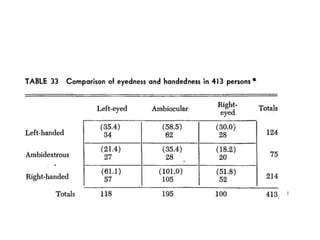

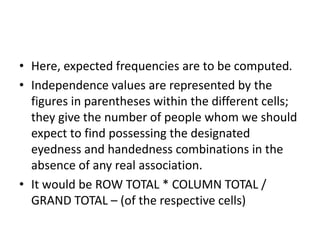

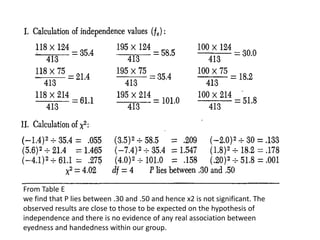

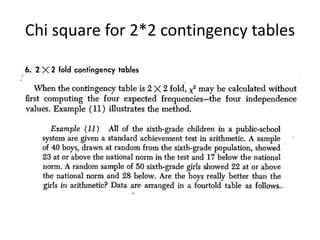

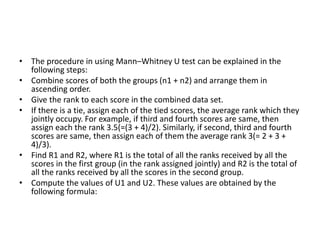

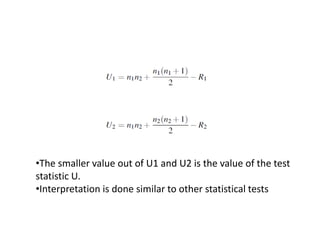

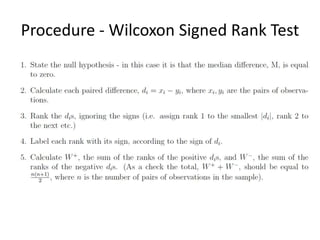

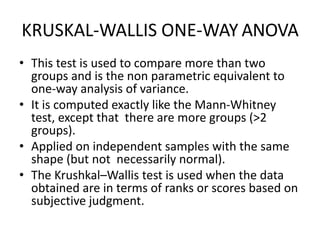

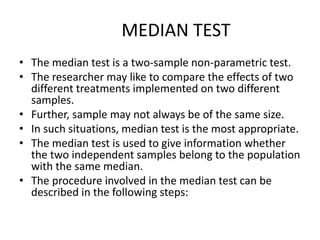

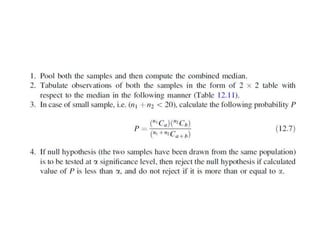

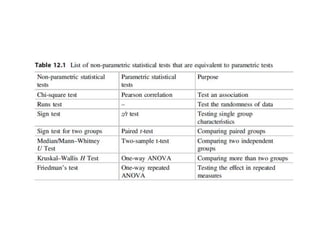

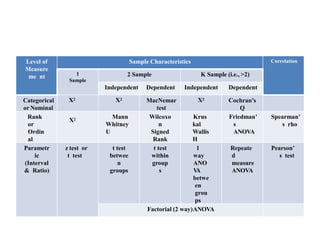

This document covers non-parametric tests. It begins by explaining that non-parametric tests do not assume a specific population distribution and make few assumptions about the population. It then discusses tests for normality, such as the Kolmogorov-Smirnov test and the Shapiro-Wilk test. Commonly used non-parametric tests, including Spearman's rank correlation, the Mann-Whitney U test, and the Kruskal-Wallis H test, are explained. The chi-square test and its assumptions are also covered in detail. Advantages of non-parametric tests include fewer assumptions and applicability to small sample sizes. A disadvantage is that they are generally less powerful than parametric tests when the parametric assumptions hold.
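
Spearman's rank correlation, the Mann-Whitney U test, and the Kruskal-Wallis H test all work on ranks rather than raw values, which is why they need no distributional assumption. The following is a minimal sketch of the three test statistics in plain Python, not the slides' own material. The function names are illustrative, the Kruskal-Wallis version omits the tie correction, and p-values are left out; in practice a statistics library would normally be used.

```python
from itertools import chain
from statistics import mean


def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rank correlation: Pearson correlation of the ranks.

    Assumes neither input is constant (otherwise the denominator is zero).
    """
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


def mann_whitney_u(a, b):
    """Mann-Whitney U statistic for sample a; U_a + U_b = len(a) * len(b)."""
    ranks = average_ranks(list(a) + list(b))
    rank_sum_a = sum(ranks[: len(a)])
    return rank_sum_a - len(a) * (len(a) + 1) / 2


def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H statistic, without the correction for ties."""
    pooled = list(chain.from_iterable(groups))
    ranks = average_ranks(pooled)
    n = len(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start : start + len(g)])
        total += rank_sum * rank_sum / len(g)
        start += len(g)
    return 12 / (n * (n + 1)) * total - 3 * (n + 1)


if __name__ == "__main__":
    # Made-up illustrative data: two fully separated samples.
    print(mann_whitney_u([1, 2, 3], [4, 5, 6]))  # 0.0
    print(mann_whitney_u([4, 5, 6], [1, 2, 3]))  # 9.0
```

A U of 0 for one sample (and the maximum, 3 × 3 = 9, for the other) indicates that every value in the first sample lies below every value in the second. Turning any of these statistics into a p-value requires the relevant reference distribution, which this sketch does not cover.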