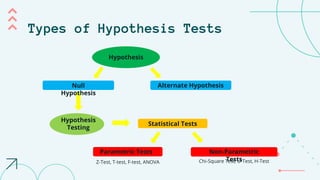

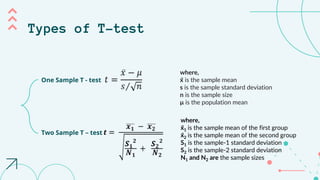

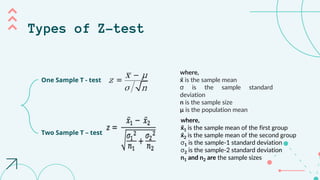

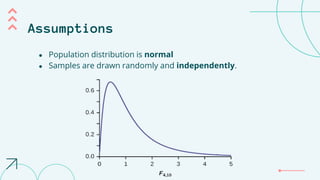

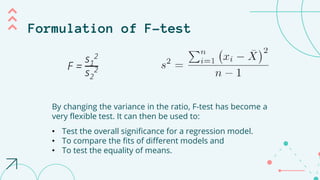

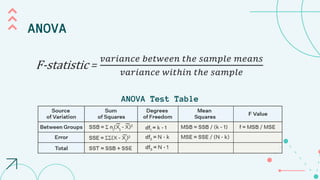

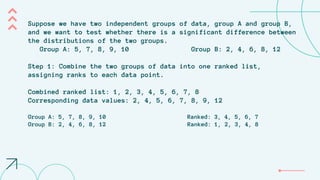

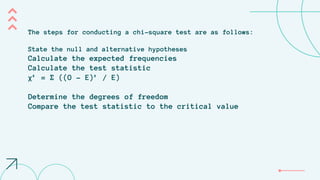

This document provides an overview of parametric and non-parametric hypothesis tests. It defines parametric tests as those that assume an underlying normal distribution, and lists common parametric tests like the z-test, t-test, F-test, and ANOVA. Non-parametric tests make no distributional assumptions and common examples discussed include the Mann-Whitney U test, chi-square test, and Kruskal-Wallis test. The document provides details on assumptions and procedures for conducting each of these important statistical hypothesis tests.

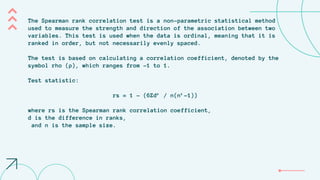

![The Kruskal-Wallis test is a nonparametric test used to compare the

medians of three or more independent groups. It is often used as an

alternative to the one-way analysis of variance (ANOVA) when the

assumption of normality is violated, or when the data is ordinal or

categorical.

H = [(12 / (n(n+1))) * Σ(Rj - (n+1)/2)²] - 3(n+1)

where H is the Kruskal-Wallis test statistic,

n is the total number of observations across all groups,

Rj is the sum of the ranks in group j,

and Σ is the sum of all the groups.](https://image.slidesharecdn.com/marketingresearchfinal-240204082423-886801f4/85/Marketing-Research-Hypothesis-Testing-pptx-37-320.jpg)

![We first rank the data:

Rank: 30, 32, 33, 35, 36, 37, 38, 40, 40, 42, 42, 43, 45, 45, 47, 50

H = [(12 / (n(n+1))) * Σ(Rj - (n+1)/2)²] - 3(n+1)

We then calculate the test statistic:

H = [(12 / (16(16+1))) * Σ(Rj - (16+1)/2)²] - 3(16+1)

= [(12 / 272) * ((10.5-8)² + (14.5-8)² + (26.5-8)² + (21.5-8)²)] - 51

= 10.55

Degrees of freedom = number of groups - 1 = 4 - 1 = 3](https://image.slidesharecdn.com/marketingresearchfinal-240204082423-886801f4/85/Marketing-Research-Hypothesis-Testing-pptx-39-320.jpg)