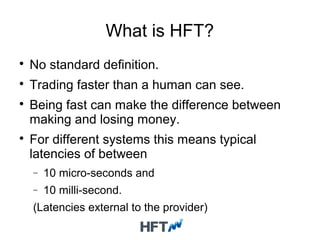

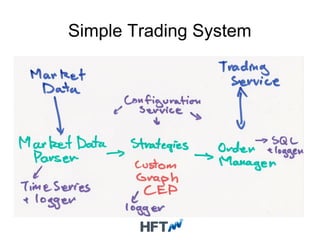

This document discusses high-frequency trading (HFT) systems, their requirements, and the technologies used to build them, including:

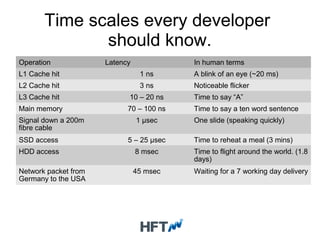

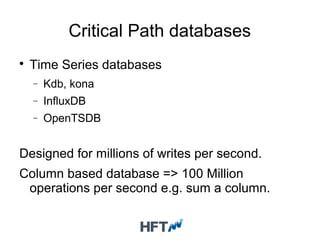

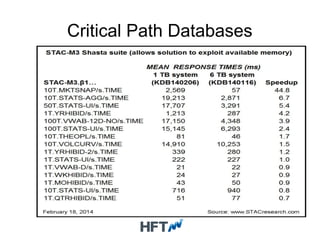

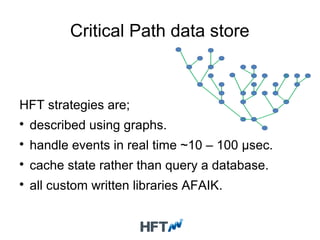

- HFT systems require databases with microsecond-level latency and event-driven processing, so that work happens as soon as data arrives rather than on a polling schedule.
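
To make "event-driven processing" concrete, here is a minimal sketch using only the JDK. The names `PriceUpdate` and `EventLoopSketch` are hypothetical and do not come from the slides or from any OpenHFT library. The sketch only shows the shape of the idea: handlers run as each event arrives, instead of a loop that repeatedly queries a database for changes.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.function.Consumer;

// Hypothetical event type: a price update for one instrument.
final class PriceUpdate {
    final String symbol;
    final long priceTicks;

    PriceUpdate(String symbol, long priceTicks) {
        this.symbol = symbol;
        this.priceTicks = priceTicks;
    }
}

// Single-threaded event loop: subscribers are invoked for every event, in arrival order.
final class EventLoopSketch {
    private final Queue<PriceUpdate> inbox = new ArrayDeque<>();
    private final List<Consumer<PriceUpdate>> handlers = new ArrayList<>();

    void subscribe(Consumer<PriceUpdate> handler) {
        handlers.add(handler);
    }

    void publish(PriceUpdate event) {
        inbox.add(event);
    }

    // Drains all pending events and returns how many were processed.
    int drain() {
        int processed = 0;
        PriceUpdate event;
        while ((event = inbox.poll()) != null) {
            for (Consumer<PriceUpdate> handler : handlers) {
                handler.accept(event);
            }
            processed++;
        }
        return processed;
    }

    public static void main(String[] args) {
        EventLoopSketch loop = new EventLoopSketch();
        loop.subscribe(e -> System.out.println(e.symbol + " @ " + e.priceTicks));
        loop.publish(new PriceUpdate("ABC", 10_050));
        loop.publish(new PriceUpdate("XYZ", 2_310));
        loop.drain();
    }
}
```

A production system would typically replace the `ArrayDeque` with a pre-allocated, lock-free structure and avoid allocating an object per event. Those optimizations are left out to keep the control flow visible.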

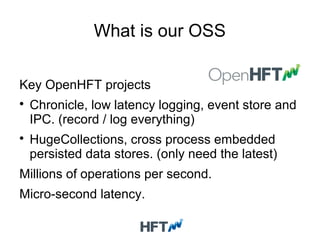

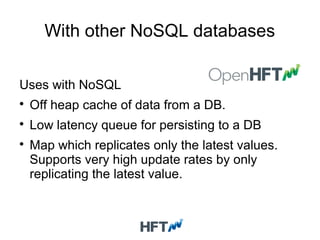

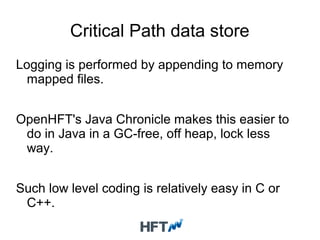

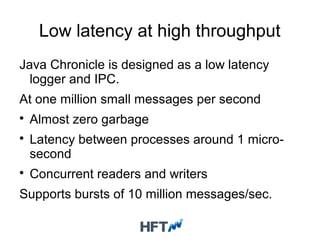

- OpenHFT provides low-latency logging and data storage technologies for HFT systems, such as Chronicle and HugeCollections.

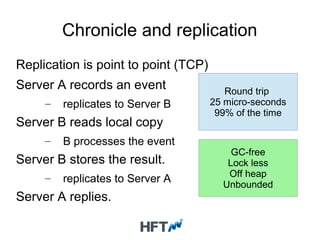

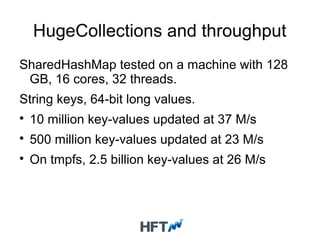

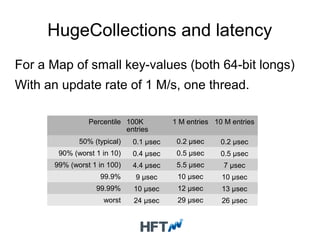

- Chronicle provides microsecond-latency logging and replication between processes. HugeCollections provides high-throughput concurrent key-value storage with microsecond-level latencies.

- These technologies are useful for critical data in HFT systems where traditional databases cannot meet the latency and throughput requirements.
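
One general technique for low-latency persisted logging is to append records to a memory-mapped file, so that a write is a memory copy rather than a system call per message. The sketch below uses only the JDK's `java.nio` API. It is not the Chronicle API and does not claim to describe Chronicle's internals; the class name `MappedLogSketch` and the length-prefixed record layout are assumptions made for illustration.

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

// Hypothetical append-only log backed by a memory-mapped file (not thread-safe).
final class MappedLogSketch {
    private final MappedByteBuffer buffer;

    MappedLogSketch(Path file, int capacityBytes) throws IOException {
        try (FileChannel channel = FileChannel.open(file,
                StandardOpenOption.CREATE, StandardOpenOption.READ, StandardOpenOption.WRITE)) {
            // The mapping remains valid after the channel is closed.
            buffer = channel.map(FileChannel.MapMode.READ_WRITE, 0, capacityBytes);
        }
    }

    // Appends one record as [4-byte length][UTF-8 bytes] and returns its start offset.
    // Throws BufferOverflowException if the mapped region is full.
    int append(String message) {
        byte[] bytes = message.getBytes(StandardCharsets.UTF_8);
        int start = buffer.position();
        buffer.putInt(bytes.length);
        buffer.put(bytes);
        return start;
    }

    // Reads the record at the given offset using absolute gets,
    // so the write position is left untouched.
    String read(int offset) {
        int length = buffer.getInt(offset);
        byte[] bytes = new byte[length];
        for (int i = 0; i < length; i++) {
            bytes[i] = buffer.get(offset + Integer.BYTES + i);
        }
        return new String(bytes, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("mapped-log", ".dat");
        MappedLogSketch log = new MappedLogSketch(file, 4096);
        int first = log.append("order 1 accepted");
        int second = log.append("order 1 filled");
        System.out.println(log.read(first));
        System.out.println(log.read(second));
    }
}
```

Because the file is mapped into memory, a second process that maps the same file could in principle observe the appended records. Doing that safely needs memory-ordering guarantees and a shared write index, which this sketch deliberately omits.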