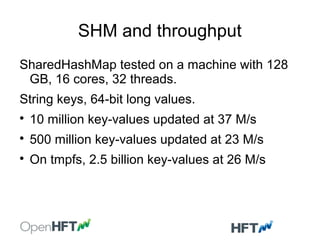

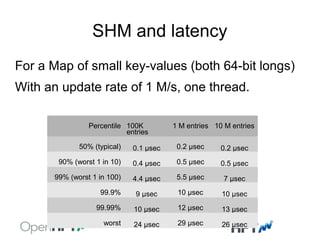

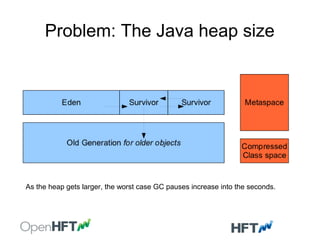

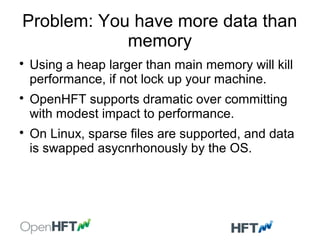

This document discusses advanced inter-process communication (IPC) techniques using off-heap memory in Java. It introduces OpenHFT, a company that develops low-latency software, and their open-source projects Chronicle and OpenHFT Collections that provide high-performance IPC and embedded data stores. It then discusses problems with on-heap memory and solutions using off-heap memory mapped files for sharing data across processes at microsecond latency levels and high throughput.

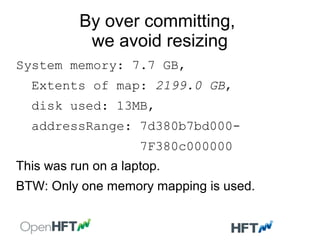

![Over commiting your size.

File file = File.createTempFile("over-sized", "deleteme");

SharedHashMap<String, String> map = new

SharedHashMapBuilder()

.entrySize(1024 * 1024)

.entries(1024 * 1024)

.create(file, String.class, String.class);

for (int i = 0; i < 1000; i++) {

char[] chars = new char[i];

Arrays.fill(chars, '+');

map.put("key-" + i, new String(chars));

}](https://image.slidesharecdn.com/advancedoffheapipc-140604234946-phpapp01/85/Advanced-off-heap-ipc-12-320.jpg)