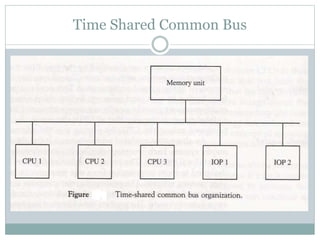

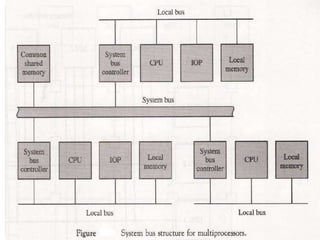

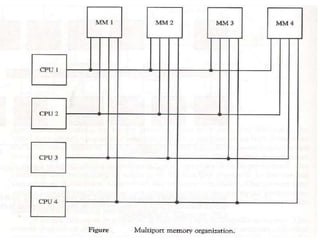

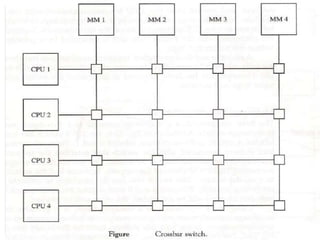

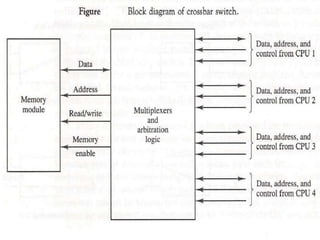

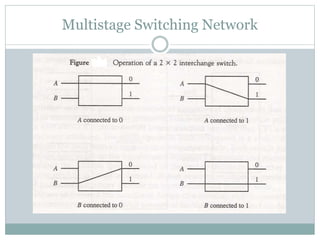

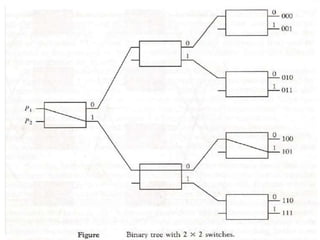

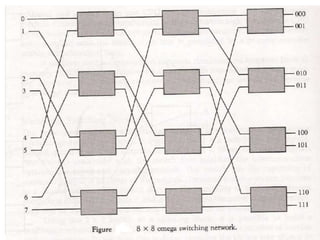

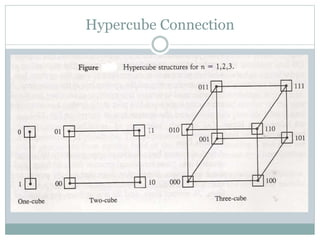

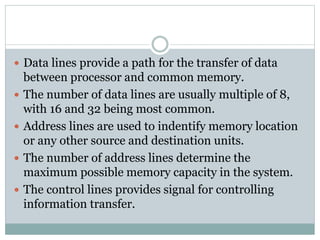

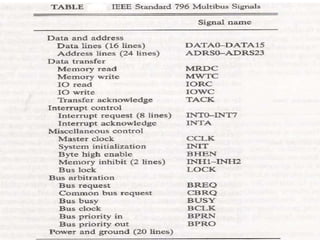

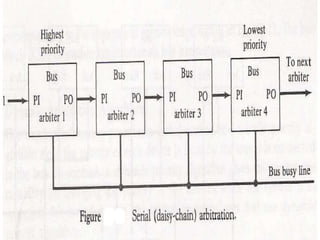

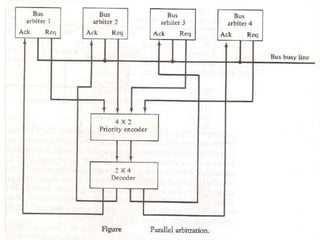

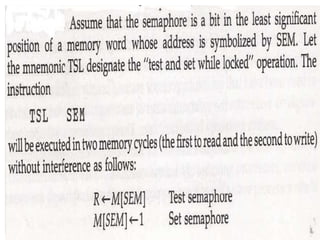

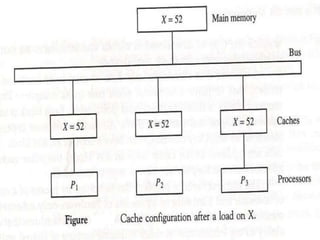

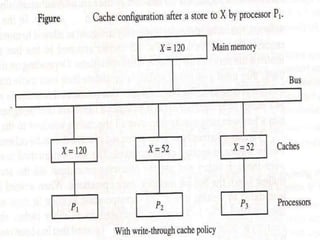

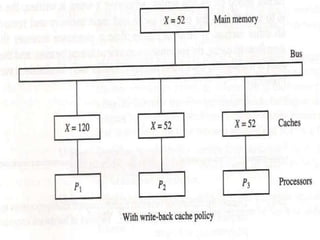

This document discusses multiprocessor systems: their interconnection structures, interprocessor arbitration, interprocessor communication and synchronization, and cache coherence. A multiprocessor system connects two or more CPUs to shared memory and I/O, which improves reliability and enables parallel processing. The processors are linked through interconnection structures such as buses, switches, and hypercubes. Arbitration logic resolves contention when several processors request a shared resource, such as the bus, at the same time. Synchronization techniques such as semaphores enforce orderly access to shared data. Cache coherence protocols keep the copies of data held in processor caches consistent with one another and with main memory.
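To make the synchronization point concrete, here is a minimal sketch of a binary semaphore guarding a shared counter. It is an illustration only: Python threads stand in for processors, and the names `run_workers`, `num_workers`, and `increments` are hypothetical, not taken from the slides. Real multiprocessors typically build semaphores on hardware primitives such as a test-and-set instruction rather than on a library object.

```python
import threading


def run_workers(num_workers=4, increments=1000):
    """Increment a shared counter from several threads under a binary semaphore."""
    counter = {"value": 0}          # shared data, analogous to a word in shared memory
    sem = threading.Semaphore(1)    # binary semaphore: at most one holder at a time

    def worker():
        for _ in range(increments):
            sem.acquire()           # enter the critical section
            try:
                counter["value"] += 1   # read-modify-write on the shared data
            finally:
                sem.release()       # leave the critical section

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter["value"]


total = run_workers()
print(total)
```

Because every increment happens while the semaphore is held, no update is lost and the final count equals `num_workers * increments`. Without the semaphore, two workers could read the same old value and overwrite each other's update.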