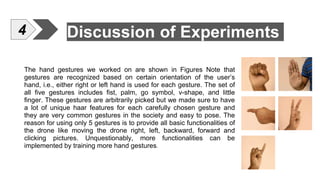

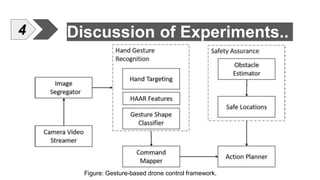

This document describes a study on using hand gestures to control drones. The proposed framework combines skin detection, hand posture comparison, and principal component analysis (PCA) to recognize gestures under varying lighting conditions and backgrounds. A set of five gestures (fist, palm, go symbol, V-shape, and little finger) was used to control basic drone functions: moving up, down, left, and right, and taking pictures. In the experiments, gesture recognition accuracy was highest when the drone was closer to the operator. The goal was to enable accurate gesture control of low-cost drones using software-based image recognition.
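The PCA step of such a pipeline can be illustrated with a minimal sketch. It is not the study's implementation, and everything in it is an assumption made for illustration: the function names, the nearest-neighbour matching in PCA space, the 16x16 synthetic "hand masks" standing in for skin-segmented images, and in particular the gesture-to-command mapping, since the summary lists the gestures and the commands but does not say which gesture triggers which command. Skin detection is omitted here.

```python
import numpy as np

# Hypothetical mapping: the source names the five gestures and the five
# commands but does not pair them, so this pairing is an assumption.
GESTURE_TO_COMMAND = {
    "fist": "move_up",
    "palm": "move_down",
    "go_symbol": "move_left",
    "v_shape": "move_right",
    "little_finger": "take_picture",
}


def fit_pca(samples, n_components):
    """Return the mean vector and the top principal axes of flattened images."""
    mean = samples.mean(axis=0)
    centered = samples - mean
    # Rows of vt are the principal axes, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean, vt[:n_components]


def project(samples, mean, components):
    """Project flattened images onto the principal axes."""
    return (samples - mean) @ components.T


def classify(image, mean, components, train_proj, train_labels):
    """Label an image with the gesture of its nearest training sample in PCA space."""
    query = project(image.reshape(1, -1), mean, components)
    distances = np.linalg.norm(train_proj - query, axis=1)
    return train_labels[int(np.argmin(distances))]


# Synthetic stand-ins for segmented hand images (assumption: 16x16 pixels,
# one random template per gesture plus small Gaussian noise).
rng = np.random.default_rng(0)
labels = list(GESTURE_TO_COMMAND)
templates = rng.random((len(labels), 16 * 16))
train = np.vstack(
    [t + 0.05 * rng.standard_normal((10, t.size)) for t in templates]
)
train_labels = [name for name in labels for _ in range(10)]

mean, components = fit_pca(train, n_components=4)
train_proj = project(train, mean, components)

# A noisy view of the fourth template (the "v_shape" stand-in).
query = templates[3] + 0.05 * rng.standard_normal(templates[3].size)
gesture = classify(query, mean, components, train_proj, train_labels)
command = GESTURE_TO_COMMAND[gesture]
```

In a real system the synthetic templates would be replaced by segmented camera frames, and the resulting command string would be passed to whatever control interface the drone exposes.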