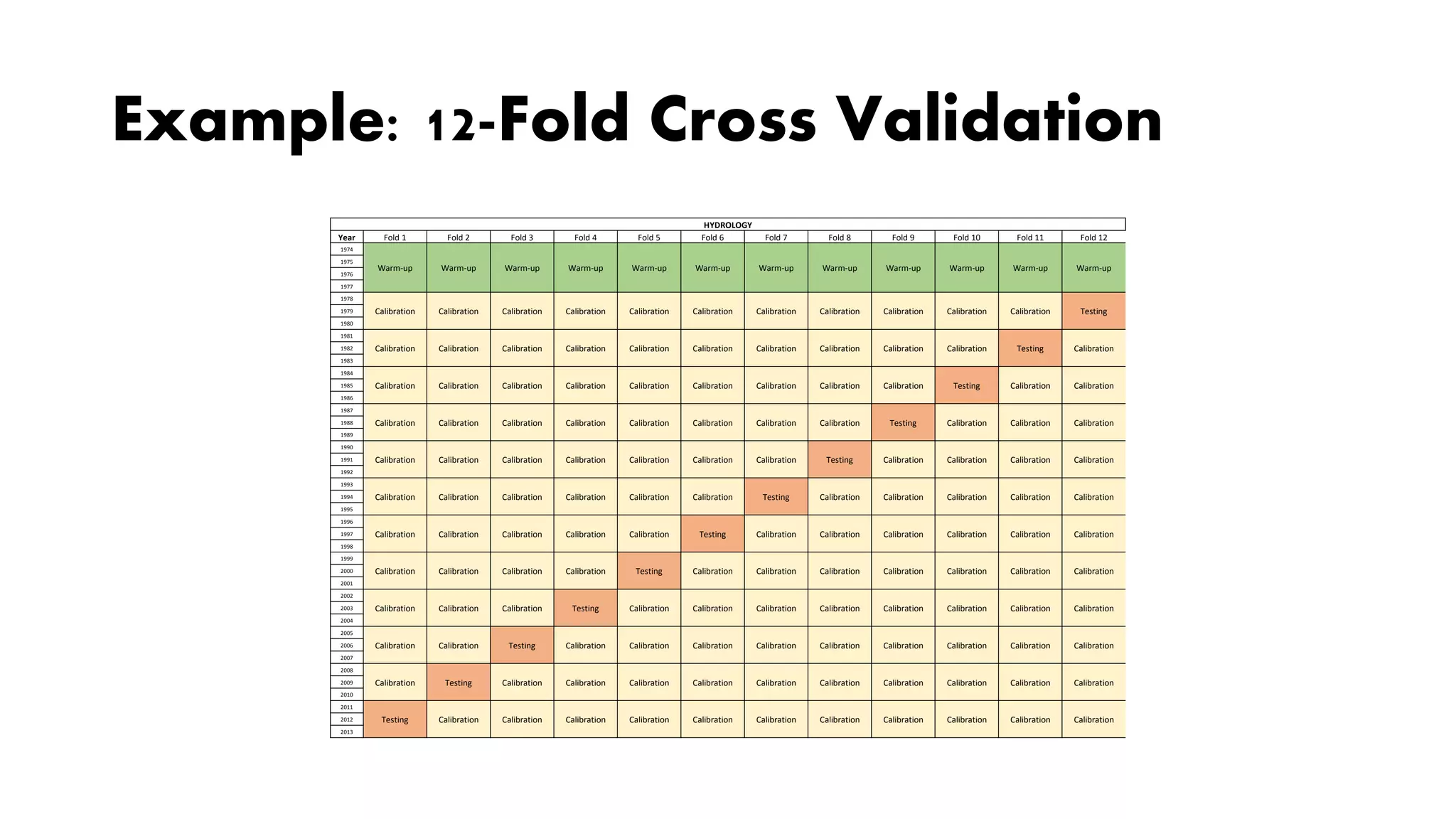

This document gives an overview of calibration, validation, and uncertainty analysis for environmental and hydrological models. Calibration is the search for parameter sets that minimize the error between model outputs and observations while avoiding overfitting. Validation assesses how well the calibrated model performs on data that were not used during calibration. Uncertainty analysis quantifies the uncertainty in model predictions. The document also discusses sources of error and the challenges of applying Bayesian methods, chiefly errors that are not normally distributed and the high computational cost. It also covers simpler alternatives such as GLUE (Generalized Likelihood Uncertainty Estimation).
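The following is a minimal sketch of how these ideas fit together: GLUE-style Monte Carlo calibration on one period, validation on a separate period, and likelihood-weighted prediction bounds. Every specific choice here is an illustrative assumption and not taken from the source. These choices include the hypothetical one-parameter linear-reservoir model (`linear_reservoir`), the synthetic rainfall and "observations", the uniform prior on the recession parameter `k`, Nash-Sutcliffe efficiency (NSE) as the informal likelihood measure, and the behavioural threshold of 0.7.

```python
import random

def linear_reservoir(rain, k, s0=0.0):
    """Hypothetical toy rainfall-runoff model: each step releases a fraction k of storage."""
    storage, flows = s0, []
    for p in rain:
        storage += p
        q = k * storage
        storage -= q
        flows.append(q)
    return flows

def nse(sim, obs):
    """Nash-Sutcliffe efficiency: 1 is a perfect fit, 0 is no better than the observed mean."""
    mean_obs = sum(obs) / len(obs)
    sse = sum((s - o) ** 2 for s, o in zip(sim, obs))
    var = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - sse / var

def glue_calibrate(rain, obs, period, n_samples=2000, threshold=0.7, seed=0):
    """Sample k from an assumed uniform prior; keep 'behavioural' sets with NSE above threshold."""
    rng = random.Random(seed)
    behavioural = []
    for _ in range(n_samples):
        k = rng.uniform(0.01, 0.99)
        sim = linear_reservoir(rain, k)
        score = nse(sim[period], obs[period])  # score only the calibration period
        if score > threshold:
            behavioural.append((k, score))
    return behavioural

def weighted_quantile(values, weights, q):
    """Smallest value whose cumulative normalised weight reaches q."""
    pairs = sorted(zip(values, weights))
    total = sum(weights)
    cum = 0.0
    for v, w in pairs:
        cum += w
        if cum >= q * total:
            return v
    return pairs[-1][0]

def glue_bounds(rain, behavioural, lo=0.05, hi=0.95):
    """Likelihood-weighted 5-95% prediction bounds at every time step."""
    sims = [linear_reservoir(rain, k) for k, _ in behavioural]
    weights = [score for _, score in behavioural]  # assumption: NSE used directly as the weight
    bounds = []
    for t in range(len(rain)):
        values = [sim[t] for sim in sims]
        bounds.append((weighted_quantile(values, weights, lo),
                       weighted_quantile(values, weights, hi)))
    return bounds

# Synthetic "observations": a known k plus 10% multiplicative noise (illustrative only).
data_rng = random.Random(42)
rain = [data_rng.expovariate(0.1) if data_rng.random() < 0.3 else 0.0
        for _ in range(200)]
TRUE_K = 0.3
obs = [q * (1 + data_rng.gauss(0, 0.1)) for q in linear_reservoir(rain, TRUE_K)]

# Split-sample test: calibrate on the first half, validate on the second half.
calib, valid = slice(0, 100), slice(100, 200)
behavioural = glue_calibrate(rain, obs, calib)
best_k, best_score = max(behavioural, key=lambda pair: pair[1])
valid_score = nse(linear_reservoir(rain, best_k)[valid], obs[valid])
bounds = glue_bounds(rain, behavioural)
print(f"{len(behavioural)} behavioural sets; best k={best_k:.3f}, "
      f"calibration NSE={best_score:.3f}, validation NSE={valid_score:.3f}")
```

In this sketch, the model is run over the whole record so that storage carries over into the validation period, but only the calibration slice is used to accept or reject parameter sets. Both the likelihood measure and the threshold are subjective choices. Changing either one alters the number of behavioural sets and the width of the bounds, so both should be reported alongside the results.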