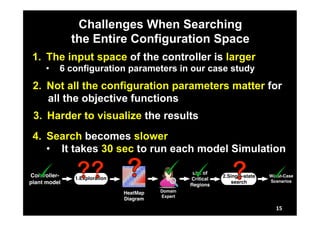

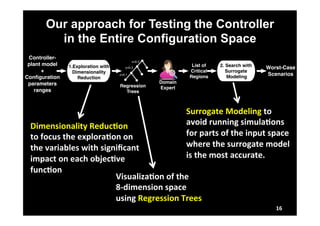

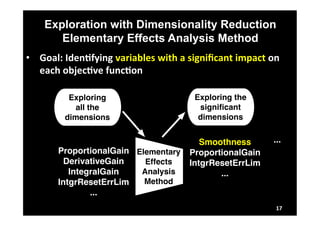

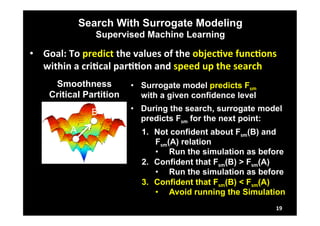

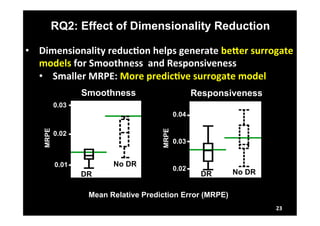

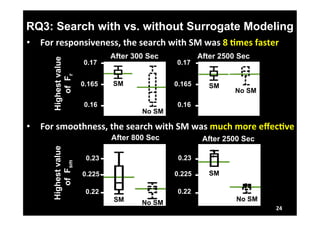

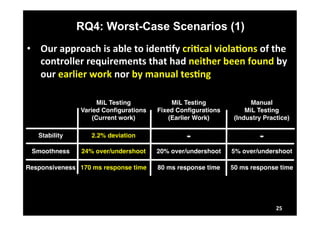

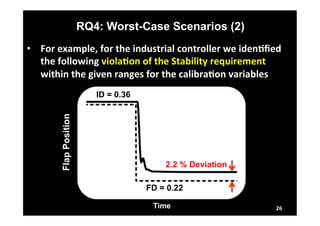

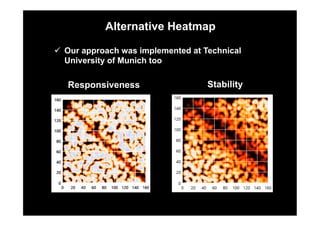

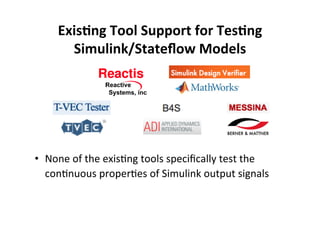

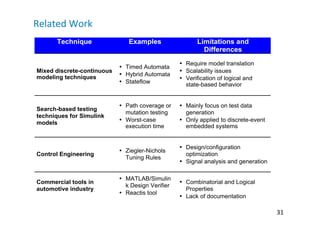

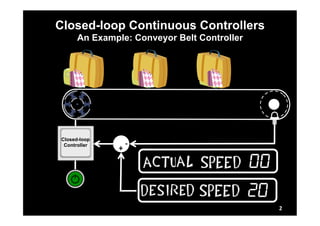

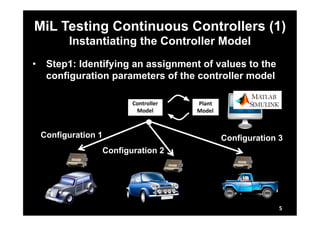

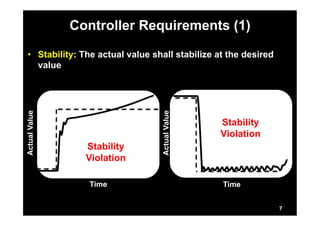

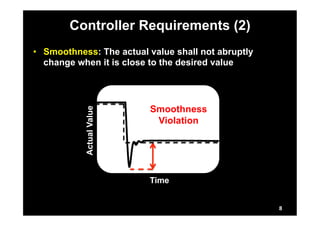

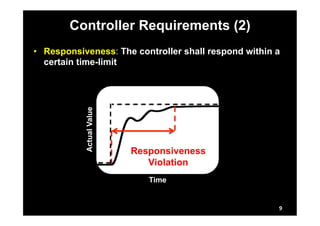

This document describes research on model-in-the-loop (MiL) testing of highly configurable continuous controllers. The researchers developed an approach that combines dimensionality reduction, surrogate modeling, and search-based techniques to test controllers efficiently across large configuration spaces, and applied it to an industrial conveyor belt controller case study. The evaluation showed that the technique found stability, smoothness, and responsiveness violations that previous approaches had missed, offering a scalable way to test continuous controller models over varied parameter configurations.
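As a rough illustration of how those pieces can fit together, the following Python sketch pairs a one-at-a-time sensitivity screen (loosely in the spirit of elementary-effects analysis) with a k-nearest-neighbour surrogate that pre-screens candidate configurations, so that only one expensive simulation runs per search iteration. Everything here is an assumption for illustration: `expensive_simulation` is a hypothetical stand-in for a MiL run, parameters are normalized to [0, 1], and k-NN is not necessarily the surrogate the researchers used.

```python
import math
import random


def expensive_simulation(config):
    """Hypothetical stand-in for one MiL simulation run.

    Maps a normalized configuration vector to a fitness where larger means
    worse controller behaviour. Parameter 3 is ignored and parameter 1 has
    only a tiny effect, so a good screen should drop both."""
    return math.sin(3 * config[0]) + (config[2] - 0.5) ** 2 + 0.01 * config[1]


def screen_parameters(f, dim, n_base=10, delta=0.1, keep=2, seed=0):
    """Dimensionality reduction by a one-at-a-time sensitivity screen, loosely
    in the spirit of elementary effects: keep the `keep` parameters with the
    largest mean absolute effect."""
    rng = random.Random(seed)
    effects = [0.0] * dim
    for _ in range(n_base):
        base = [rng.uniform(0.0, 1.0 - delta) for _ in range(dim)]
        y0 = f(base)
        for i in range(dim):
            moved = list(base)
            moved[i] += delta
            effects[i] += abs(f(moved) - y0) / delta / n_base
    ranked = sorted(range(dim), key=lambda i: effects[i], reverse=True)
    return sorted(ranked[:keep])


def knn_predict(archive, x, k=3):
    """Surrogate: mean fitness of the k nearest already-simulated points."""
    nearest = sorted(archive, key=lambda item: math.dist(item[0], x))[:k]
    return sum(fit for _, fit in nearest) / len(nearest)


def surrogate_search(f, dim, active, default=0.5, n_init=5, iters=20,
                     n_candidates=50, seed=1):
    """Maximize f over the `active` parameters (others fixed at `default`),
    running only one real simulation per iteration."""
    rng = random.Random(seed)

    def full_config(sub):
        config = [default] * dim
        for i, v in zip(active, sub):
            config[i] = v
        return config

    archive = []
    for _ in range(n_init):
        sub = [rng.random() for _ in active]
        archive.append((sub, f(full_config(sub))))
    for _ in range(iters):
        best_sub = max(archive, key=lambda item: item[1])[0]
        # Propose neighbours of the current best; rank them with the surrogate.
        candidates = [[min(1.0, max(0.0, v + rng.gauss(0, 0.1))) for v in best_sub]
                      for _ in range(n_candidates)]
        chosen = max(candidates, key=lambda c: knn_predict(archive, c))
        archive.append((chosen, f(full_config(chosen))))  # one expensive run
    return max(archive, key=lambda item: item[1])
```

In this sketch, `screen_parameters(expensive_simulation, dim=4)` keeps parameters 0 and 2, and `surrogate_search(expensive_simulation, 4, [0, 2])` then varies only those two. Fitness is maximized because the aim of search-based testing is to expose the worst controller behaviour, not the best.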

![Our Earlier Work:

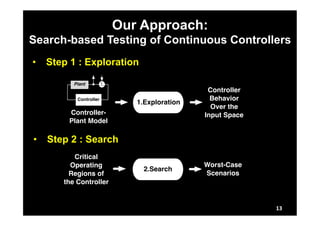

Search-based Testing of Continuous Controllers

with fixed values for the configuration parameters

1.Exploration 2.Single-state

ID FD

FD

ID

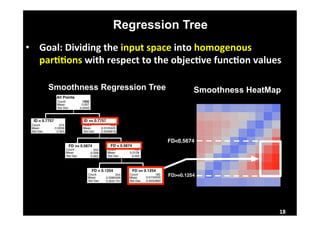

Smoothness HeatMap

Initial Desired (ID)

Final Desired (FD)

Smoothness Worst-case Scenario

Controller-plant

model

HeatMap

Diagram

Worst-Case

Scenarios

List of

Critical

Regions

Domain

Expert

search

Controller Input Space

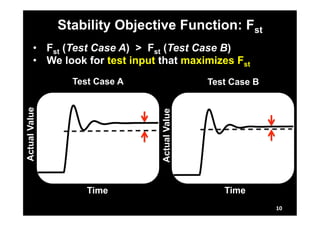

Fst

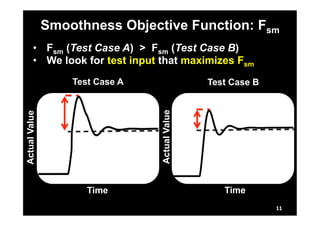

Fsm

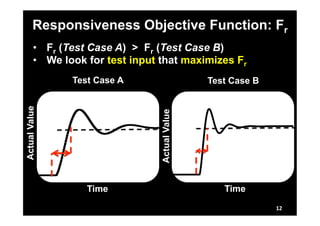

Fr

14

Published

in

[IST

Journal

2014,

SSBSE

2013]](https://image.slidesharecdn.com/reza-ase-140922102523-phpapp02/85/MiL-Testing-of-Highly-Configurable-Continuous-Controllers-15-320.jpg)
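The slide describes the earlier fixed-configuration workflow: an exploration step that builds a HeatMap over initial desired (ID) and final desired (FD) values, followed by a single-state search inside a critical region. The Python sketch below illustrates that workflow under explicit assumptions. The closed loop is a hypothetical PI controller driving a first-order plant, the desired value steps from ID to FD halfway through the run, and Fst, Fsm, and Fr use plausible definitions (output spread at the end of the run, largest deviation from FD after first reaching it, time to reach FD) that are not necessarily the exact ones from the published work. A simple hill climber stands in for the single-state search.

```python
import random
import statistics

T_END = 10.0  # hypothetical simulation length (seconds)


def simulate(initial_desired, final_desired, kp=1.0, ki=8.0, tau=0.5,
             t_end=T_END, dt=0.01):
    """Hypothetical PI controller + first-order plant, explicit Euler steps.
    The desired value steps from ID to FD at t_end / 2."""
    actual = initial_desired
    integral = initial_desired / ki  # start at equilibrium (u == actual)
    times, outputs = [], []
    for n in range(int(round(t_end / dt))):
        t = n * dt
        desired = initial_desired if t < t_end / 2 else final_desired
        error = desired - actual
        integral += error * dt
        u = kp * error + ki * integral
        actual += dt * (u - actual) / tau
        times.append(t)
        outputs.append(actual)
    return times, outputs


def objectives(times, outputs, final_desired, t_step, tol=0.02):
    """Return (Fst, Fsm, Fr) under assumed definitions; larger is worse."""
    after = [(t, y) for t, y in zip(times, outputs) if t >= t_step]
    # Fr: time from the step until the output first gets within tol of FD.
    reach = next((t for t, y in after if abs(y - final_desired) <= tol), None)
    f_r = (reach if reach is not None else times[-1]) - t_step
    # Fsm: largest deviation from FD once the output has first reached it.
    f_sm = 0.0 if reach is None else max(
        abs(y - final_desired) for t, y in after if t >= reach)
    # Fst: spread of the output over the last 10% of the run.
    f_st = statistics.pstdev(outputs[-max(2, len(outputs) // 10):])
    return f_st, f_sm, f_r


def fitness(point, objective_index):
    """Simulate one (ID, FD) test input and return one objective value."""
    initial_desired, final_desired = point
    times, outputs = simulate(initial_desired, final_desired)
    return objectives(times, outputs, final_desired, T_END / 2)[objective_index]


def explore(objective_index=1, cells=5, samples_per_cell=2, seed=0):
    """Exploration: random samples per (ID, FD) cell; keep the worst one.
    Each value is (objective, ID, FD), forming a coarse HeatMap."""
    rng = random.Random(seed)
    heatmap = {}
    for i in range(cells):
        for j in range(cells):
            samples = [((i + rng.random()) / cells, (j + rng.random()) / cells)
                       for _ in range(samples_per_cell)]
            heatmap[(i, j)] = max((fitness(p, objective_index), *p) for p in samples)
    return heatmap


def hill_climb(start, objective_index=1, iters=100, sigma=0.05, seed=1):
    """Single-state search: keep a perturbed (ID, FD) only if it is worse."""
    rng = random.Random(seed)
    best, best_fit = tuple(start), fitness(start, objective_index)
    for _ in range(iters):
        candidate = tuple(min(1.0, max(0.0, v + rng.gauss(0, sigma)))
                          for v in best)
        candidate_fit = fitness(candidate, objective_index)
        if candidate_fit > best_fit:
            best, best_fit = candidate, candidate_fit
    return best, best_fit
```

For example, `heatmap = explore()` followed by `hill_climb(max(heatmap.values())[1:])` starts the search from the worst sampled cell. Because this toy loop is linear, the smoothness violation roughly grows with |FD - ID|, so the search drifts toward the corners of the input space; a real controller-plant model would give a less regular HeatMap.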