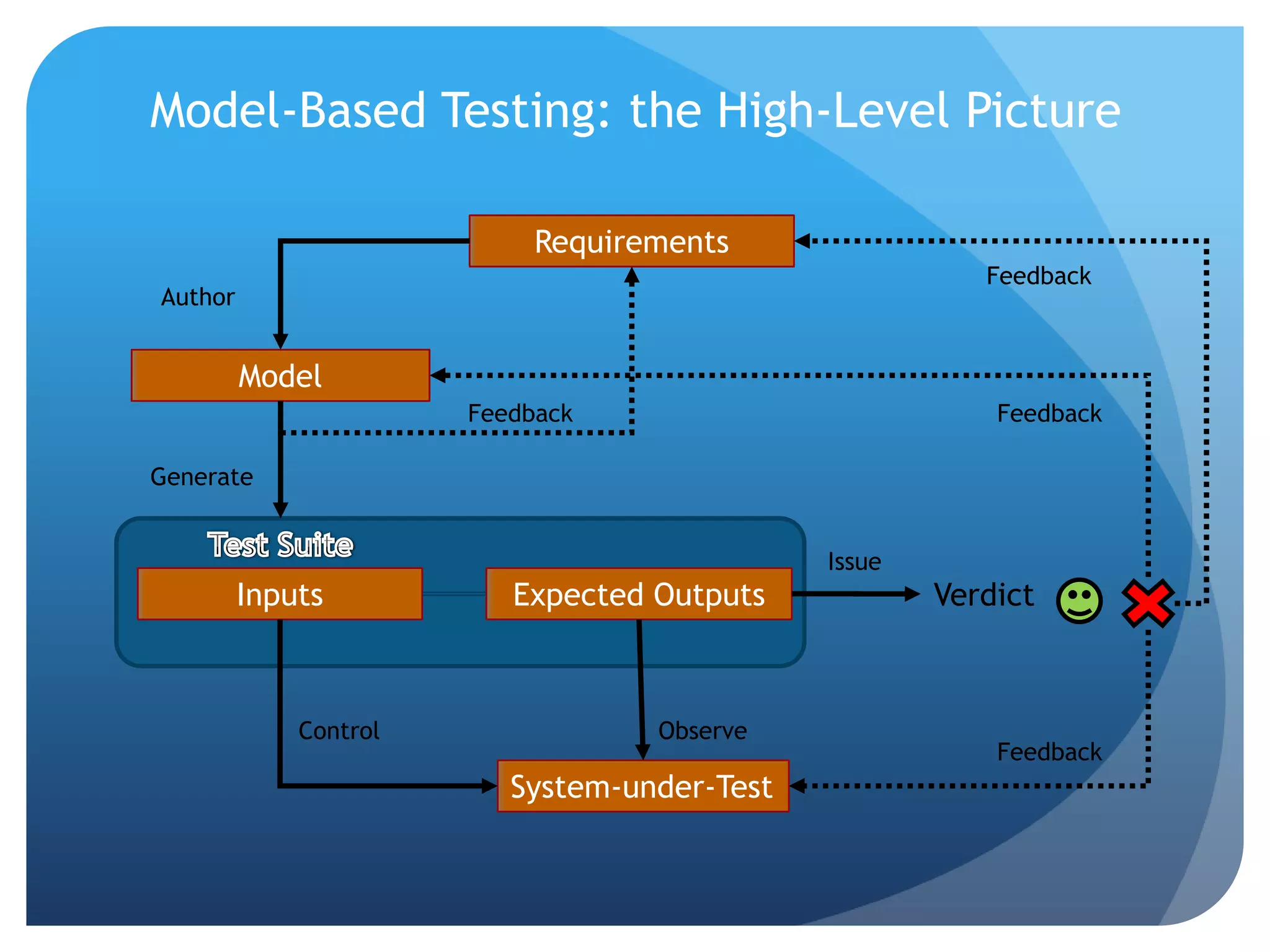

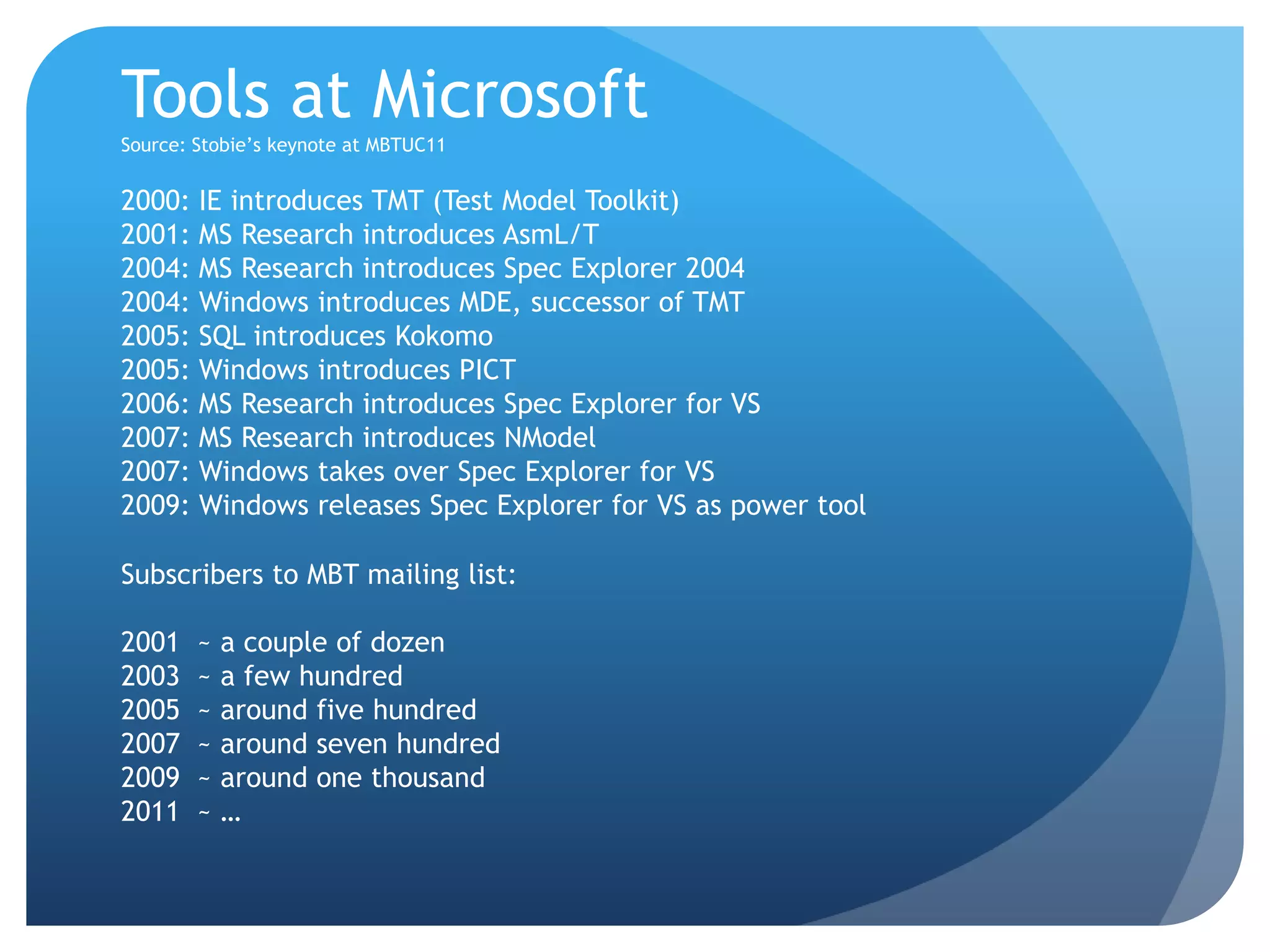

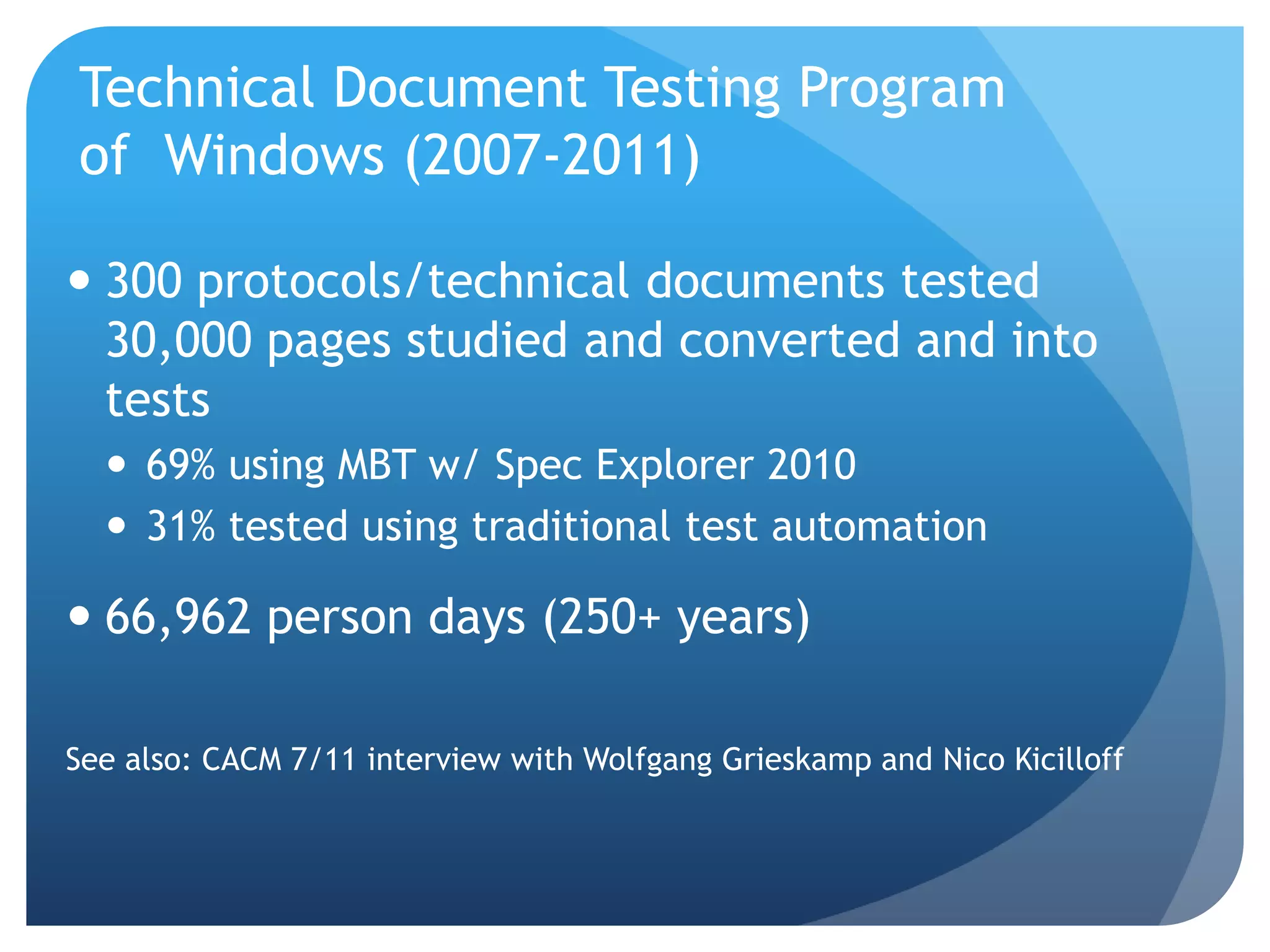

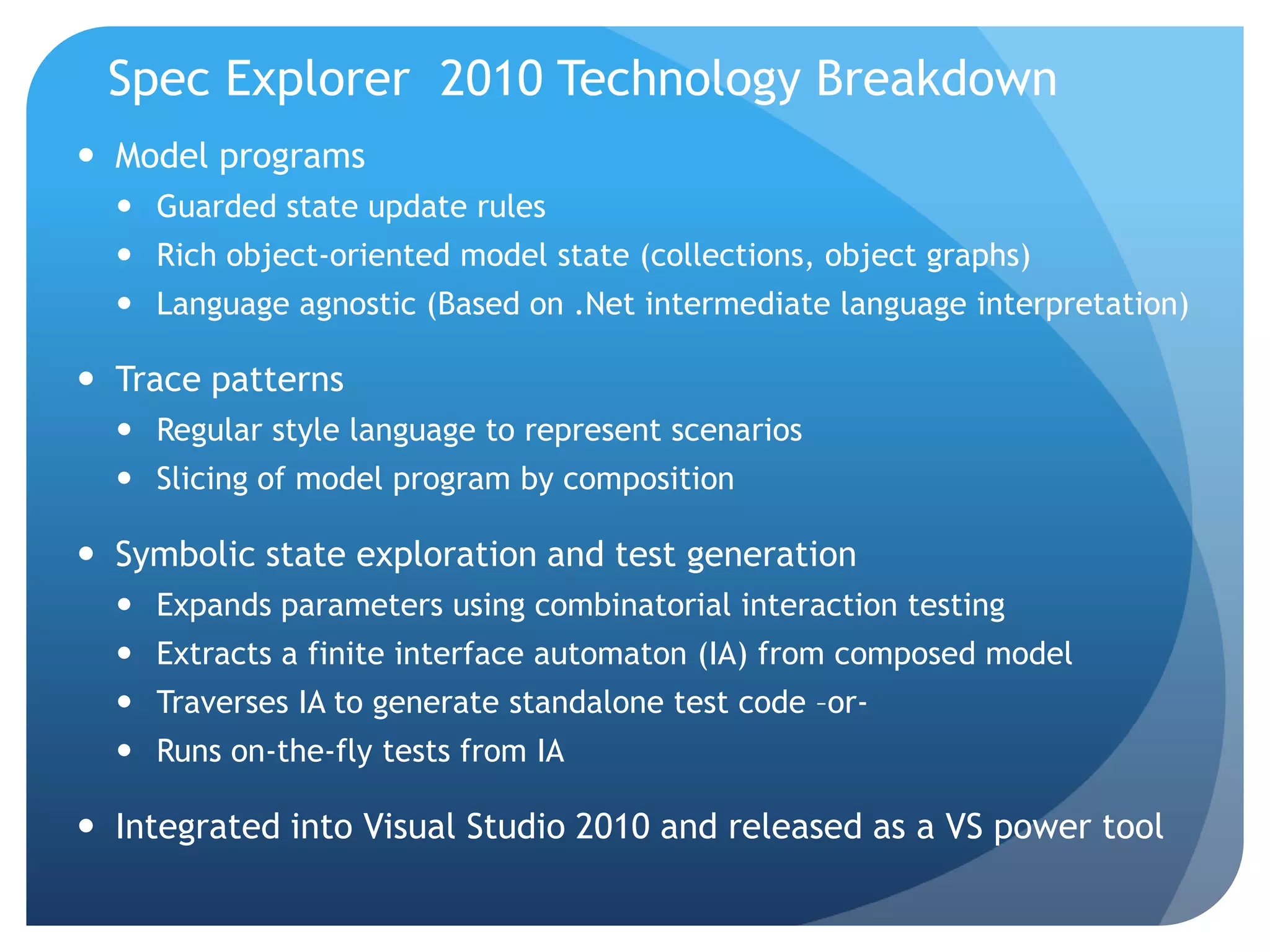

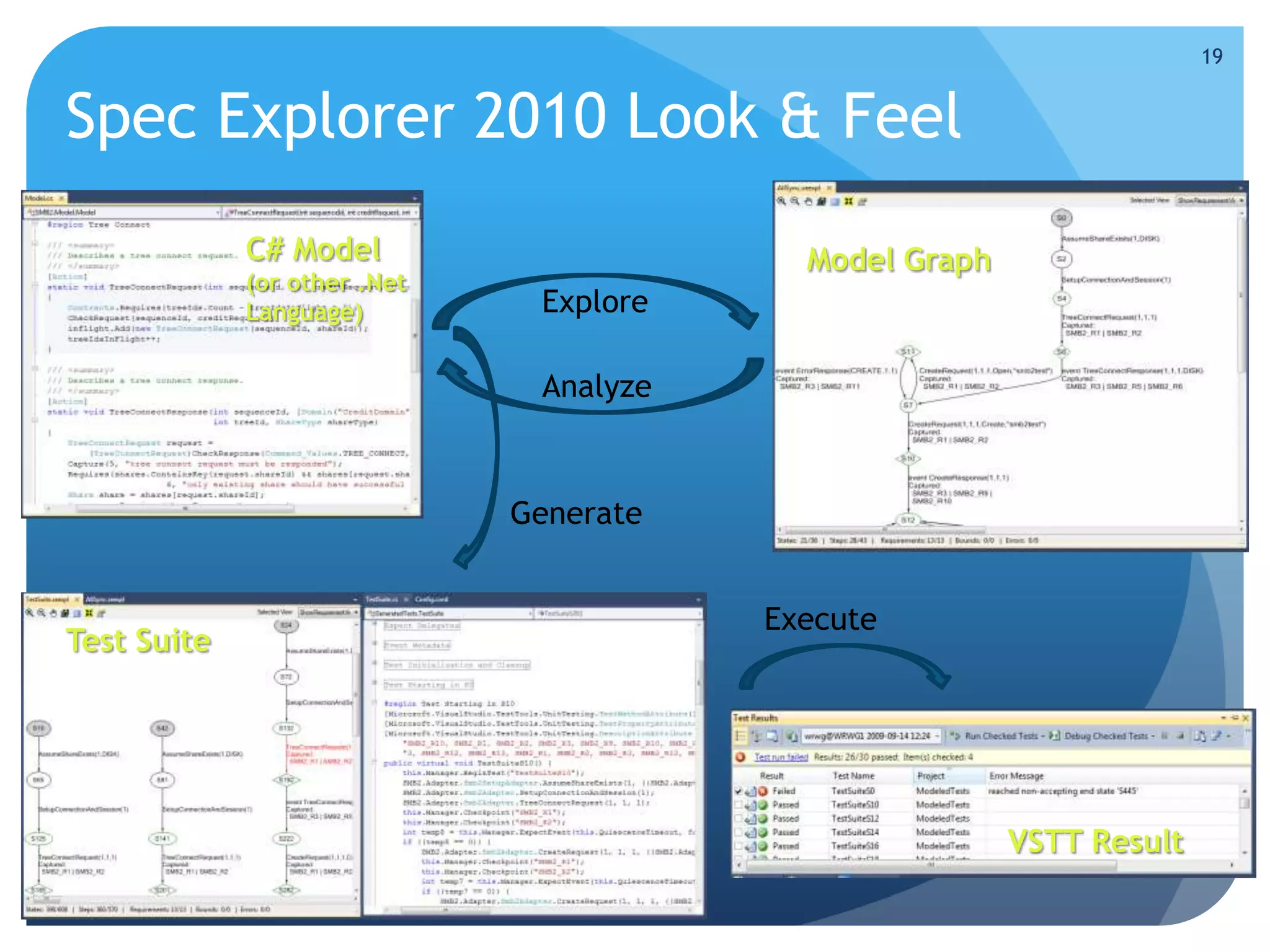

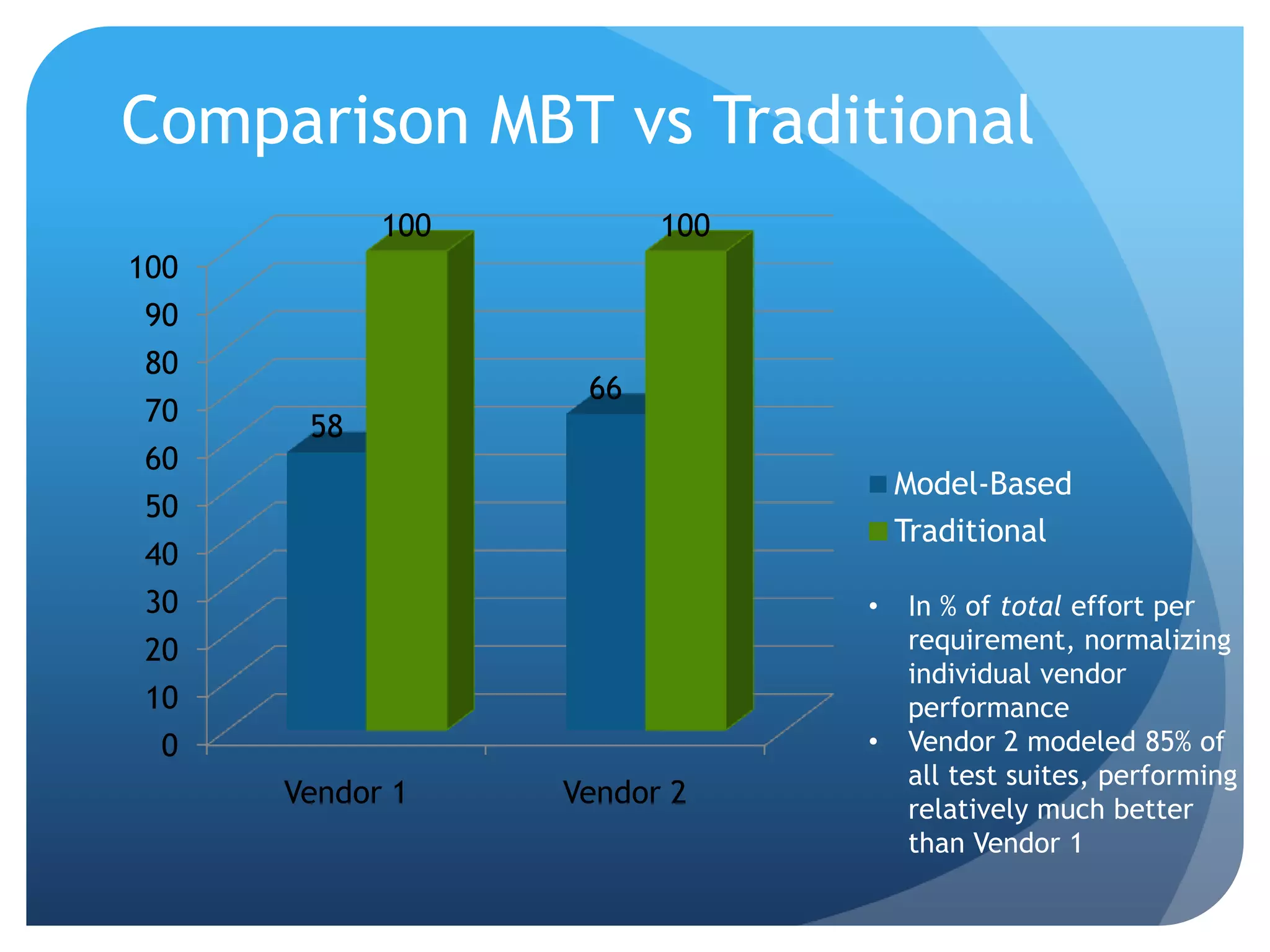

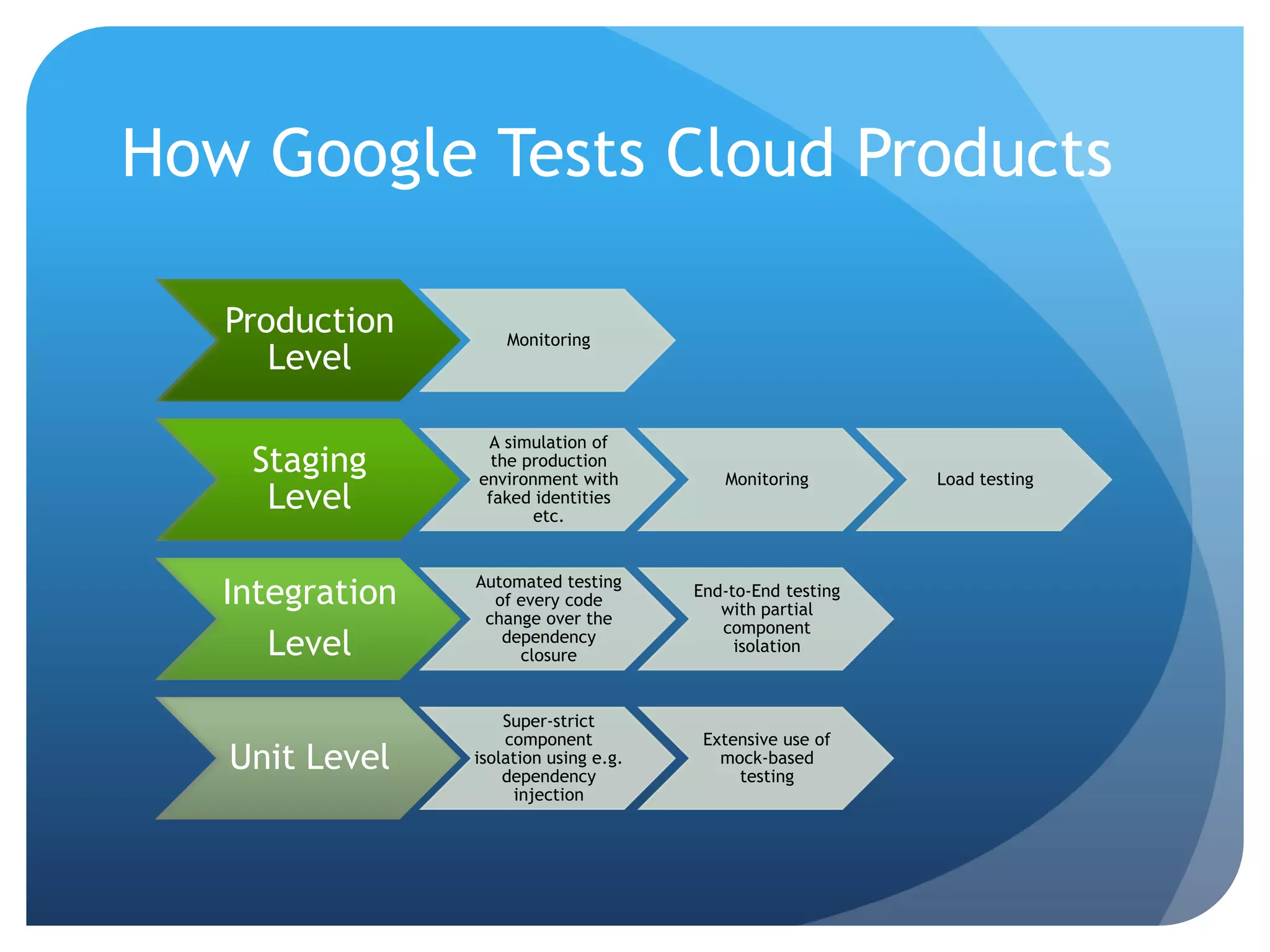

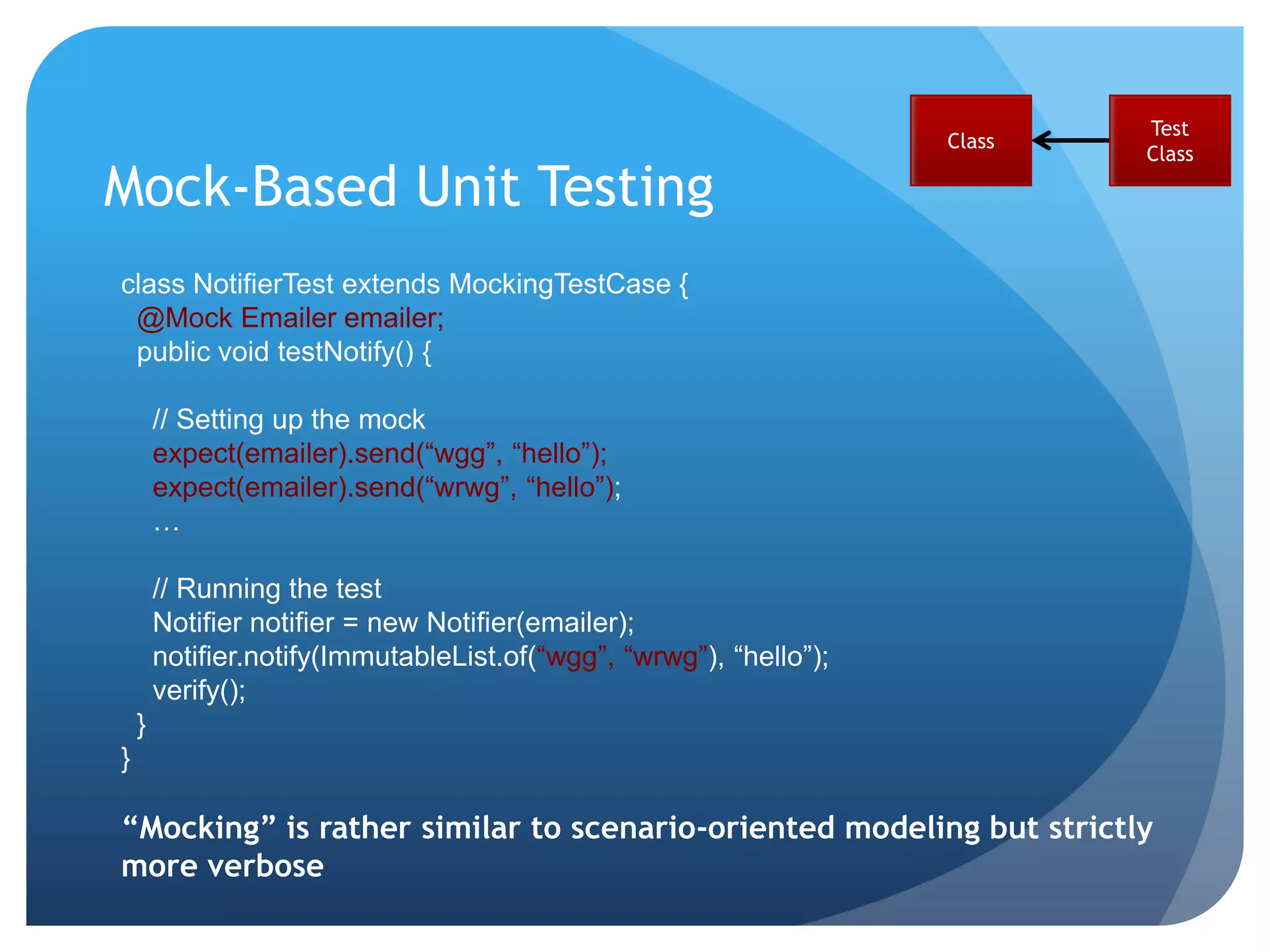

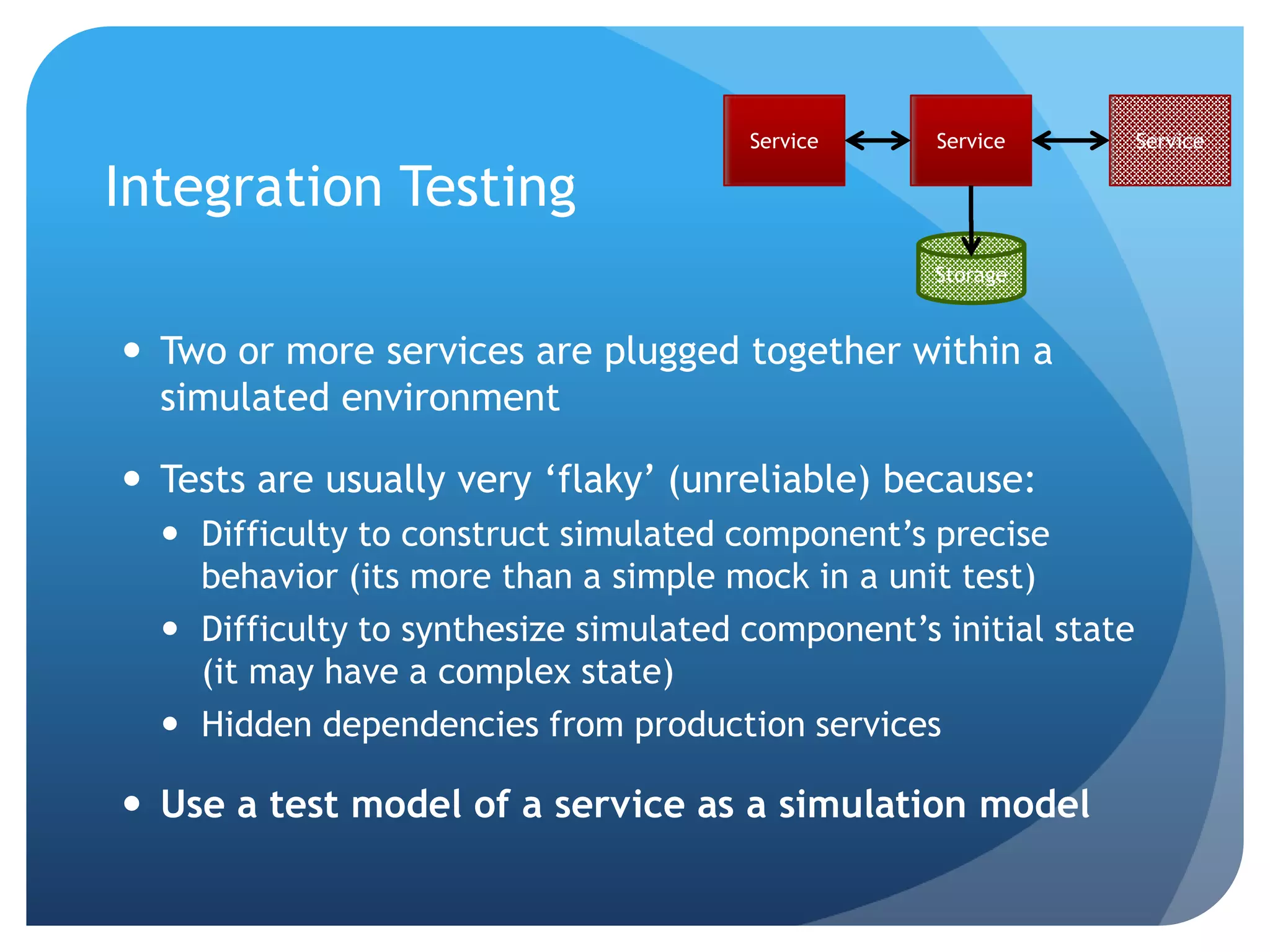

The document discusses model-based testing (MBT). It covers MBT's historical development, its theoretical foundations, and its practical application at organizations such as Microsoft and Google. It emphasizes the consolidation of MBT theory and the complexity of the technology needed for industrial adoption. It also draws lessons about test selection and tooling challenges. Finally, it explores new opportunities for MBT in cloud environments, highlighting both its promise and the challenges that remain in practice.