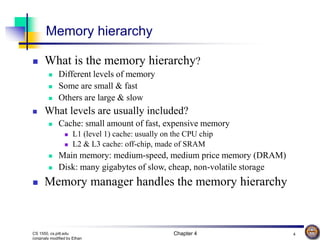

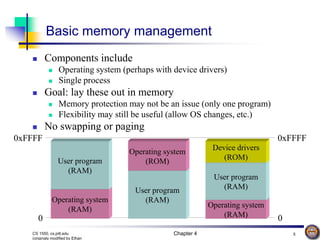

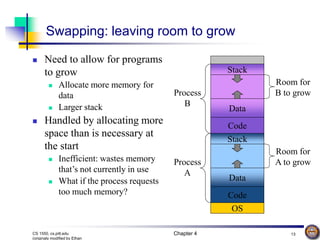

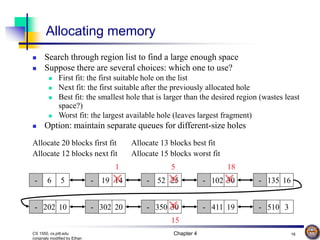

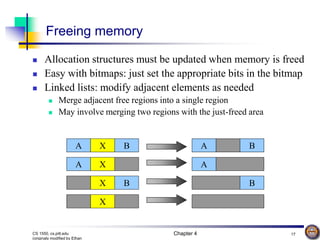

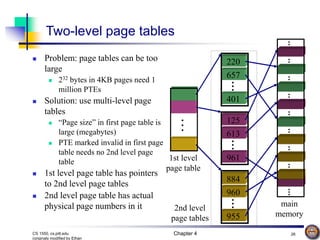

The document summarizes key memory management techniques used in operating systems: basic memory management, swapping, virtual memory, and paging. It explains how a process's address space is divided into fixed-size pages that page tables map to physical frames, and how swapping pages between main memory and disk lets processes use more memory than is physically available. The goal of these techniques is to make the best use of available memory and to let processes run without being aware of physical memory limitations.
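
The page-to-frame mapping and swapping described in the summary can be illustrated with a minimal Python sketch. Everything named here is an assumption made for illustration and does not come from the slides: the 4096-byte page size, the two-frame physical memory, the `PagedMemory` class, and the first-in, first-out (FIFO) choice of which page to evict. A real operating system does this work in hardware and kernel code and may use a different replacement policy.

```python
from collections import OrderedDict

PAGE_SIZE = 4096   # bytes per page (assumed value for illustration)
NUM_FRAMES = 2     # physical frames available (deliberately tiny, assumed)


class PagedMemory:
    """Toy model of paging: a page table plus swap-in on page faults."""

    def __init__(self, num_frames=NUM_FRAMES):
        # Resident pages only: page number -> frame number, oldest first.
        self.page_table = OrderedDict()
        self.free_frames = list(range(num_frames))
        self.page_faults = 0

    def translate(self, virtual_address):
        """Return the physical address for a virtual address."""
        page, offset = divmod(virtual_address, PAGE_SIZE)
        if page not in self.page_table:
            self._handle_fault(page)
        return self.page_table[page] * PAGE_SIZE + offset

    def _handle_fault(self, page):
        """Bring a page into a frame, evicting the oldest page if needed."""
        self.page_faults += 1
        if self.free_frames:
            frame = self.free_frames.pop(0)
        else:
            # Assumed FIFO policy: swap out the page that was loaded first
            # and reuse its frame for the incoming page.
            _, frame = self.page_table.popitem(last=False)
        self.page_table[page] = frame


memory = PagedMemory()
first = memory.translate(4100)    # page 1, offset 4: fault, loaded into frame 0
second = memory.translate(0)      # page 0, offset 0: fault, loaded into frame 1
third = memory.translate(8192)    # page 2: fault, page 1 is swapped out of frame 0
```

In this sketch the process touches three pages while only two frames exist, so the third access forces the oldest resident page out. The process itself only ever sees virtual addresses, which is the sense in which it runs without being aware of the physical memory limit.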