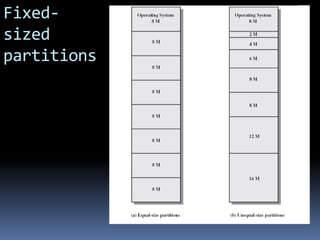

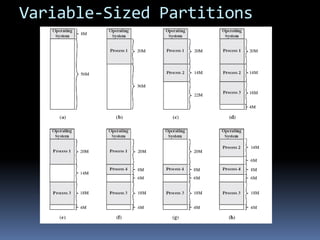

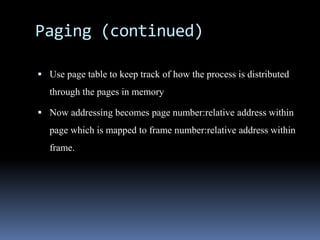

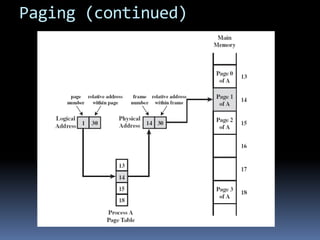

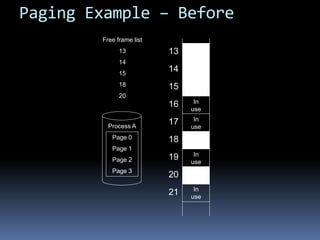

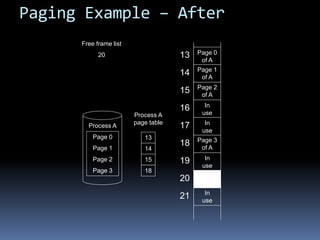

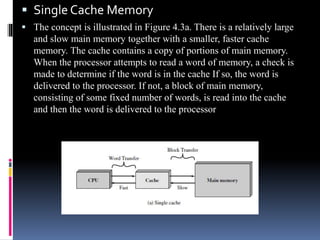

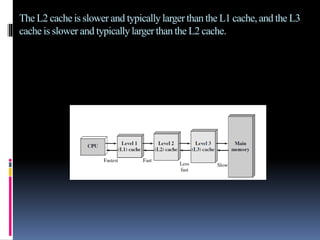

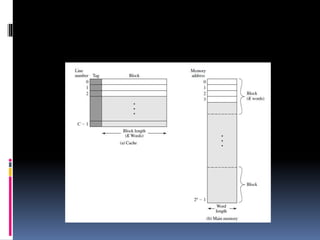

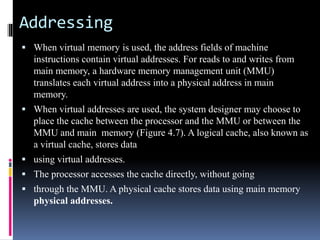

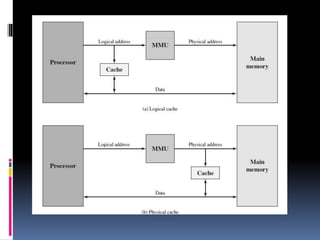

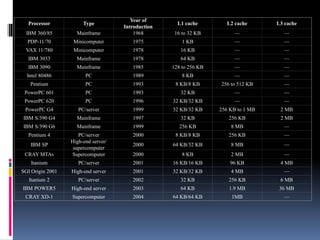

The document discusses computer memory management and caching. It covers memory partitioning, paging, segmentation, virtual memory, and the principles of cache memory. Memory can be partitioned into fixed- or variable-sized blocks that are allocated to processes. Paging and segmentation improve memory utilization by letting a process occupy non-contiguous regions of memory. Virtual memory uses demand paging to present an address space larger than physical RAM: pages are loaded from disk when they are referenced and swapped back out when memory is needed. Cache memory holds copies of frequently used data from main memory so that repeated accesses are faster.
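The demand-paging idea summarized above can be illustrated with a small simulation. This is only a sketch under stated assumptions: the summary does not say which page replacement policy the slides use, so least-recently-used (LRU) is chosen here for illustration, and the names `count_page_faults`, `references`, and `num_frames` are hypothetical.

```python
from collections import OrderedDict


def count_page_faults(references, num_frames):
    """Simulate demand paging and return the number of page faults.

    Assumption: LRU replacement. The source does not name a policy.
    """
    # Resident pages, ordered from least to most recently used.
    frames = OrderedDict()
    faults = 0
    for page in references:
        if page in frames:
            # Hit: the page is already in memory; mark it most recently used.
            frames.move_to_end(page)
        else:
            # Fault: the page must be brought in from disk.
            faults += 1
            if len(frames) >= num_frames:
                # Memory is full: evict the least recently used page.
                frames.popitem(last=False)
            frames[page] = True
    return faults


if __name__ == "__main__":
    # Six page references against three physical frames.
    print(count_page_faults([1, 2, 3, 1, 4, 2], num_frames=3))  # prints 5
```

With three frames, the reference string `[1, 2, 3, 1, 4, 2]` faults on the first three pages, hits on the repeated `1`, and then faults twice more as `4` and `2` each force an eviction. Giving the same string four frames removes the last fault, which shows how the fault count depends on how much physical memory is available.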