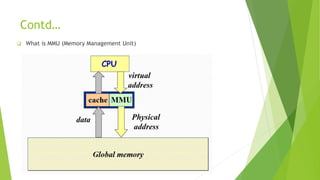

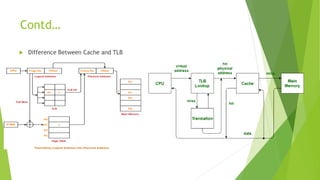

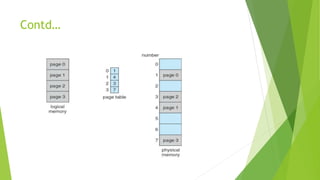

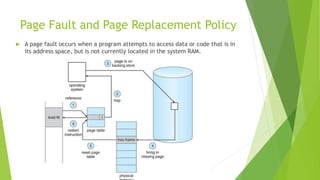

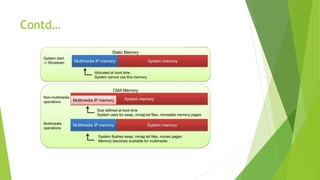

The document gives an overview of Linux memory management: virtual versus physical memory, memory types, allocation methods, and memory management units. It explains why contiguous allocation matters and how the Contiguous Memory Allocator (CMA) provides it, covers page faults and page replacement policies, and introduces Google's ION memory manager. It also describes the problems caused by memory fragmentation and outlines solutions such as guaranteed CMA and transcendent memory.
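
To make the link between page faults and page replacement policies concrete, here is a minimal sketch that counts page faults under a textbook least-recently-used (LRU) policy. It is an illustration only and is not taken from the slides or from the kernel's actual reclaim code; the function name `count_page_faults_lru`, the page reference string, and the frame count are all hypothetical.

```python
from collections import OrderedDict


def count_page_faults_lru(reference_string, num_frames):
    """Count page faults for a sequence of page accesses under textbook LRU.

    reference_string: iterable of page numbers, in access order (hypothetical input).
    num_frames: number of physical frames available (must be at least 1).
    """
    if num_frames < 1:
        raise ValueError("num_frames must be at least 1")

    # Keys are resident pages, ordered from least to most recently used.
    frames = OrderedDict()
    faults = 0

    for page in reference_string:
        if page in frames:
            # Hit: mark the page as most recently used.
            frames.move_to_end(page)
        else:
            # Miss: the page is not resident, so this access is a page fault.
            faults += 1
            if len(frames) >= num_frames:
                # No free frame: evict the least recently used page.
                frames.popitem(last=False)
            frames[page] = True

    return faults


if __name__ == "__main__":
    accesses = [1, 2, 3, 1, 4, 2]  # made-up access pattern
    print(count_page_faults_lru(accesses, num_frames=3))
```

Running the function with different frame counts on the same access pattern shows how the fault count depends on the memory available, which is the trade-off any replacement policy tries to manage.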