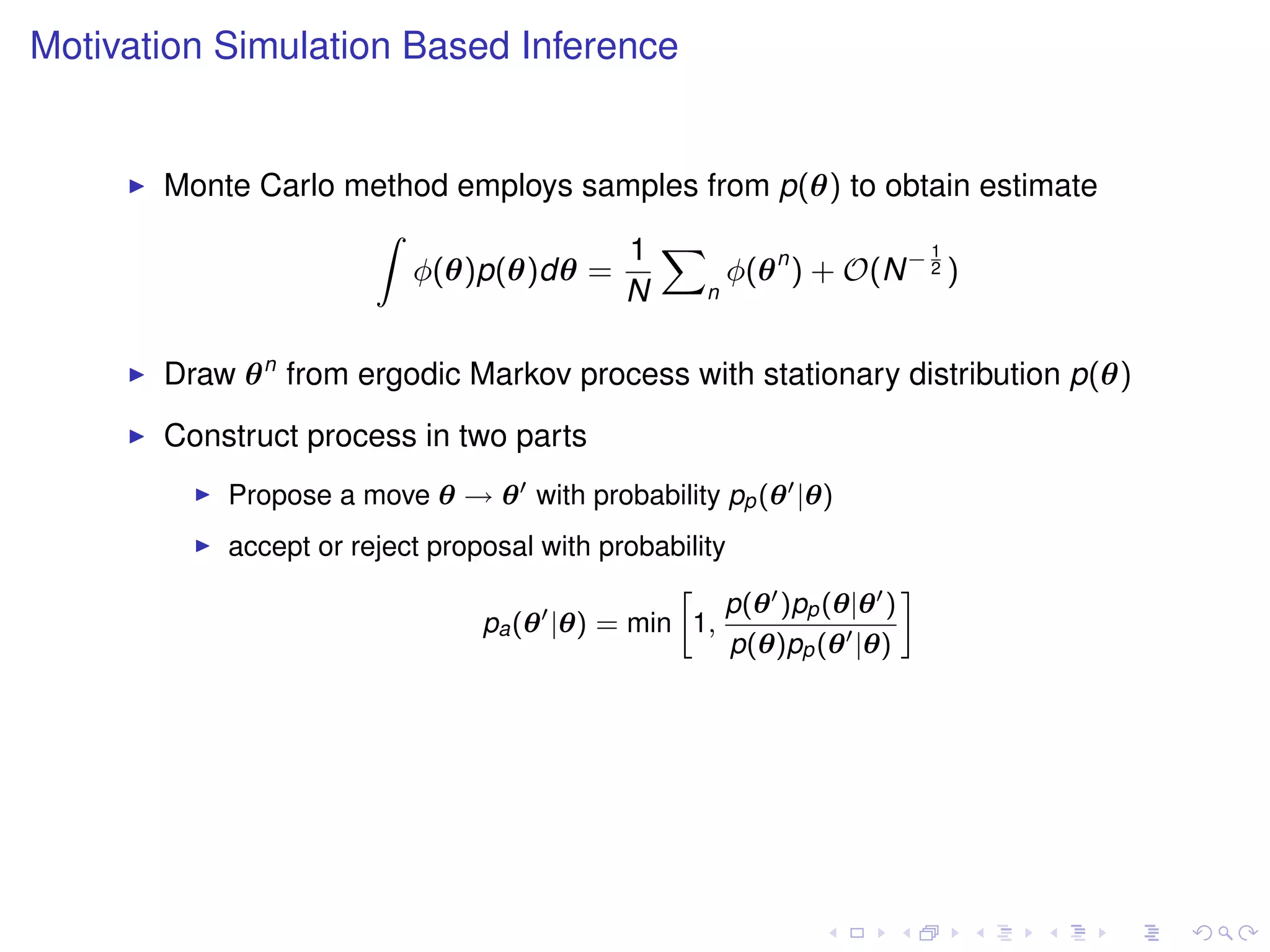

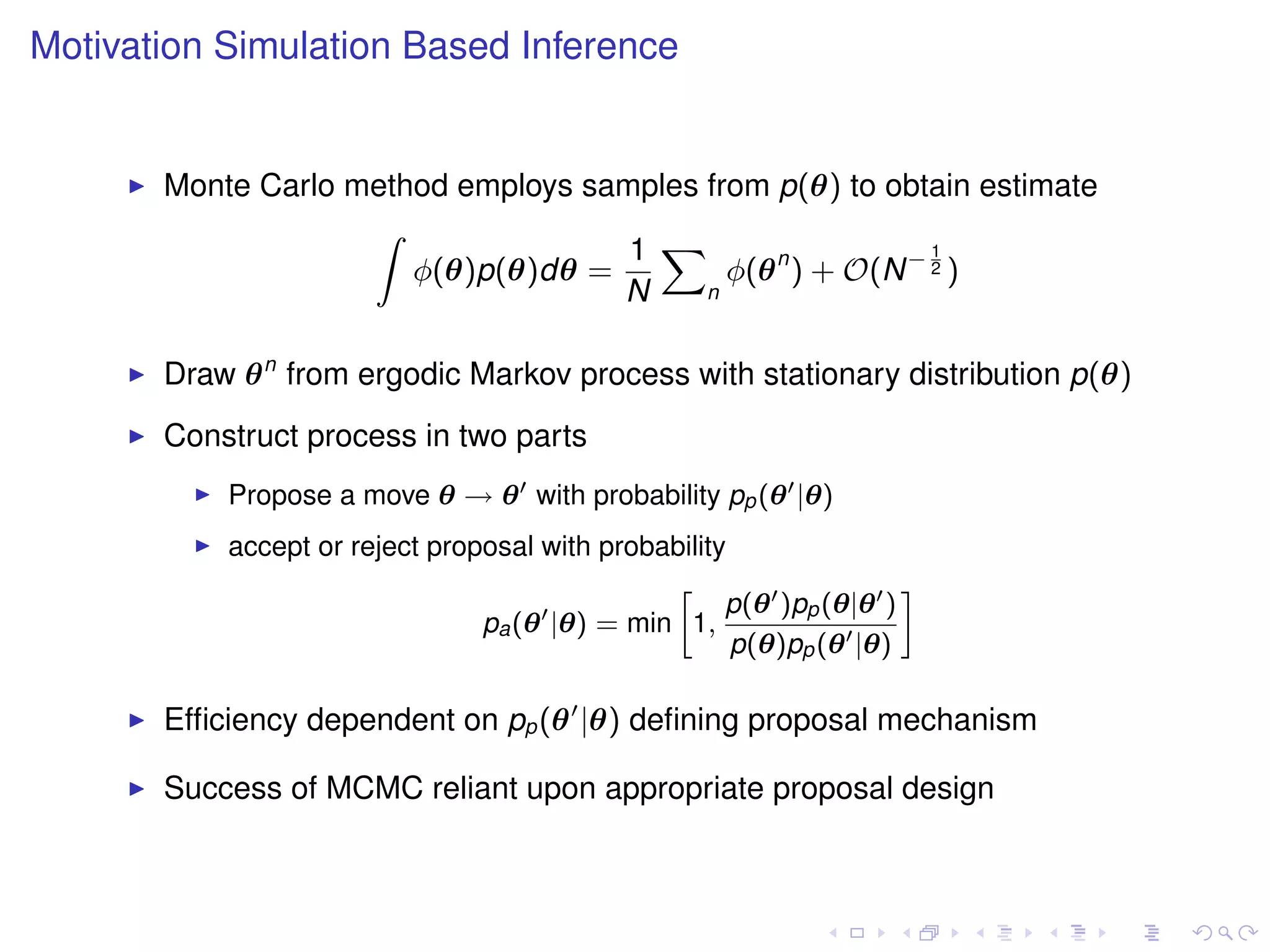

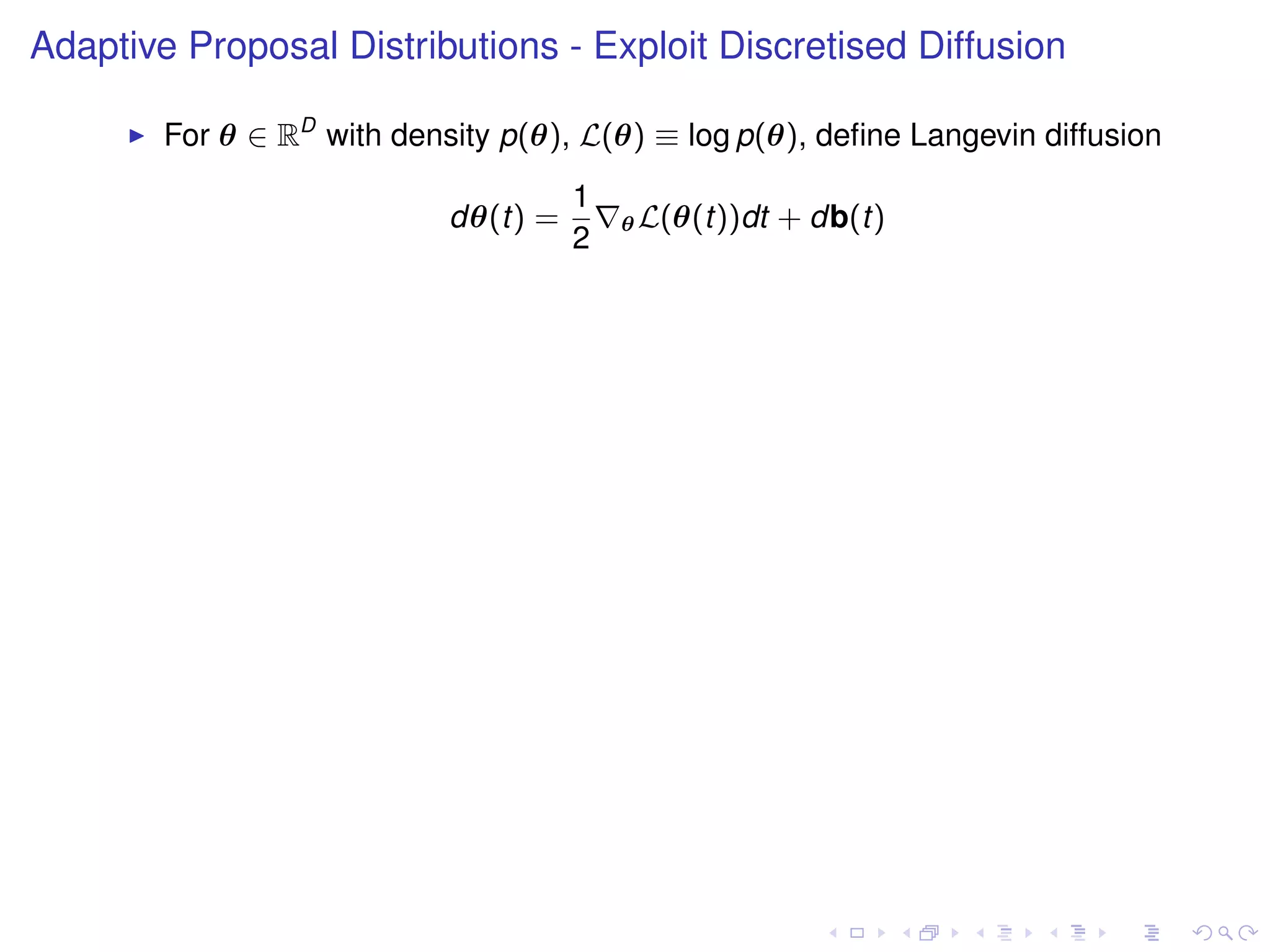

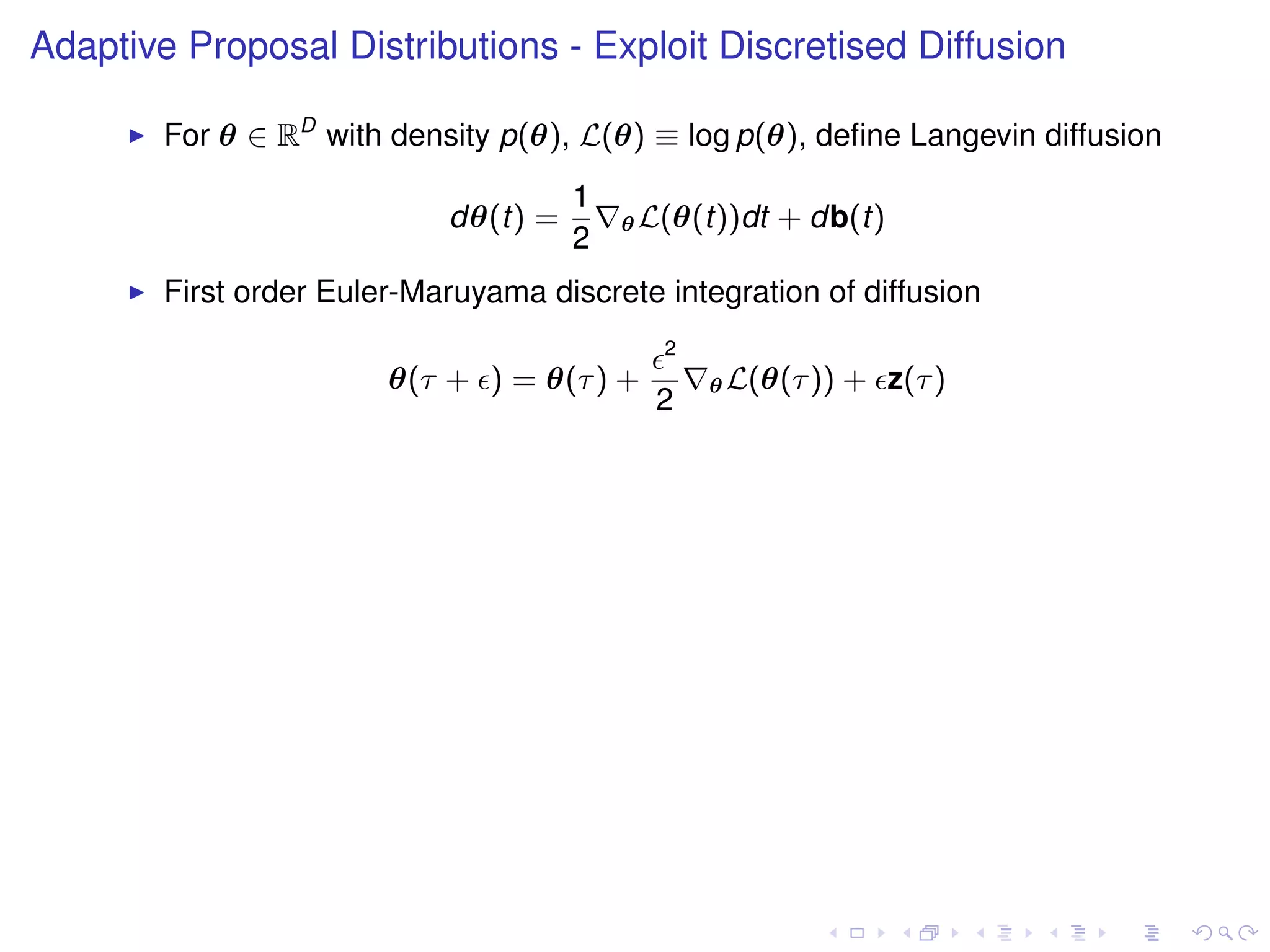

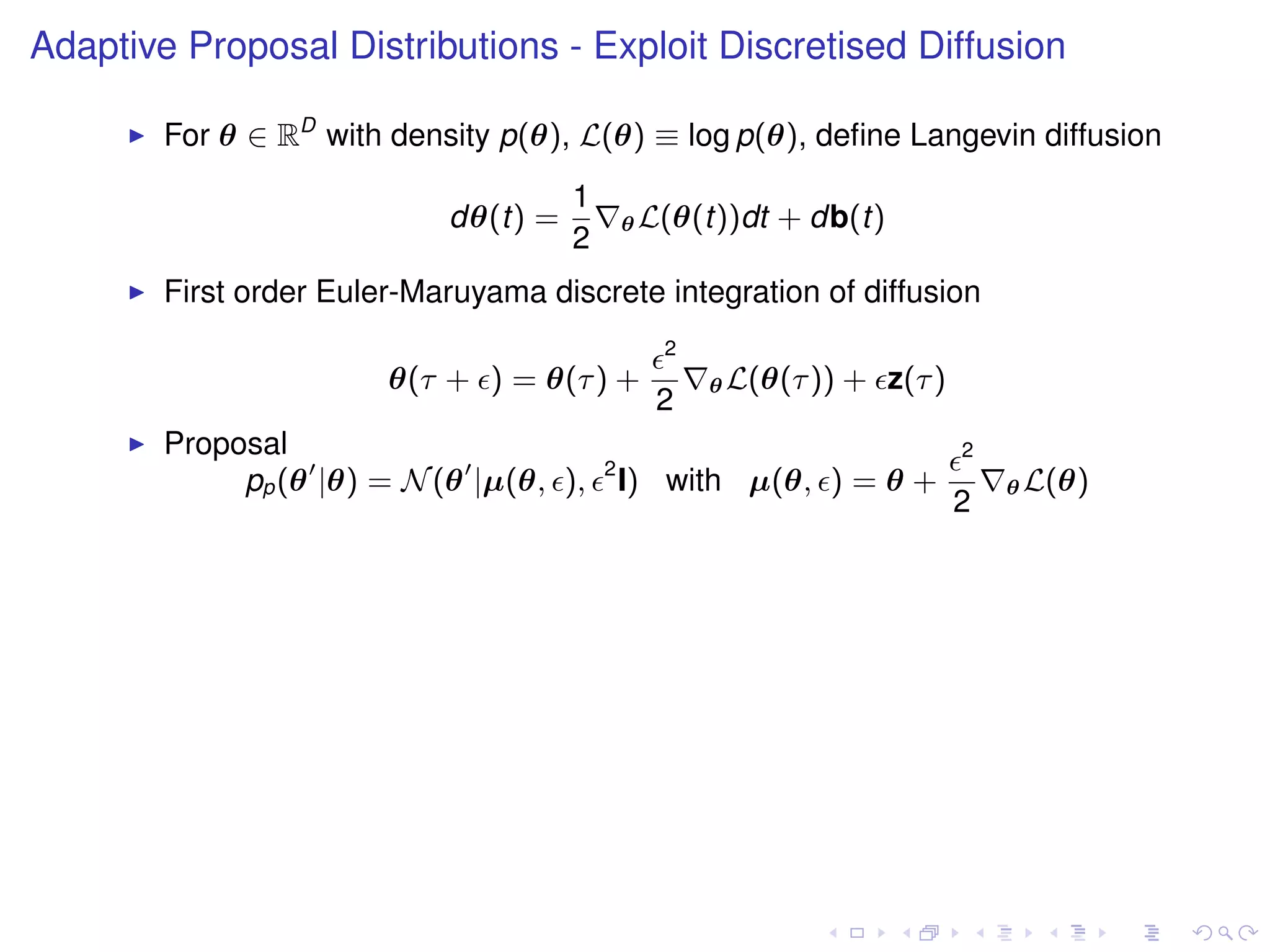

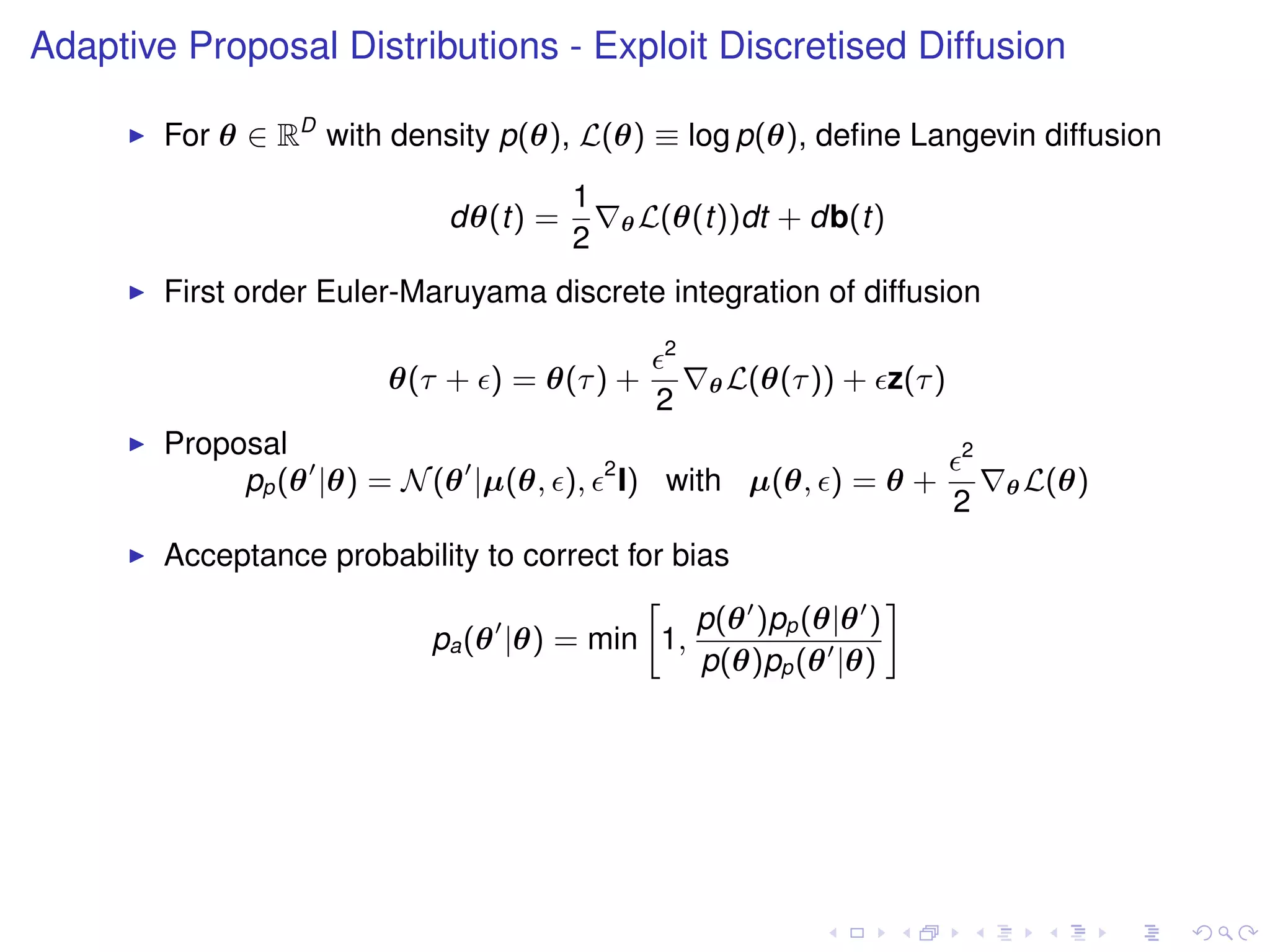

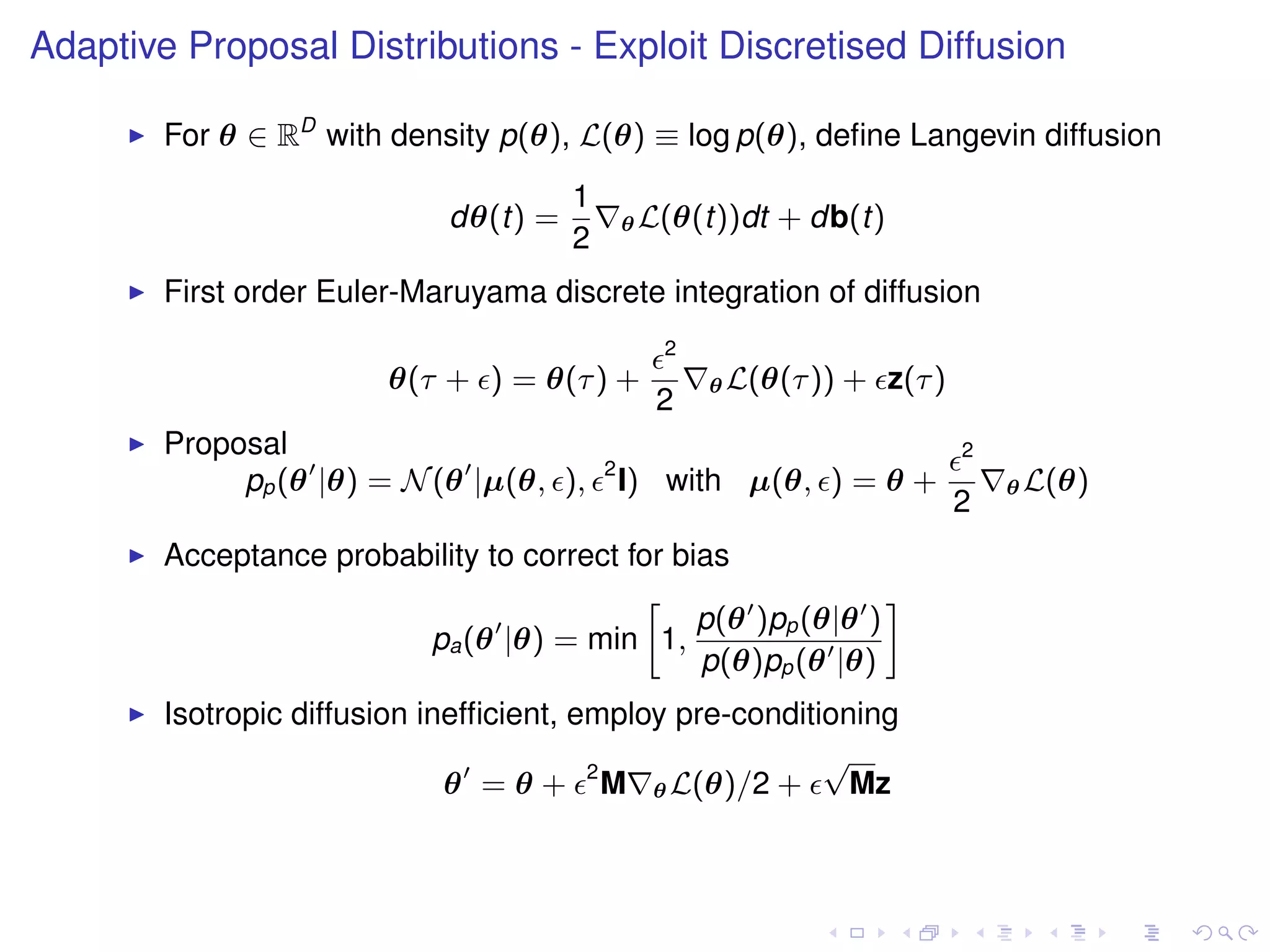

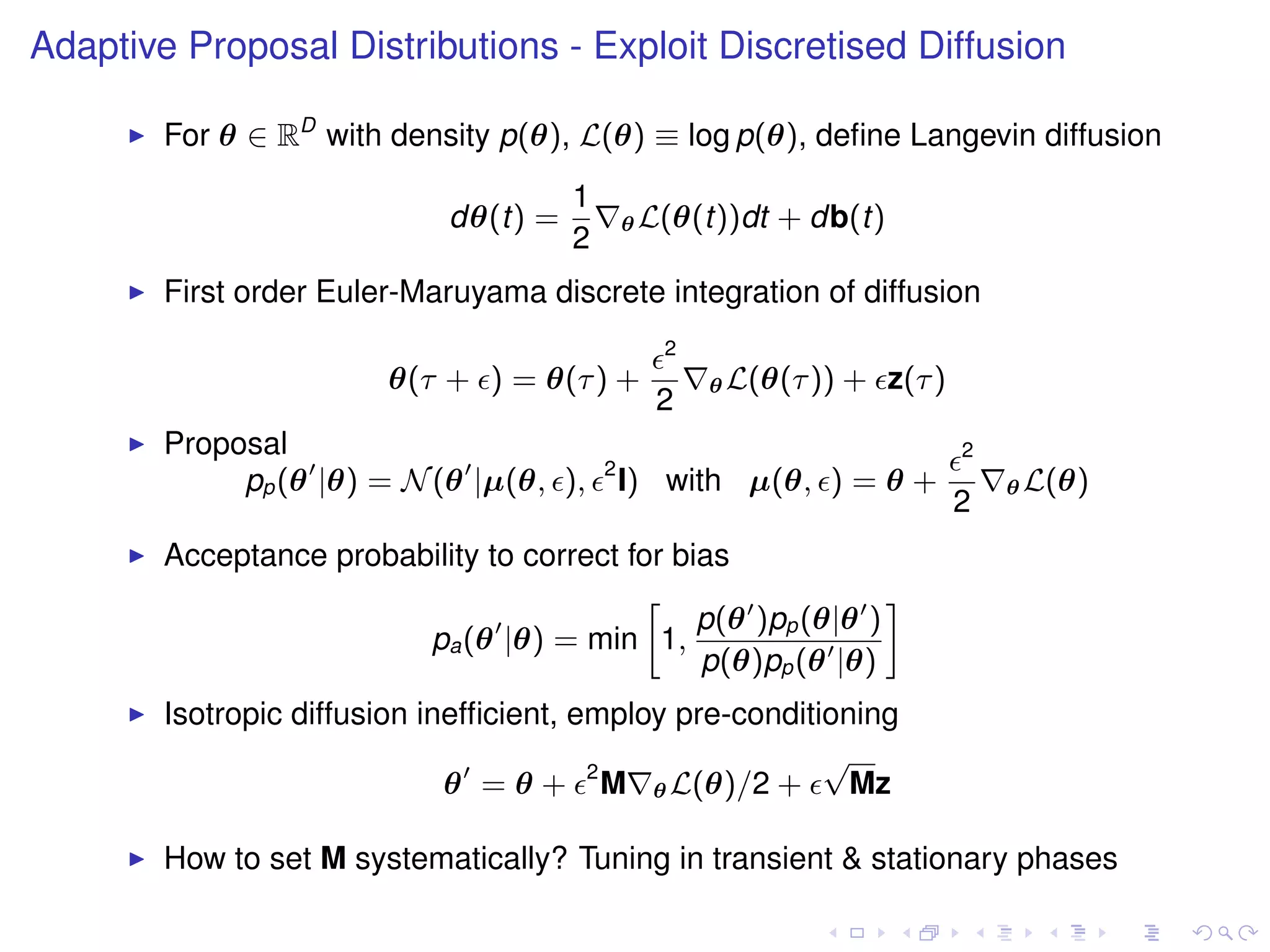

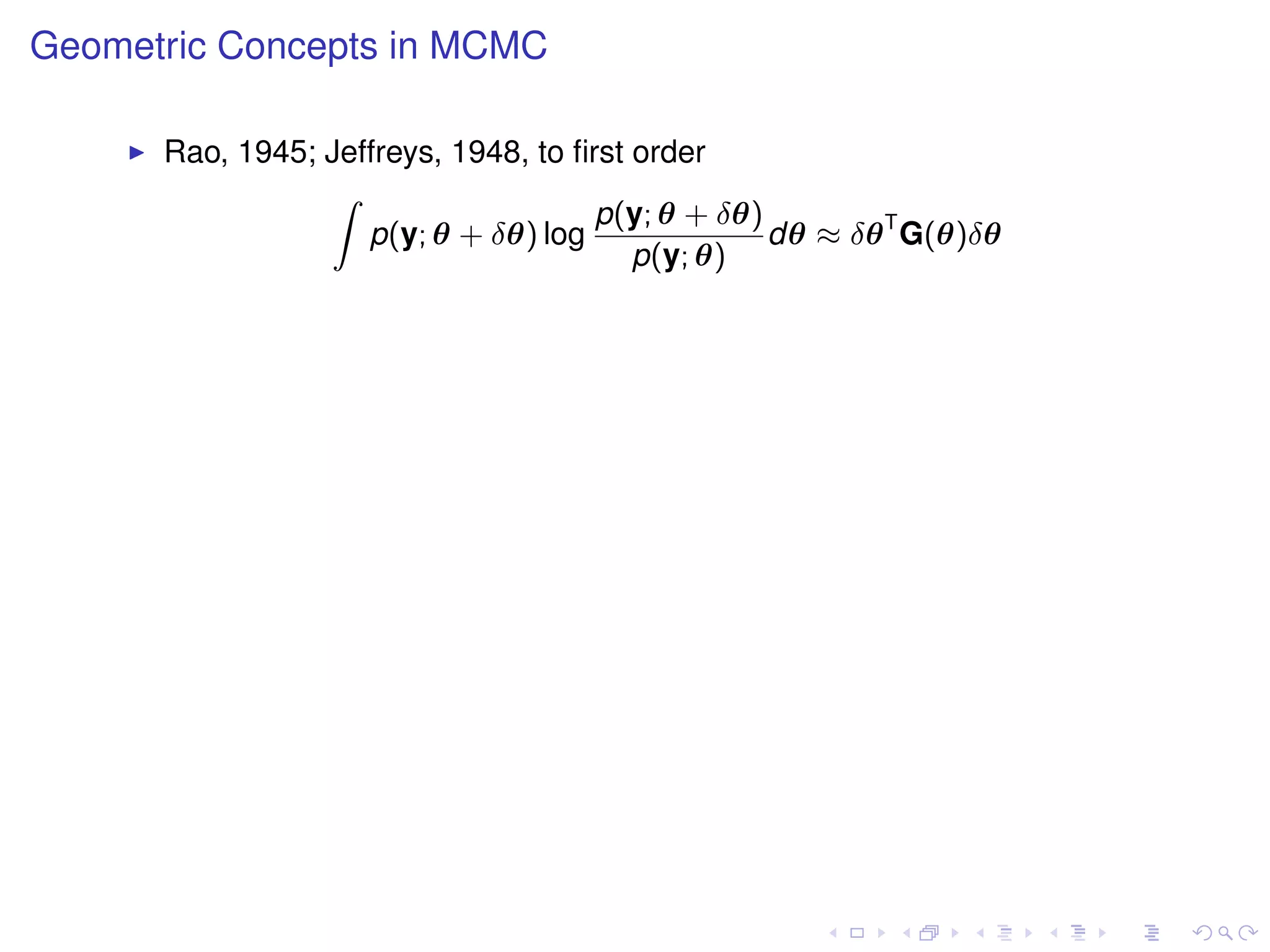

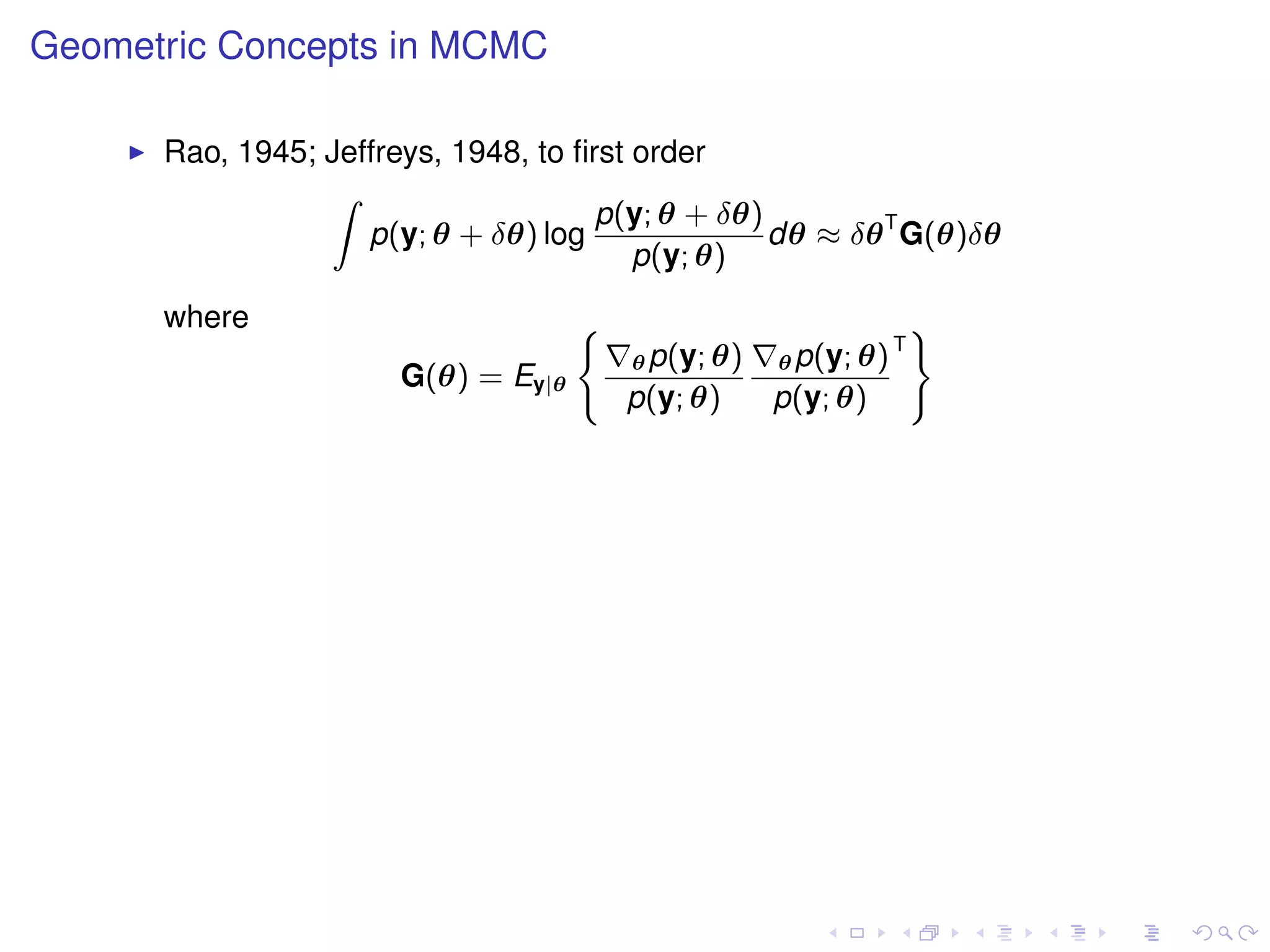

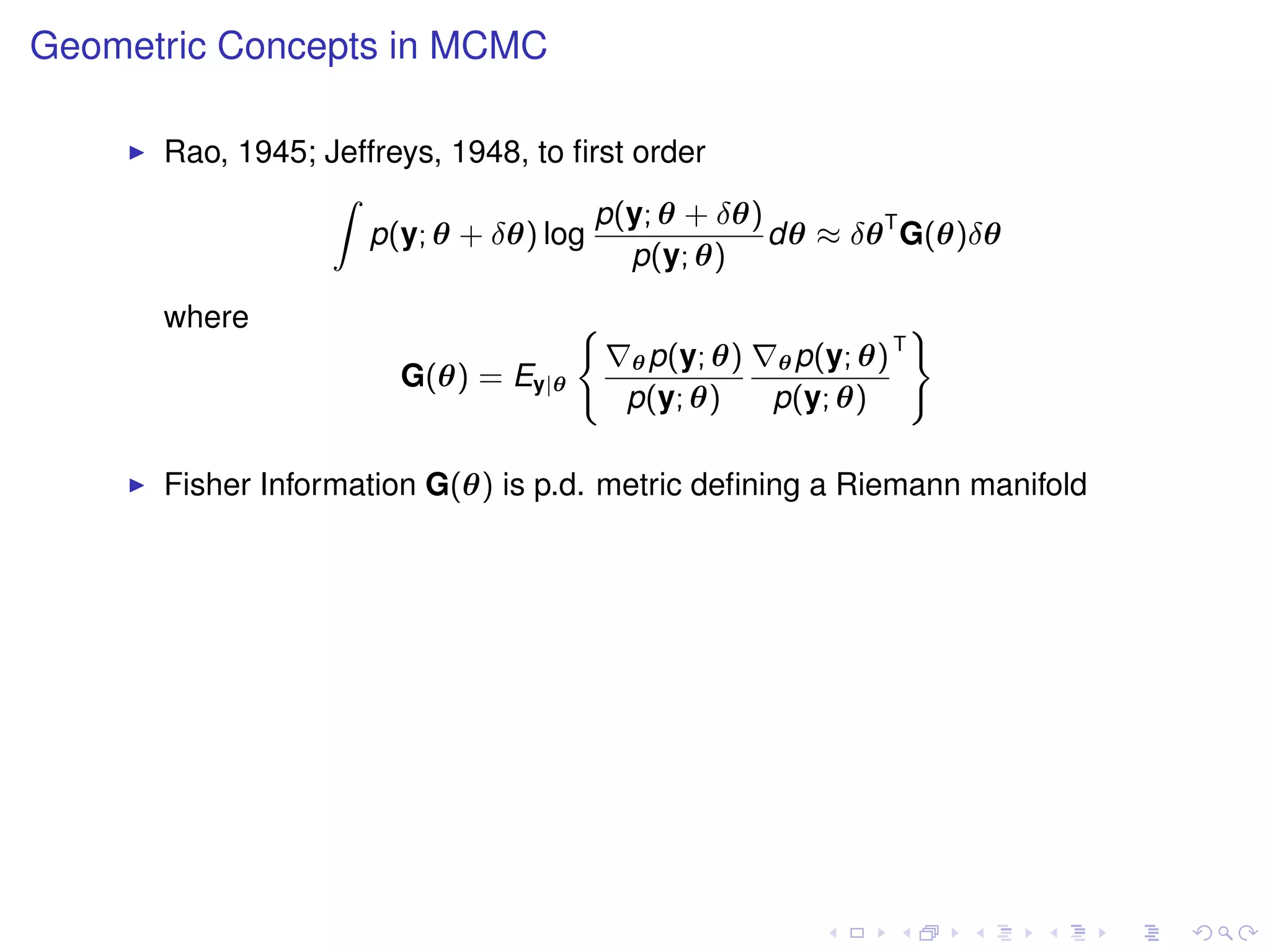

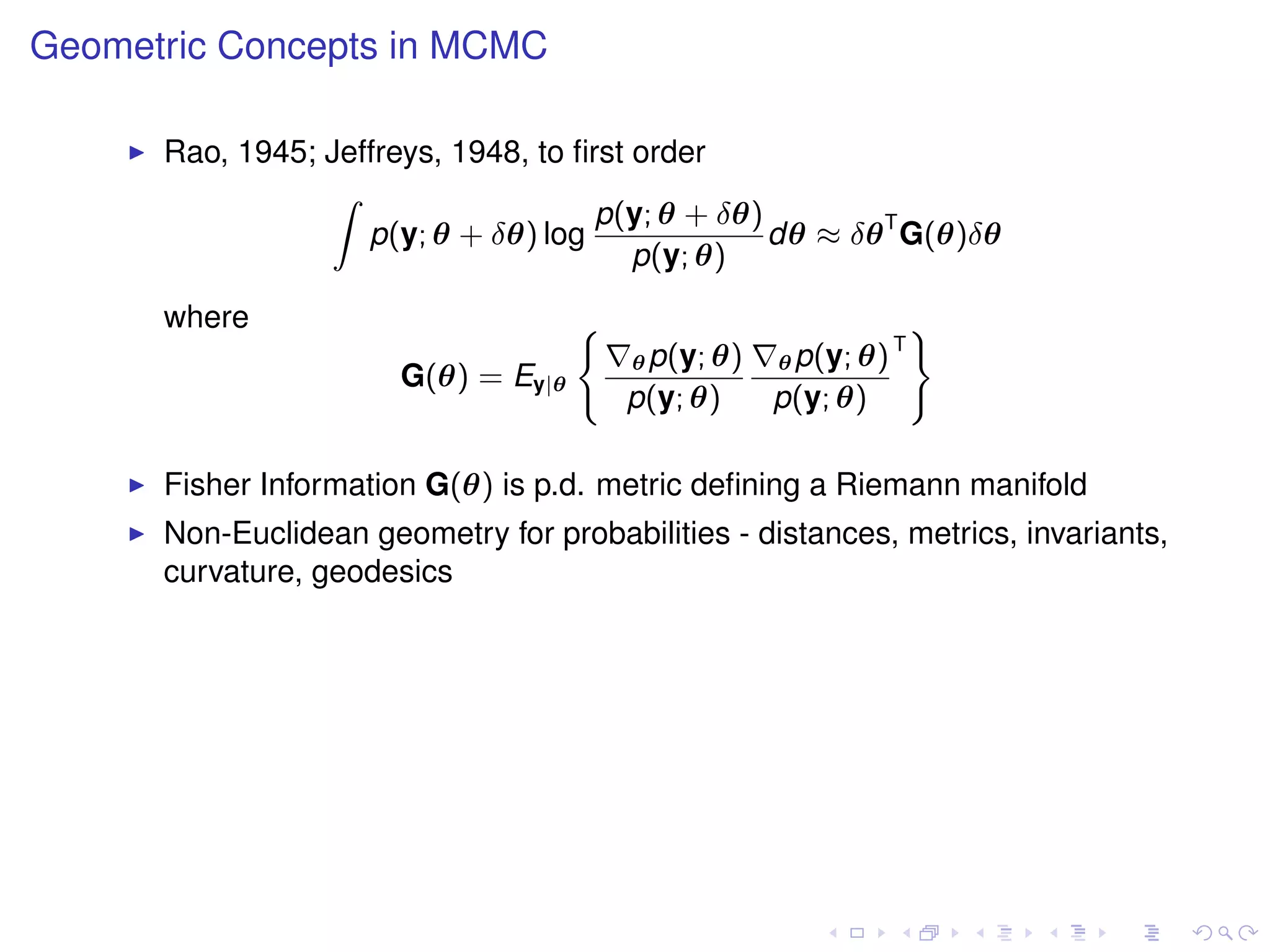

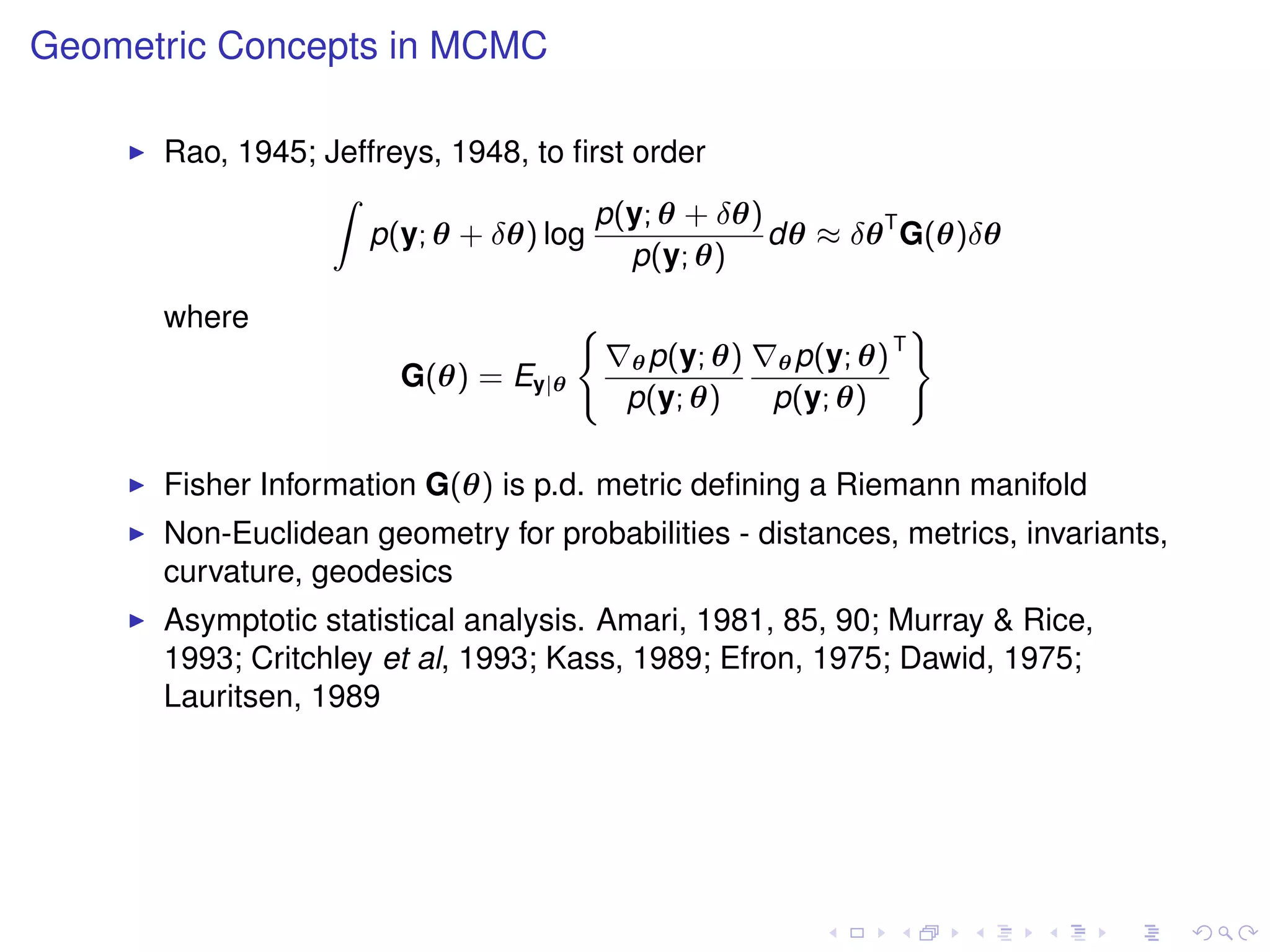

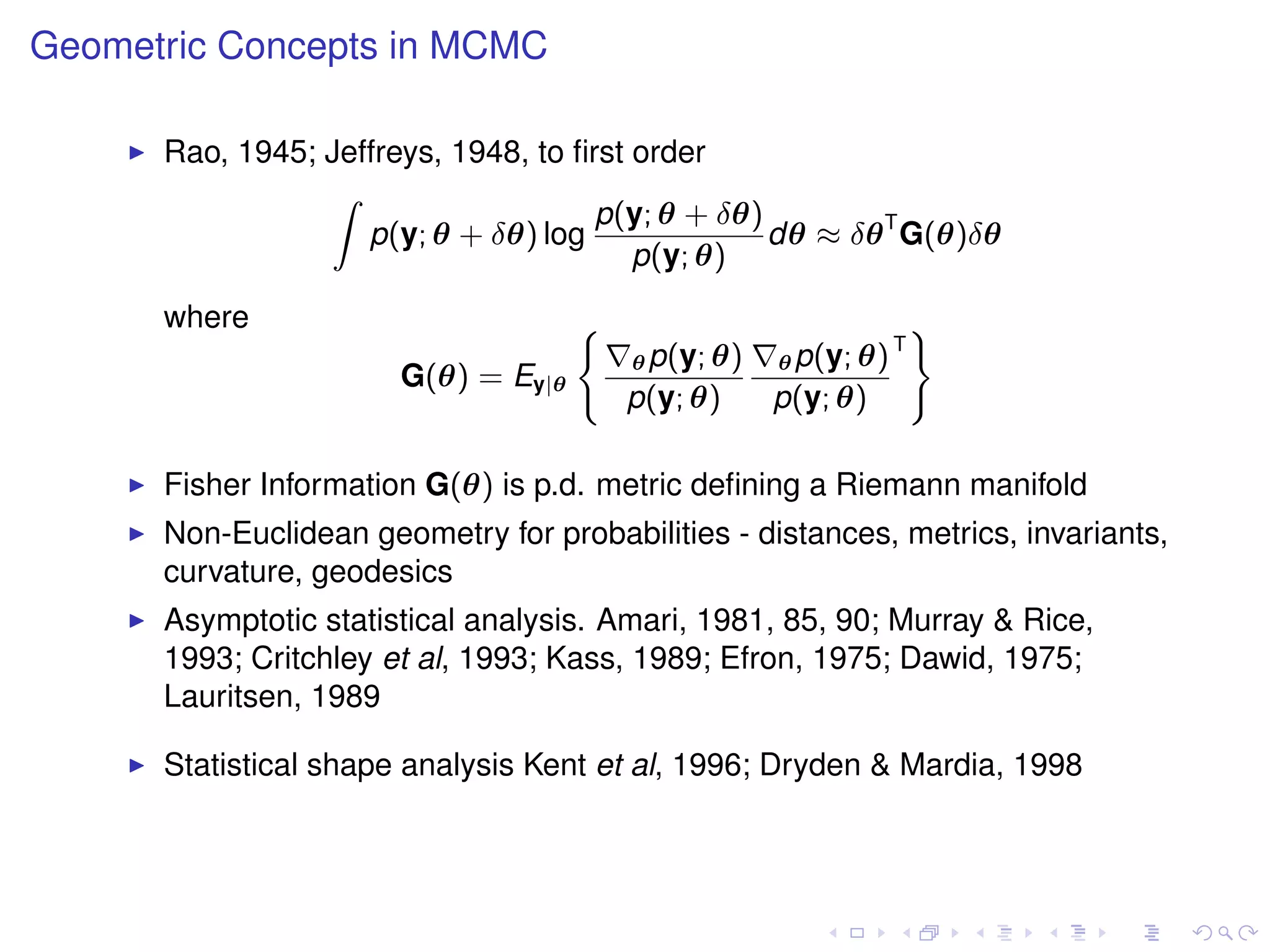

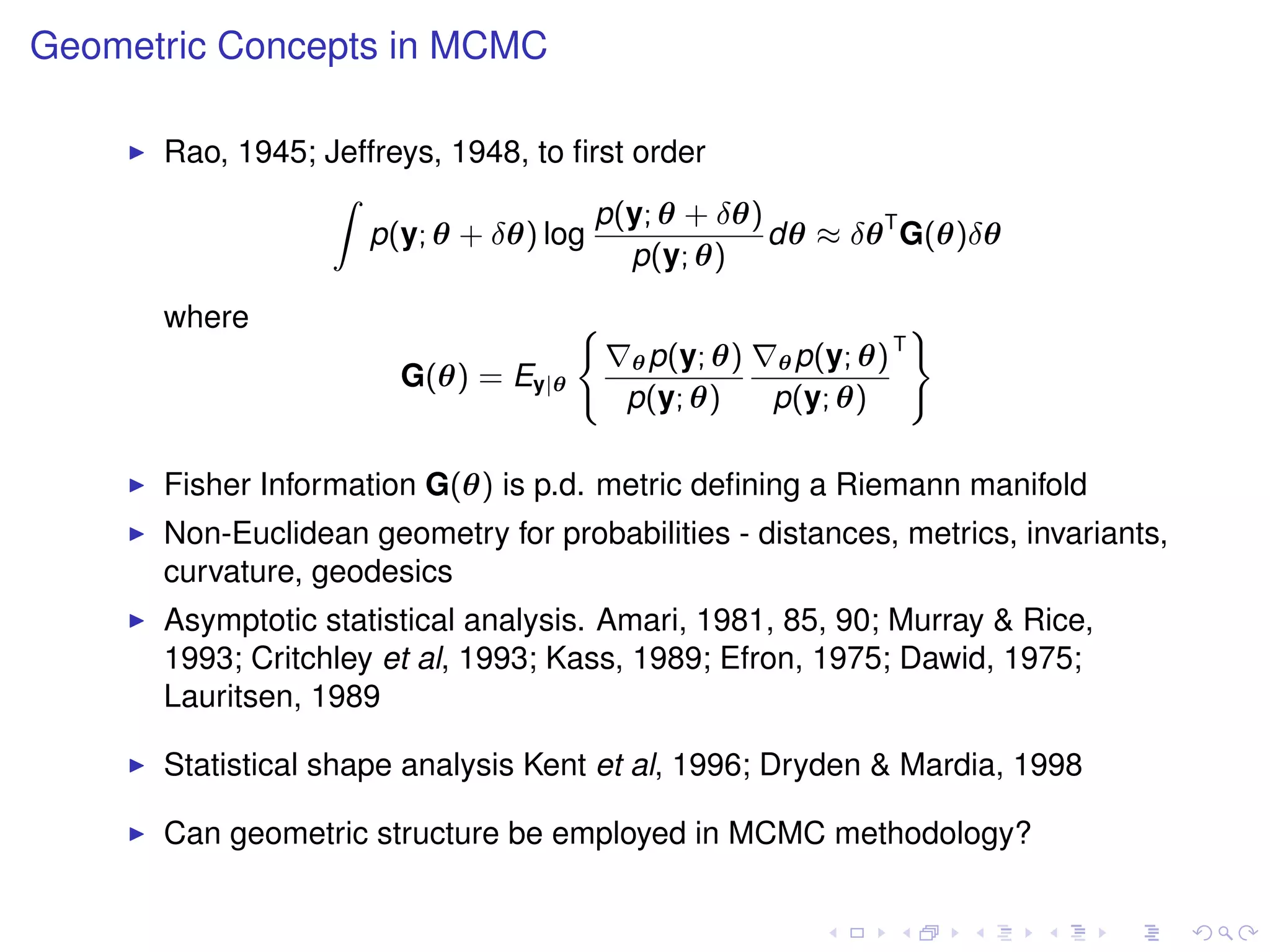

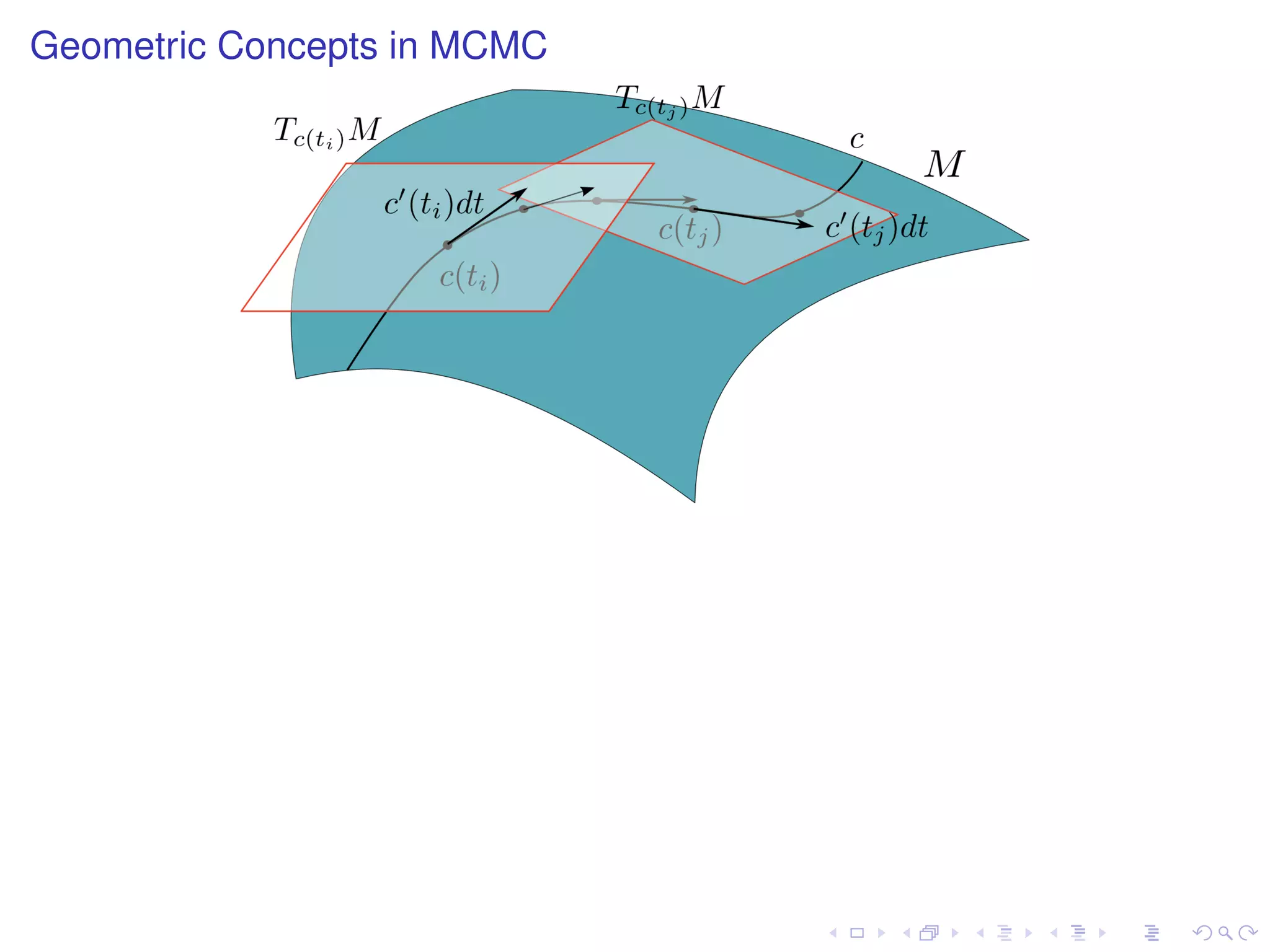

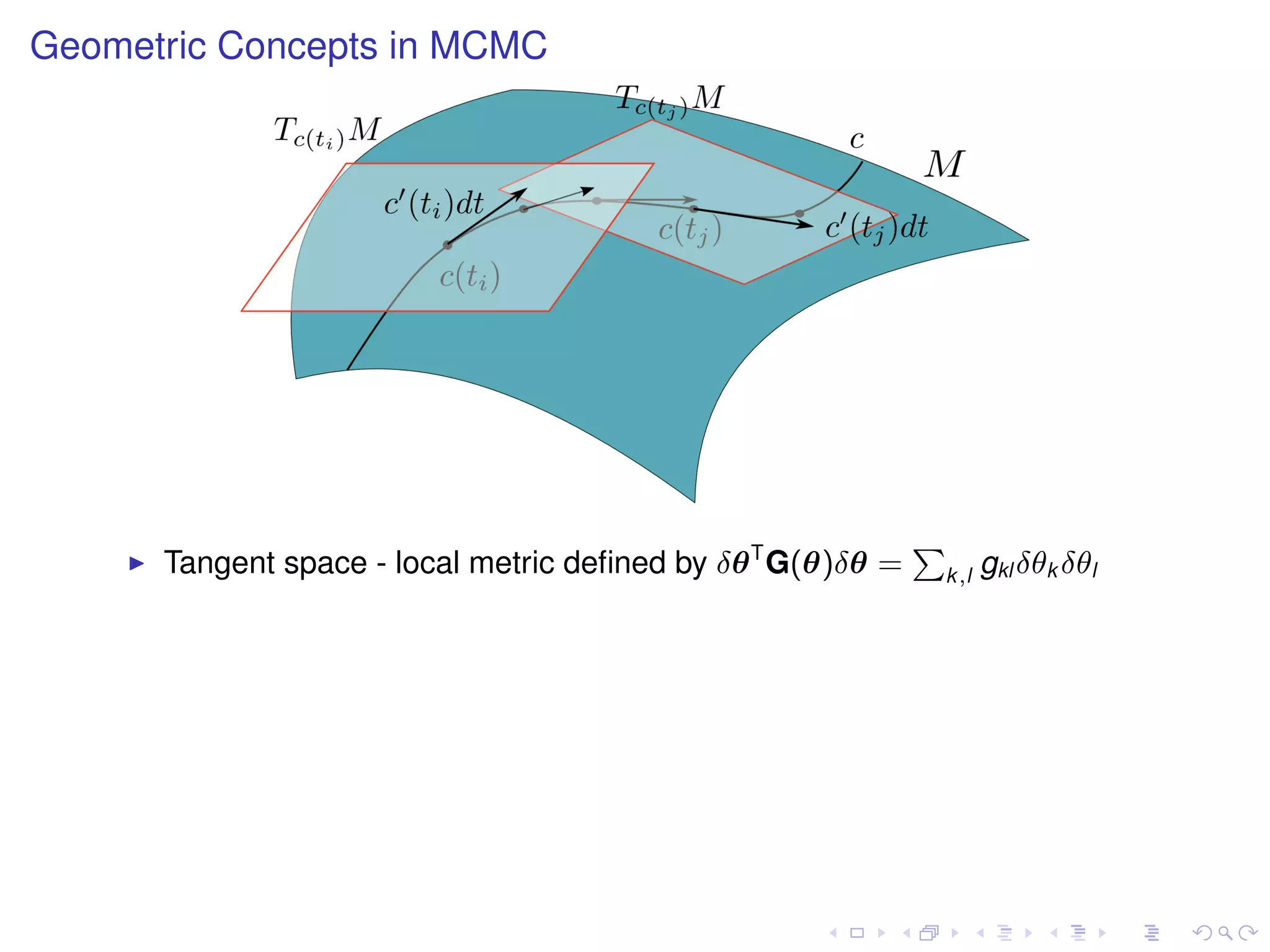

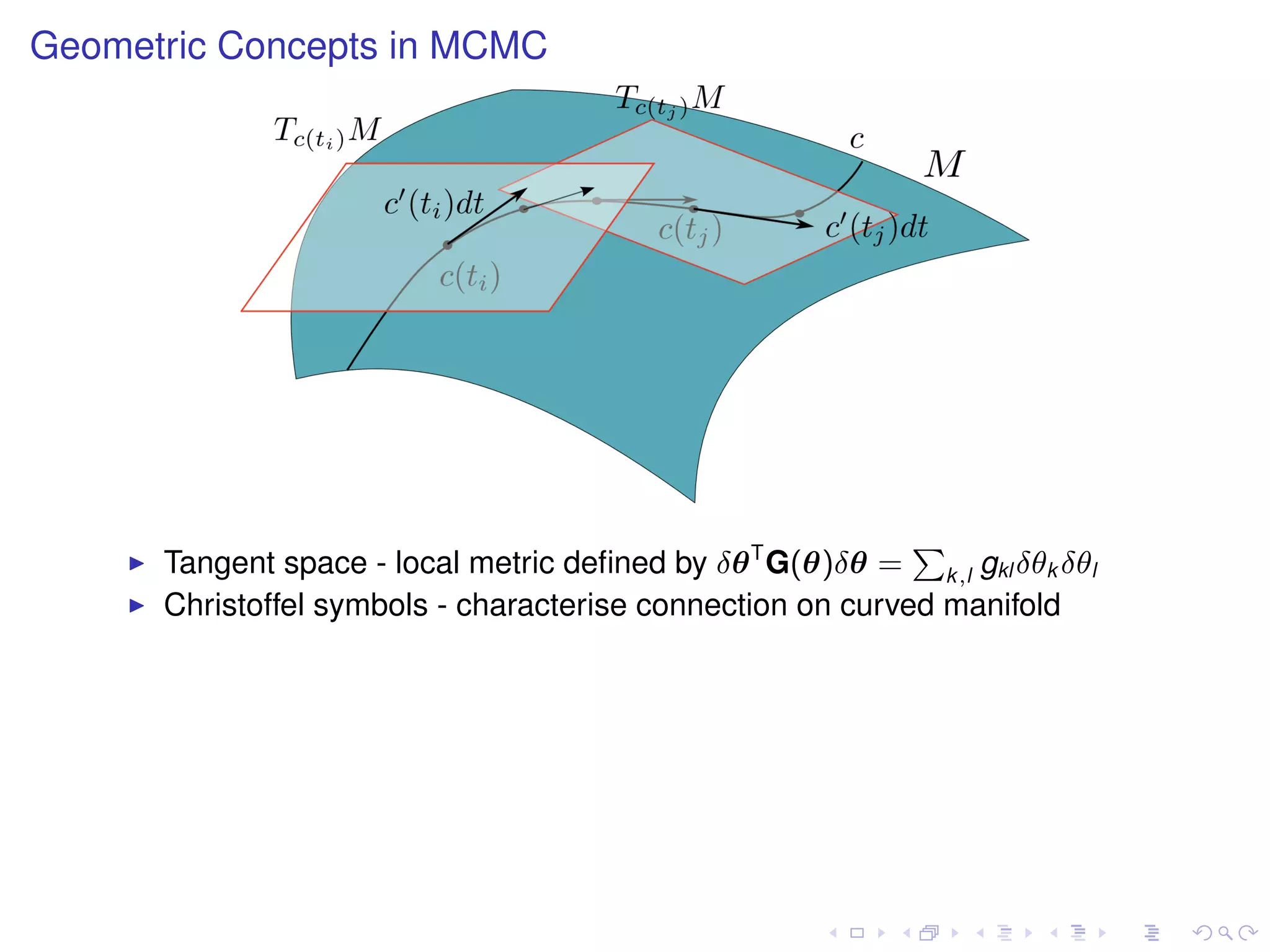

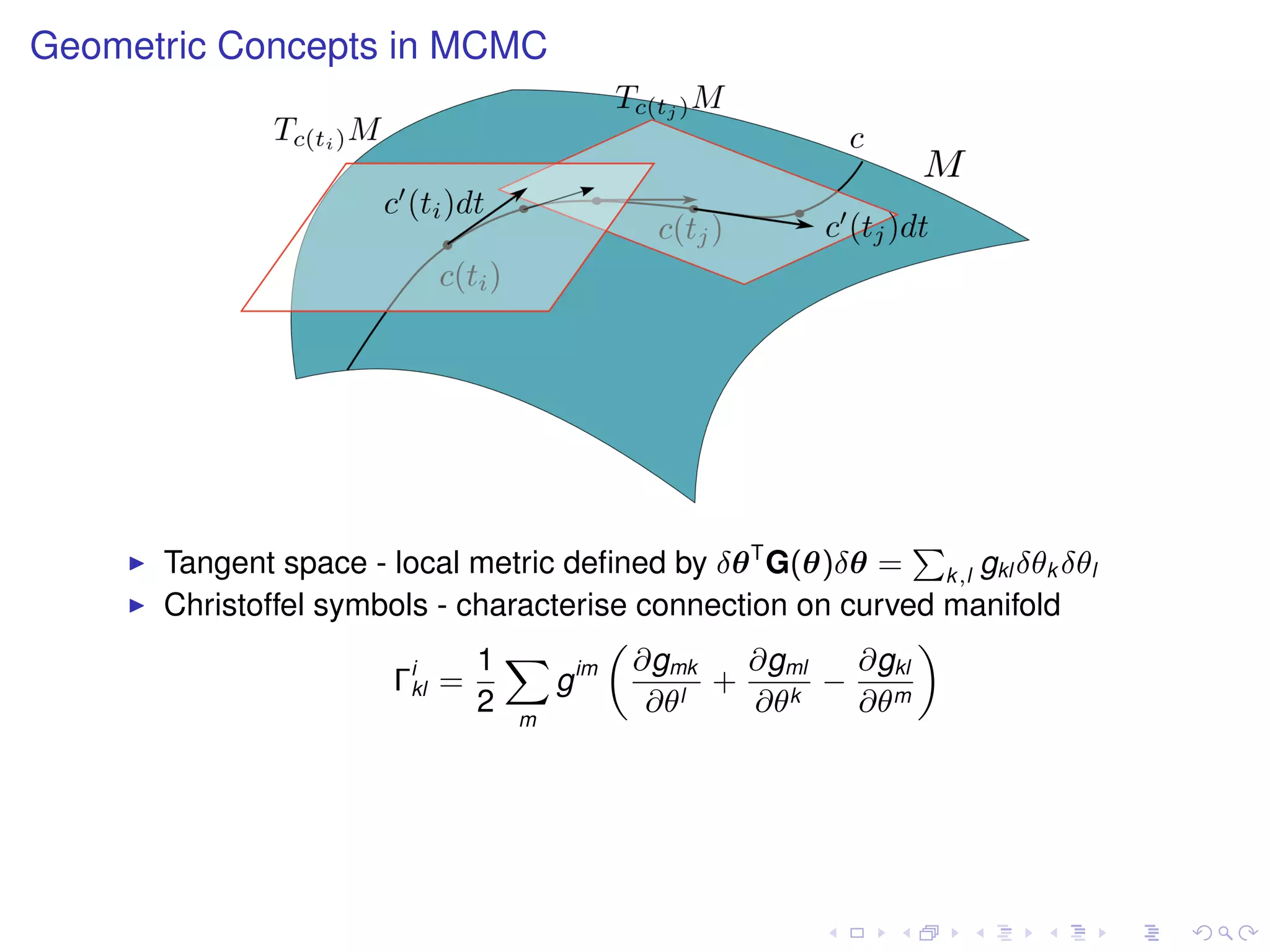

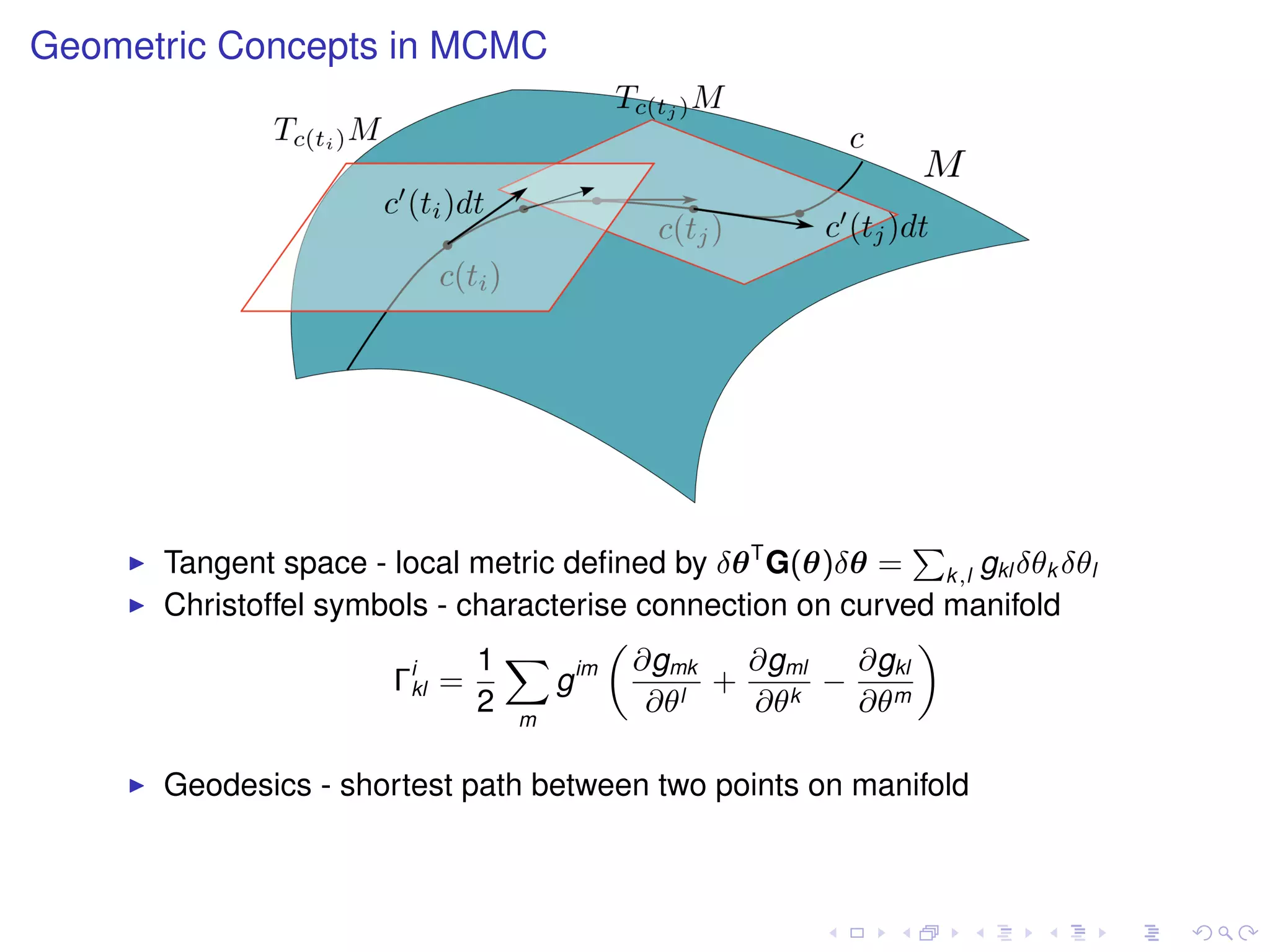

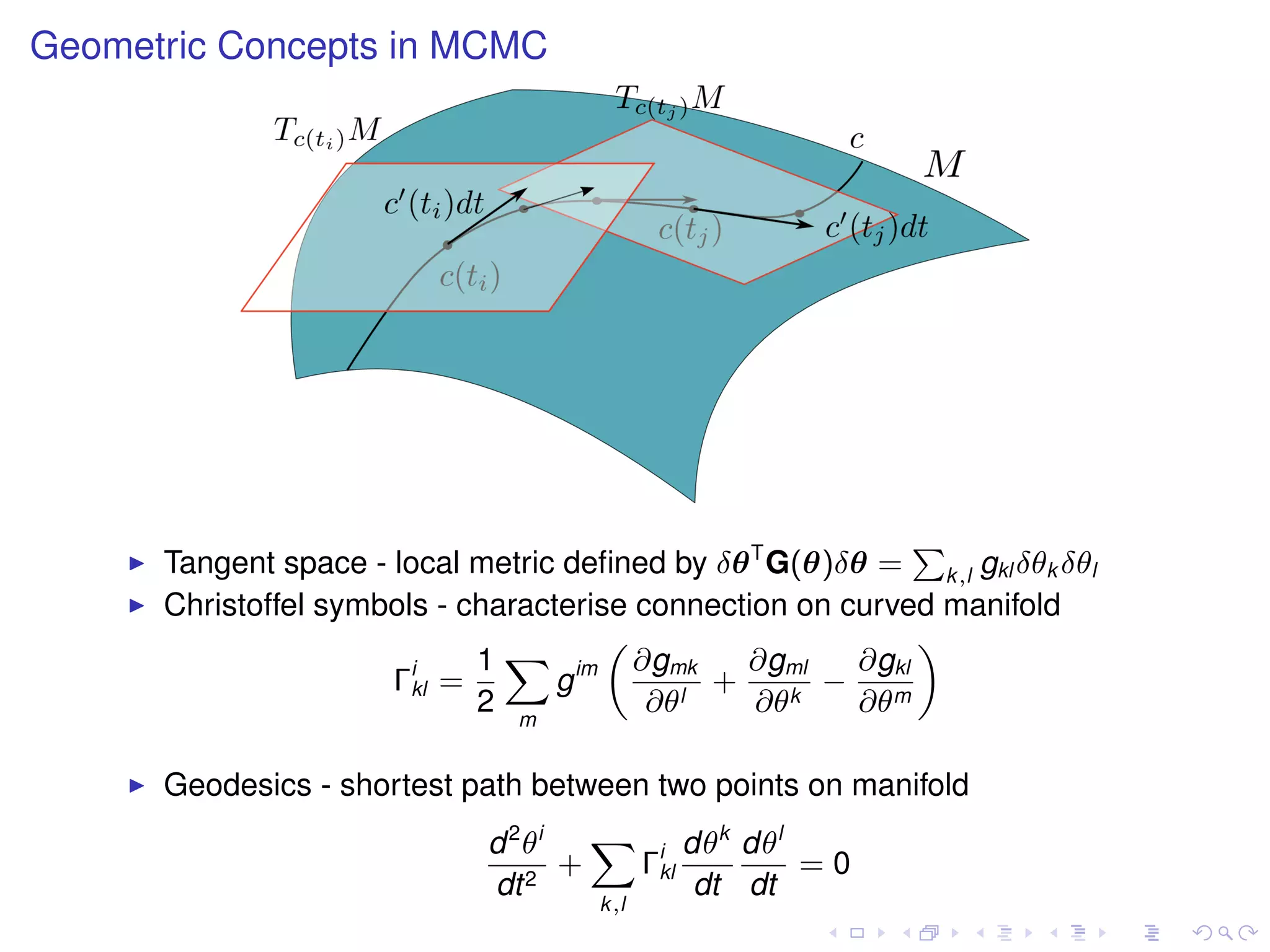

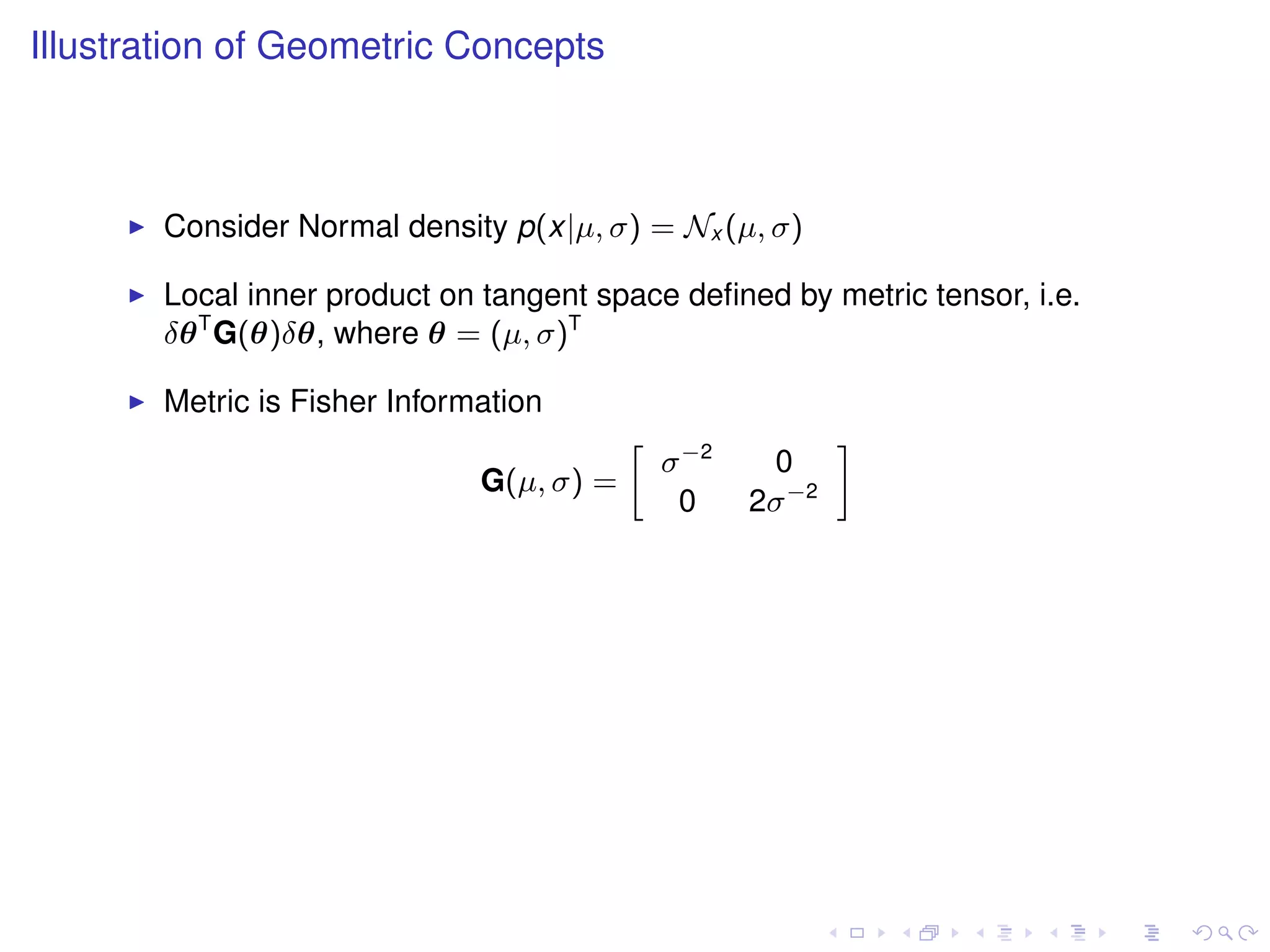

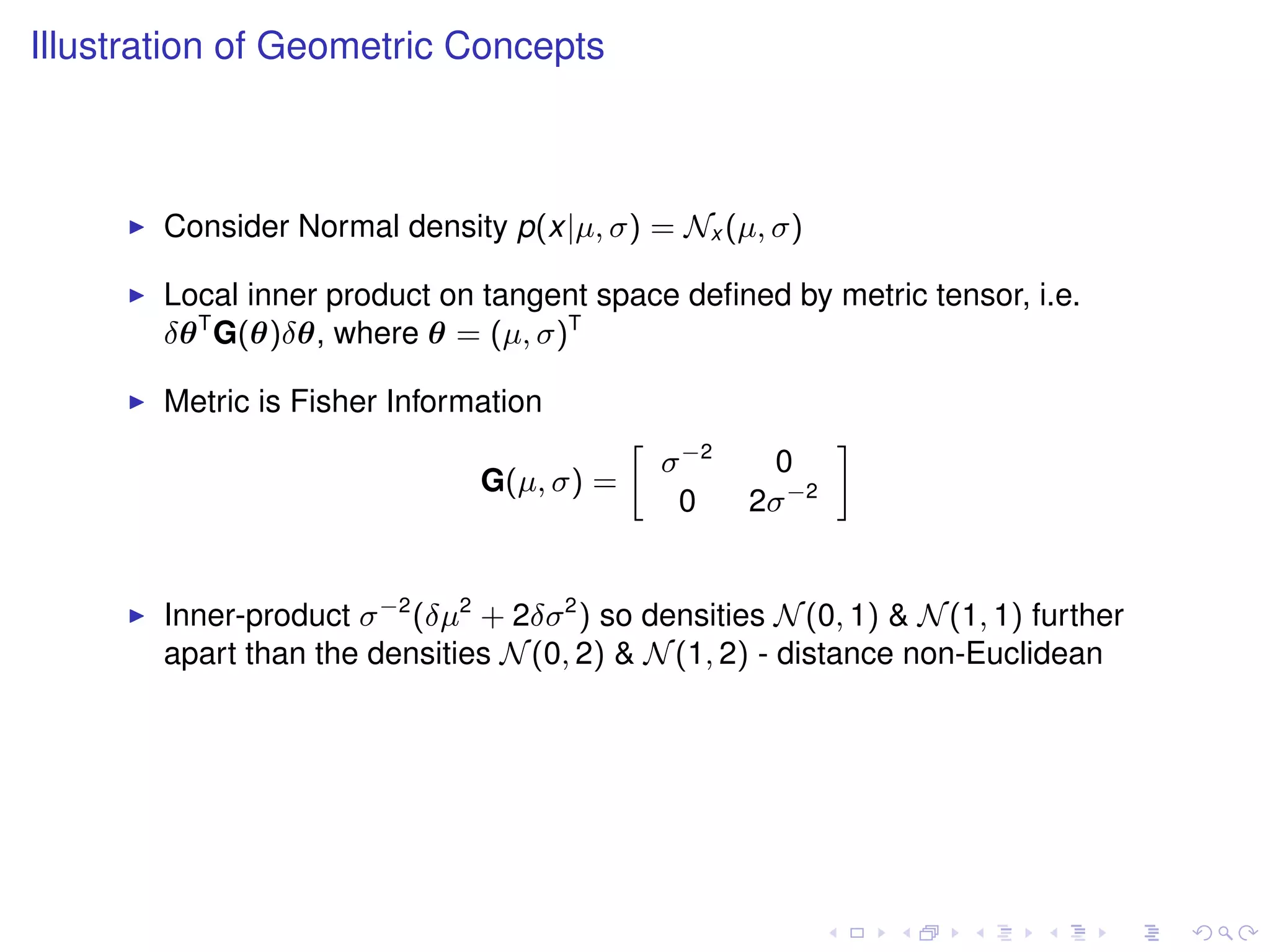

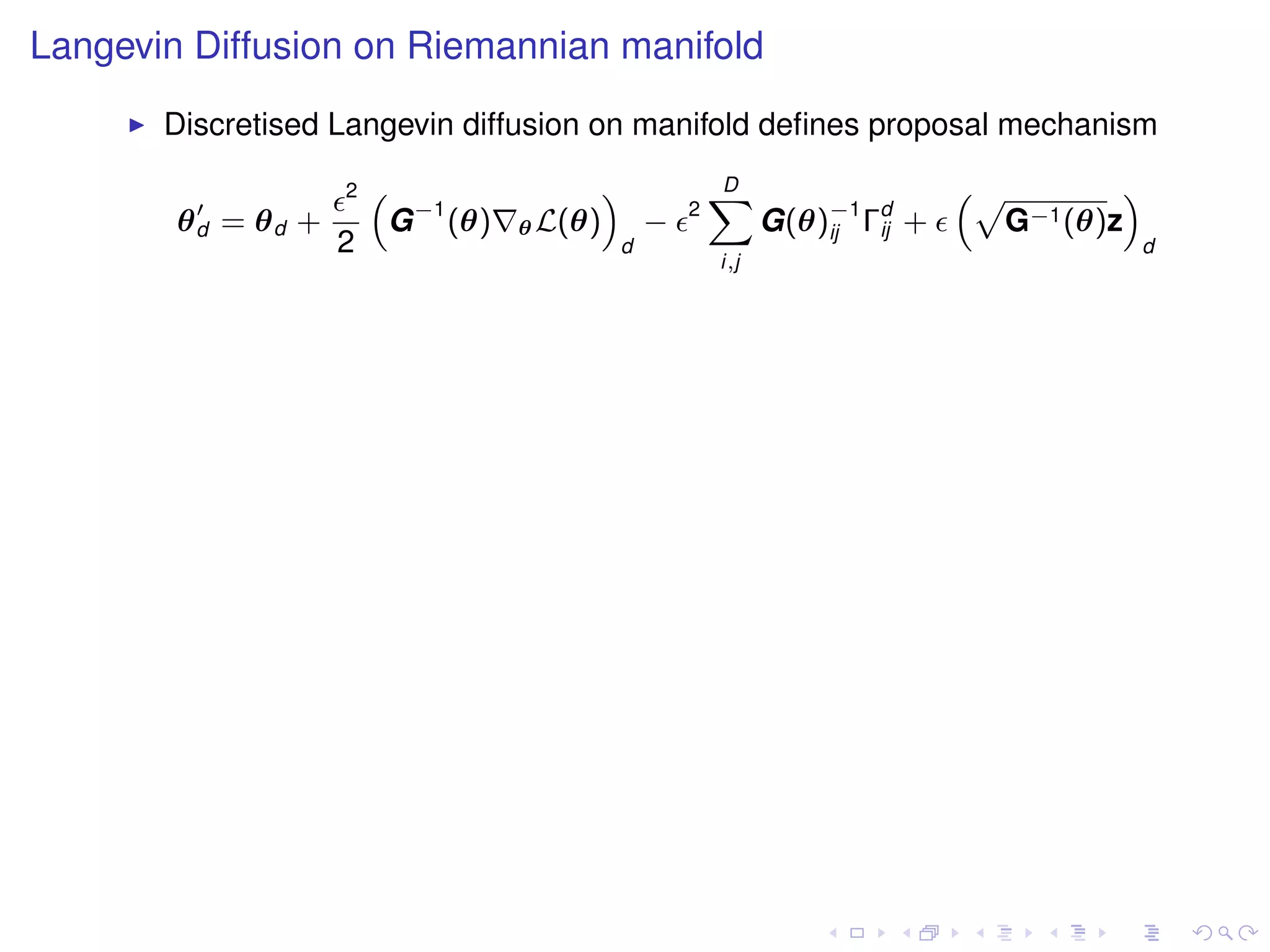

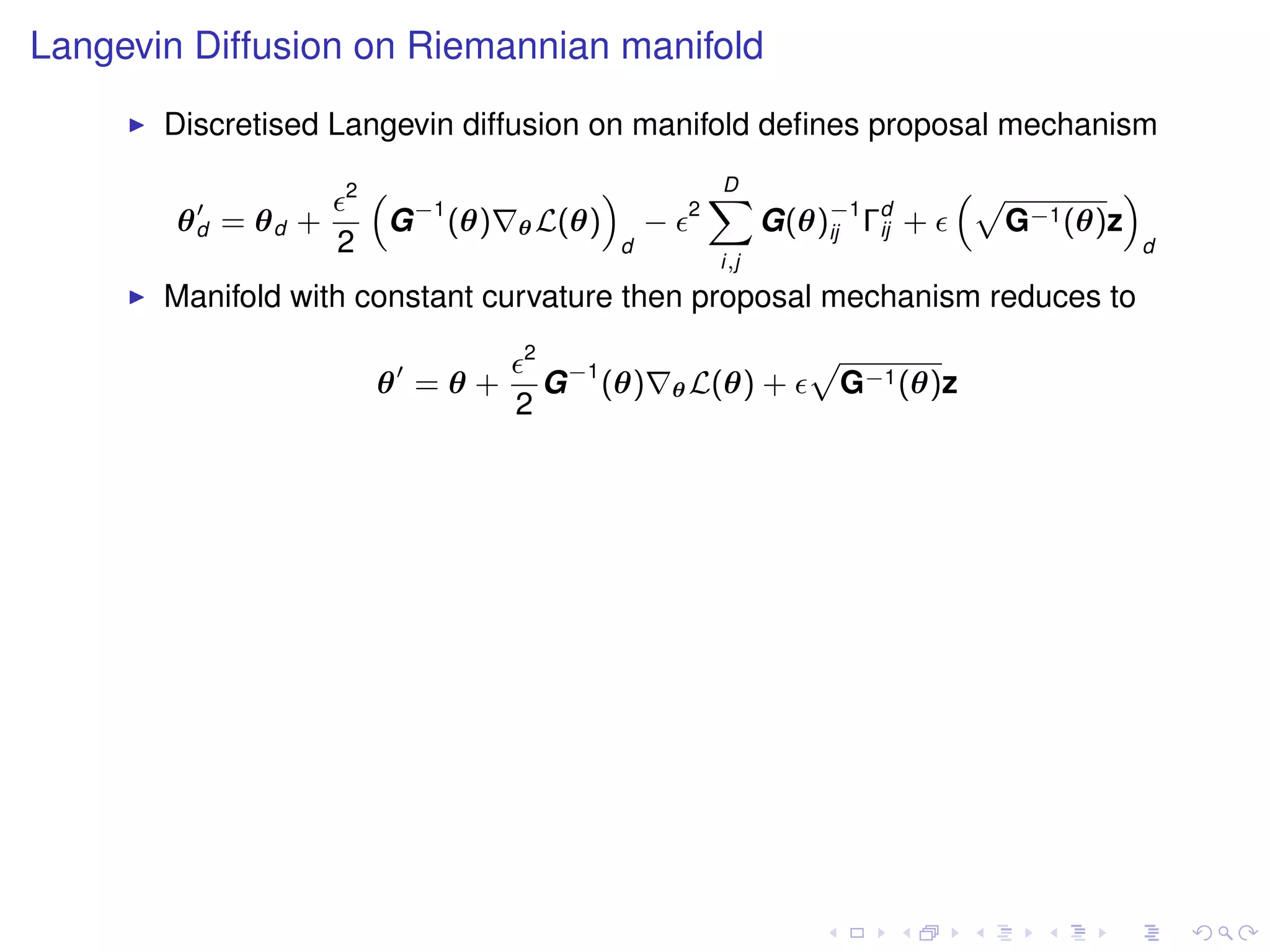

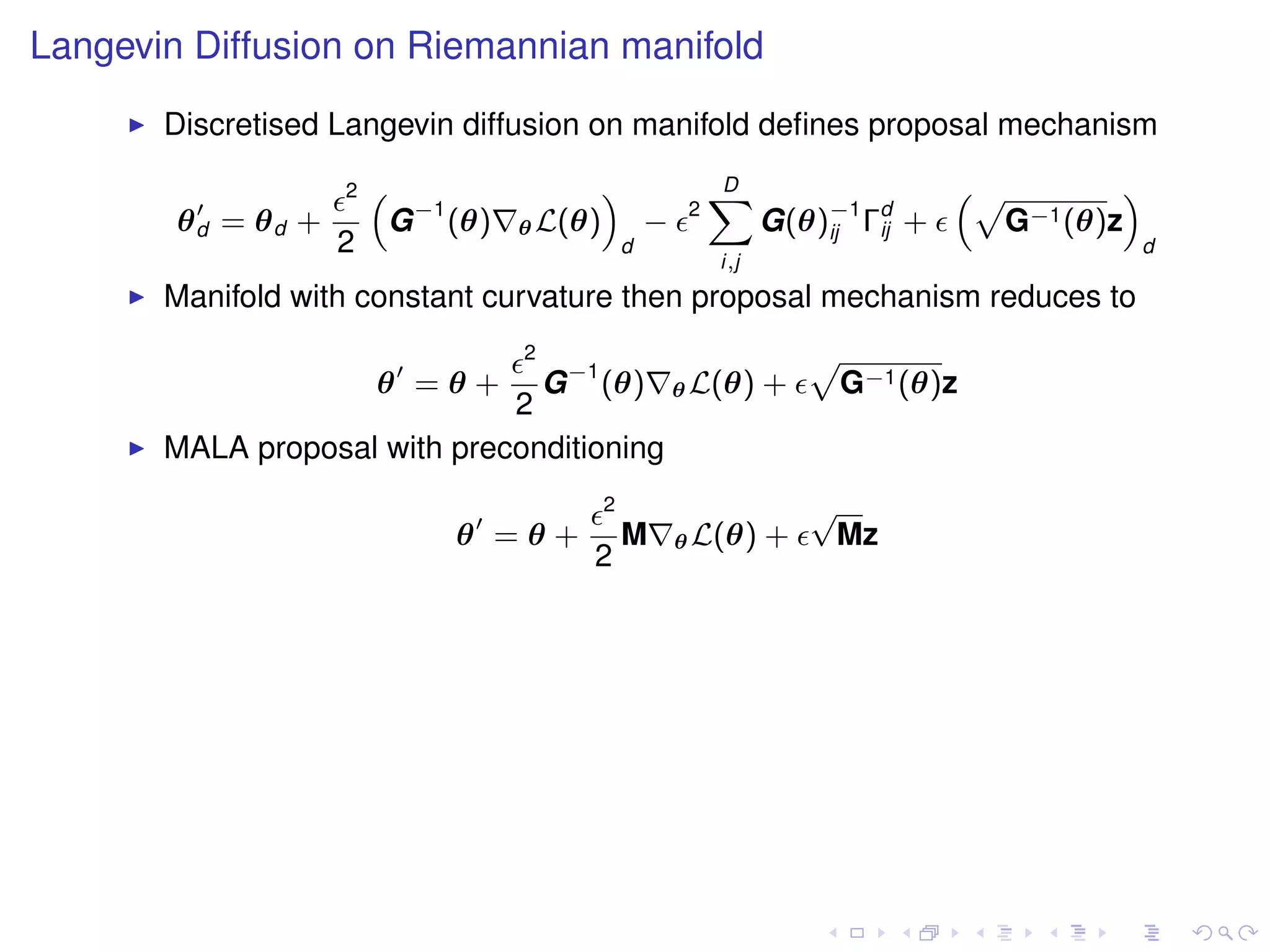

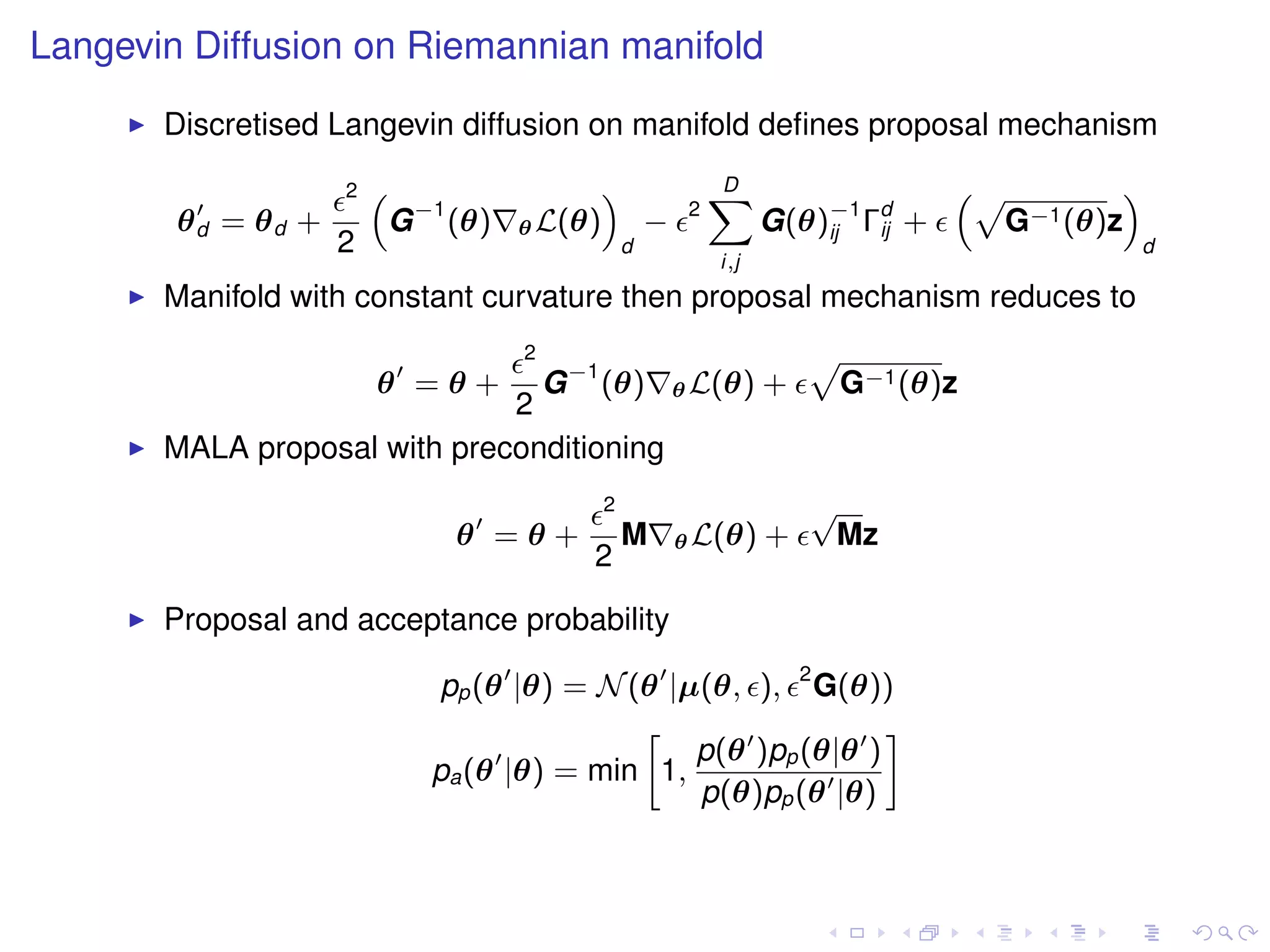

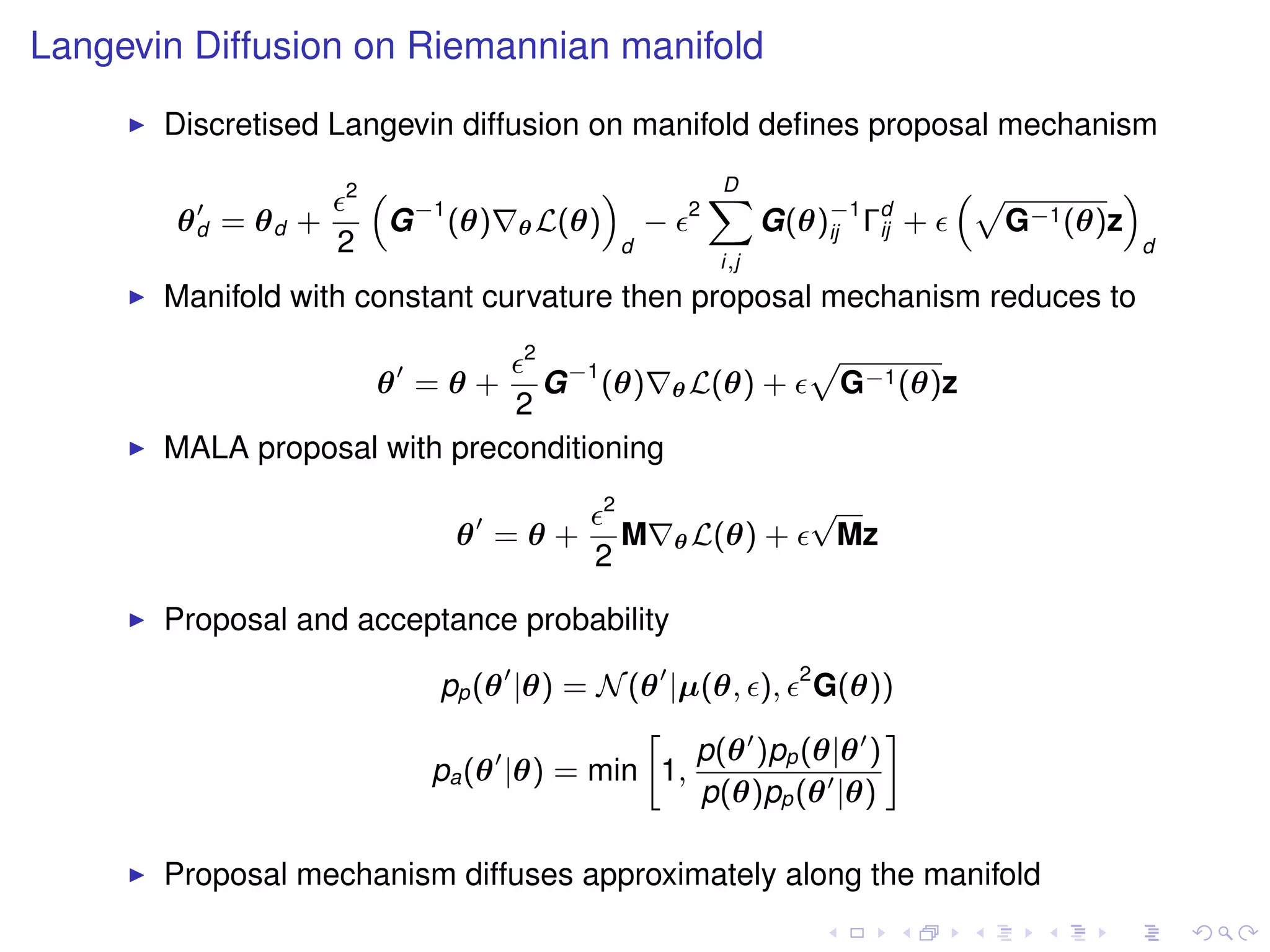

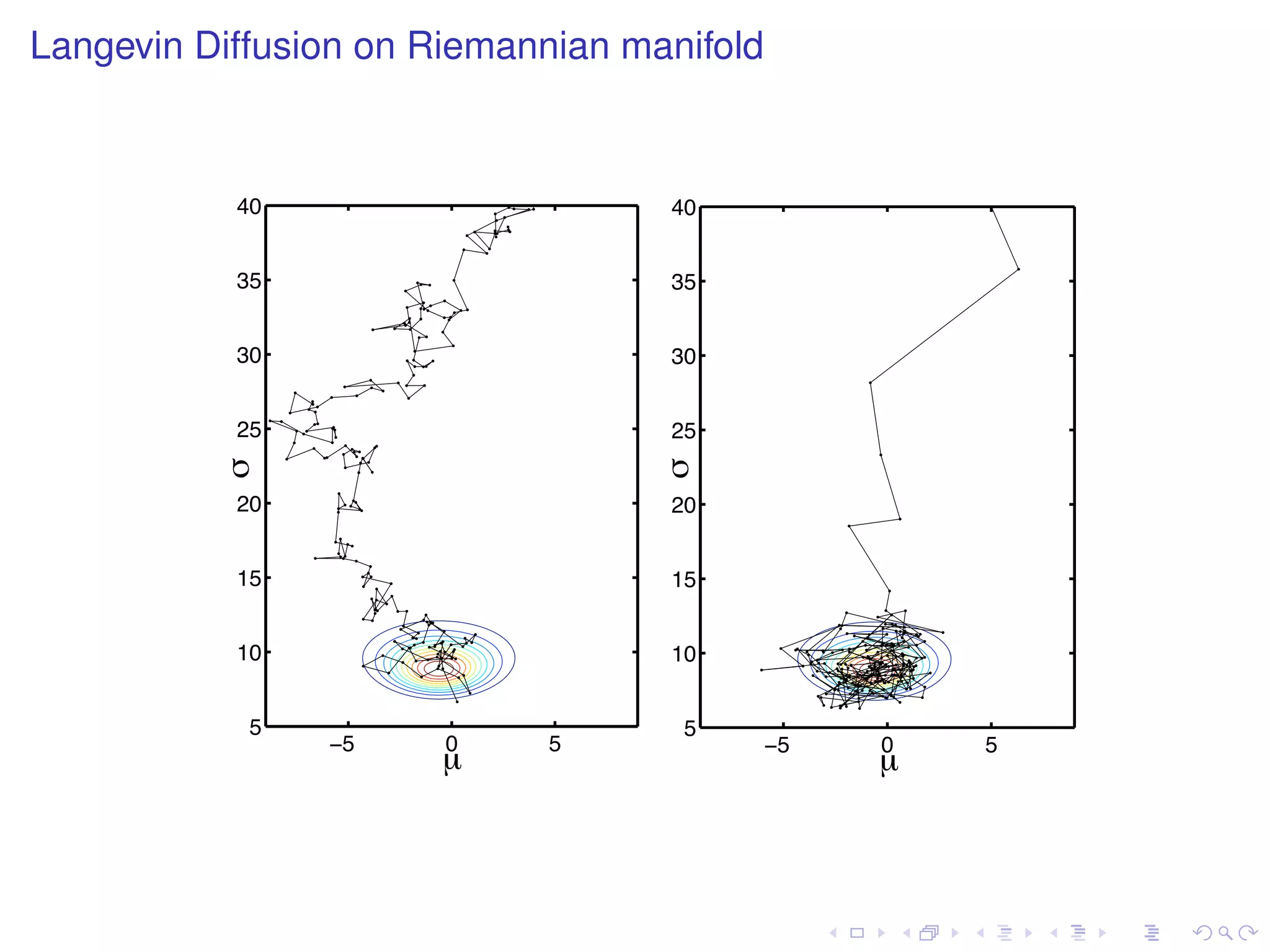

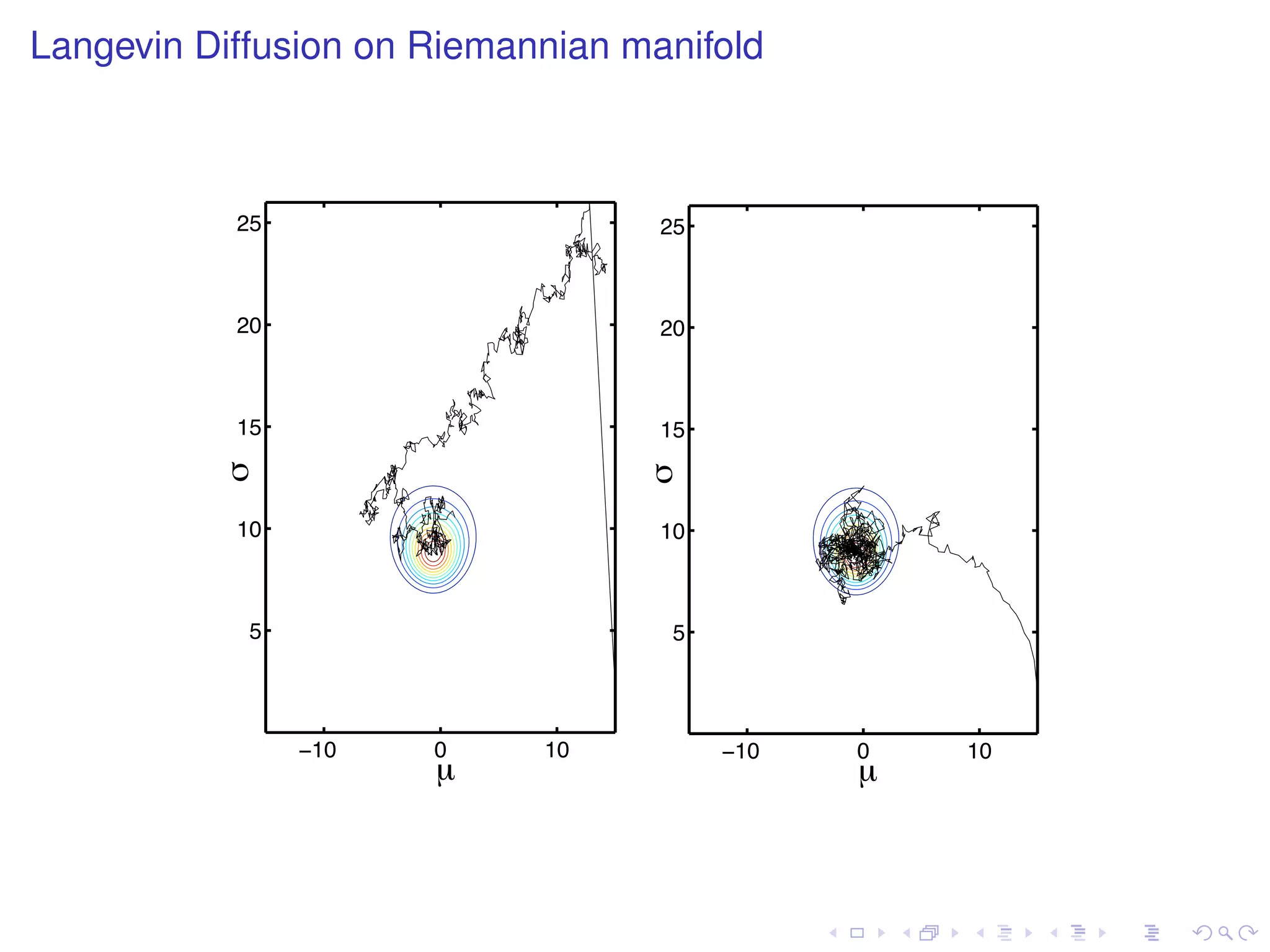

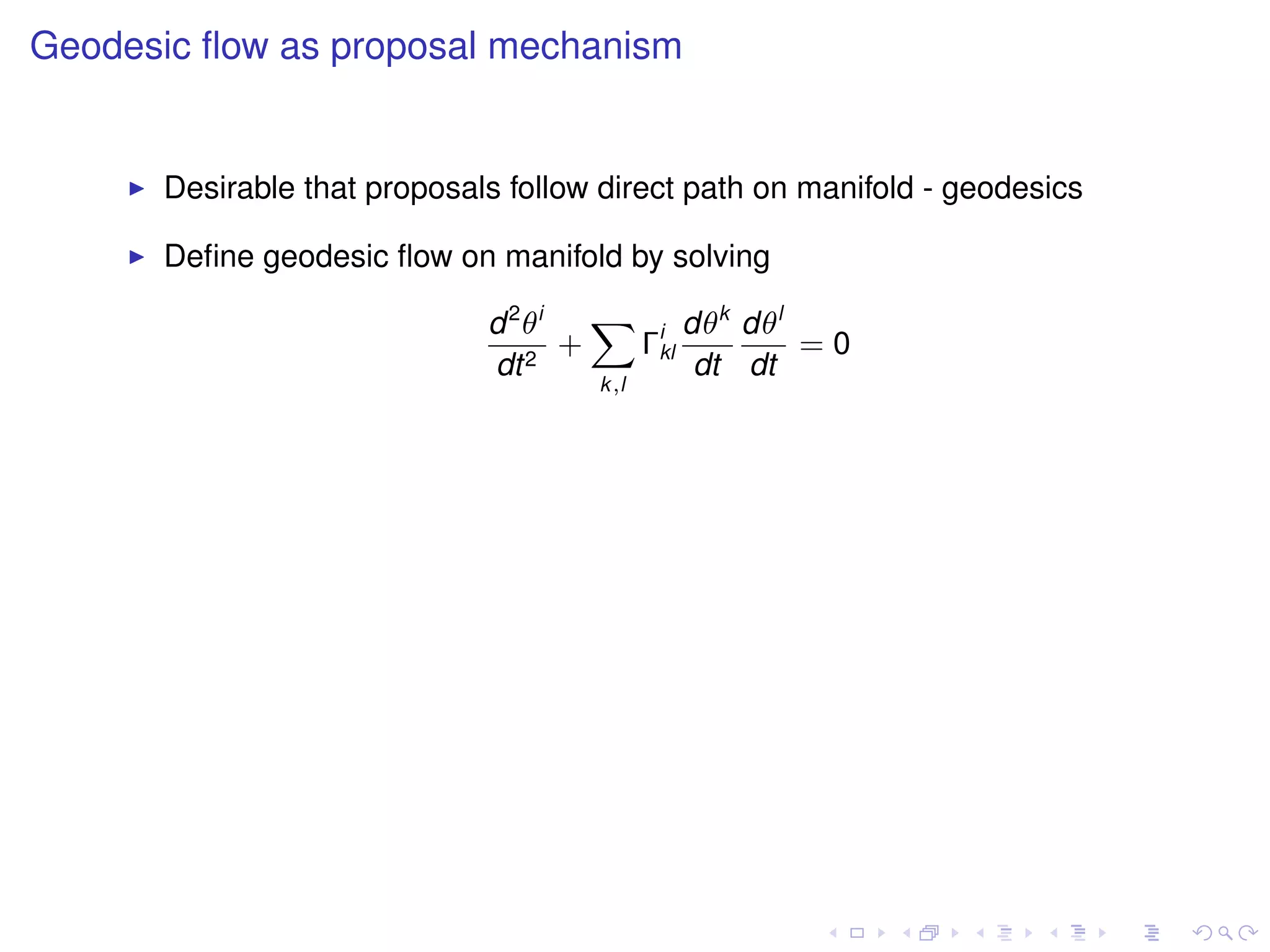

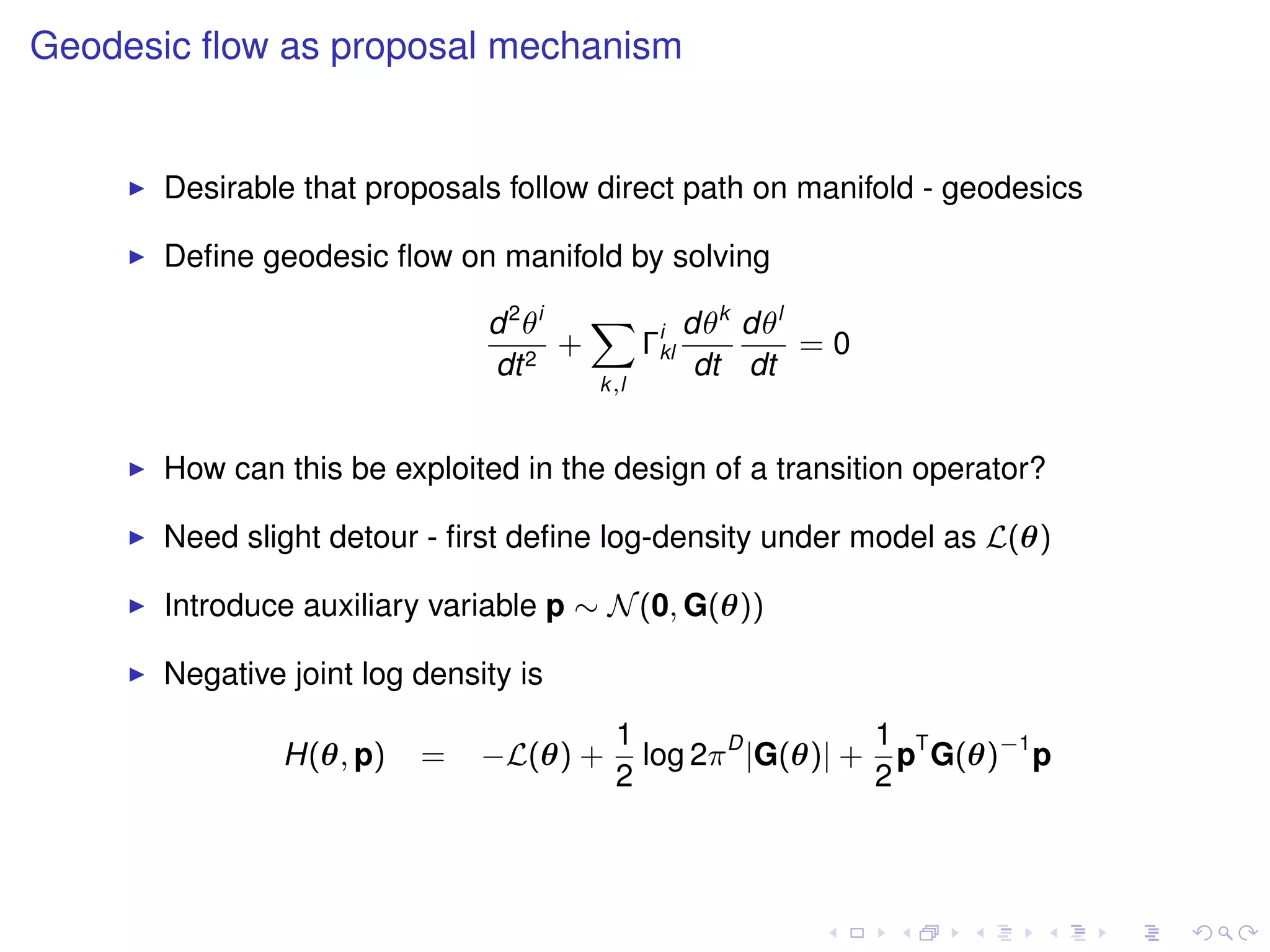

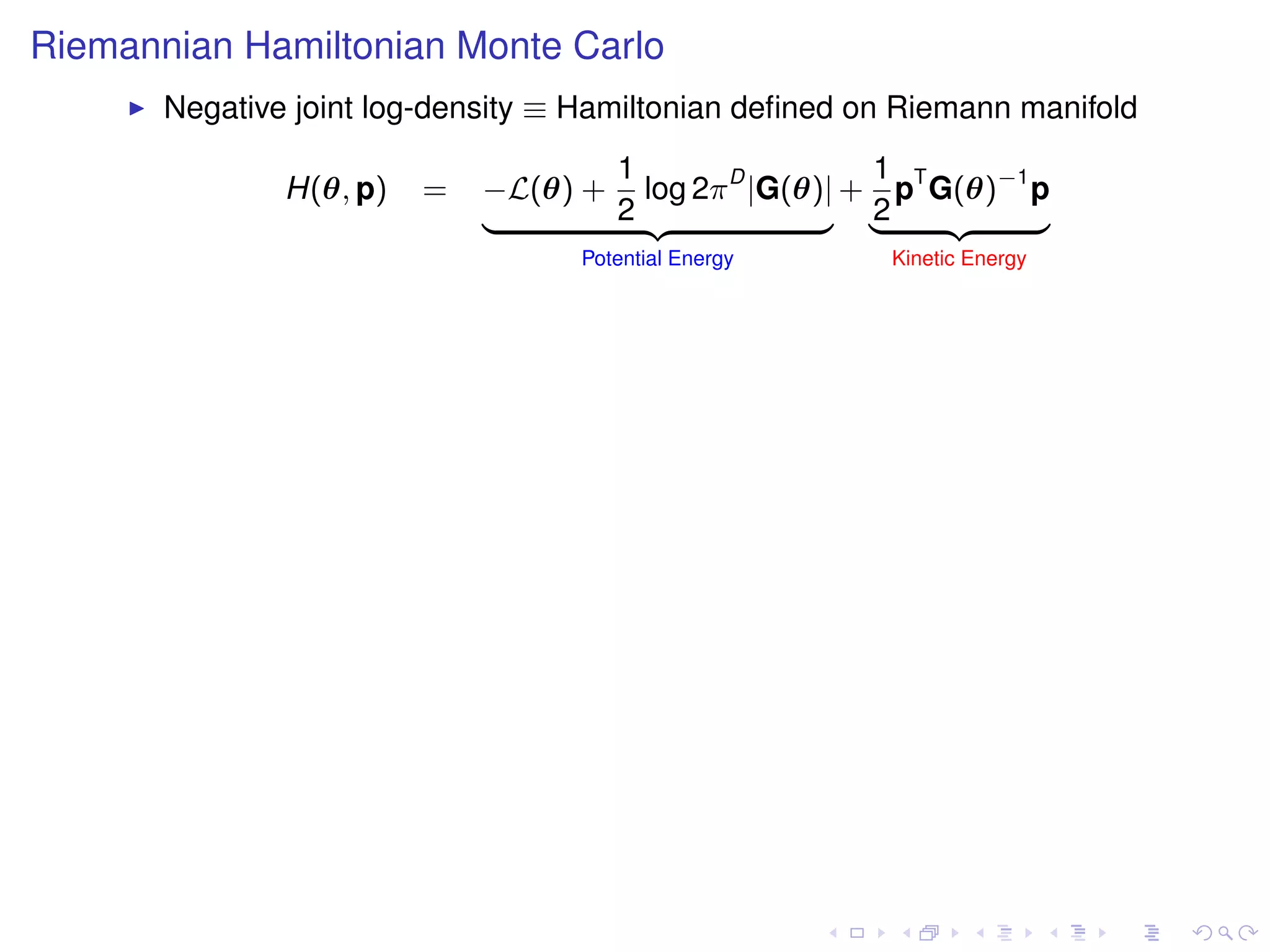

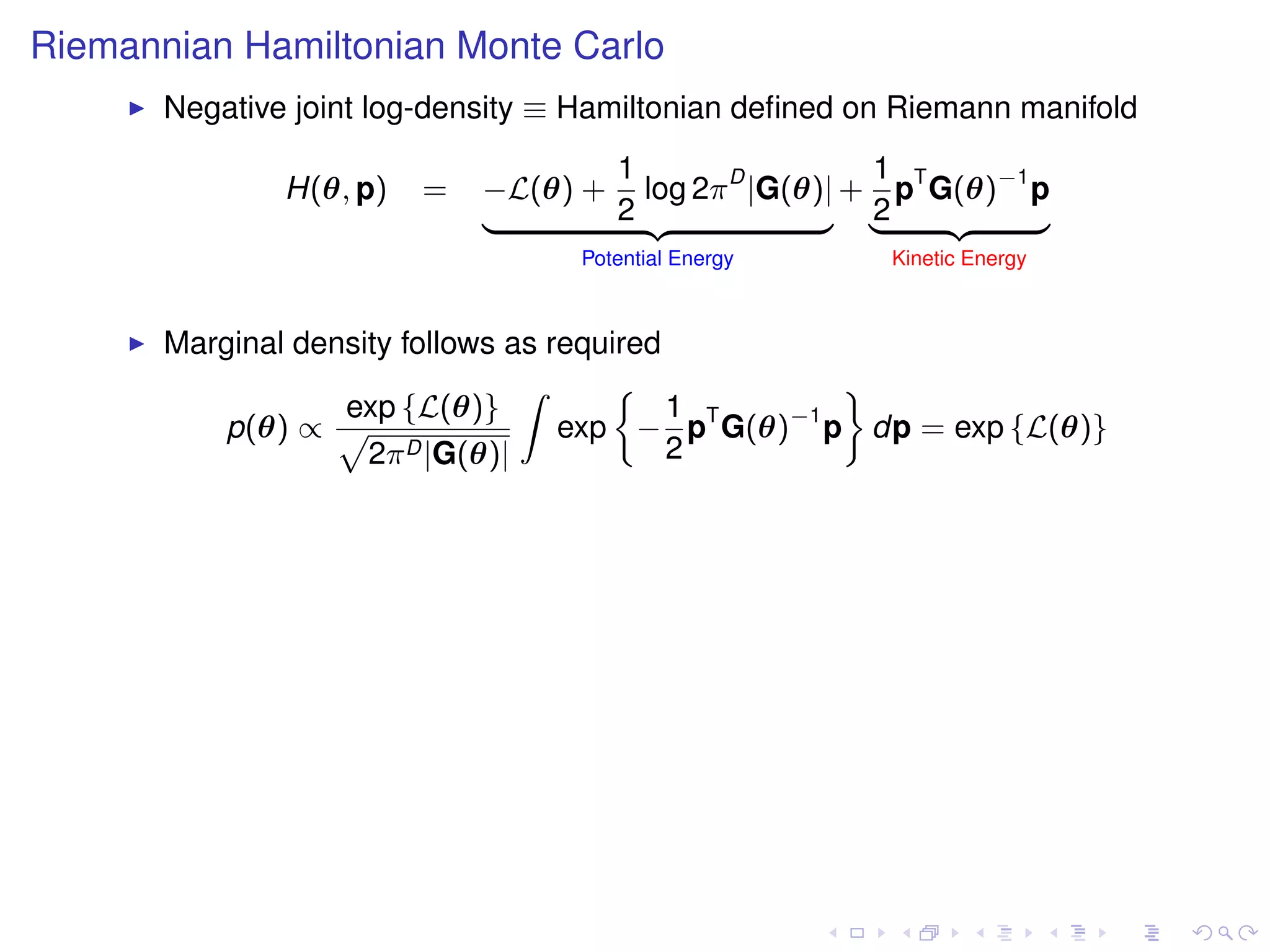

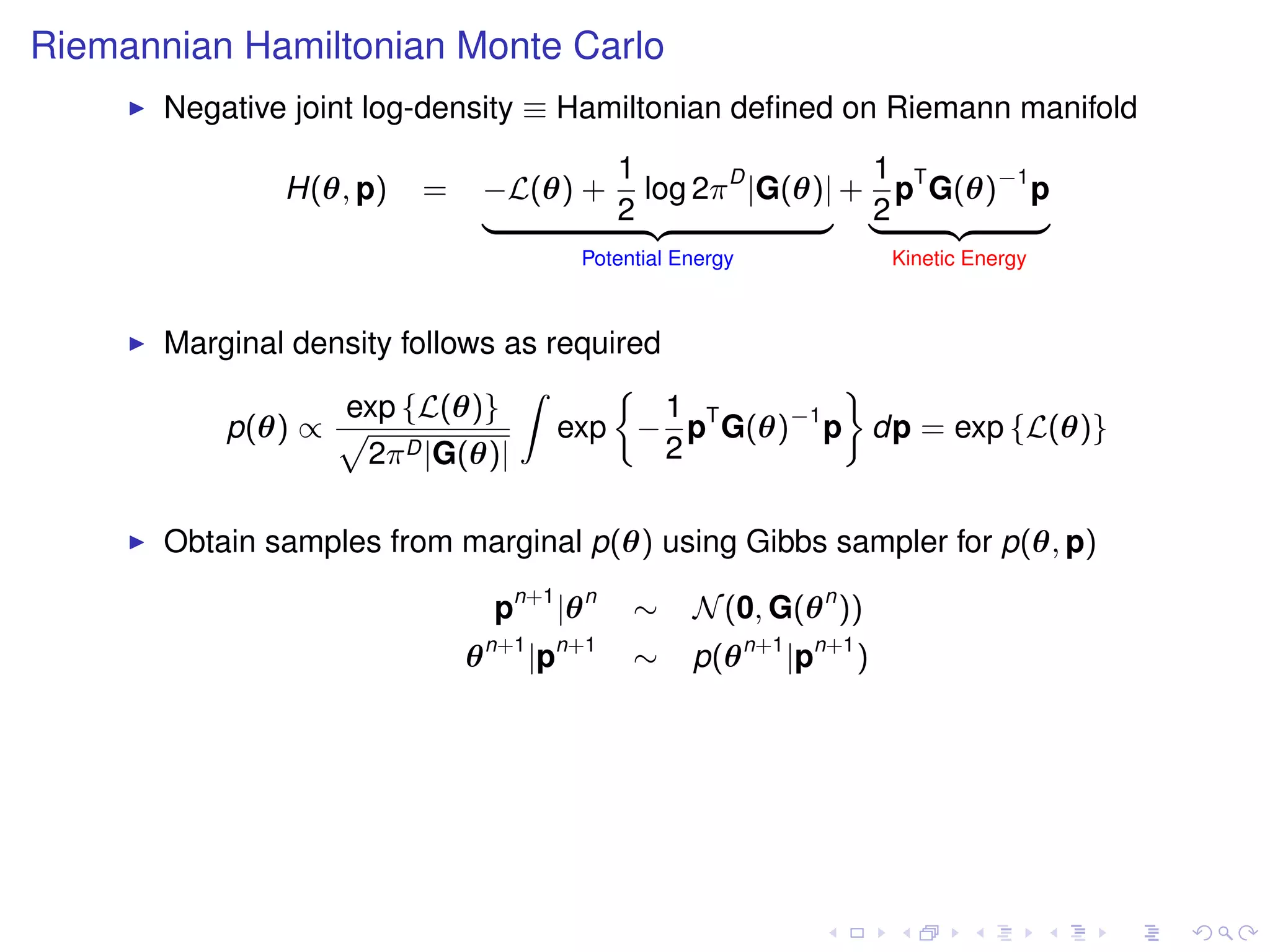

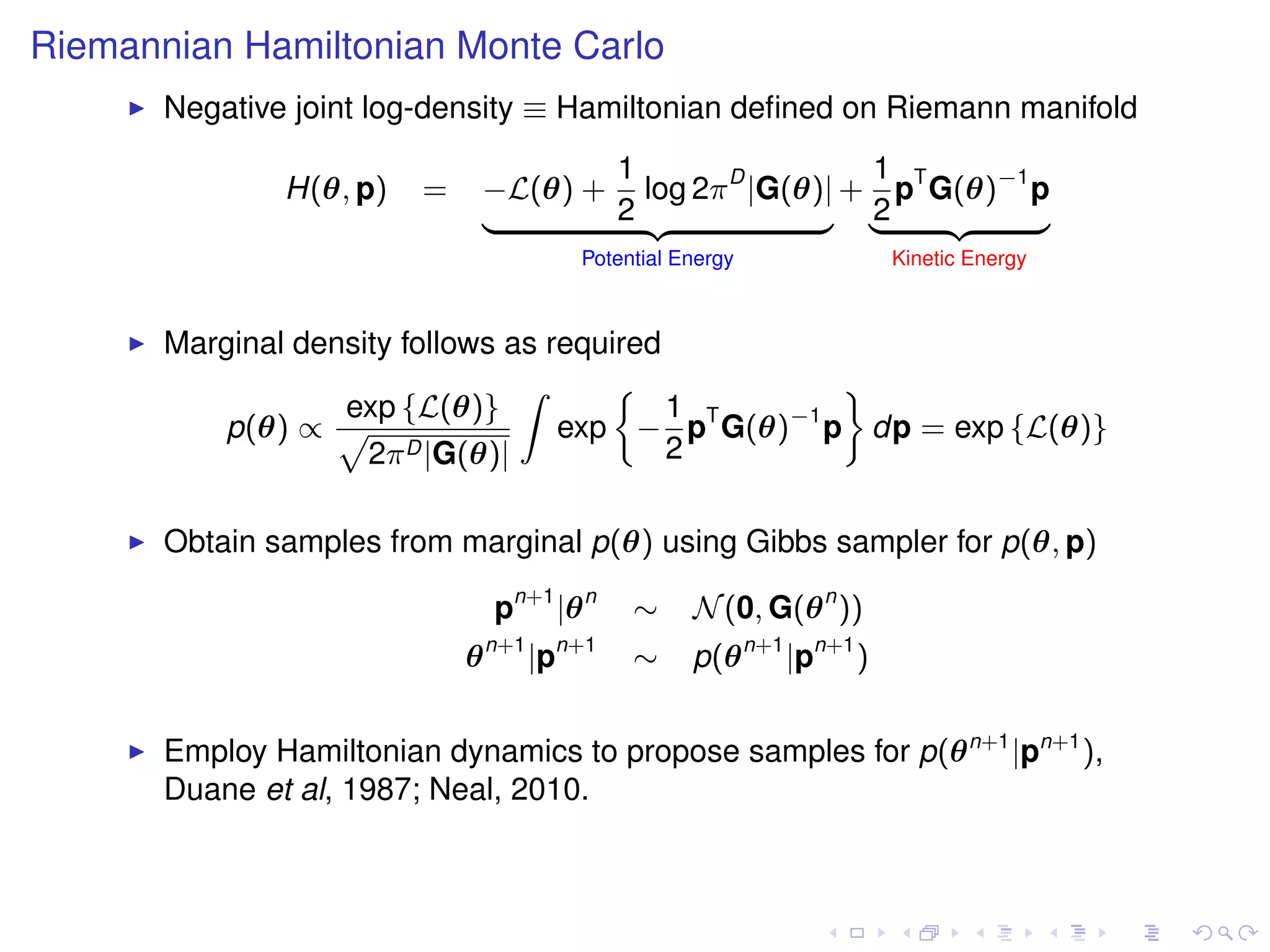

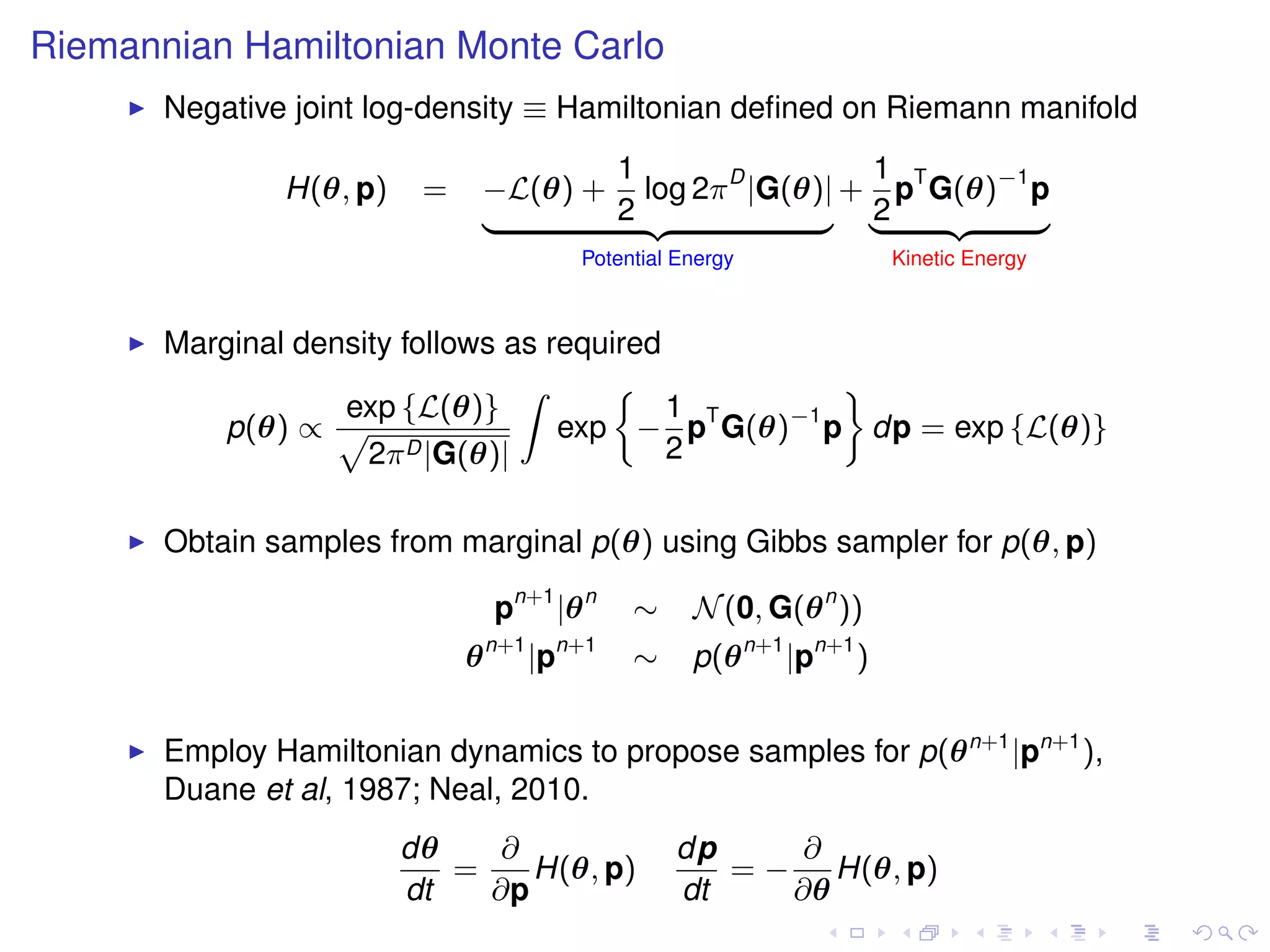

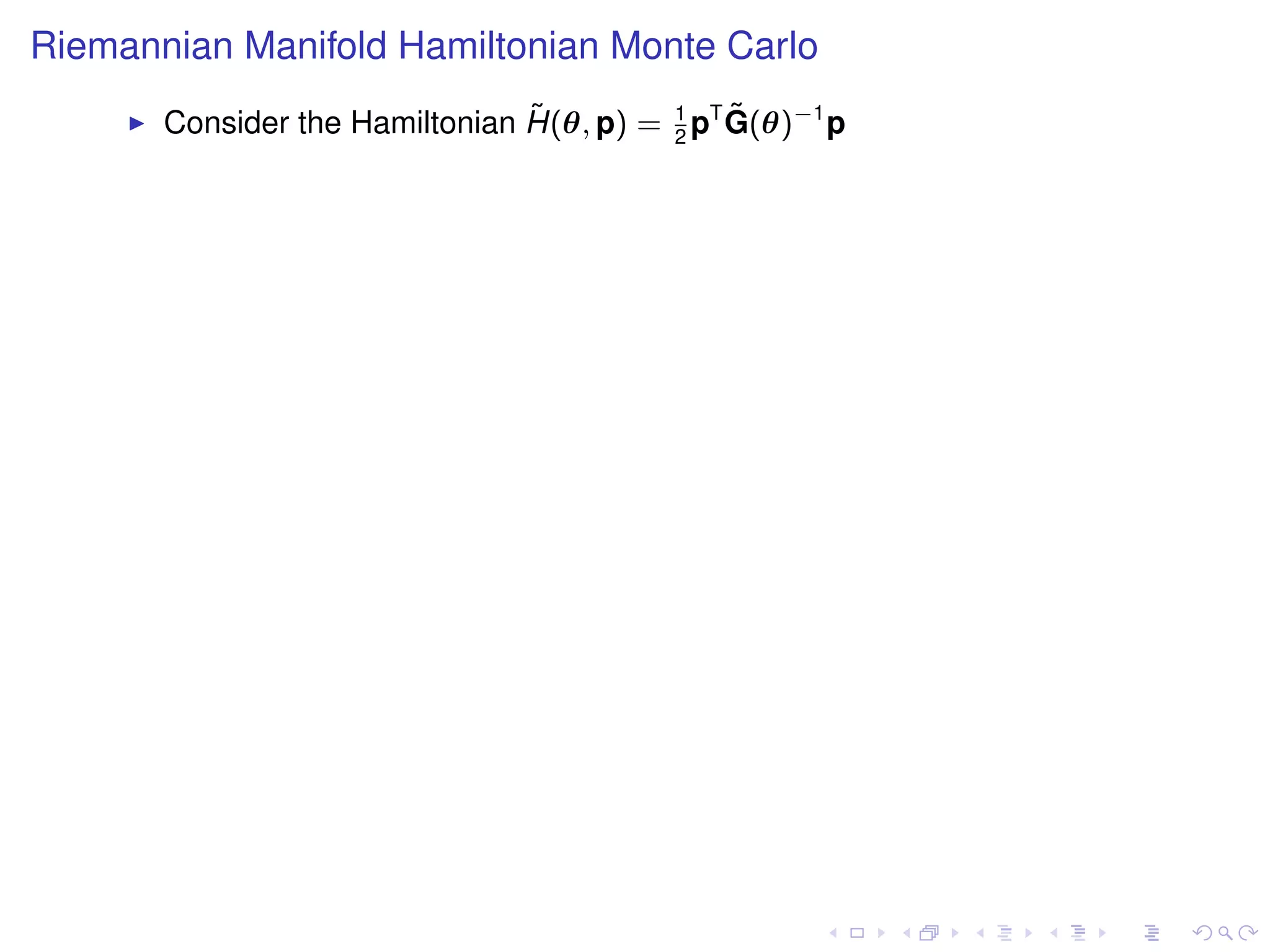

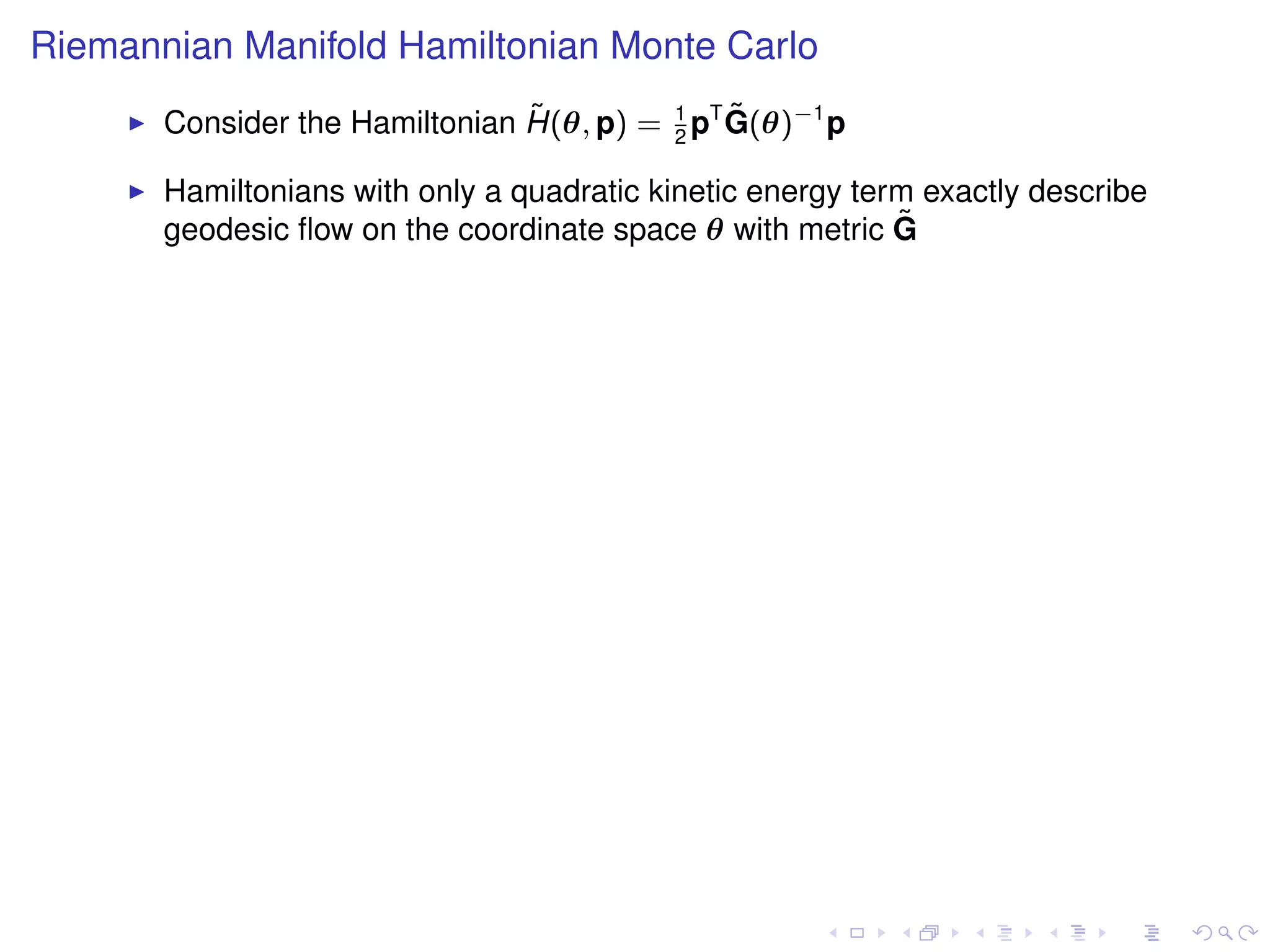

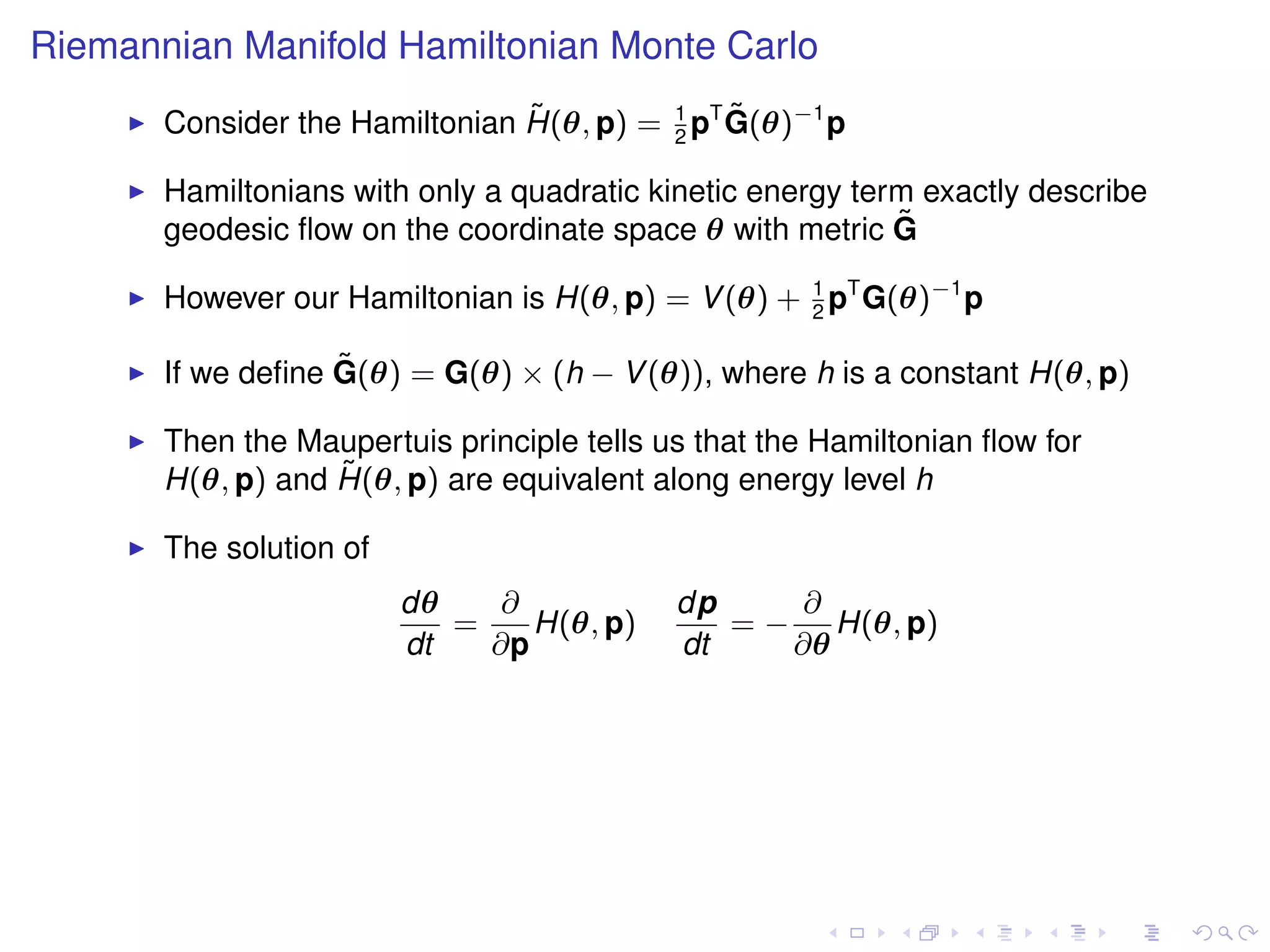

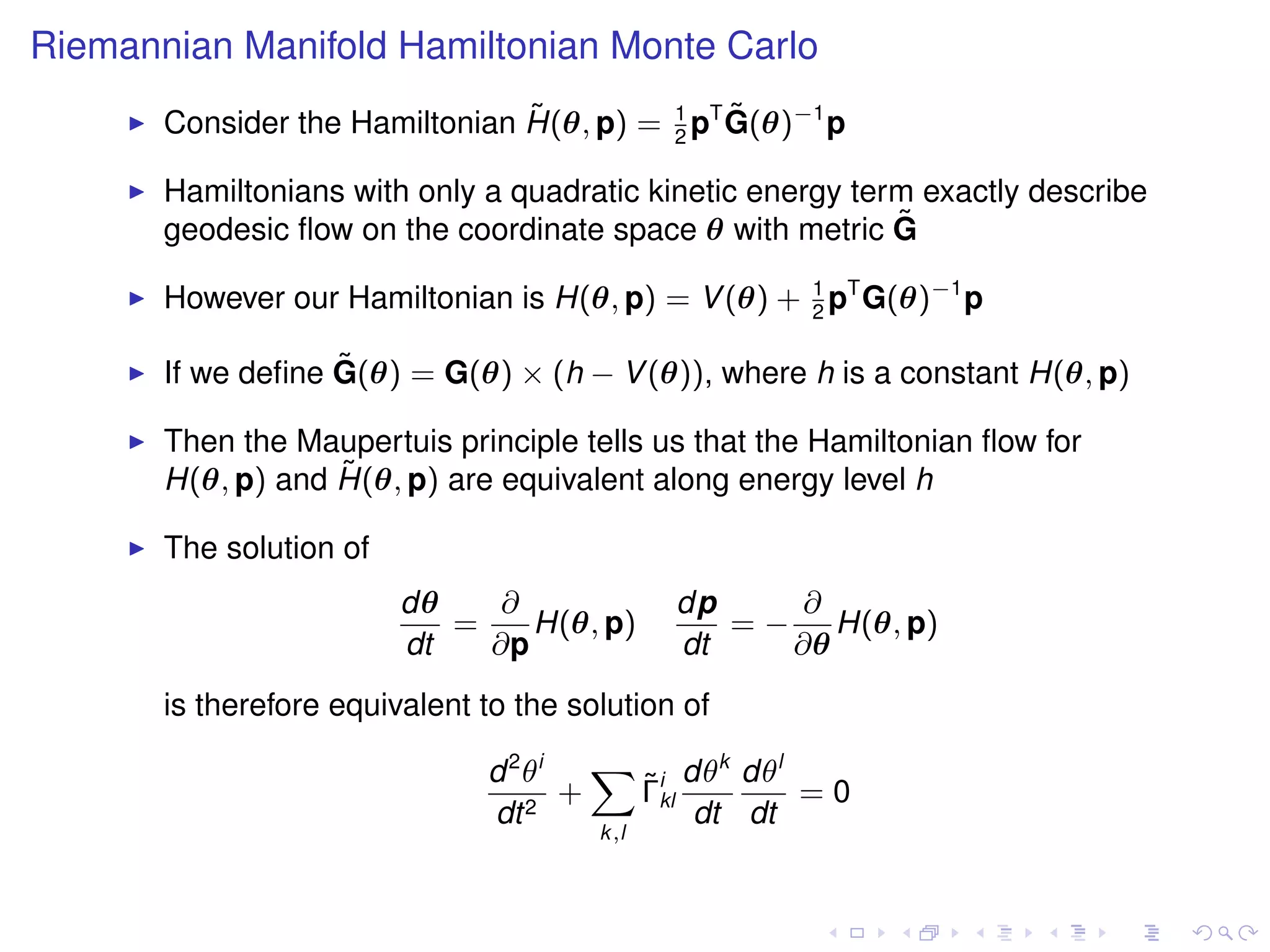

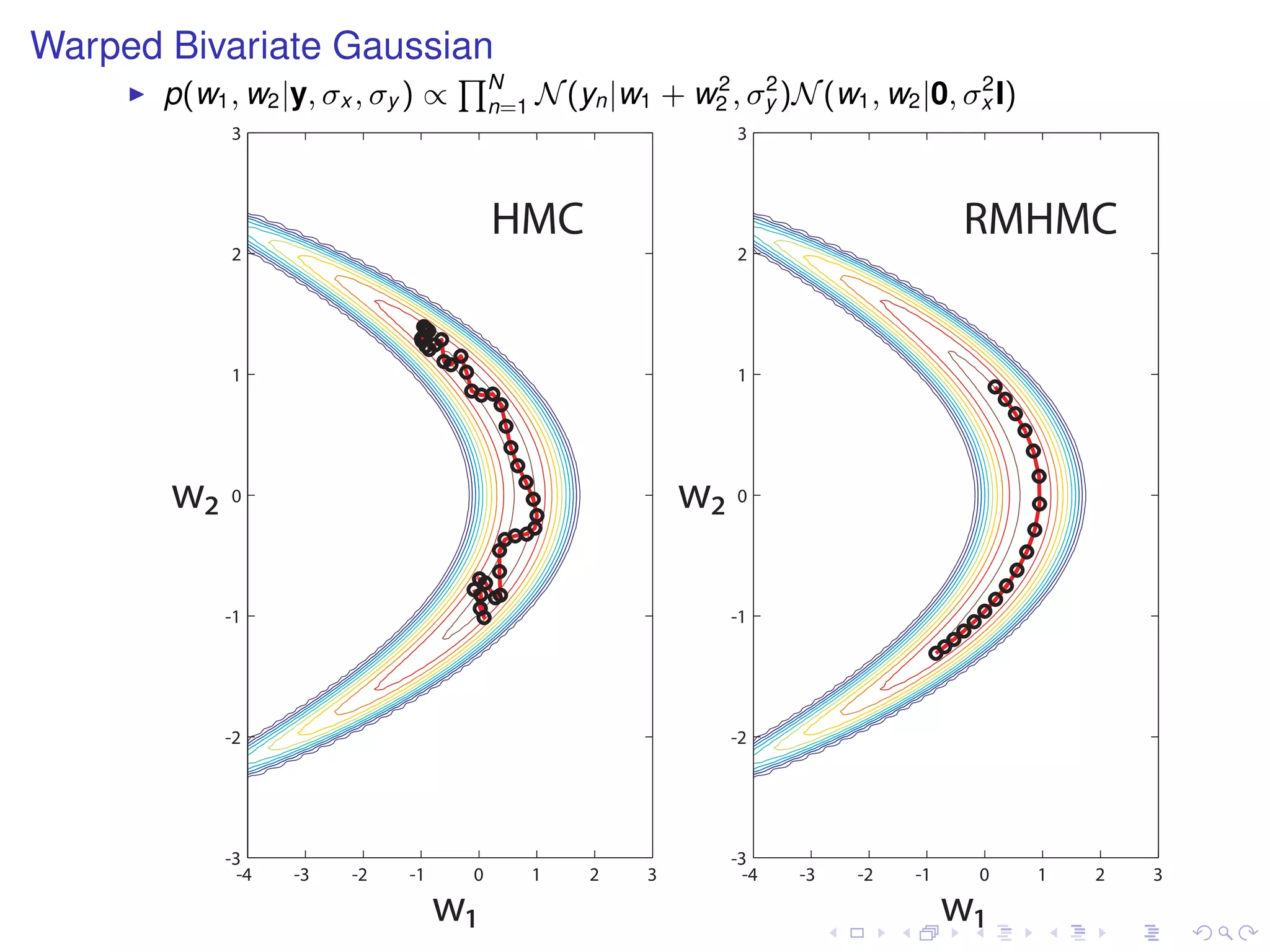

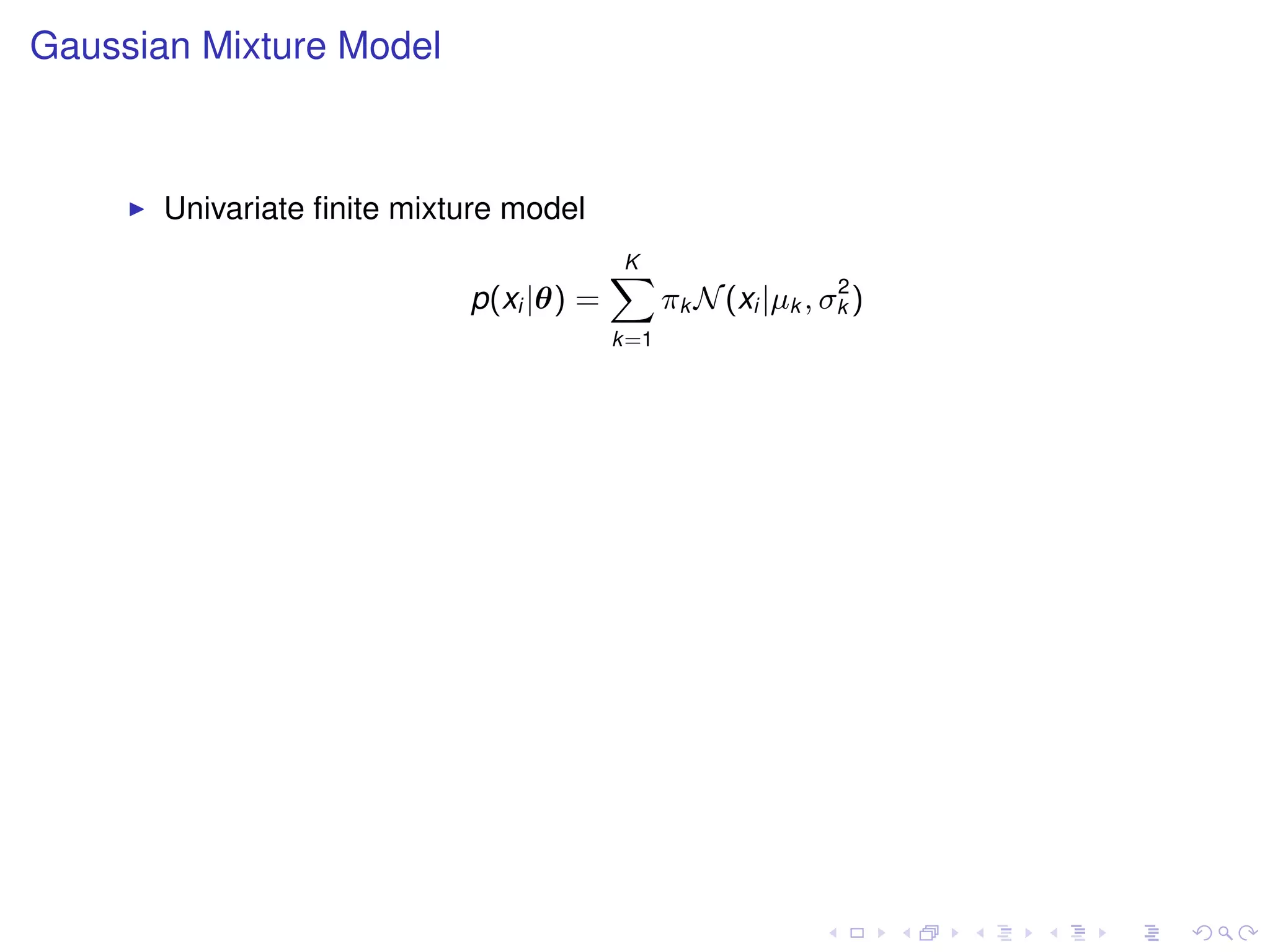

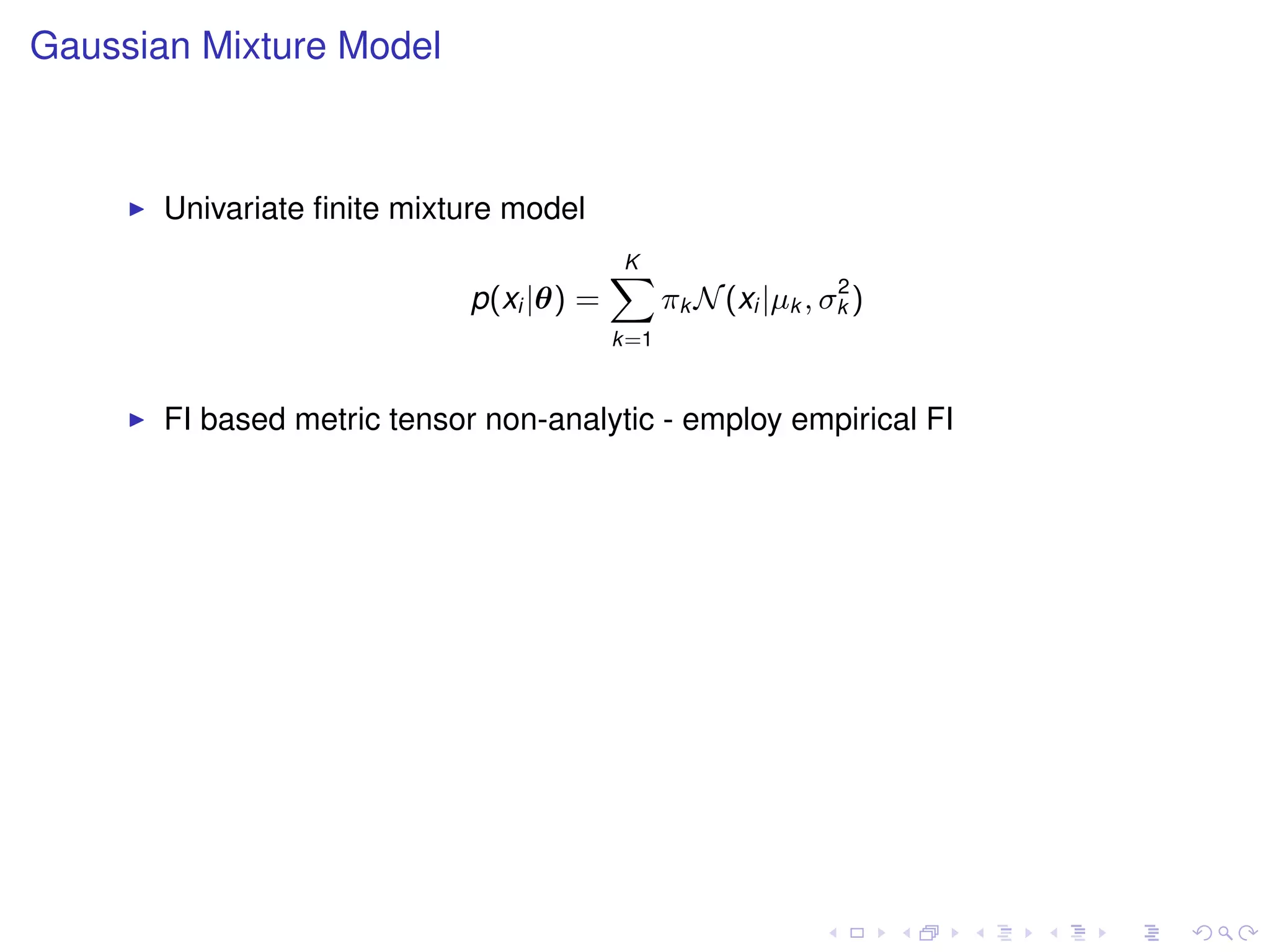

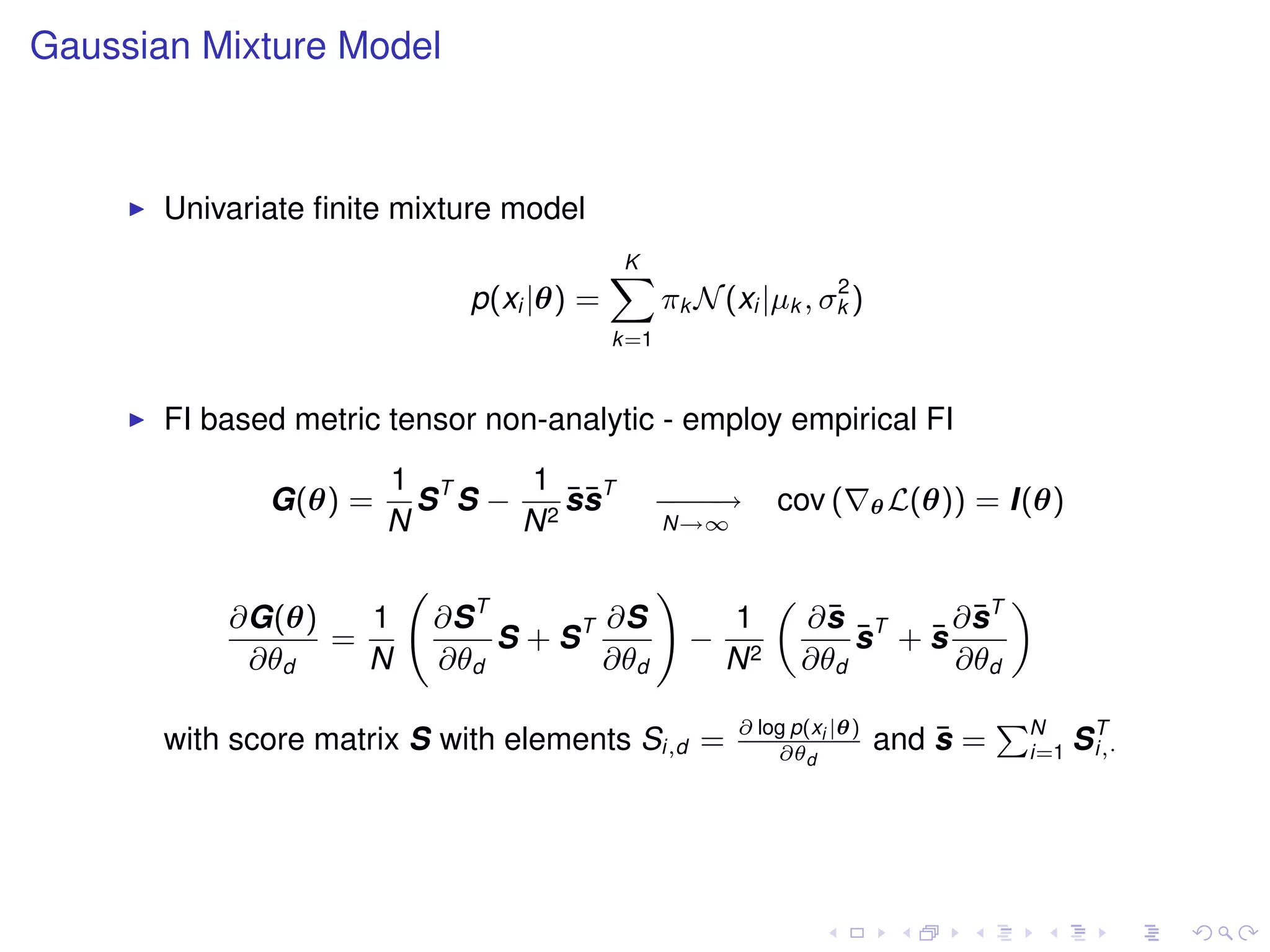

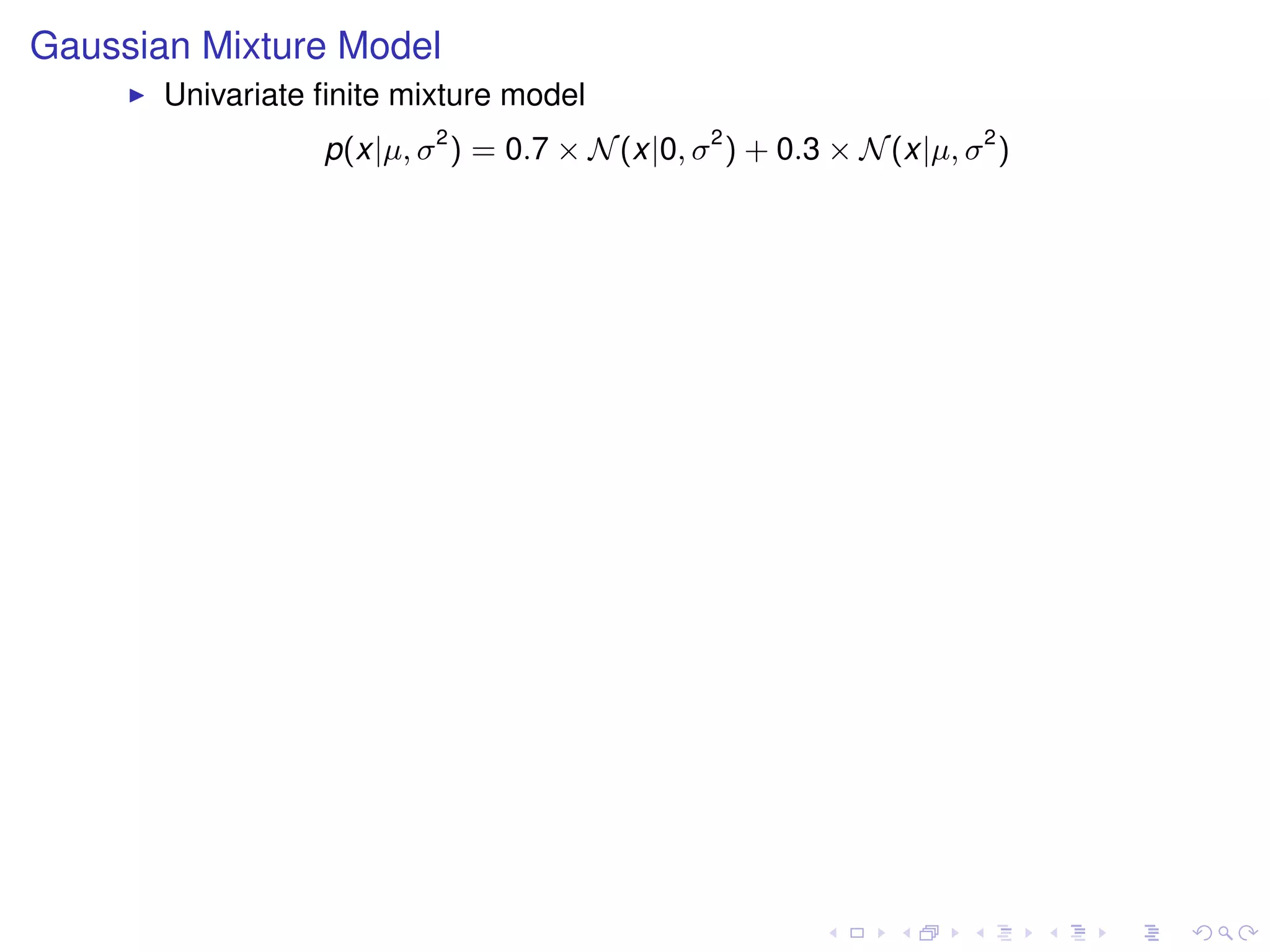

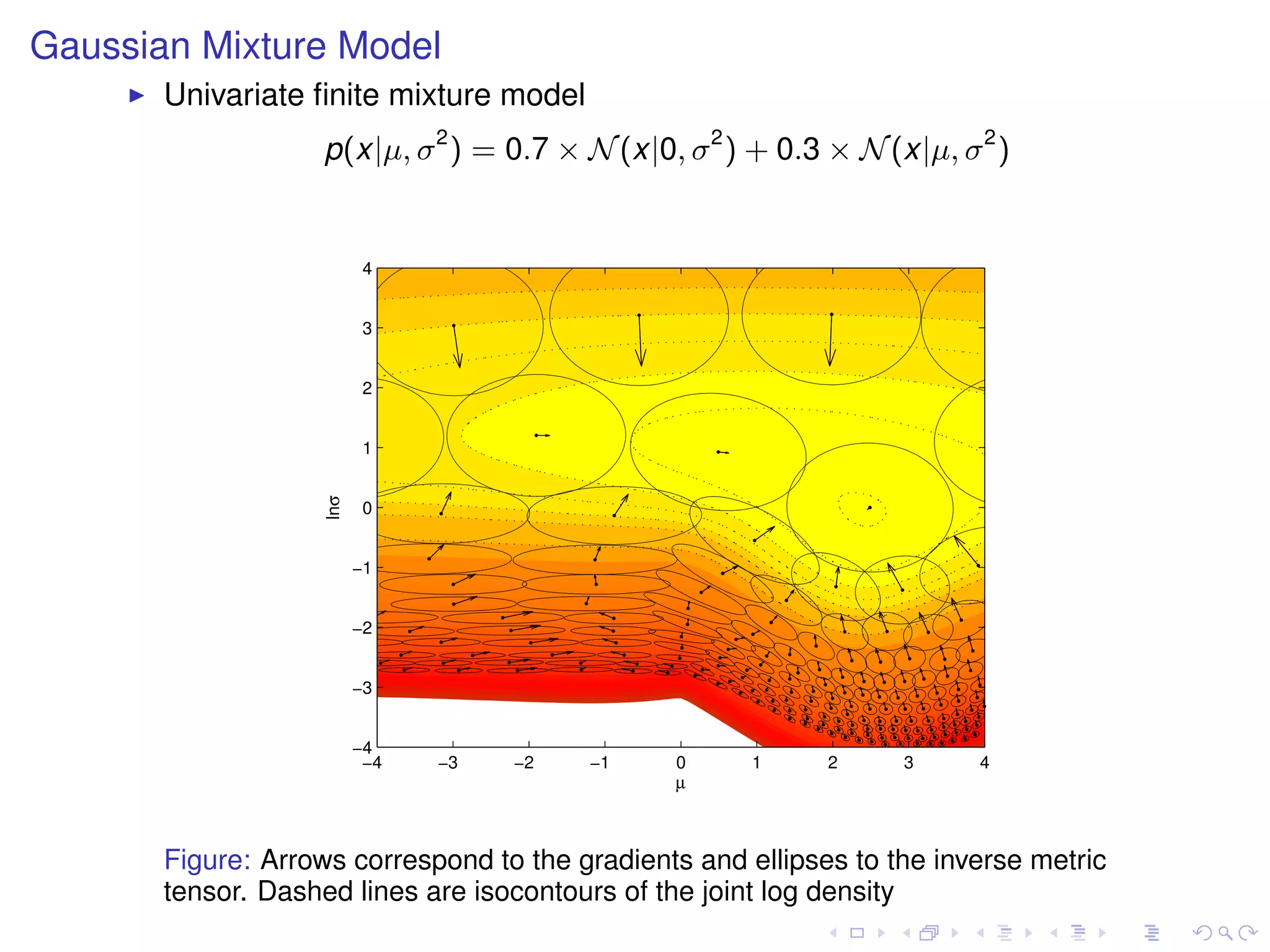

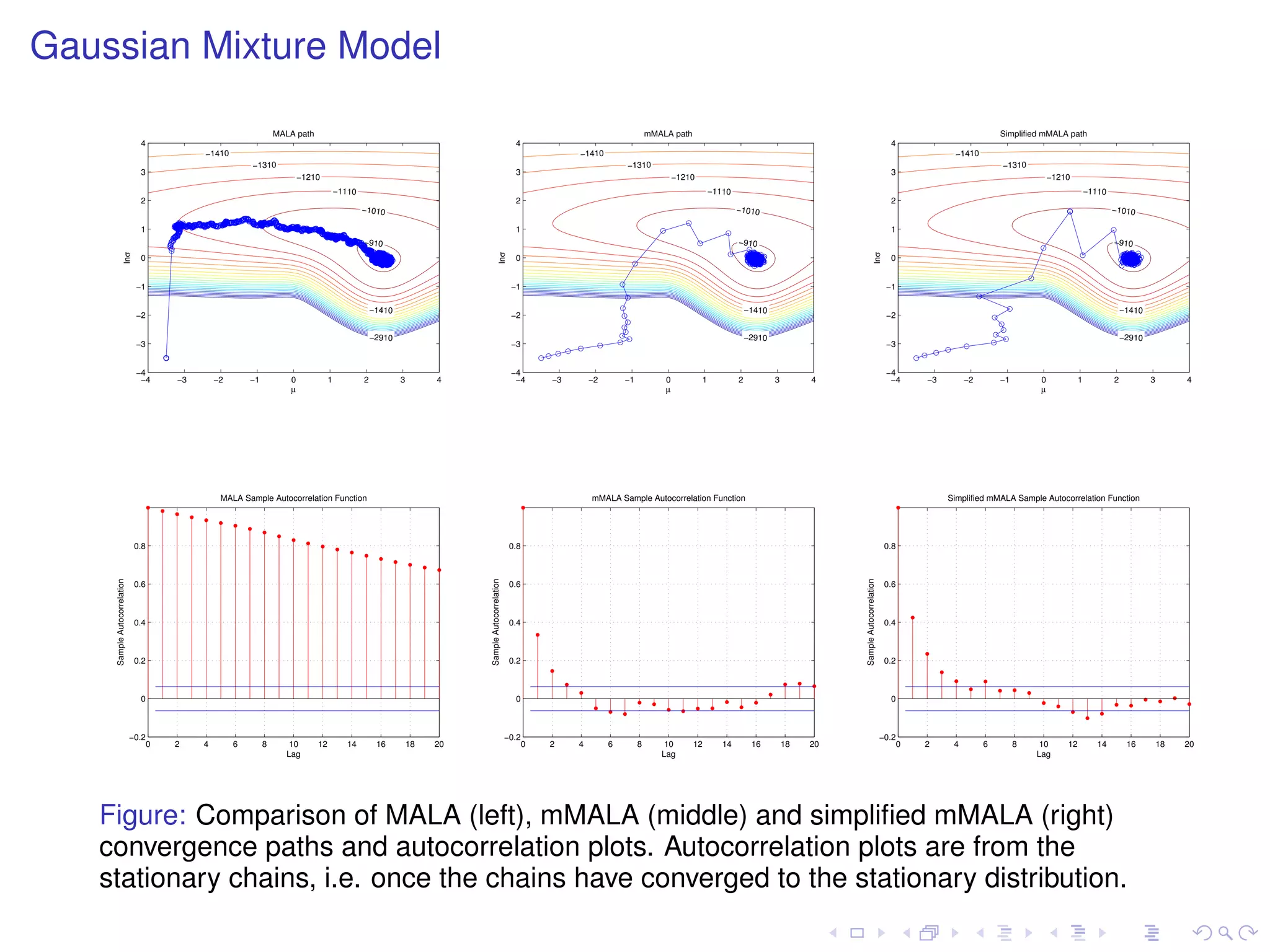

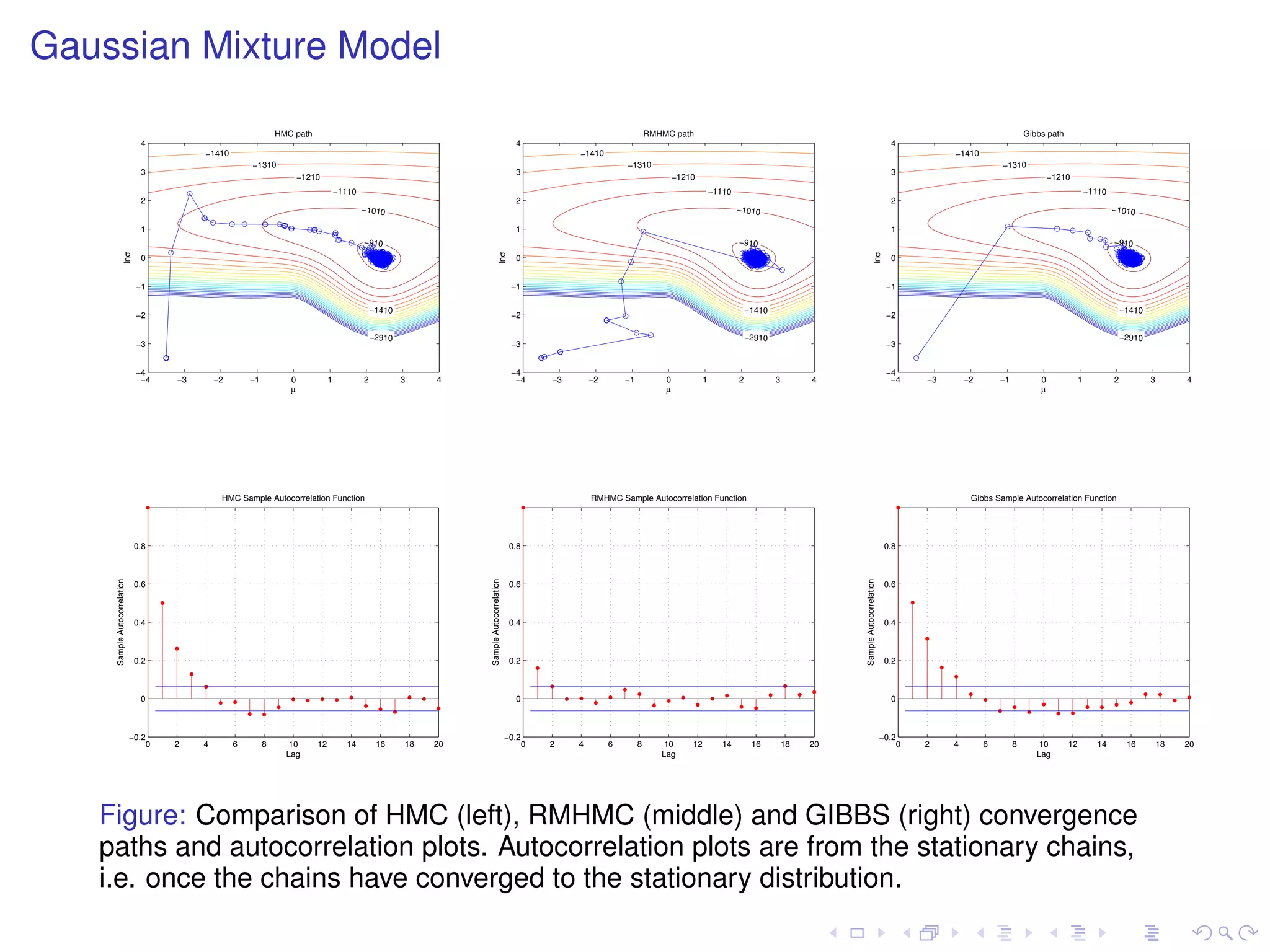

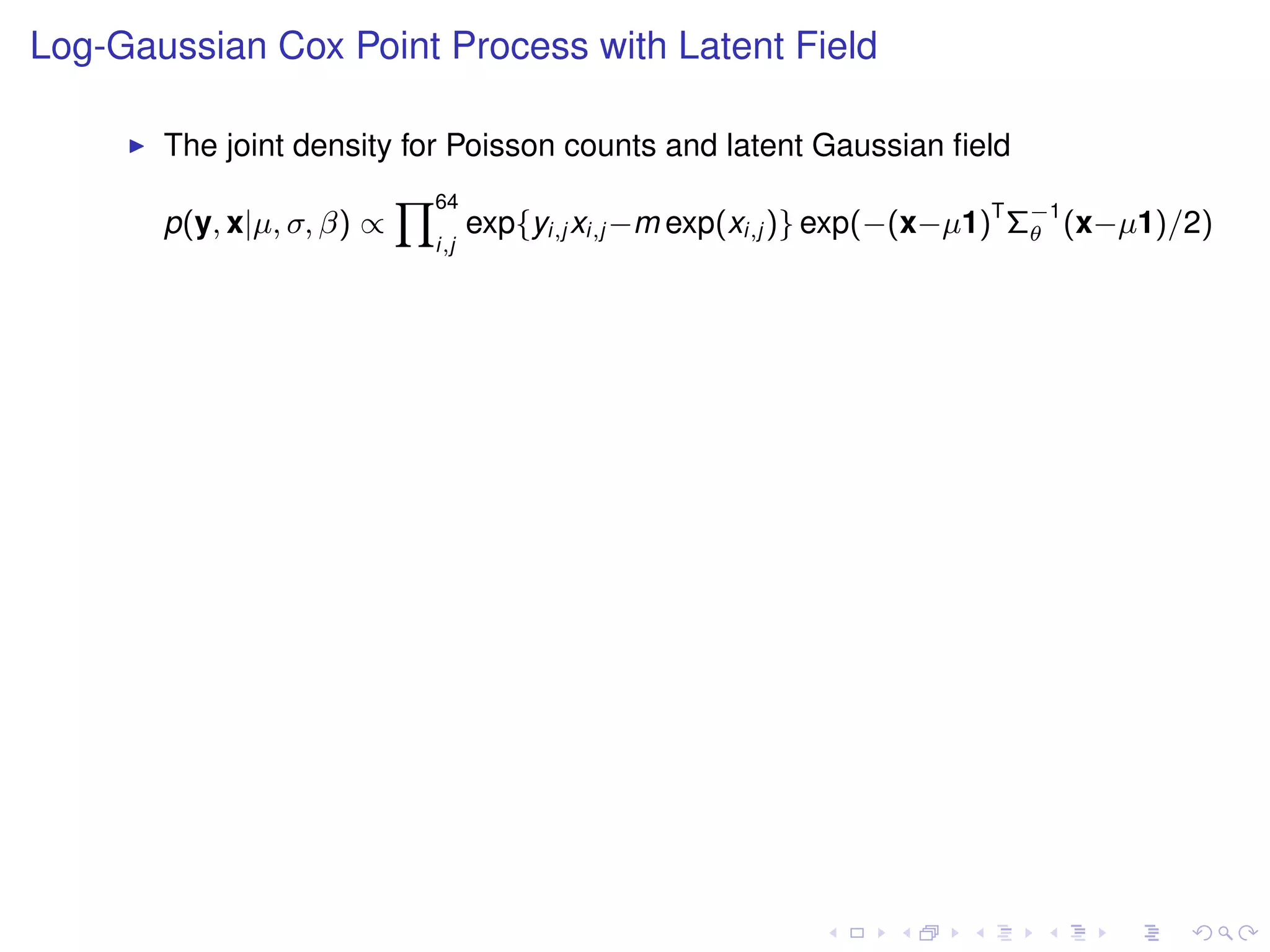

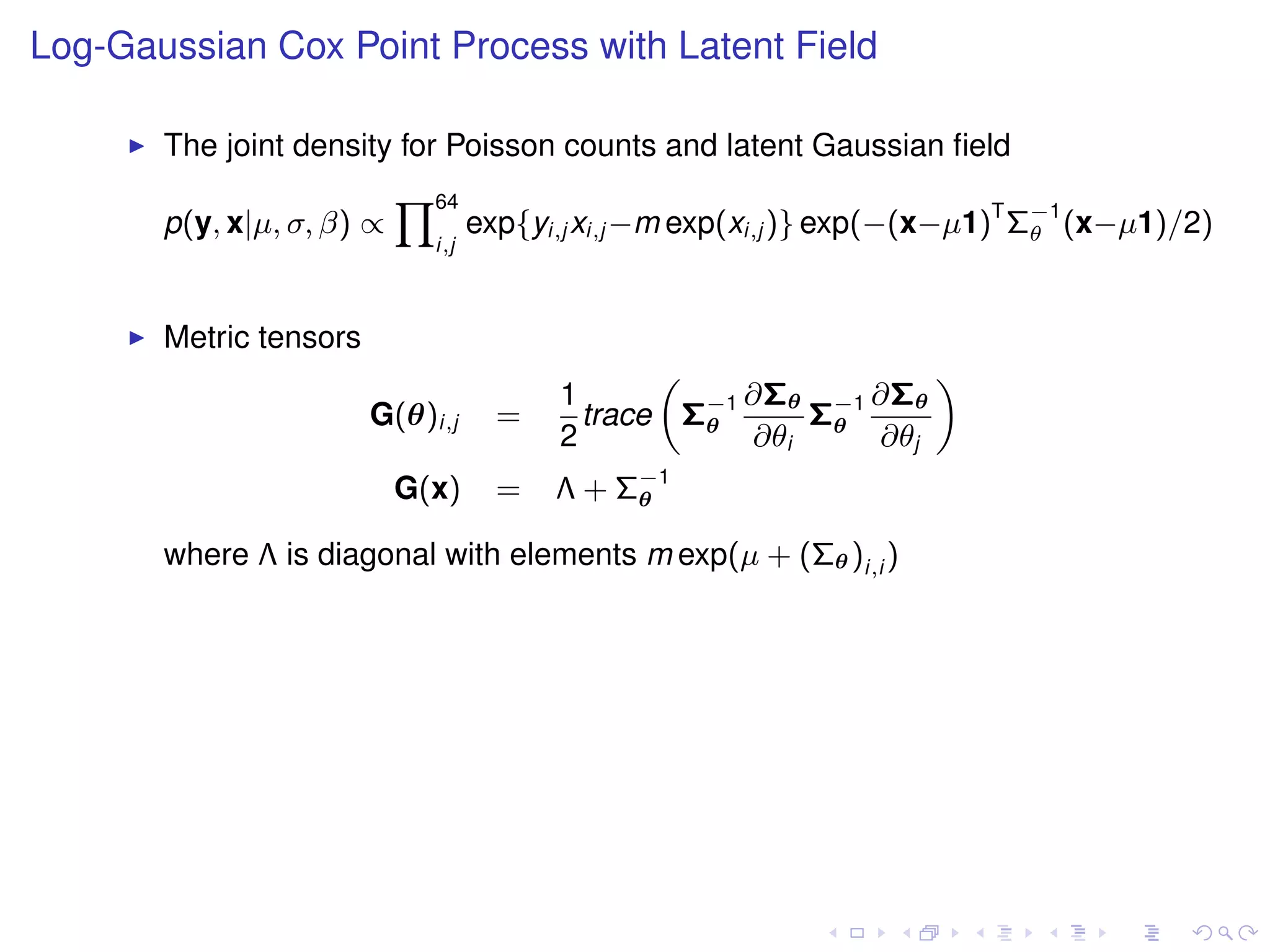

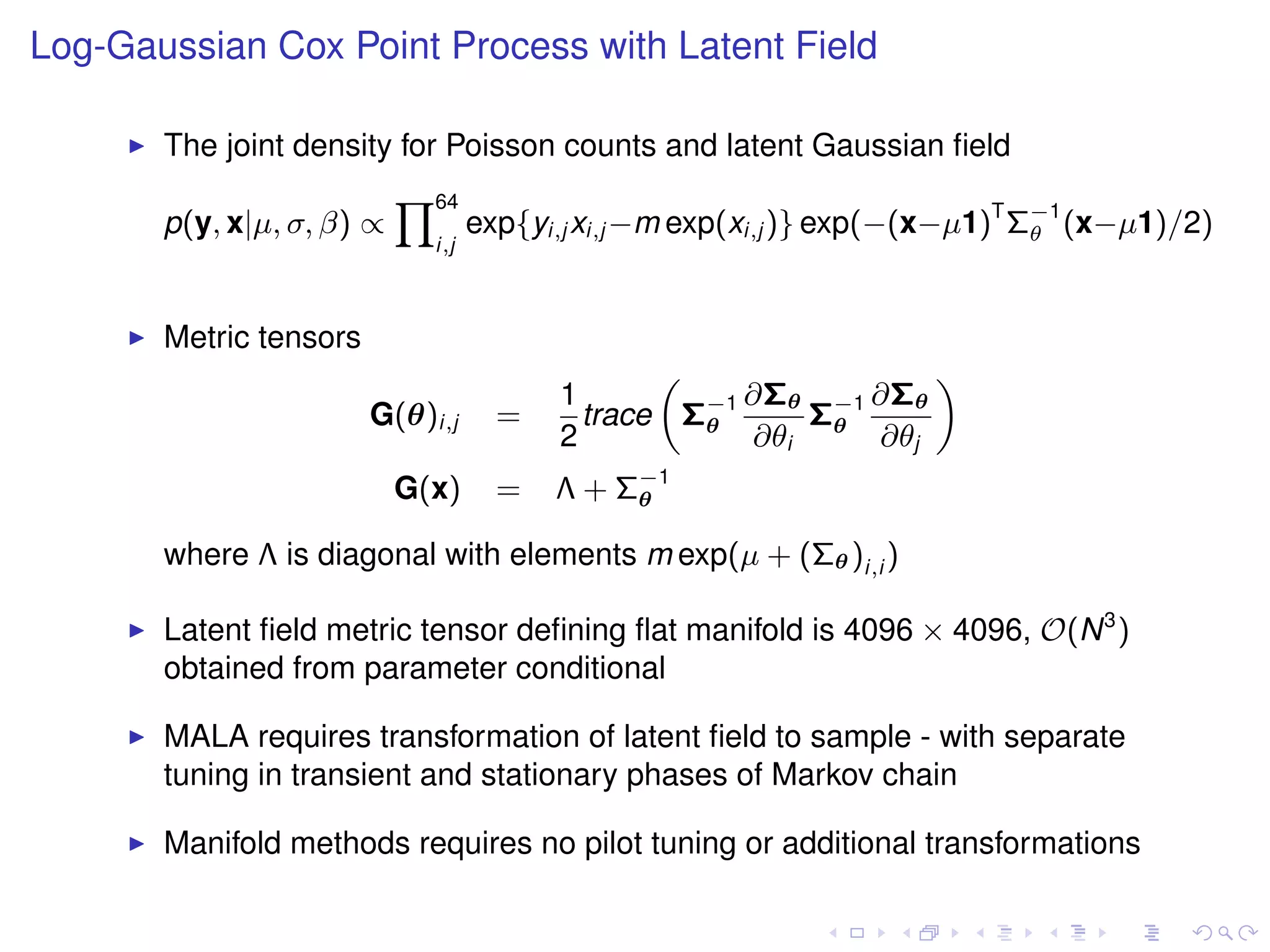

This document summarizes a talk by Mark Girolami on manifold Monte Carlo methods. The talk uses stochastic diffusions and geometric concepts to improve Markov chain Monte Carlo (MCMC) methods. Specifically, it proposes discretized Langevin and Hamiltonian diffusions across a Riemann manifold as adaptive proposal mechanisms, an approach founded on deterministic geodesic flows on the manifold. The examples presented include a warped bivariate Gaussian, a Gaussian mixture model, and a log-Gaussian Cox process.
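To make the idea of a discretized Langevin diffusion with a position-dependent metric concrete, here is a minimal Python sketch of a simplified manifold Metropolis-adjusted Langevin step. It is an illustration under stated assumptions, not the implementation from the talk. The banana-shaped target (likelihood `y ~ N(theta1 + theta2^2, sigma_y^2)` with an isotropic Gaussian prior), the metric taken as expected Fisher information plus prior precision, and all names (`make_banana_target`, `simplified_mmala`, `eps`) are hypothetical choices. The sketch drops the curvature terms of the full manifold drift. The Metropolis-Hastings correction still accounts for the asymmetric, position-dependent proposal, so the chain targets the intended distribution.

```python
import numpy as np

def make_banana_target(y, sigma_y, sigma_theta):
    """Hypothetical warped-Gaussian target: y_i ~ N(t1 + t2^2, sigma_y^2), t ~ N(0, sigma_theta^2 I)."""
    n = len(y)

    def log_p(t):
        mu = t[0] + t[1] ** 2
        return -0.5 * np.sum((y - mu) ** 2) / sigma_y**2 - 0.5 * np.sum(t**2) / sigma_theta**2

    def grad_log_p(t):
        mu = t[0] + t[1] ** 2
        r = np.sum(y - mu) / sigma_y**2
        return np.array([r, 2.0 * t[1] * r]) - t / sigma_theta**2

    def metric(t):
        # Assumed metric: expected Fisher information plus prior precision (always positive definite).
        J = np.array([1.0, 2.0 * t[1]])
        return n / sigma_y**2 * np.outer(J, J) + np.eye(2) / sigma_theta**2

    return log_p, grad_log_p, metric

def simplified_mmala(log_p, grad_log_p, metric, theta0, eps, n_steps, rng):
    """Langevin proposal preconditioned by the local metric G(theta), with MH correction."""
    theta = np.asarray(theta0, dtype=float)
    d = theta.size

    def proposal(t):
        G = metric(t)
        mean = t + 0.5 * eps**2 * np.linalg.solve(G, grad_log_p(t))
        return mean, G

    def log_q(x, mean, G):
        # Log density of N(mean, eps^2 G^{-1}) up to constants shared by both directions.
        diff = x - mean
        return 0.5 * np.linalg.slogdet(G)[1] - 0.5 * diff @ G @ diff / eps**2

    samples = np.empty((n_steps, d))
    accepted = 0
    for i in range(n_steps):
        mean_fwd, G_fwd = proposal(theta)
        L = np.linalg.cholesky(G_fwd)  # G = L L^T, so L^{-T} z has covariance G^{-1}
        prop = mean_fwd + eps * np.linalg.solve(L.T, rng.standard_normal(d))
        mean_rev, G_rev = proposal(prop)
        log_alpha = (log_p(prop) + log_q(theta, mean_rev, G_rev)
                     - log_p(theta) - log_q(prop, mean_fwd, G_fwd))
        if np.log(rng.uniform()) < log_alpha:
            theta, accepted = prop, accepted + 1
        samples[i] = theta
    return samples, accepted / n_steps

# Small demo on synthetic data (all values are illustrative assumptions).
rng = np.random.default_rng(0)
y = rng.normal(loc=1.0, scale=2.0, size=100)
log_p, grad_log_p, metric = make_banana_target(y, sigma_y=2.0, sigma_theta=1.0)
samples, acc_rate = simplified_mmala(log_p, grad_log_p, metric, [0.0, 1.0], 0.8, 2000, rng)
print("acceptance rate:", acc_rate)
```

Because the proposal covariance `eps**2 * inv(G(theta))` changes with position, the step shape adapts to the local geometry of the target, which is the sense in which the proposal mechanism is adaptive. The step size `eps` is a tuning parameter and would need adjusting for other targets.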