This document discusses various importance sampling methods for approximating Bayes factors, which are used for Bayesian model selection. It compares regular importance sampling, bridge sampling, harmonic means, mixtures to bridge sampling, and Chib's solution. An example application to probit modeling of diabetes in Pima Indian women is presented to illustrate regular importance sampling. Markov chain Monte Carlo methods like the Metropolis-Hastings algorithm and Gibbs sampling can be used to sample from the probit models.

![On approximating Bayes factors

Introduction

Bayes factor

Bayes factor

Definition (Bayes factors)

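For comparing model $M_0$ with $\theta \in \Theta_0$ vs. $M_1$ with $\theta \in \Theta_1$, under priors $\pi_0(\theta)$ and $\pi_1(\theta)$, central quantity

$$B_{01} = \frac{\pi(\Theta_0|x)}{\pi(\Theta_1|x)} \bigg/ \frac{\pi(\Theta_0)}{\pi(\Theta_1)} = \frac{\int_{\Theta_0} f_0(x|\theta_0)\,\pi_0(\theta_0)\,d\theta_0}{\int_{\Theta_1} f_1(x|\theta_1)\,\pi_1(\theta_1)\,d\theta_1}$$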

[Jeffreys, 1939]](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-9-320.jpg)

![On approximating Bayes factors

Introduction

Evidence

Evidence

Problems using a similar quantity, the evidence

$$Z_k = \int_{\Theta_k} \pi_k(\theta_k)\,L_k(\theta_k)\,d\theta_k,$$

aka the marginal likelihood.

[Jeffreys, 1939]](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-10-320.jpg)

![On approximating Bayes factors

Importance sampling solutions compared

A comparison of importance sampling solutions

1 Introduction

2 Importance sampling solutions compared

Regular importance

Bridge sampling

Harmonic means

Mixtures to bridge

Chib’s solution

The Savage–Dickey ratio

3 Nested sampling

[Marin & Robert, 2010]](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-11-320.jpg)

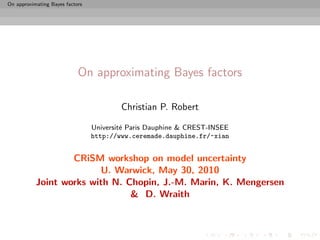

![On approximating Bayes factors

Importance sampling solutions compared

Regular importance

Probit modelling on Pima Indian women

Probability of diabetes as a function of the above variables,

$$P(y = 1|x) = \Phi(x_1\beta_1 + x_2\beta_2 + x_3\beta_3),$$

Test of $H_0: \beta_3 = 0$ for 200 observations of Pima.tr based on a g-prior modelling:

$$\beta \sim \mathcal{N}_3\left(0,\, n\,(X^TX)^{-1}\right)$$

[Marin & Robert, 2007]](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-14-320.jpg)
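
The R code on later slides calls a log-posterior helper that is not shown in the deck. As a rough sketch of what such a function could look like under the g-prior above, the block below evaluates the probit log-likelihood plus the $\mathcal{N}(0, n(X^TX)^{-1})$ log-prior. The name `probit_log_post_sketch` and the simulated data are assumptions made for illustration; this is not the authors' function.

```r
# Hypothetical helper: probit log-posterior (up to the evidence) under the
# g-prior beta ~ N(0, n (X^T X)^{-1}). Not the function used on the slides.
probit_log_post_sketch <- function(beta, y, X) {
  n <- nrow(X)
  p <- ncol(X)
  eta <- as.vector(X %*% beta)
  # Probit log-likelihood, using log.p for numerical stability
  log_lik <- sum(pnorm(eta[y == 1], log.p = TRUE)) +
    sum(pnorm(-eta[y == 0], log.p = TRUE))
  # Prior precision is Q = X^T X / n, so beta^T Q beta = sum(eta^2) / n
  Q <- crossprod(X) / n
  log_det_Q <- as.numeric(determinant(Q, logarithm = TRUE)$modulus)
  log_prior <- -p / 2 * log(2 * pi) + 0.5 * log_det_Q - 0.5 * sum(eta^2) / n
  log_lik + log_prior
}

# Simulated stand-in data (assumed; not Pima.tr)
set.seed(3)
X_demo <- cbind(1, rnorm(50))
y_demo <- as.integer(X_demo %*% c(0.2, 0.7) + rnorm(50) > 0)
probit_log_post_sketch(c(0.2, 0.7), y_demo, X_demo)
```

Working on the log scale matters here because the likelihood of a few hundred binary observations underflows quickly once exponentiated.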

![On approximating Bayes factors

Importance sampling solutions compared

Regular importance

MCMC 101 for probit models

Use of either a random walk proposal

$$\beta' = \beta + \epsilon$$

in a Metropolis-Hastings algorithm (since the likelihood is available) or of a Gibbs sampler that takes advantage of the missing/latent variable

$$z|y, x, \beta \sim \mathcal{N}(x^T\beta, 1)\,\left\{\mathbb{I}^{y}_{z\ge 0} \times \mathbb{I}^{1-y}_{z\le 0}\right\}$$

(since $\beta|y, X, z$ is normally distributed)

[Gibbs three times faster]](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-16-320.jpg)
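
The Gibbs option described above can be sketched in a few lines of base R. The version below assumes the g-prior $\beta \sim \mathcal{N}(0, n(X^TX)^{-1})$ from the Pima slide, under which $\beta|z \sim \mathcal{N}\left(\frac{n}{n+1}(X^TX)^{-1}X^Tz,\ \frac{n}{n+1}(X^TX)^{-1}\right)$. The function name, the inversion-based truncated normal draw, and the simulated data are illustrative choices, not the implementation behind the slides.

```r
# Sketch of a latent-variable Gibbs sampler for the probit model under the
# g-prior beta ~ N(0, n (X^T X)^{-1}). Names are illustrative only.
gibbs_probit_sketch <- function(niter, y, X) {
  n <- nrow(X)
  p <- ncol(X)
  V <- n / (n + 1) * solve(crossprod(X))  # covariance of beta given z
  R <- chol(V)                            # upper triangular, t(R) %*% R = V
  beta <- rep(0, p)
  draws <- matrix(NA_real_, niter, p)
  for (t in seq_len(niter)) {
    mu <- as.vector(X %*% beta)
    # Truncated normal by inversion: z >= 0 when y = 1, z <= 0 when y = 0
    p0 <- pnorm(-mu)                      # P(z <= 0 | beta)
    u <- runif(n)
    u <- ifelse(y == 1, p0 + u * (1 - p0), u * p0)
    u <- pmin(pmax(u, 1e-12), 1 - 1e-12)  # guard against infinite quantiles
    z <- mu + qnorm(u)
    # beta | z ~ N(V X^T z, V)
    m <- as.vector(V %*% crossprod(X, z))
    beta <- m + as.vector(crossprod(R, rnorm(p)))
    draws[t, ] <- beta
  }
  draws
}

# Simulated stand-in data (assumed; not Pima.tr)
set.seed(1)
n_obs <- 200
X_sim <- cbind(1, rnorm(n_obs))
beta_true <- c(0.3, -0.8)
y_sim <- as.integer(X_sim %*% beta_true + rnorm(n_obs) > 0)
draws <- gibbs_probit_sketch(2000, y_sim, X_sim)
post_mean_hat <- colMeans(draws[-(1:500), ])
```

Both full conditionals are standard distributions, so there is no proposal scale to tune, in contrast with the random walk alternative.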

![On approximating Bayes factors

Importance sampling solutions compared

Regular importance

Importance sampling for the Pima Indian dataset

Use of the importance function inspired from the MLE estimate distribution

$$\beta \sim \mathcal{N}(\hat\beta, \hat\Sigma)$$

R Importance sampling code

model1=summary(glm(y~-1+X1,family=binomial(link="probit")))

model2=summary(glm(y~-1+X2,family=binomial(link="probit"))) # not shown on the slide; assumed analogue of model1 for X2

is1=rmvnorm(Niter,mean=model1$coeff[,1],sigma=2*model1$cov.unscaled) # normal centred at the MLE, inflated covariance

is2=rmvnorm(Niter,mean=model2$coeff[,1],sigma=2*model2$cov.unscaled)

bfis=mean(exp(probitlpost(is1,y,X1)-dmvlnorm(is1,mean=model1$coeff[,1],sigma=2*model1$cov.unscaled))) /
mean(exp(probitlpost(is2,y,X2)-dmvlnorm(is2,mean=model2$coeff[,1],sigma=2*model2$cov.unscaled)))](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-19-320.jpg)
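
Since the slide code depends on helpers that are not shown, the same idea can be checked on a case where the answer is known. The sketch below is an assumption-laden toy, not the Pima analysis: it takes $x_i \sim \mathcal{N}(\theta, 1)$ with prior $\theta \sim \mathcal{N}(0, 1)$, uses a normal importance function centred at the MLE with twice its variance (mirroring the `2*cov.unscaled` inflation), and compares the estimated log-evidence with the closed form.

```r
set.seed(1)

# Toy conjugate model, assumed for illustration only (not the Pima data):
# x_i ~ N(theta, 1) with prior theta ~ N(0, 1), so the evidence is known.
n <- 20
x <- rnorm(n, mean = 1)
log_Z_exact <- -n / 2 * log(2 * pi) - 0.5 * log(n + 1) -
  0.5 * (sum(x^2) - sum(x)^2 / (n + 1))

# Log of prior times likelihood, vectorised over theta
log_target <- function(theta) {
  dnorm(theta, 0, 1, log = TRUE) +
    vapply(theta, function(th) sum(dnorm(x, th, 1, log = TRUE)), numeric(1))
}

# Importance function: normal centred at the MLE, with twice the MLE variance
mle <- mean(x)
is_sd <- sqrt(2 / n)
N_iter <- 10000
theta <- rnorm(N_iter, mle, is_sd)

# Importance weights on the log scale, then a stable log-mean-exp
log_w <- log_target(theta) - dnorm(theta, mle, is_sd, log = TRUE)
log_Z_hat <- max(log_w) + log(mean(exp(log_w - max(log_w))))

c(estimate = log_Z_hat, exact = log_Z_exact)
```

Inflating the variance keeps the tails of the importance function heavier than those of the posterior, which is what keeps the weights well behaved.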

![On approximating Bayes factors

Importance sampling solutions compared

Bridge sampling

Bridge sampling

Special case:

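If

$$\tilde\pi_1(\theta_1|x) \propto \pi_1(\theta_1|x), \qquad \tilde\pi_2(\theta_2|x) \propto \pi_2(\theta_2|x)$$

live on the same space ($\Theta_1 = \Theta_2$), then

$$B_{12} \approx \frac{1}{n}\sum_{i=1}^{n} \frac{\tilde\pi_1(\theta_i|x)}{\tilde\pi_2(\theta_i|x)}, \qquad \theta_i \sim \pi_2(\theta|x)$$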

[Gelman & Meng, 1998; Chen, Shao & Ibrahim, 2000]](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-22-320.jpg)
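
The approximation rests on a one-line identity. Assuming the unnormalised densities are scaled as $\tilde\pi_k(\theta|x) = Z_k\,\pi_k(\theta|x)$, with $Z_k$ the evidence of model $k$ (notation borrowed from the evidence slide), and that the support of $\pi_1$ is contained in that of $\pi_2$:

```latex
\mathbb{E}_{\pi_2}\left[\frac{\tilde\pi_1(\theta|x)}{\tilde\pi_2(\theta|x)}\right]
  = \int \frac{\tilde\pi_1(\theta|x)}{Z_2\,\pi_2(\theta|x)}\,\pi_2(\theta|x)\,d\theta
  = \frac{1}{Z_2}\int \tilde\pi_1(\theta|x)\,d\theta
  = \frac{Z_1}{Z_2} = B_{12}
```

The empirical average over draws from $\pi_2(\theta|x)$ is then a Monte Carlo version of the left-hand side.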

![On approximating Bayes factors

Importance sampling solutions compared

Bridge sampling

Optimal bridge sampling (2)

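Reason:

$$\frac{\text{Var}(\hat B_{12})}{B_{12}^2} \approx \frac{1}{n_1 n_2}\left\{\frac{\int \pi_1(\theta)\pi_2(\theta)[n_1\pi_1(\theta) + n_2\pi_2(\theta)]\,\alpha(\theta)^2\,d\theta}{\left(\int \pi_1(\theta)\pi_2(\theta)\,\alpha(\theta)\,d\theta\right)^2} - 1\right\}$$

(by the δ method)

Drawback: Dependence on the unknown normalising constants solved iteratively](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-27-320.jpg)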

![On approximating Bayes factors

Importance sampling solutions compared

Bridge sampling

Extension to varying dimensions

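When $\dim(\Theta_1) \ne \dim(\Theta_2)$, e.g. $\theta_2 = (\theta_1, \psi)$, introduction of a pseudo-posterior density, $\omega(\psi|\theta_1, x)$, augmenting $\pi_1(\theta_1|x)$ into joint distribution

$$\pi_1(\theta_1|x) \times \omega(\psi|\theta_1, x)$$

on $\Theta_2$ so that

$$B_{12} = \frac{\int \tilde\pi_1(\theta_1|x)\,\alpha(\theta_1,\psi)\,\pi_2(\theta_1,\psi|x)\,d\theta_1\,\omega(\psi|\theta_1,x)\,d\psi}{\int \tilde\pi_2(\theta_1,\psi|x)\,\alpha(\theta_1,\psi)\,\pi_1(\theta_1|x)\,d\theta_1\,\omega(\psi|\theta_1,x)\,d\psi}$$

$$= \mathbb{E}_{\pi_2}\left[\frac{\tilde\pi_1(\theta_1)\,\omega(\psi|\theta_1)}{\tilde\pi_2(\theta_1,\psi)}\right] = \frac{\mathbb{E}_{\varphi}\left[\tilde\pi_1(\theta_1)\,\omega(\psi|\theta_1)/\varphi(\theta_1,\psi)\right]}{\mathbb{E}_{\varphi}\left[\tilde\pi_2(\theta_1,\psi)/\varphi(\theta_1,\psi)\right]}$$

for any conditional density $\omega(\psi|\theta_1)$ and any joint density $\varphi$.](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-29-320.jpg)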

![On approximating Bayes factors

Importance sampling solutions compared

Bridge sampling

Illustration for the Pima Indian dataset

Use of the MLE induced conditional of β3 given (β1 , β2 ) as a

pseudo-posterior and mixture of both MLE approximations on β3

in bridge sampling estimate

R bridge sampling code

library(MASS) # assumed source of ginv

library(mvtnorm) # assumed source of dmvnorm

cova=model2$cov.unscaled

expecta=model2$coeff[,1]

covw=cova[3,3]-t(cova[1:2,3])%*%ginv(cova[1:2,1:2])%*%cova[1:2,3]

probit1=hmprobit(Niter,y,X1)

probit2=hmprobit(Niter,y,X2)

pseudo=rnorm(Niter,meanw(probit1),sqrt(covw))

probit1p=cbind(probit1,pseudo)

bfbs=mean(exp(probitlpost(probit2[,1:2],y,X1)+dnorm(probit2[,3],meanw(probit2[,1:2]),sqrt(covw),log=T))/ (dmvnorm(probit2,expecta,cova)+dnorm(probit2[,3],expecta[3],cova[3,3])))/
mean(exp(probitlpost(probit1p,y,X2))/(dmvnorm(probit1p,expecta,cova)+dnorm(pseudo,expecta[3],cova[3,3])))](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-31-320.jpg)

![On approximating Bayes factors

Importance sampling solutions compared

Harmonic means

The original harmonic mean estimator

When $\theta_{kt} \sim \pi_k(\theta|x)$,

$$\frac{1}{T}\sum_{t=1}^{T} \frac{1}{L(\theta_{kt}|x)}$$

is an unbiased estimator of $1/m_k(x)$

[Newton & Raftery, 1994]

Highly dangerous: Most often leads to an infinite variance!!!](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-34-320.jpg)
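
Both the unbiasedness and the variance warning follow from two short computations. Writing $\pi_k(\theta)$ for the prior and $m_k(x) = \int \pi_k(\theta)L(\theta|x)\,d\theta$ (notation assumed consistent with the slide), the first and second moments under the posterior are:

```latex
\mathbb{E}^{\pi_k(\cdot|x)}\left[\frac{1}{L(\theta|x)}\right]
  = \int \frac{1}{L(\theta|x)}\,\frac{\pi_k(\theta)\,L(\theta|x)}{m_k(x)}\,d\theta
  = \frac{1}{m_k(x)},
\qquad
\mathbb{E}^{\pi_k(\cdot|x)}\left[\frac{1}{L(\theta|x)^2}\right]
  = \frac{1}{m_k(x)}\int \frac{\pi_k(\theta)}{L(\theta|x)}\,d\theta
```

The second integral diverges whenever the prior has heavier tails than the likelihood, which is the usual situation, hence the infinite variance.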

![On approximating Bayes factors

Importance sampling solutions compared

Harmonic means

“The Worst Monte Carlo Method Ever”

“The good news is that the Law of Large Numbers guarantees that

this estimator is consistent ie, it will very likely be very close to the

correct answer if you use a sufficiently large number of points from

the posterior distribution.

The bad news is that the number of points required for this

estimator to get close to the right answer will often be greater

than the number of atoms in the observable universe. The even

worse news is that it’s easy for people to not realize this, and to

naïvely accept estimates that are nowhere close to the correct

value of the marginal likelihood.”

[Radford Neal’s blog, Aug. 23, 2008]](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-36-320.jpg)

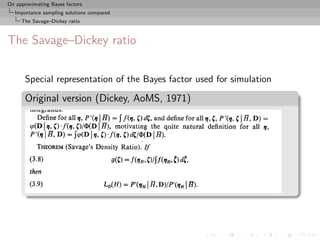

![On approximating Bayes factors

Importance sampling solutions compared

Harmonic means

Approximating Zk from a posterior sample

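Use of the [harmonic mean] identity

$$\mathbb{E}^{\pi_k}\left[\frac{\varphi(\theta_k)}{\pi_k(\theta_k)L_k(\theta_k)}\,\bigg|\,x\right] = \int \frac{\varphi(\theta_k)}{\pi_k(\theta_k)L_k(\theta_k)}\,\frac{\pi_k(\theta_k)L_k(\theta_k)}{Z_k}\,d\theta_k = \frac{1}{Z_k}$$

no matter what the proposal $\varphi(\cdot)$ is.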

[Gelfand & Dey, 1994; Bartolucci et al., 2006]

Direct exploitation of the MCMC output](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-38-320.jpg)
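
A small numerical check of this identity may help. The sketch below again uses an assumed toy model ($x_i \sim \mathcal{N}(\theta, 1)$, $\theta \sim \mathcal{N}(0, 1)$) where the posterior can be sampled exactly and the evidence is known; in practice the draws would come from MCMC. The choice of $\varphi$ as a normal with half the posterior variance is an illustrative assumption, made so that $\varphi$ has lighter tails than the posterior and the ratio stays bounded.

```r
set.seed(42)

# Toy conjugate model, assumed for illustration only:
# x_i ~ N(theta, 1), theta ~ N(0, 1); posterior and evidence in closed form.
n <- 20
x <- rnorm(n, mean = 1)
post_mean <- sum(x) / (n + 1)
post_sd <- sqrt(1 / (n + 1))
log_Z_exact <- -n / 2 * log(2 * pi) - 0.5 * log(n + 1) -
  0.5 * (sum(x^2) - sum(x)^2 / (n + 1))

# Log of prior times likelihood, vectorised over theta
log_prior_lik <- function(theta) {
  dnorm(theta, 0, 1, log = TRUE) +
    vapply(theta, function(th) sum(dnorm(x, th, 1, log = TRUE)), numeric(1))
}

# Posterior sample (exact here; MCMC output in a real application)
n_sim <- 10000
theta <- rnorm(n_sim, post_mean, post_sd)

# phi: normal density with lighter tails than the posterior
log_ratio <- dnorm(theta, post_mean, post_sd / sqrt(2), log = TRUE) -
  log_prior_lik(theta)

# Average of phi / (prior * likelihood) estimates 1 / Z; invert on the log scale
log_inv_Z_hat <- max(log_ratio) + log(mean(exp(log_ratio - max(log_ratio))))
log_Z_hat <- -log_inv_Z_hat

c(estimate = log_Z_hat, exact = log_Z_exact)
```

Replacing $\varphi$ by the prior recovers the original harmonic mean estimator, whose ratio is no longer bounded.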

![On approximating Bayes factors

Importance sampling solutions compared

Mixtures to bridge

Approximating Zk using a mixture representation

Bridge sampling redux

Design a specific mixture for simulation [importance sampling] purposes, with density

$$\varphi_k(\theta_k) \propto \omega_1\,\pi_k(\theta_k)L_k(\theta_k) + \varphi(\theta_k),$$

where $\varphi(\cdot)$ is arbitrary (but normalised)

Note: $\omega_1$ is not a probability weight

[Chopin & Robert, 2010]](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-45-320.jpg)

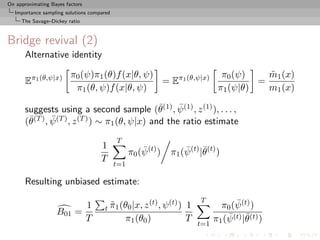

![On approximating Bayes factors

Importance sampling solutions compared

Mixtures to bridge

Evidence approximation by mixtures

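Rao-Blackwellised estimate

$$\hat\xi = \frac{1}{T}\sum_{t=1}^{T} \frac{\omega_1\,\pi_k(\theta_k^{(t)})L_k(\theta_k^{(t)})}{\omega_1\,\pi_k(\theta_k^{(t)})L_k(\theta_k^{(t)}) + \varphi(\theta_k^{(t)})},$$

converges to $\omega_1 Z_k/\{\omega_1 Z_k + 1\}$

Deduce $\hat Z_{3k}$ from $\omega_1\hat Z_{3k}/\{\omega_1\hat Z_{3k} + 1\} = \hat\xi$, i.e.

$$\hat Z_{3k} = \frac{\sum_{t=1}^{T} \omega_1\,\pi_k(\theta_k^{(t)})L_k(\theta_k^{(t)}) \Big/ \left\{\omega_1\,\pi_k(\theta_k^{(t)})L_k(\theta_k^{(t)}) + \varphi(\theta_k^{(t)})\right\}}{\sum_{t=1}^{T} \varphi(\theta_k^{(t)}) \Big/ \left\{\omega_1\,\pi_k(\theta_k^{(t)})L_k(\theta_k^{(t)}) + \varphi(\theta_k^{(t)})\right\}}$$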

[Bridge sampler]](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-50-320.jpg)

![On approximating Bayes factors

Importance sampling solutions compared

Chib’s solution

Label switching

A mixture model [special case of missing variable model] is

invariant under permutations of the indices of the components.

E.g., mixtures

0.3N (0, 1) + 0.7N (2.3, 1)

and

0.7N (2.3, 1) + 0.3N (0, 1)

are exactly the same!

The component parameters θi are not identifiable

marginally since they are exchangeable](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-55-320.jpg)

![On approximating Bayes factors

Importance sampling solutions compared

Chib’s solution

Connected difficulties

1. Number of modes of the likelihood of order O(k!): maximization and even [MCMC] exploration of the posterior surface harder

2. Under exchangeable priors on (θ, p) [prior invariant under permutation of the indices], all posterior marginals are identical: posterior expectation of θ1 equal to posterior expectation of θ2](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-57-320.jpg)

![On approximating Bayes factors

Importance sampling solutions compared

Chib’s solution

Label switching paradox

We should observe the exchangeability of the components [label

switching] to conclude about convergence of the Gibbs sampler.

If we observe it, then we do not know how to estimate the

parameters.

If we do not, then we are uncertain about the convergence!!!](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-60-320.jpg)

![On approximating Bayes factors

Importance sampling solutions compared

Chib’s solution

Compensation for label switching

For mixture models, $z_k^{(t)}$ usually fails to visit all configurations in a balanced way, despite the symmetry predicted by the theory

$$\pi_k(\theta_k|x) = \pi_k(\sigma(\theta_k)|x) = \frac{1}{k!}\sum_{\sigma\in S_k} \pi_k(\sigma(\theta_k)|x)$$

for all σ’s in $S_k$, set of all permutations of {1, . . . , k}.

Consequences on numerical approximation, biased by an order k!

Recover the theoretical symmetry by using

$$\tilde\pi_k(\theta_k^*|x) = \frac{1}{T\,k!}\sum_{\sigma\in S_k}\sum_{t=1}^{T} \pi_k(\sigma(\theta_k^*)|x, z_k^{(t)}).$$

[Berkhof, Mechelen, & Gelman, 2003]](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-63-320.jpg)

![On approximating Bayes factors

Importance sampling solutions compared

Chib’s solution

Galaxy dataset

n = 82 galaxies as a mixture of k normal distributions with both mean and variance unknown.

[Roeder, 1992]

[Figure: "Average density" plot; vertical axis: Relative Frequency, horizontal axis: data]](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-65-320.jpg)

![On approximating Bayes factors

Importance sampling solutions compared

Chib’s solution

Galaxy dataset (k)

Using only the original estimate, with $\theta_k^*$ as the MAP estimator,

$$\log(\hat m_k(x)) = -105.1396$$

for k = 3 (based on $10^3$ simulations), while introducing the permutations leads to

$$\log(\hat m_k(x)) = -103.3479$$

Note that

$$-105.1396 + \log(3!) = -103.3479$$

| k | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|
| $m_k(x)$ (log scale) | -115.68 | -103.35 | -102.66 | -101.93 | -102.88 | -105.48 | -108.44 |

Estimations of the marginal likelihoods by the symmetrised Chib’s approximation (based on $10^5$ Gibbs iterations and, for k > 5, 100 permutations selected at random in $S_k$).

[Lee, Marin, Mengersen & Robert, 2008]](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-66-320.jpg)

![On approximating Bayes factors

Importance sampling solutions compared

Chib’s solution

Case of the probit model

For the completion by z,

$$\hat\pi(\theta|x) = \frac{1}{T}\sum_{t} \pi(\theta|x, z^{(t)})$$

is a simple average of normal densities

R Chib approximation code

gibbs1=gibbsprobit(Niter,y,X1)

gibbs2=gibbsprobit(Niter,y,X2)

bfchi=mean(exp(dmvlnorm(t(t(gibbs2$mu)-model2$coeff[,1]),mean=rep(0,3),sigma=gibbs2$Sigma2)-probitlpost(model2$coeff[,1],y,X2)))/
mean(exp(dmvlnorm(t(t(gibbs1$mu)-model1$coeff[,1]),mean=rep(0,2),sigma=gibbs1$Sigma2)-probitlpost(model1$coeff[,1],y,X1)))](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-69-320.jpg)

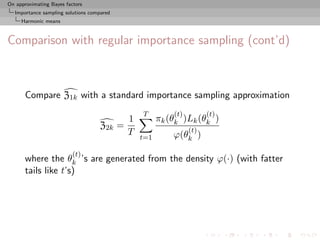

![On approximating Bayes factors

Importance sampling solutions compared

The Savage–Dickey ratio

Savage’s density ratio theorem

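Given a test $H_0: \theta = \theta_0$ in a model $f(x|\theta, \psi)$ with a nuisance parameter $\psi$, under priors $\pi_0(\psi)$ and $\pi_1(\theta, \psi)$ such that

$$\pi_1(\psi|\theta_0) = \pi_0(\psi)$$

then

$$B_{01} = \frac{\pi_1(\theta_0|x)}{\pi_1(\theta_0)},$$

with the obvious notations

$$\pi_1(\theta) = \int \pi_1(\theta, \psi)\,d\psi, \qquad \pi_1(\theta|x) = \int \pi_1(\theta, \psi|x)\,d\psi,$$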

[Dickey, 1971; Verdinelli & Wasserman, 1995]](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-73-320.jpg)
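
The representation can be verified numerically in a case simple enough for both sides to be computed exactly. The sketch below assumes a degenerate setting without nuisance parameter (so the condition on $\pi_1(\psi|\theta_0)$ holds trivially): $x_i \sim \mathcal{N}(\theta, 1)$, $H_0: \theta = 0$, and $\theta \sim \mathcal{N}(0, 1)$ under the alternative. These modelling choices and all variable names are assumptions made for illustration.

```r
set.seed(7)

# Toy setting (assumed): x_i ~ N(theta, 1), H0: theta = 0,
# prior theta ~ N(0, 1) under the alternative, no nuisance parameter.
n <- 15
x <- rnorm(n, mean = 0.3)

# Direct Bayes factor B01 = m0(x) / m1(x), both evidences in closed form
log_m0 <- sum(dnorm(x, 0, 1, log = TRUE))
log_m1 <- -n / 2 * log(2 * pi) - 0.5 * log(n + 1) -
  0.5 * (sum(x^2) - sum(x)^2 / (n + 1))
B01_direct <- exp(log_m0 - log_m1)

# Savage-Dickey: posterior density at theta0 over prior density at theta0
post_mean <- sum(x) / (n + 1)
post_sd <- sqrt(1 / (n + 1))
B01_savage_dickey <- dnorm(0, post_mean, post_sd) / dnorm(0, 0, 1)

c(direct = B01_direct, savage_dickey = B01_savage_dickey)
```

The two numbers agree up to floating point error, since here the posterior density is available in closed form; with MCMC output the numerator would have to be estimated.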

![On approximating Bayes factors

Importance sampling solutions compared

The Savage–Dickey ratio

Rephrased

“Suppose that $f_0(\theta) = f_1(\theta|\varphi = \varphi_0)$. As $f_0(x|\theta) = f_1(x|\theta, \varphi = \varphi_0)$,

$$f_0(x) = \int f_1(x|\theta, \varphi = \varphi_0)\,f_1(\theta|\varphi = \varphi_0)\,d\theta = f_1(x|\varphi = \varphi_0),$$

i.e., the numerator of the Bayes factor is the value of $f_1(x|\varphi)$ at $\varphi = \varphi_0$, while the denominator is an average of the values of $f_1(x|\varphi)$ for $\varphi \ne \varphi_0$, weighted by the prior distribution $f_1(\varphi)$ under the augmented model.

Applying Bayes’ theorem to the right-hand side of [the above] we get

$$f_0(x) = f_1(\varphi_0|x)\,f_1(x) \big/ f_1(\varphi_0)$$

and hence the Bayes factor is given by

$$B = f_0(x) \big/ f_1(x) = f_1(\varphi_0|x) \big/ f_1(\varphi_0),$$

the ratio of the posterior to prior densities at $\varphi = \varphi_0$ under the augmented model.”

[O’Hagan & Forster, 1996]](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-74-320.jpg)

![On approximating Bayes factors

Importance sampling solutions compared

The Savage–Dickey ratio

Measure-theoretic difficulty

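Representation depends on the choice of versions of conditional densities:

$$B_{01} = \frac{\int \pi_0(\psi)\,f(x|\theta_0, \psi)\,d\psi}{\int \pi_1(\theta, \psi)\,f(x|\theta, \psi)\,d\psi\,d\theta} \qquad \text{[by definition]}$$

$$= \frac{\int \pi_1(\psi|\theta_0)\,f(x|\theta_0, \psi)\,d\psi\;\pi_1(\theta_0)}{\int \pi_1(\theta, \psi)\,f(x|\theta, \psi)\,d\psi\,d\theta\;\pi_1(\theta_0)} \qquad \text{[specific version of } \pi_1(\psi|\theta_0) \text{ and arbitrary version of } \pi_1(\theta_0)\text{]}$$

$$= \frac{\int \pi_1(\theta_0, \psi)\,f(x|\theta_0, \psi)\,d\psi}{m_1(x)\,\pi_1(\theta_0)} \qquad \text{[specific version of } \pi_1(\theta_0, \psi)\text{]}$$

$$= \frac{\pi_1(\theta_0|x)}{\pi_1(\theta_0)} \qquad \text{[version dependent]}$$](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-76-320.jpg)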

![On approximating Bayes factors

Importance sampling solutions compared

The Savage–Dickey ratio

Savage–Dickey paradox

Verdinelli-Wasserman extension:

$$B_{01} = \frac{\pi_1(\theta_0|x)}{\pi_1(\theta_0)}\;\mathbb{E}^{\pi_1(\psi|x,\theta_0)}\left[\frac{\pi_0(\psi)}{\pi_1(\psi|\theta_0)}\right]$$

similarly depends on choices of versions...

...but Monte Carlo implementation relies on specific versions of all densities without making mention of it

[Chen, Shao & Ibrahim, 2000]](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-80-320.jpg)

![On approximating Bayes factors

Nested sampling

Properties of nested sampling

1 Introduction

2 Importance sampling solutions compared

3 Nested sampling

Purpose

Implementation

Error rates

Impact of dimension

Constraints

Importance variant

A mixture comparison

[Chopin & Robert, 2010]](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-95-320.jpg)

![On approximating Bayes factors

Nested sampling

Purpose

Nested sampling: Goal

Skilling’s (2007) technique using the one-dimensional representation:

$$Z = \mathbb{E}^{\pi}[L(\theta)] = \int_0^1 \varphi(x)\,dx$$

with

$$\varphi^{-1}(l) = P^{\pi}(L(\theta) > l).$$

Note: $\varphi(\cdot)$ is intractable in most cases.](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-96-320.jpg)

![On approximating Bayes factors

Nested sampling

Implementation

Nested sampling: First approximation

Approximate Z by a Riemann sum:

$$\hat Z = \sum_{i=1}^{j} (x_{i-1} - x_i)\,\varphi(x_i)$$

where the $x_i$’s are either:

deterministic: $x_i = e^{-i/N}$

or random: $x_0 = 1$, $x_{i+1} = t_i x_i$, $t_i \sim \mathcal{B}e(N, 1)$

so that $\mathbb{E}[\log x_i] = -i/N$.](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-97-320.jpg)
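
The Riemann sum can be tried out on a problem where sampling from the constrained prior is exact. The sketch below is an assumed toy example, not taken from the slides: the prior is $U(0, 1)$ and the likelihood $L(\theta) = e^{-\theta^2/(2\sigma^2)}$ is decreasing in $\theta$, so the prior constrained to $L(\theta) > l$ is uniform on an interval $(0, \theta_l)$. It uses $N$ live points, the deterministic $x_i = e^{-i/N}$, and stops once $e^{-j/N} < \varepsilon$; the values of $\sigma$, $N$ and $\varepsilon$ are arbitrary.

```r
set.seed(123)

# Toy problem (assumed): prior theta ~ U(0, 1), likelihood decreasing in theta,
# so the prior constrained to L(theta) > l is U(0, theta_l), sampled exactly.
sigma <- 0.1
lik <- function(theta) exp(-theta^2 / (2 * sigma^2))
Z_exact <- sigma * sqrt(2 * pi) * (pnorm(1 / sigma) - 0.5)

N <- 1000                        # number of live points
eps <- 1e-6                      # truncation level
j <- N * ceiling(-log(eps))      # number of iterations, e^{-j/N} < eps
live <- runif(N)                 # initial live points drawn from the prior
Z_hat <- 0
x_prev <- 1
for (i in seq_len(j)) {
  worst <- which.max(live)       # largest theta, hence lowest likelihood
  phi_i <- lik(live[worst])      # simulated value standing in for phi(x_i)
  x_i <- exp(-i / N)             # deterministic choice of the x_i's
  Z_hat <- Z_hat + (x_prev - x_i) * phi_i
  x_prev <- x_i
  live[worst] <- runif(1, 0, live[worst])  # exact draw from constrained prior
}

c(estimate = Z_hat, exact = Z_exact)
```

In realistic models the replacement draw is the hard step, since the constrained prior can rarely be sampled exactly.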

![On approximating Bayes factors

Nested sampling

Error rates

Approximation error

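$$\text{Error} = \hat Z - Z = \sum_{i=1}^{j} (x_{i-1} - x_i)\,\varphi_i - \int_0^1 \varphi(x)\,dx$$

$$= -\int_0^{\varepsilon} \varphi(x)\,dx$$

$$+ \left[\sum_{i=1}^{j} (x_{i-1} - x_i)\,\varphi(x_i) - \int_{\varepsilon}^1 \varphi(x)\,dx\right] \qquad \text{(Quadrature Error)}$$

$$+ \sum_{i=1}^{j} (x_{i-1} - x_i)\,\{\varphi_i - \varphi(x_i)\} \qquad \text{(Stochastic Error)}$$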

[Dominated by Monte Carlo!]](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-105-320.jpg)

![On approximating Bayes factors

Nested sampling

Error rates

A CLT for the Stochastic Error

The (dominating) stochastic error is $O_P(N^{-1/2})$:

$$N^{1/2}\,\{\text{Stochastic Error}\} \xrightarrow{\mathcal{D}} \mathcal{N}(0, V)$$

with

$$V = -\int_{s,t\in[\varepsilon,1]} s\,\varphi'(s)\,t\,\varphi'(t)\,\log(s \vee t)\,ds\,dt.$$

[Proof based on Donsker’s theorem]

The number of simulated points equals the number of iterations j, and is a multiple of N: if one stops at first iteration j such that $e^{-j/N} < \varepsilon$, then: $j = N\lceil -\log \varepsilon \rceil$.](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-106-320.jpg)

![On approximating Bayes factors

Nested sampling

Constraints

Sampling from constrained priors

Exact simulation from the constrained prior is intractable in most cases!

Skilling (2007) proposes to use MCMC, but:

this introduces a bias (stopping rule);

if the MCMC stationary distribution is the unconstrained prior, it gets more and more difficult to sample points such that L(θ) > l as l increases.

If implementable, then a slice sampler can be devised at the same cost!

[Thanks, Gareth!]](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-112-320.jpg)

![On approximating Bayes factors

Nested sampling

A mixture comparison

Comparison (cont’d)

Nested sampling gets less reliable as sample size increases

Most reliable approach is mixture Z3, although harmonic solution Z1 is close to Chib’s solution [taken as gold standard]

Monte Carlo method Z2 also produces poor approximations to Z

(Kernel φ used in Z2 is a t non-parametric kernel estimate with standard bandwidth estimation.)](https://image.slidesharecdn.com/warwox-100530021332-phpapp01/85/Approximating-Bayes-Factors-125-320.jpg)