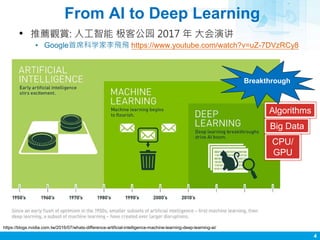

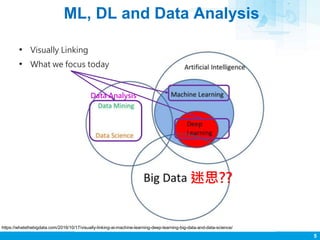

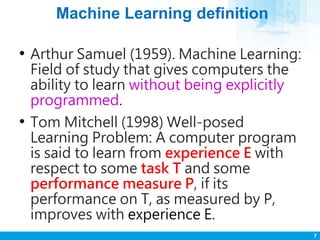

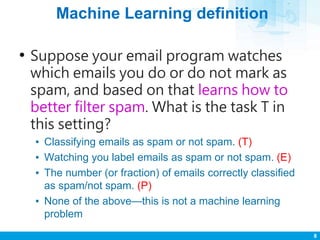

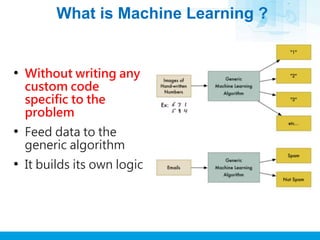

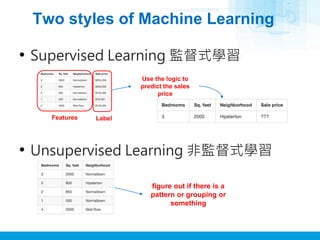

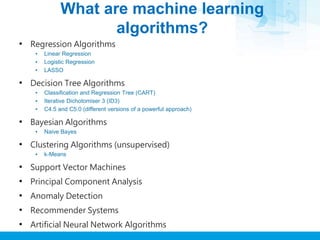

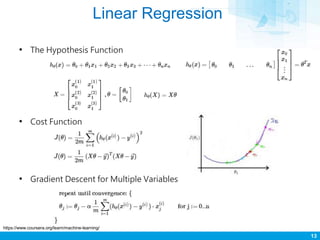

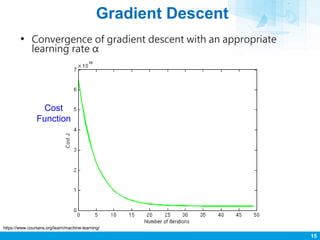

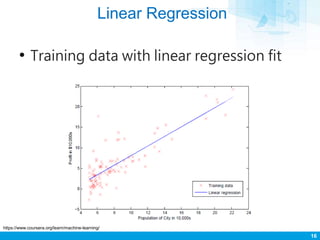

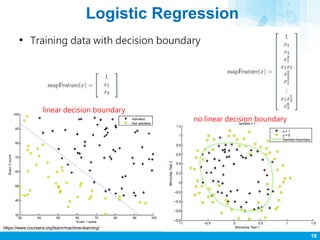

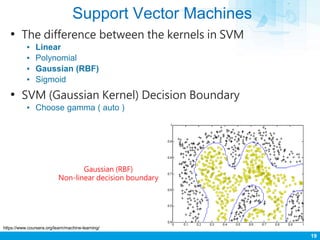

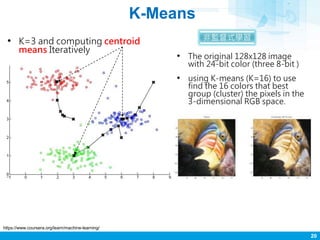

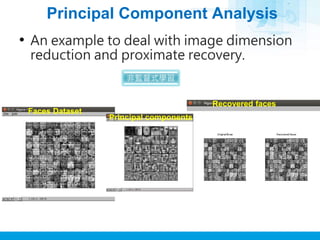

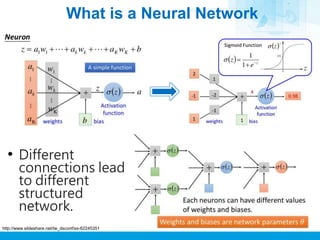

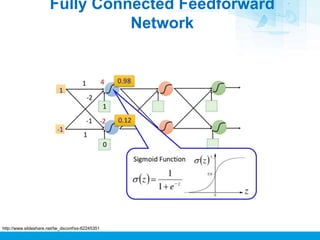

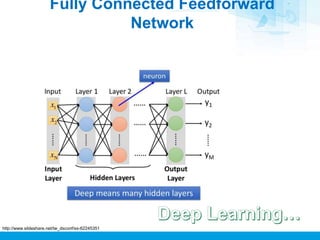

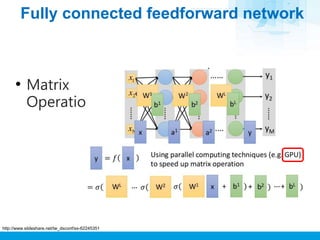

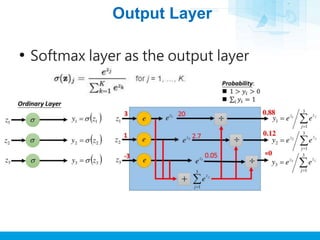

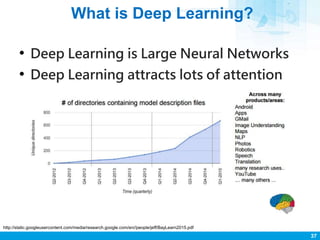

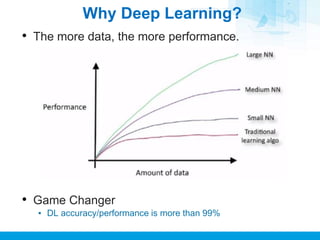

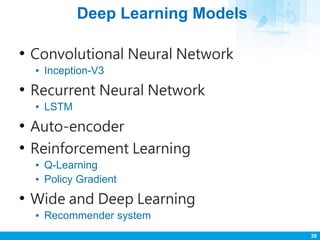

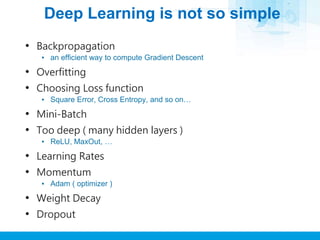

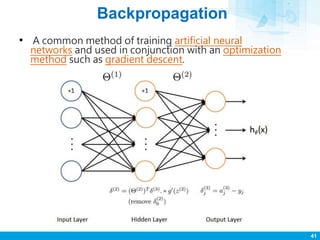

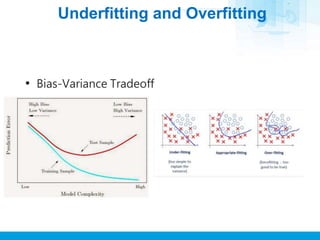

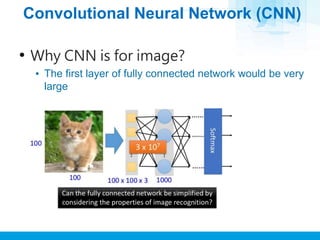

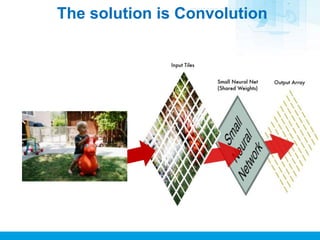

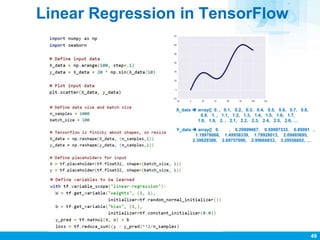

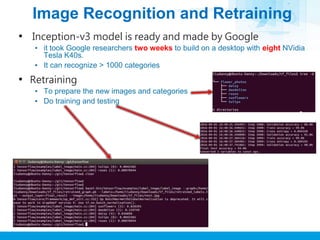

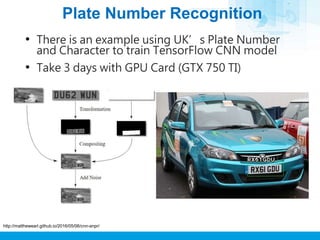

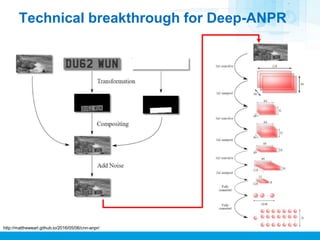

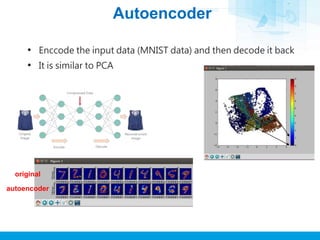

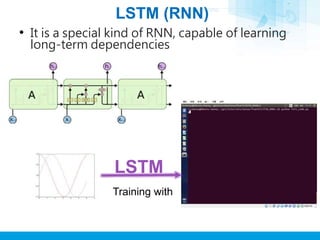

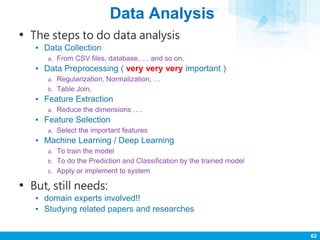

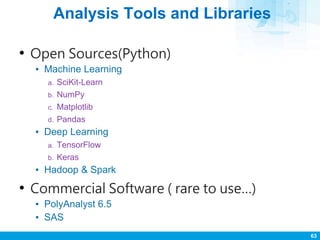

The document gives an introductory overview of machine learning, deep learning, and data analysis. It discusses key concepts such as supervised and unsupervised learning, and summarizes the speaker's experience of taking online courses and working through other study resources to learn machine learning techniques. It also briefly outlines examples of commonly used machine learning algorithms and neural network architectures.