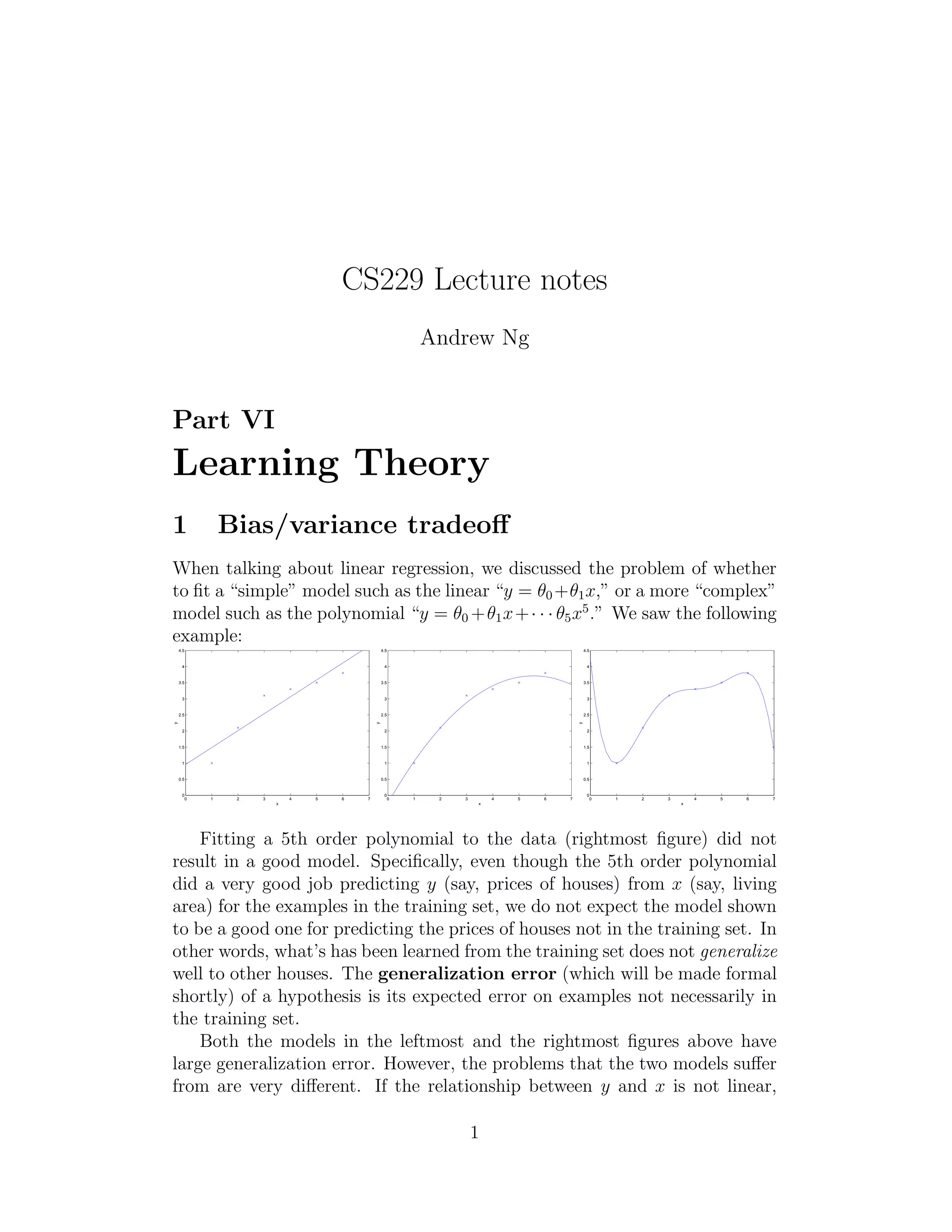

This document summarizes lecture notes from Andrew Ng on learning theory. It discusses the bias-variance tradeoff in machine learning models and introduces key concepts like generalization error, training error, and hypothesis classes. The document proves that if the hypothesis class H is finite, then with high probability the training error of all hypotheses in H will be close to their true generalization errors, provided the training set is sufficiently large. This uniform convergence guarantee allows relating the performance of the empirical risk minimization algorithm to the best possible hypothesis in H.

![6

have that

P(∃ h ∈ H.|(hi) − ˆ(hi)|

) = P(A1 ∪ · · · ∪ Ak)

≤

Xk

i=1

P(Ai)

≤

Xk

i=1

2 exp(−2

2m)

= 2k exp(−2

2m)

If we subtract both sides from 1, we find that

P(¬∃ h ∈ H.|(hi) − ˆ(hi)|

) = P(∀h ∈ H.|(hi) − ˆ(hi)| ≤

)

≥ 1 − 2k exp(−2

2m)

(The “¬” symbol means “not.”) So, with probability at least 1−2k exp(−2

2m),

we have that (h) will be within

of ˆ(h) for all h ∈ H. This is called a uni-form

convergence result, because this is a bound that holds simultaneously

for all (as opposed to just one) h ∈ H.

In the discussion above, what we did was, for particular values of m and

, give a bound on the probability that for some h ∈ H, |(h) − ˆ(h)|

.

There are three quantities of interest here: m,

, and the probability of error;

we can bound either one in terms of the other two.

For instance, we can ask the following question: Given

and some 0,

how large must m be before we can guarantee that with probability at least

1 − , training error will be within

of generalization error? By setting

= 2k exp(−2

2m) and solving for m, [you should convince yourself this is

the right thing to do!], we find that if

m ≥

1

2

2 log

2k

,

then with probability at least 1 − , we have that |(h) − ˆ(h)| ≤

for all

h ∈ H. (Equivalently, this shows that the probability that |(h) − ˆ(h)|

for some h ∈ H is at most .) This bound tells us how many training

examples we need in order make a guarantee. The training set size m that

a certain method or algorithm requires in order to achieve a certain level of

performance is also called the algorithm’s sample complexity.

The key property of the bound above is that the number of training

examples needed to make this guarantee is only logarithmic in k, the number

of hypotheses in H. This will be important later.](https://image.slidesharecdn.com/machinelearning4-140901163649-phpapp01/85/Machine-learning-4-6-320.jpg)

![7

Similarly, we can also hold m and fixed and solve for

in the previous

equation, and show [again, convince yourself that this is right!] that with

probability 1 − , we have that for all h ∈ H,

|ˆ(h) − (h)| ≤

r

1

2m

log

2k

.

Now, let’s assume that uniform convergence holds, i.e., that |(h)−ˆ(h)| ≤

for all h ∈ H. What can we prove about the generalization of our learning

algorithm that picked ˆh

= argminh∈H ˆ(h)?

Define h∗ = argminh∈H (h) to be the best possible hypothesis in H. Note

that h∗ is the best that we could possibly do given that we are using H, so

it makes sense to compare our performance to that of h∗. We have:

(ˆh

) ≤ ˆ(ˆh

) +

≤ ˆ(h∗) +

≤ (h∗) + 2

The first line used the fact that |(ˆh

)−ˆ(ˆh

)| ≤

(by our uniform convergence

ˆh

assumption). The second used the fact that was chosen to minimize (h),

ˆand hence (ˆ) ≤ (h) ˆfor all h, and in particular (ˆ) ≤ (hˆ∗). The third

ˆh

ˆh

line used the uniform convergence assumption again, to show that ˆ(h∗) ≤ (h∗) +

. So, what we’ve shown is the following: If uniform convergence

occurs, then the generalization error of ˆh

is at most 2

worse than the best

possible hypothesis in H!

Let’s put all this together into a theorem.

Theorem. Let |H| = k, and let any m, be fixed. Then with probability at

least 1 − , we have that

(ˆh

) ≤

min

h∈H

(h)

+ 2

r

1

2m

log

2k

.

This is proved by letting

equal the √· term, using our previous argu-

ment that uniform convergence occurs with probability at least 1 − , and

then noting that uniform convergence implies (h) is at most 2

higher than

(h∗) = minh∈H (h) (as we showed previously).

This also quantifies what we were saying previously saying about the

bias/variance tradeoff in model selection. Specifically, suppose we have some

hypothesis class H, and are considering switching to some much larger hy-

pothesis class H′ ⊇ H. If we switch to H′, then the first term minh (h)](https://image.slidesharecdn.com/machinelearning4-140901163649-phpapp01/85/Machine-learning-4-7-320.jpg)