This document provides an overview of machine learning concepts including:

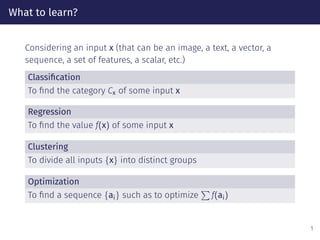

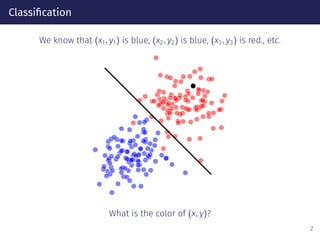

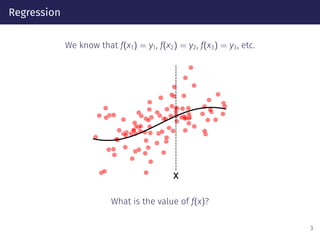

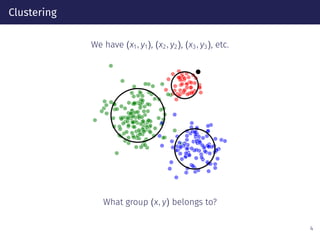

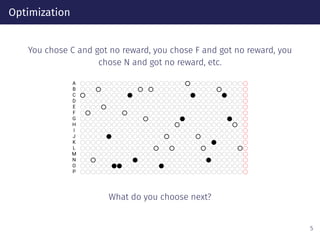

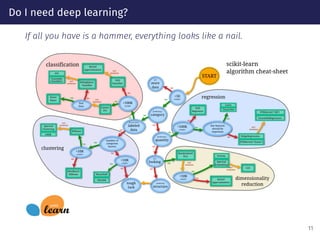

1) The main types of machine learning problems are classification, regression, clustering, and optimization.

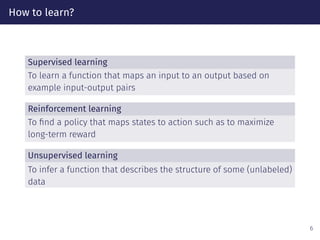

2) Supervised, unsupervised, and reinforcement learning are the main approaches to teach a machine.

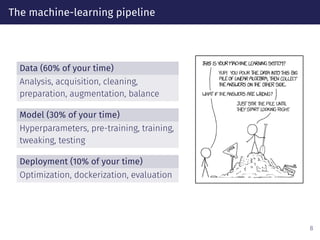

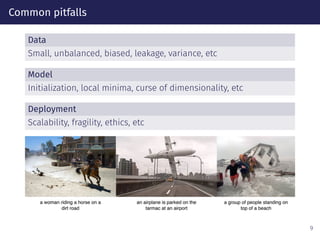

3) Building machine learning models involves data collection/preparation, model training/testing, and deployment of the trained model.