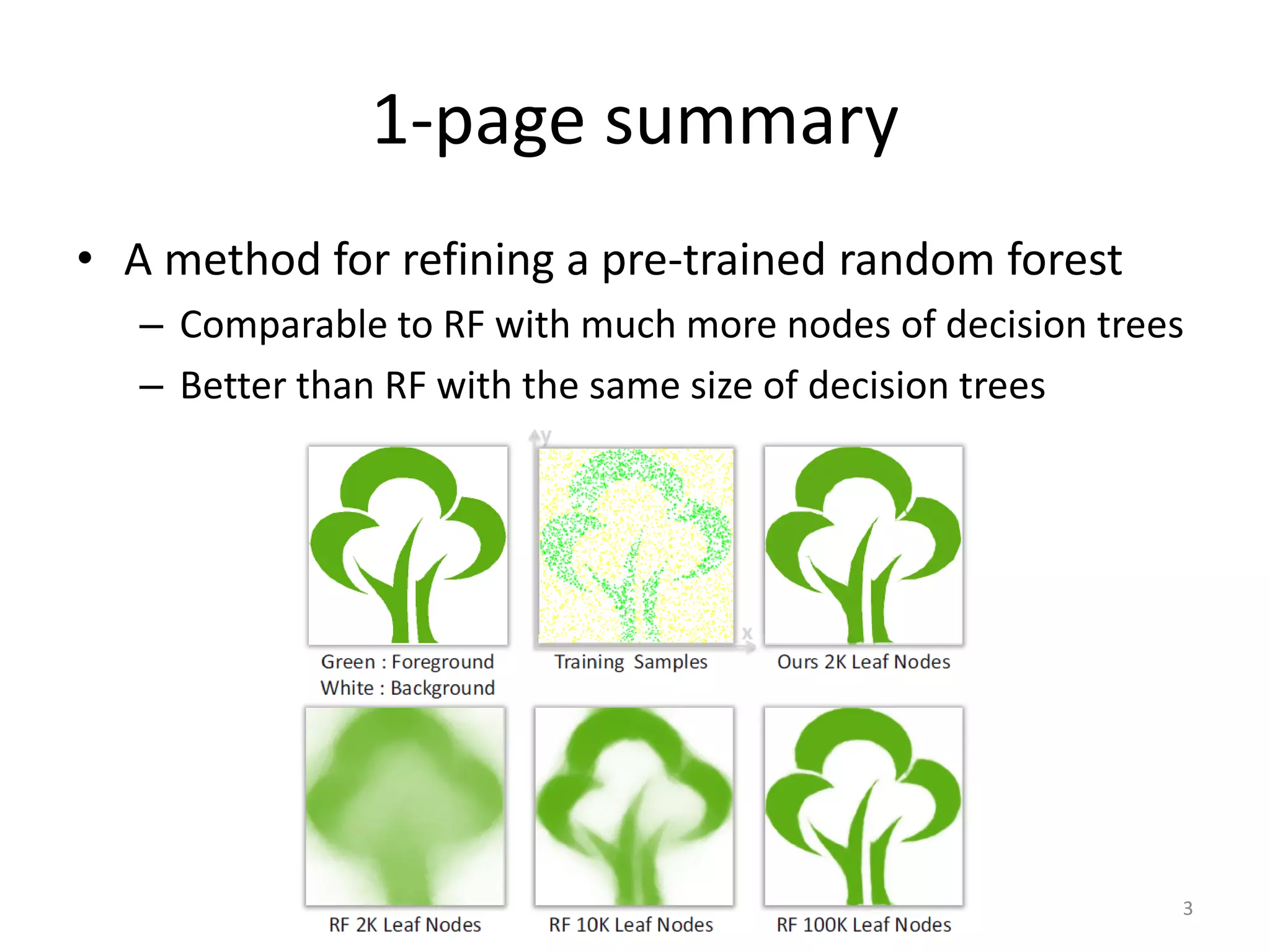

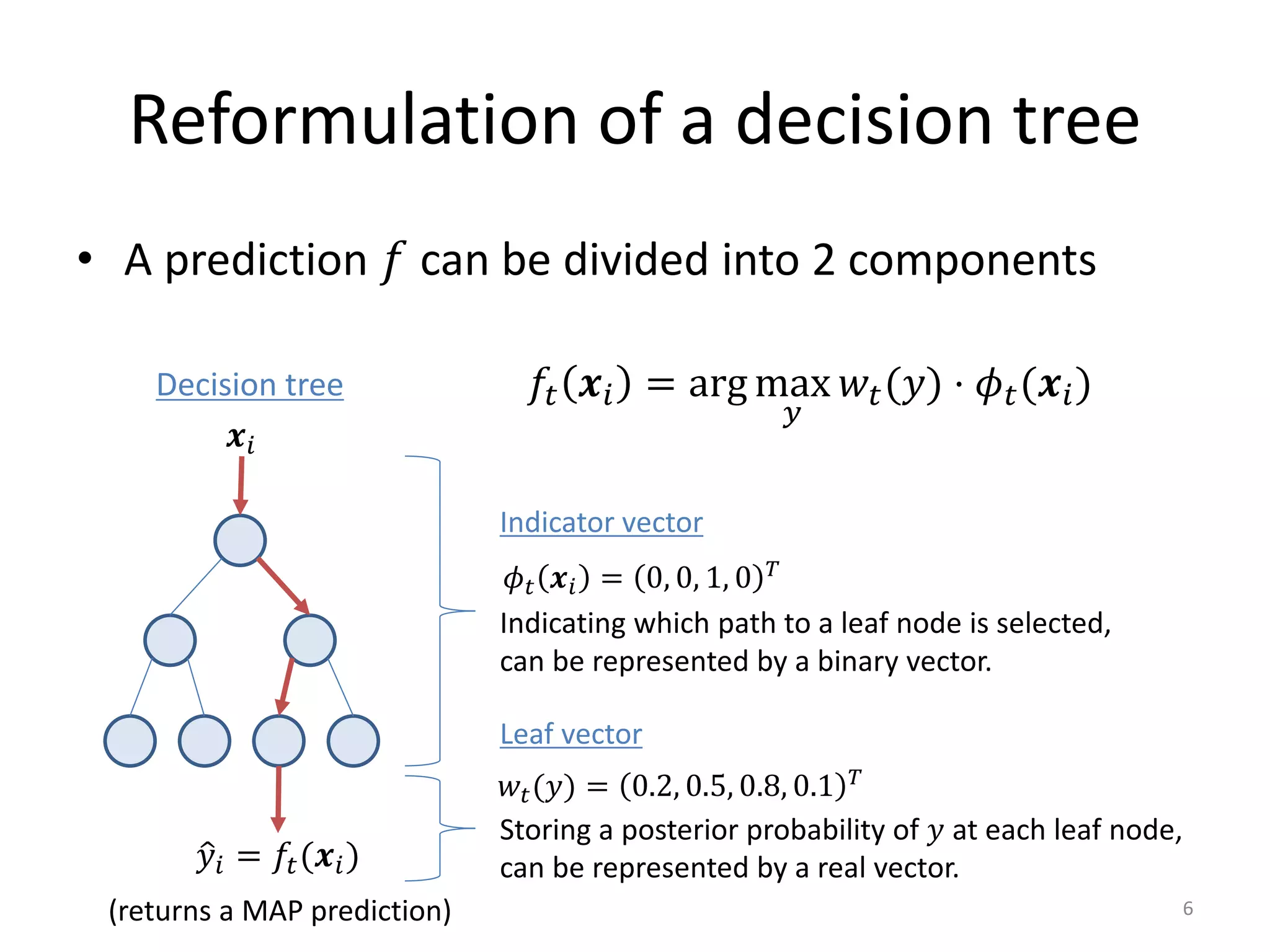

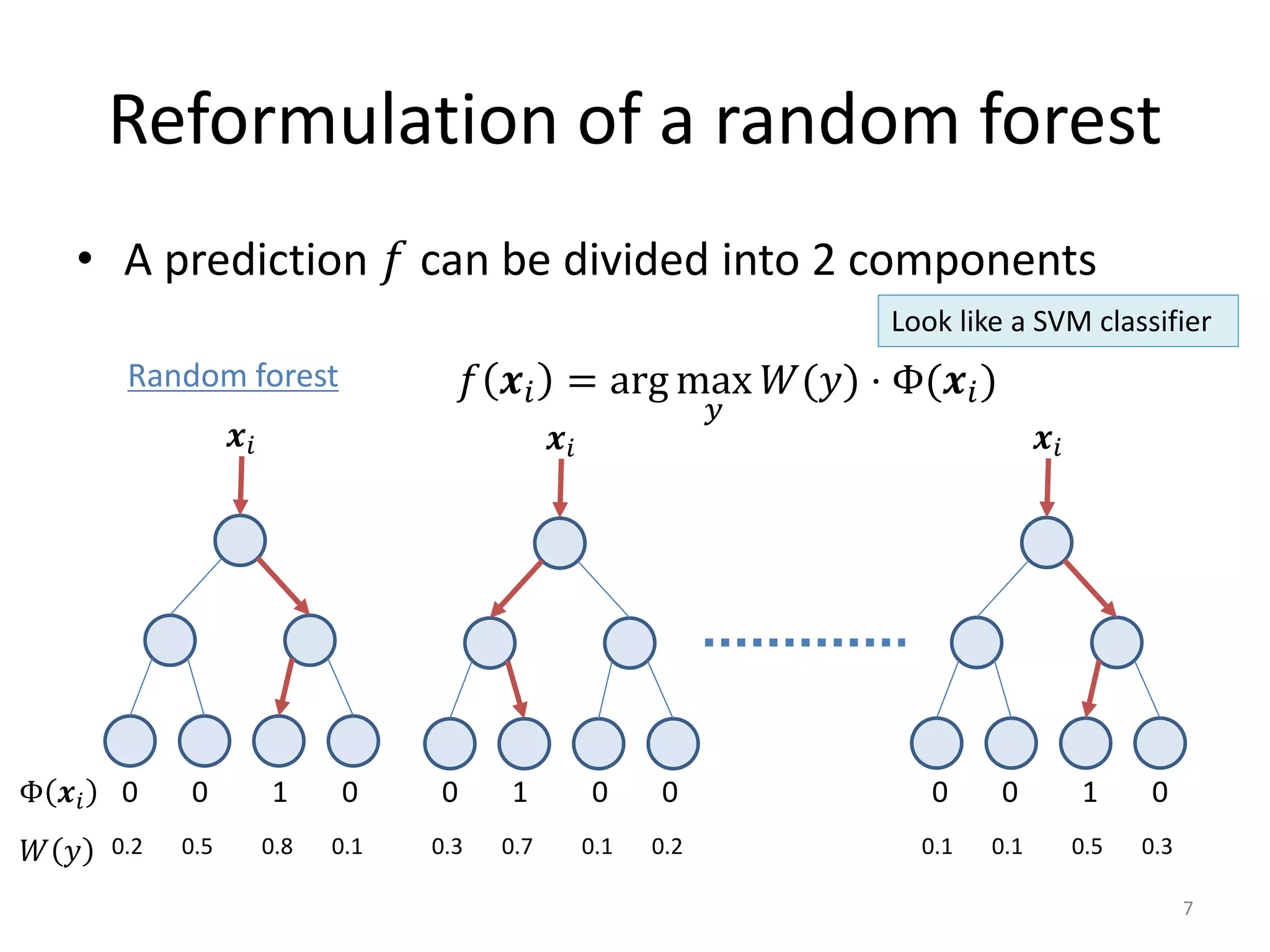

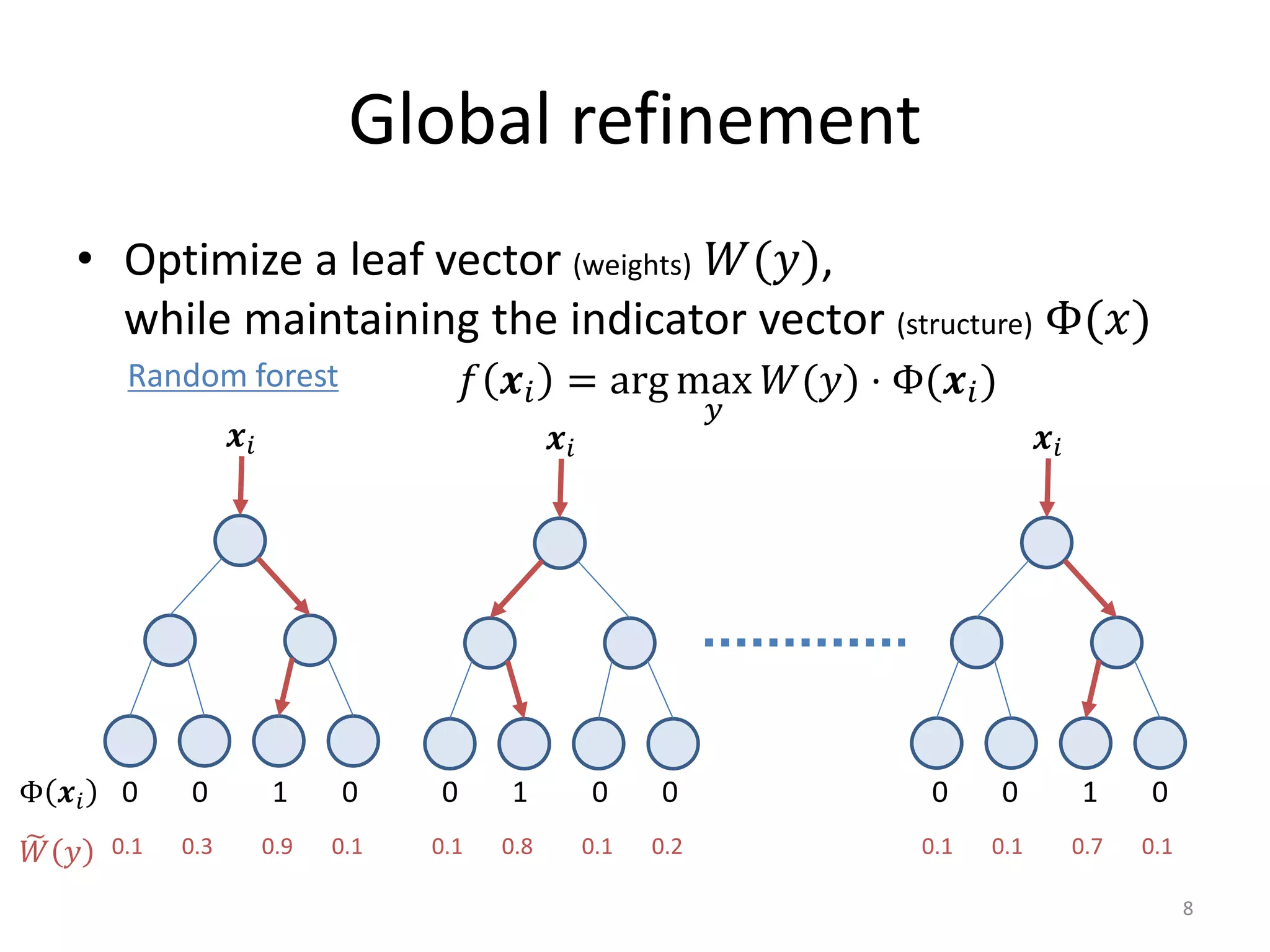

- A method is presented for refining a pre-trained random forest by optimizing the leaf weights while keeping the tree structures fixed.

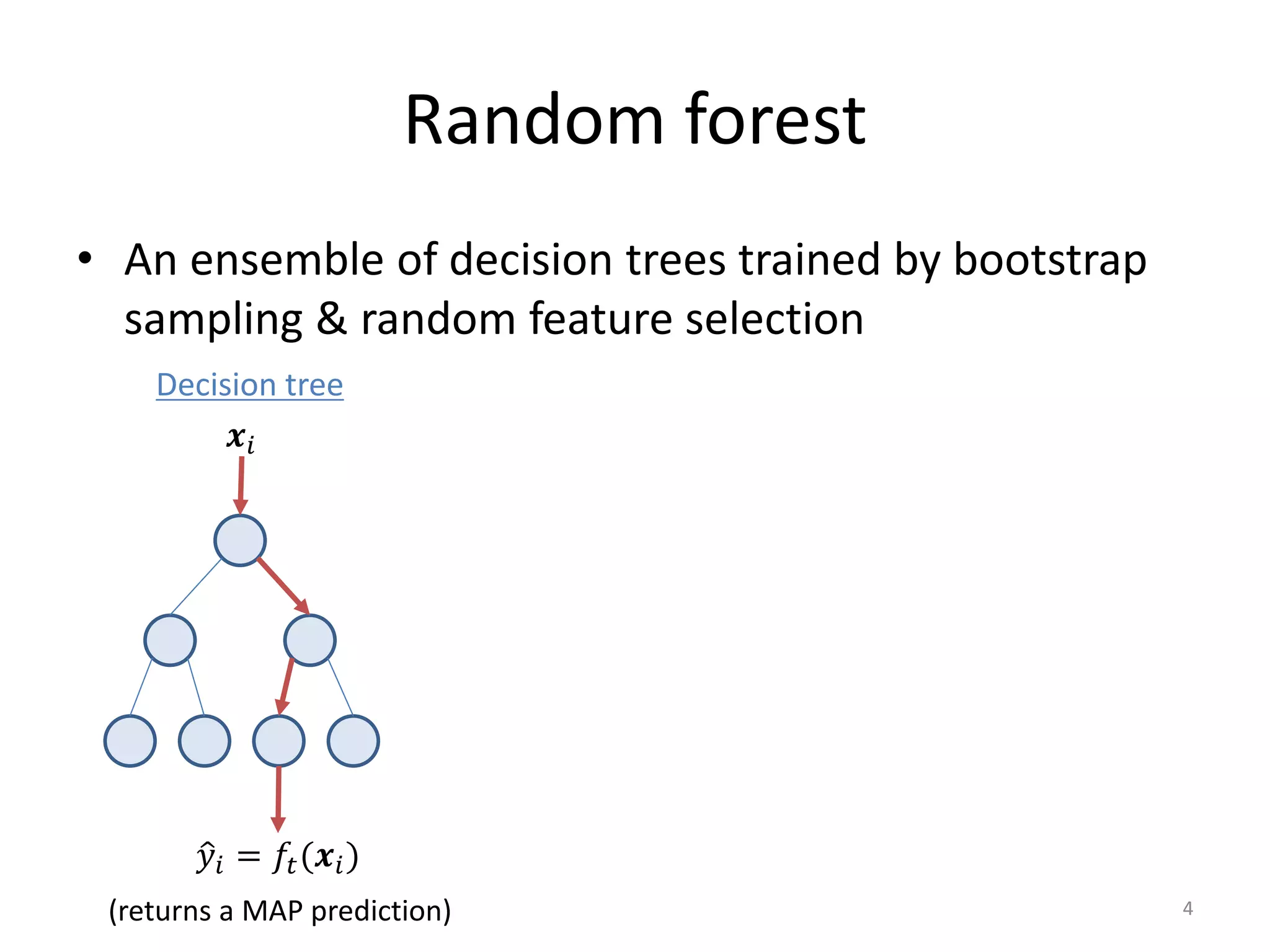

- This reformulates the random forest as a linear classification/regression problem in which each sample is represented by a sparse indicator vector of the leaves it reaches.

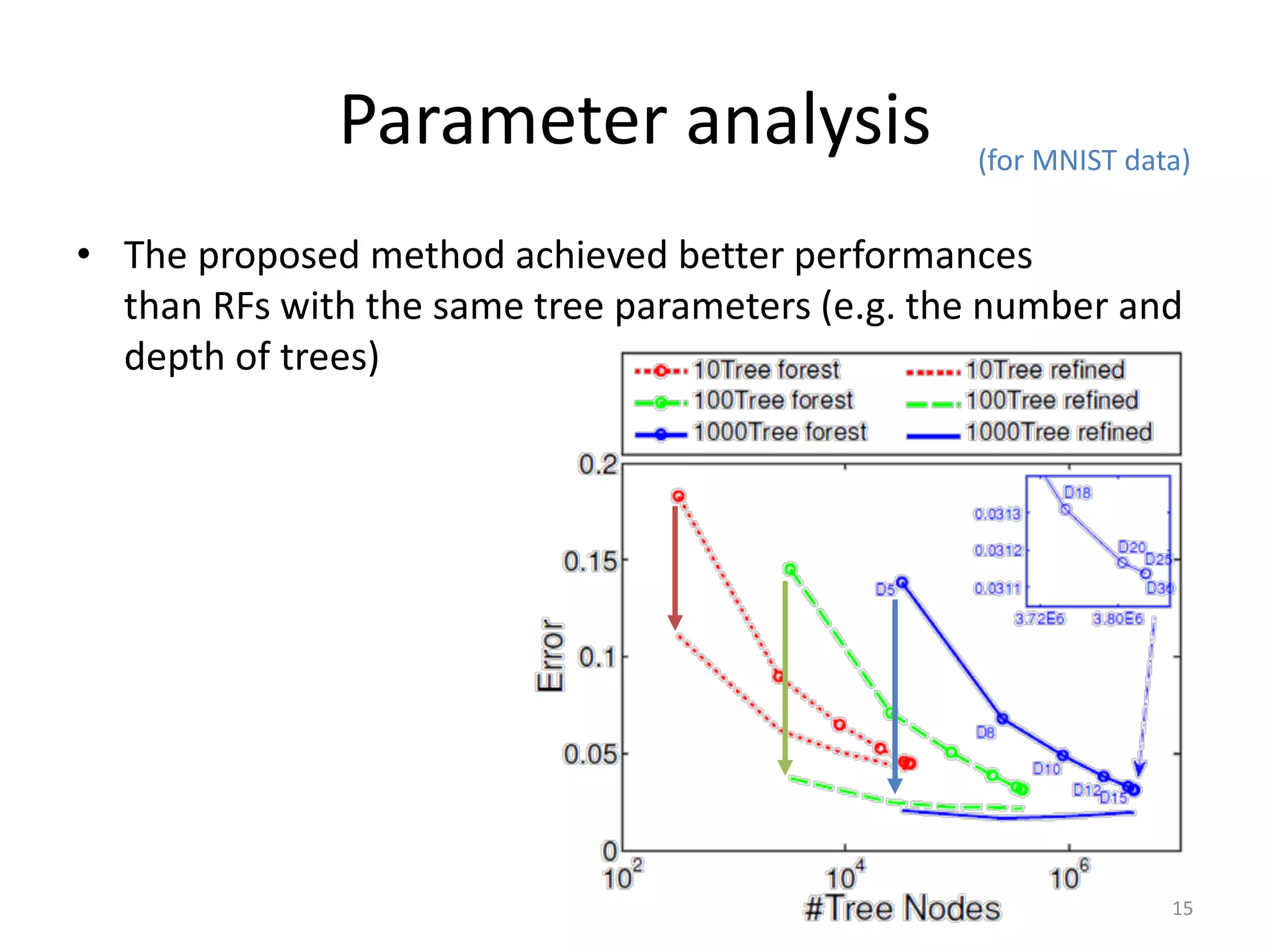

- The optimization can be performed efficiently, and the refined forest achieves accuracy comparable to or better than the original forest while using significantly fewer trees/nodes.

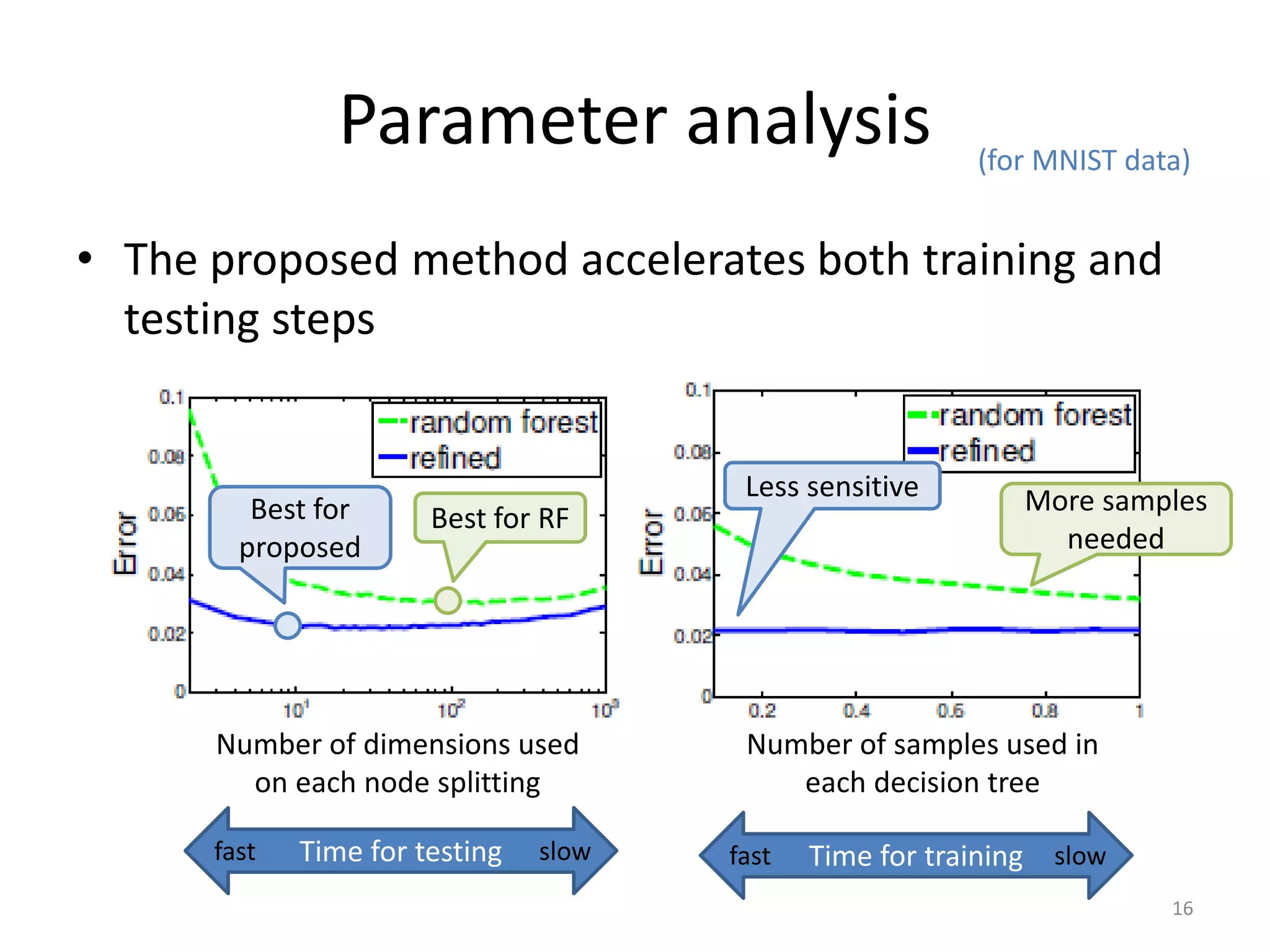

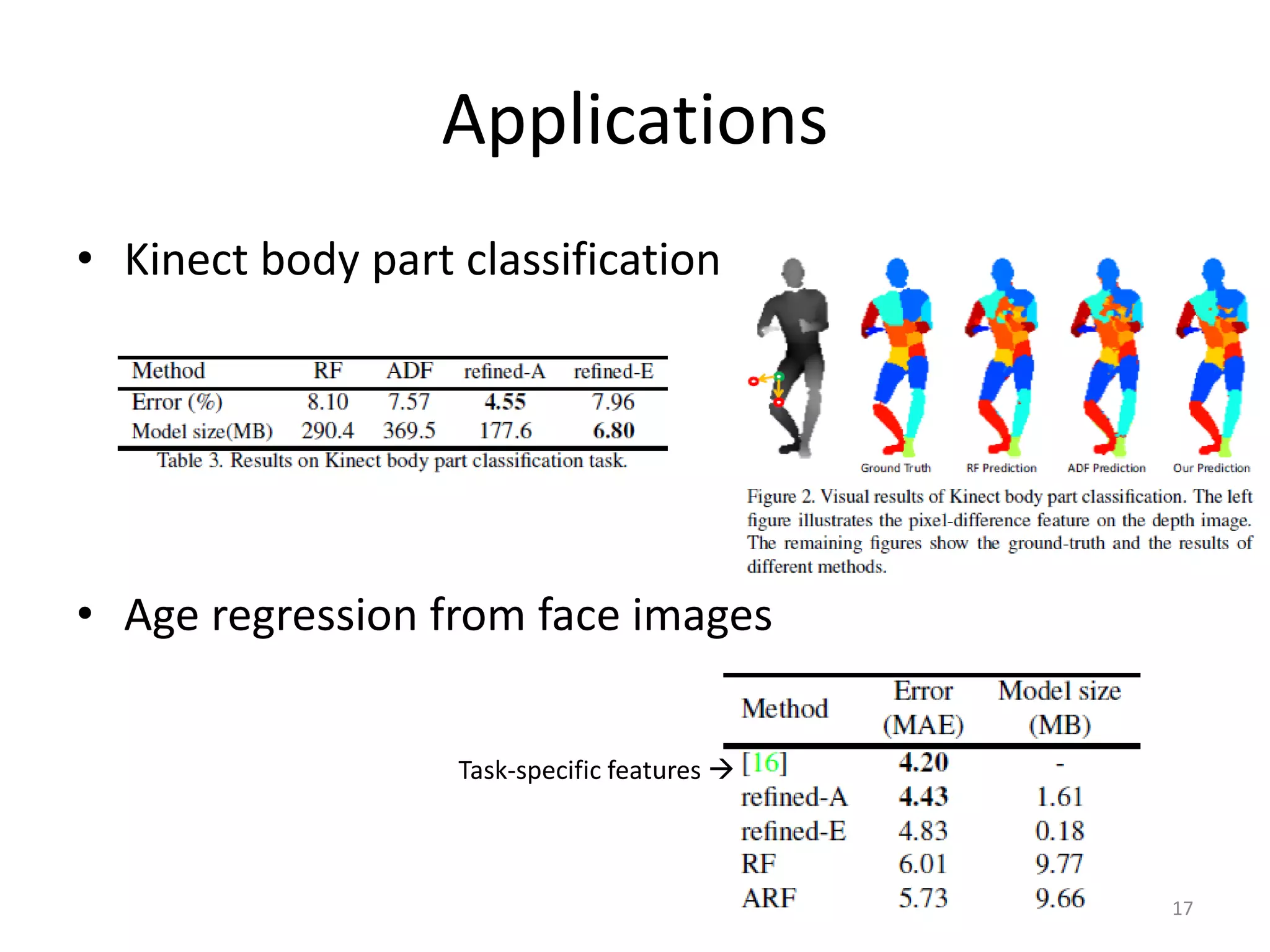

- Experiments on classification and regression datasets demonstrate that the proposed method outperforms other random forest techniques while accelerating training and testing.
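A sample's sparse indicator vector can be built from the leaf it reaches in each tree. The sketch below is a minimal, hypothetical illustration in plain Python (`leaf_indicator` is not from the paper); it assumes each tree's leaves are numbered 0, 1, ... and that the per-tree blocks are concatenated in tree order. With a trained scikit-learn forest, `RandomForestClassifier.apply` returns each tree's leaf node id, which would first have to be renumbered to consecutive leaf indices.

```python
from typing import List, Sequence, Tuple


def leaf_indicator(
    leaf_ids: Sequence[int], leaves_per_tree: Sequence[int]
) -> Tuple[List[int], List[int]]:
    """Return Phi(x) for one sample as (dense 0/1 vector, sparse nonzero positions).

    leaf_ids[t] is the 0-based index of the leaf the sample reaches in tree t;
    leaves_per_tree[t] is the number of leaves in tree t (assumed layout).
    """
    if len(leaf_ids) != len(leaves_per_tree):
        raise ValueError("expected exactly one leaf index per tree")
    dense = [0] * sum(leaves_per_tree)
    active: List[int] = []
    offset = 0  # start of tree t's block of leaves inside Phi(x)
    for leaf, n_leaves in zip(leaf_ids, leaves_per_tree):
        if not 0 <= leaf < n_leaves:
            raise ValueError(f"leaf {leaf} is out of range for a tree with {n_leaves} leaves")
        dense[offset + leaf] = 1  # exactly one active leaf per tree
        active.append(offset + leaf)
        offset += n_leaves
    return dense, active


if __name__ == "__main__":
    # Three trees with four leaves each; the sample lands in leaves 2, 1 and 2.
    dense, active = leaf_indicator([2, 1, 2], [4, 4, 4])
    print(dense)   # [0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 1, 0]
    print(active)  # [2, 5, 10]
```

Only one entry per tree is nonzero, so storing the `active` positions (T integers for T trees) is much cheaper than the dense vector, whose length equals the total number of leaves in the forest.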

![Global refinement

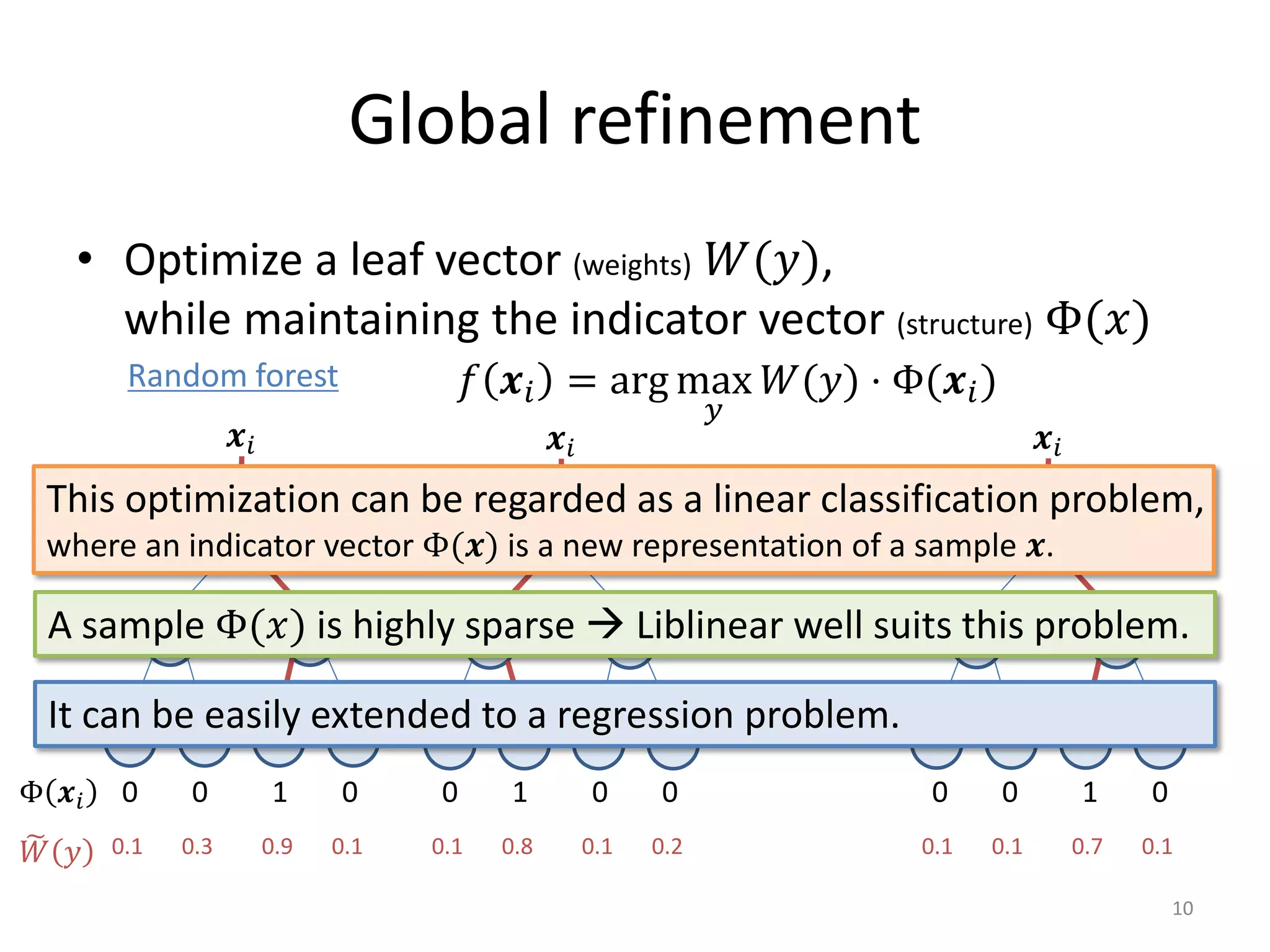

• Optimize a leaf vector (weights) 𝑊𝑊(𝑦𝑦),

while maintaining the indicator vector (structure) Φ(𝑥𝑥)

𝒙𝒙𝑖𝑖 𝒙𝒙𝑖𝑖 𝒙𝒙𝑖𝑖

0 0 1 0 0 1 0 0 0 0 1 0Φ 𝒙𝒙𝑖𝑖

0.1 0.3 0.9 0.1 0.1 0.8 0.1 0.2 0.1 0.1 0.7 0.1�𝑊𝑊 𝑦𝑦

Random forest 𝑓𝑓 𝒙𝒙𝑖𝑖 = arg max

𝑦𝑦

𝑊𝑊(𝑦𝑦) ⋅ Φ(𝒙𝒙𝑖𝑖)

This optimization can be regarded as a linear classification problem,

where an indicator vector Φ(𝒙𝒙) is a new representation of a sample 𝒙𝒙.

[Note] In standard random forest, the trees are independently optimized.

This optimization effectively utilizes complementary information among trees.

9](https://image.slidesharecdn.com/bayes150724forpub-150725092255-lva1-app6891/75/CVPR2015-reading-Global-refinement-of-random-forest-9-2048.jpg)
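The scoring rule and the idea of re-fitting only the leaf weights can be sketched in plain Python. Assumptions: $\Phi(\mathbf{x})$ is stored as the list of its nonzero positions (0-based), `W` holds one weight row per class, and the weights are re-fit with a multiclass perceptron. The perceptron is a stand-in chosen for brevity; the slides do not state which linear solver or loss the paper uses, and `score`, `predict`, and `refine_perceptron` are hypothetical names.

```python
from typing import List, Sequence


def score(w_y: Sequence[float], active: Sequence[int]) -> float:
    """W(y) . Phi(x); Phi(x) is 0/1, so this is the sum of the weights of the active leaves."""
    return sum(w_y[j] for j in active)


def predict(W: Sequence[Sequence[float]], active: Sequence[int]) -> int:
    """f(x) = argmax_y W(y) . Phi(x); ties go to the lowest class index."""
    return max(range(len(W)), key=lambda y: score(W[y], active))


def refine_perceptron(
    W: Sequence[Sequence[float]],
    samples: Sequence[Sequence[int]],
    labels: Sequence[int],
    epochs: int = 20,
) -> List[List[float]]:
    """Re-fit the leaf weights of all trees jointly (multiclass perceptron as a stand-in solver).

    The structure is fixed: each sample's active leaf positions never change; only the weights do.
    """
    weights = [list(row) for row in W]  # copy so the caller's weights are left untouched
    for _ in range(epochs):
        mistakes = 0
        for active, y in zip(samples, labels):
            y_hat = predict(weights, active)
            if y_hat != y:
                mistakes += 1
                for j in active:
                    weights[y][j] += 1.0      # raise the true class's weights on these leaves
                    weights[y_hat][j] -= 1.0  # lower the wrongly predicted class's weights
        if mistakes == 0:  # every training sample is classified correctly
            break
    return weights


if __name__ == "__main__":
    # Leaf weights of one class from the slide; Phi(x_i) is active at positions 2, 5, 10.
    w_slide = [0.1, 0.3, 0.9, 0.1, 0.1, 0.8, 0.1, 0.2, 0.1, 0.1, 0.7, 0.1]
    print(score(w_slide, [2, 5, 10]))  # approximately 2.4 (0.9 + 0.8 + 0.7)

    # Hypothetical toy data: 3 trees x 4 leaves, 2 classes.
    samples = [[2, 5, 10], [0, 7, 9], [2, 7, 9], [0, 5, 10]]
    labels = [0, 1, 1, 0]
    W = refine_perceptron([[0.0] * 12 for _ in range(2)], samples, labels)
    print([predict(W, s) for s in samples])  # [0, 1, 1, 0]
```

Because each sample activates exactly one leaf per tree, every dot product is a sum of T weights, which keeps each update cheap even when the forest has many leaves. For regression, the same setup would use a single weight vector w with f(x) = w · Φ(x), fitted by a linear regression method instead of a classifier.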

![Experimental results

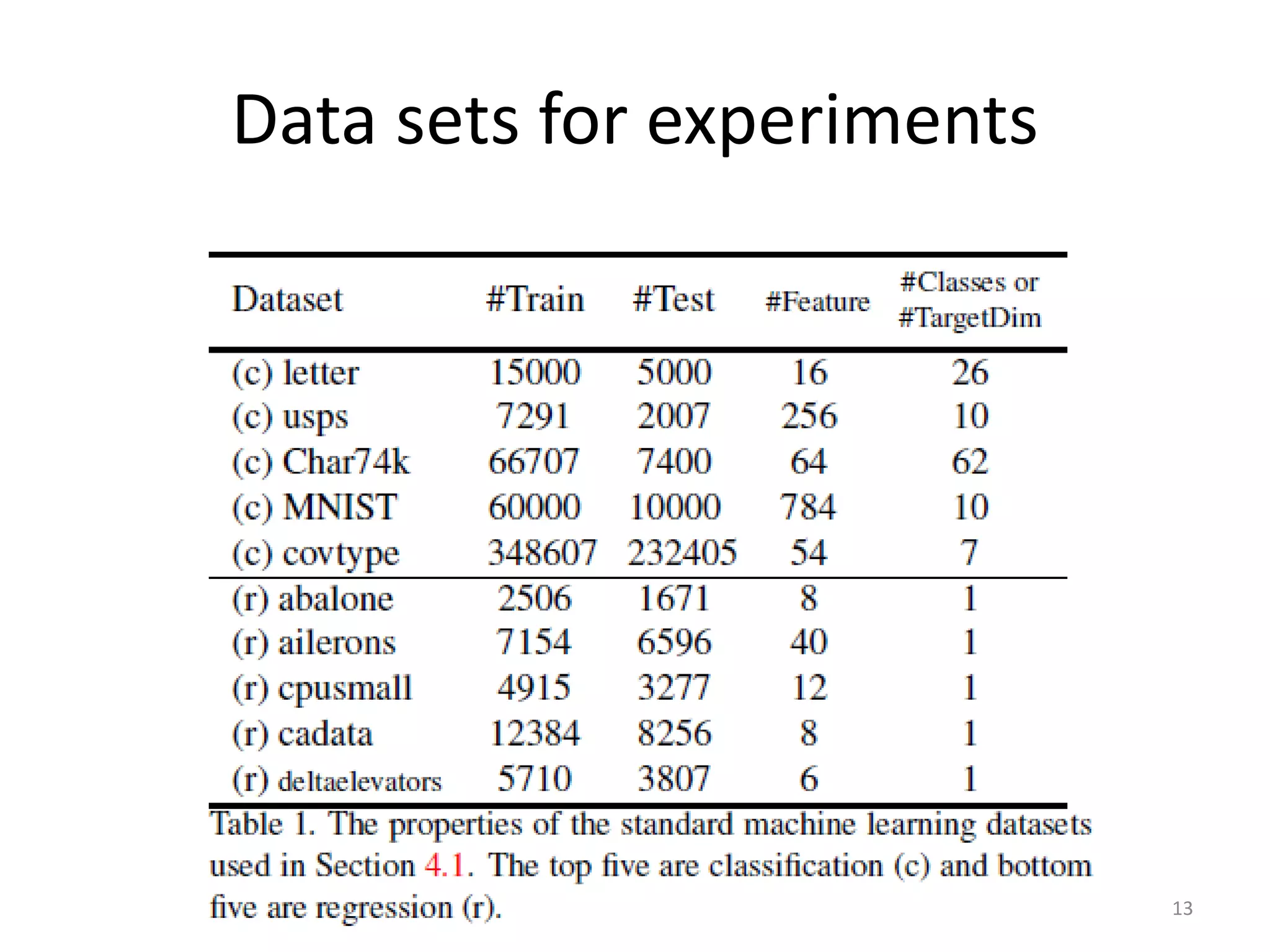

• ADF/ARF - alternating decision (regression) forest [Schulter+ ICCV13]

• Refined-A - Proposed method with the “accuracy” criterion

• Refined-E - Proposed method with “over-pruning”

(Accuracy is comparable to the original RF, but the size is much smaller.)

• Metrics - Error rate for classification, RMSE for regression.

• # trees = 100, max. depth = 10, 15 or 25 depending on the size of the training data.

• 60% for training, 40% for testing. 14](https://image.slidesharecdn.com/bayes150724forpub-150725092255-lva1-app6891/75/CVPR2015-reading-Global-refinement-of-random-forest-14-2048.jpg)
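The evaluation protocol on the slide (60/40 split, error rate for classification, RMSE for regression) amounts to a few lines of code. This is a hypothetical sketch: the slide does not say how the split is drawn, so a seeded random shuffle is assumed, and `split_60_40`, `error_rate`, and `rmse` are illustrative names.

```python
import math
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_60_40(items: Sequence[T], seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle (assumed: seeded random order) and split into 60% training / 40% testing."""
    idx = list(range(len(items)))
    random.Random(seed).shuffle(idx)
    n_train = (len(items) * 6) // 10  # integer arithmetic avoids float rounding surprises
    return [items[i] for i in idx[:n_train]], [items[i] for i in idx[n_train:]]


def error_rate(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of misclassified samples (classification metric)."""
    return sum(t != p for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root-mean-square error (regression metric)."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


if __name__ == "__main__":
    train, test = split_60_40(list(range(10)))
    print(len(train), len(test))                     # 6 4
    print(error_rate([0, 1, 1, 0], [0, 1, 0, 0]))    # 0.25
    print(rmse([1.0, 3.0], [1.0, 1.0]))              # square root of 2
```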