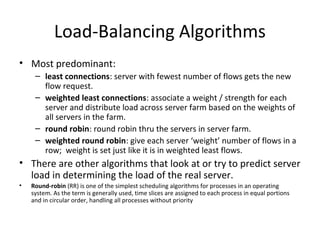

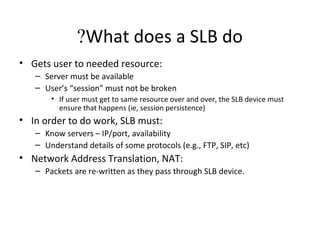

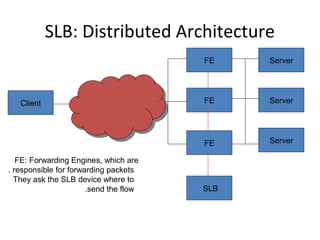

Load balancing is a networking method for distributing workloads across multiple resources to optimize utilization, maximize throughput, and minimize response time. Common algorithms include round robin and least connections. A server load balancer (SLB) can keep a user's session on the same server (session persistence) and can base its forwarding decisions on factors such as the contents of packet headers. An SLB can be deployed in a traditional or a distributed architecture; in either case, it manages the packet flow between clients and servers.
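The two algorithms named above, along with one simple form of header-based session persistence, can be sketched in a few lines of Python. This is a minimal illustration, not how any particular SLB product works: the `Server` class, both balancer classes, and the `sticky_pick` helper are hypothetical names, and hashing the client's source IP is only one assumed way to keep a session on the same server.

```python
import zlib
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Server:
    """Hypothetical backend server tracked by the balancer."""
    name: str
    active_connections: int = 0


class RoundRobinBalancer:
    """Hands out servers in a fixed rotating order."""

    def __init__(self, servers):
        self._ring = cycle(list(servers))

    def pick(self):
        return next(self._ring)


class LeastConnectionsBalancer:
    """Sends each new connection to the server with the fewest active ones."""

    def __init__(self, servers):
        self._servers = list(servers)

    def pick(self):
        # min() returns the first server among those tied for the lowest count.
        server = min(self._servers, key=lambda s: s.active_connections)
        server.active_connections += 1
        return server

    def release(self, server):
        # Call when a connection closes so the count stays accurate.
        server.active_connections -= 1


def sticky_pick(client_ip, servers):
    """Assumed persistence scheme: hash a header field (the source IP)
    so the same client always maps to the same server."""
    index = zlib.crc32(client_ip.encode("utf-8")) % len(servers)
    return servers[index]
```

Round robin ignores current load, so it suits pools of similar servers handling similar requests. Least connections tracks state per server and adapts when some connections last much longer than others. The hash-based helper keeps a client on one server only while the server list stays unchanged.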