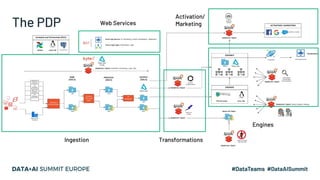

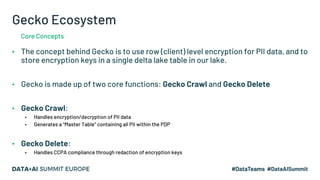

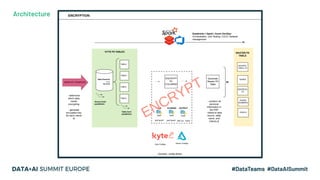

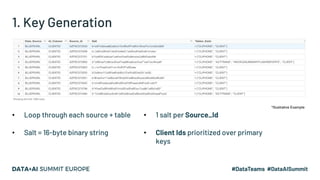

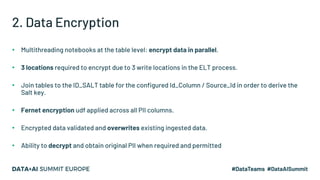

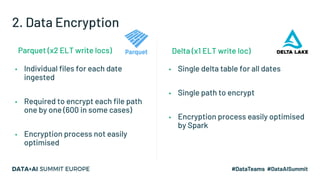

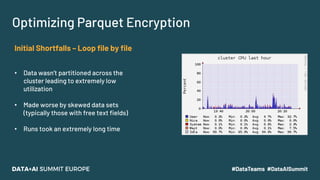

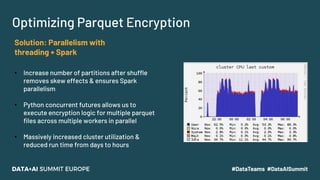

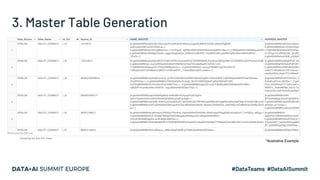

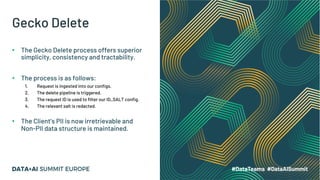

The document discusses how Apache Spark and Delta Lake can be leveraged for efficient data encryption at scale, particularly in support of CCPA (California Consumer Privacy Act) compliance within Mars Petcare's data platform. It introduces 'Gecko', an ecosystem that applies row-level encryption to personally identifiable information (PII), and outlines its core functions for managing and deleting PII securely and efficiently. The presentation highlights data-processing optimizations that improve security, speed, and data integrity, and it also describes planned future enhancements to the system.

![Gecko Delete: illustrative example](https://image.slidesharecdn.com/43haleharrington-201124203817/85/Leveraging-Apache-Spark-and-Delta-Lake-for-Efficient-Data-Encryption-at-Scale-28-320.jpg)

Gecko Delete (illustrative example)

- Vault: Petcare Data Platform
- Business Unit: Mars Petcare (Veterinary)
- Right to Forget request: Banfield ID 7586241

1. The right-to-forget request comes from the Business Unit via the OneTrust system.
2. The client is identified within the Vault.
3. The client's salt is redacted from the ID table: `7586241 [GECKO REDACTED]`.

- Without the salt, the encryption key cannot be generated.
- We only have to remove a single record from a single table, yet we achieve both of the following:
  - From this point onwards, the client's PII can never be retrieved from the Vault.
  - All of the non-PII data stays exactly as it was in the lake, safely maintaining its overall integrity and value.
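The salt-redaction step follows a pattern commonly called crypto-shredding. You destroy the material needed to rebuild a key instead of deleting every encrypted copy of the data. The sketch below illustrates that idea under stated assumptions. It is not Gecko's actual implementation.

- The per-client key is assumed to be derived with HMAC-SHA256 from a hypothetical `MASTER_SECRET` and the client's salt.
- `Vault` is a hypothetical in-memory stand-in for the ID, PII and non-PII tables.
- The XOR keystream is a toy substitute for a real authenticated cipher such as AES-GCM.
- The PII string is made-up sample data.

```python
from __future__ import annotations

import hashlib
import hmac
import os

# Hypothetical master secret (assumption, not from the presentation); in practice
# it would be held in a secrets manager, never in the lake itself.
MASTER_SECRET = b"hypothetical-master-secret"


def derive_key(salt: bytes) -> bytes:
    """Derive a per-client 256-bit key from the master secret and the client's salt."""
    return hmac.new(MASTER_SECRET, salt, hashlib.sha256).digest()


def apply_keystream(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """XOR data with an HMAC-SHA256 counter-mode keystream (encrypts and decrypts).

    Toy stand-in for illustration only; a real system would use an
    authenticated cipher such as AES-GCM.
    """
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        block = nonce + counter.to_bytes(8, "big")
        stream += hmac.new(key, block, hashlib.sha256).digest()
        counter += 1
    return bytes(d ^ s for d, s in zip(data, stream))


class Vault:
    """Hypothetical in-memory stand-in for the ID, PII and non-PII tables."""

    def __init__(self) -> None:
        self.id_table: dict[int, bytes] = {}  # client_id -> salt
        self.pii_table: dict[int, tuple[bytes, bytes]] = {}  # client_id -> (nonce, ciphertext)
        self.non_pii: dict[int, dict] = {}  # client_id -> non-PII attributes, stored in the clear

    def add_client(self, client_id: int, pii: str, non_pii: dict) -> None:
        salt = os.urandom(16)
        nonce = os.urandom(16)
        self.id_table[client_id] = salt
        ciphertext = apply_keystream(derive_key(salt), nonce, pii.encode("utf-8"))
        self.pii_table[client_id] = (nonce, ciphertext)
        self.non_pii[client_id] = non_pii

    def read_pii(self, client_id: int) -> str | None:
        salt = self.id_table.get(client_id)
        if salt is None:
            return None  # salt redacted: the key can no longer be derived
        nonce, ciphertext = self.pii_table[client_id]
        return apply_keystream(derive_key(salt), nonce, ciphertext).decode("utf-8")

    def forget(self, client_id: int) -> None:
        """Right to forget: remove a single record (the salt) from a single table."""
        self.id_table.pop(client_id, None)


vault = Vault()
vault.add_client(7586241, "Jane Doe, jane@example.com", {"species": "dog", "visits": 3})
print(vault.read_pii(7586241))  # Jane Doe, jane@example.com
vault.forget(7586241)
print(vault.read_pii(7586241))  # None
print(vault.non_pii[7586241])   # {'species': 'dog', 'visits': 3}
```

Only the row in `id_table` is touched. The ciphertext and the non-PII record stay where they are, but the key can no longer be rebuilt, so the PII is unrecoverable. A production design would also need to address how the master secret is protected. It would also need to handle older copies of the salt, for example in earlier Delta table versions.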