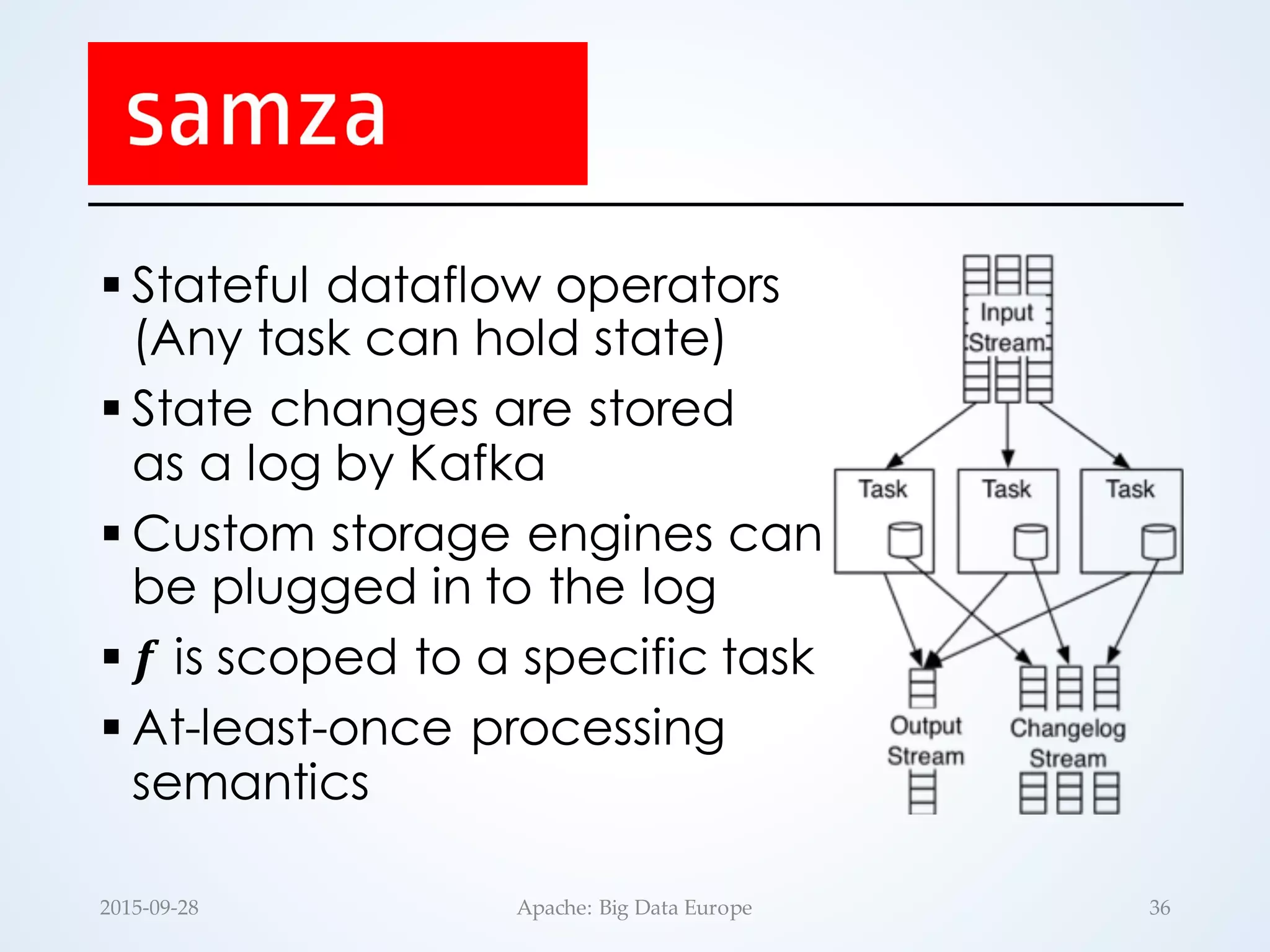

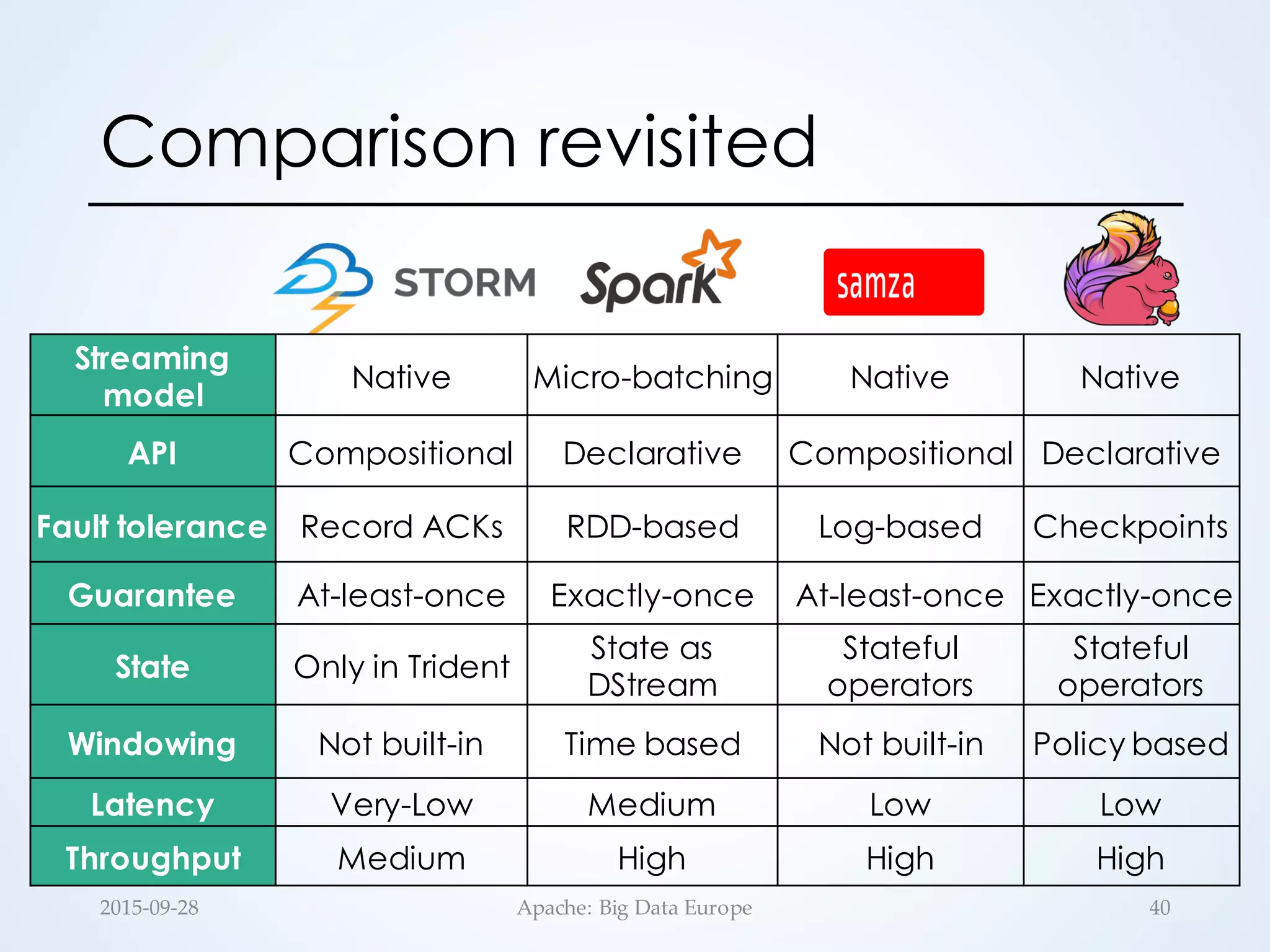

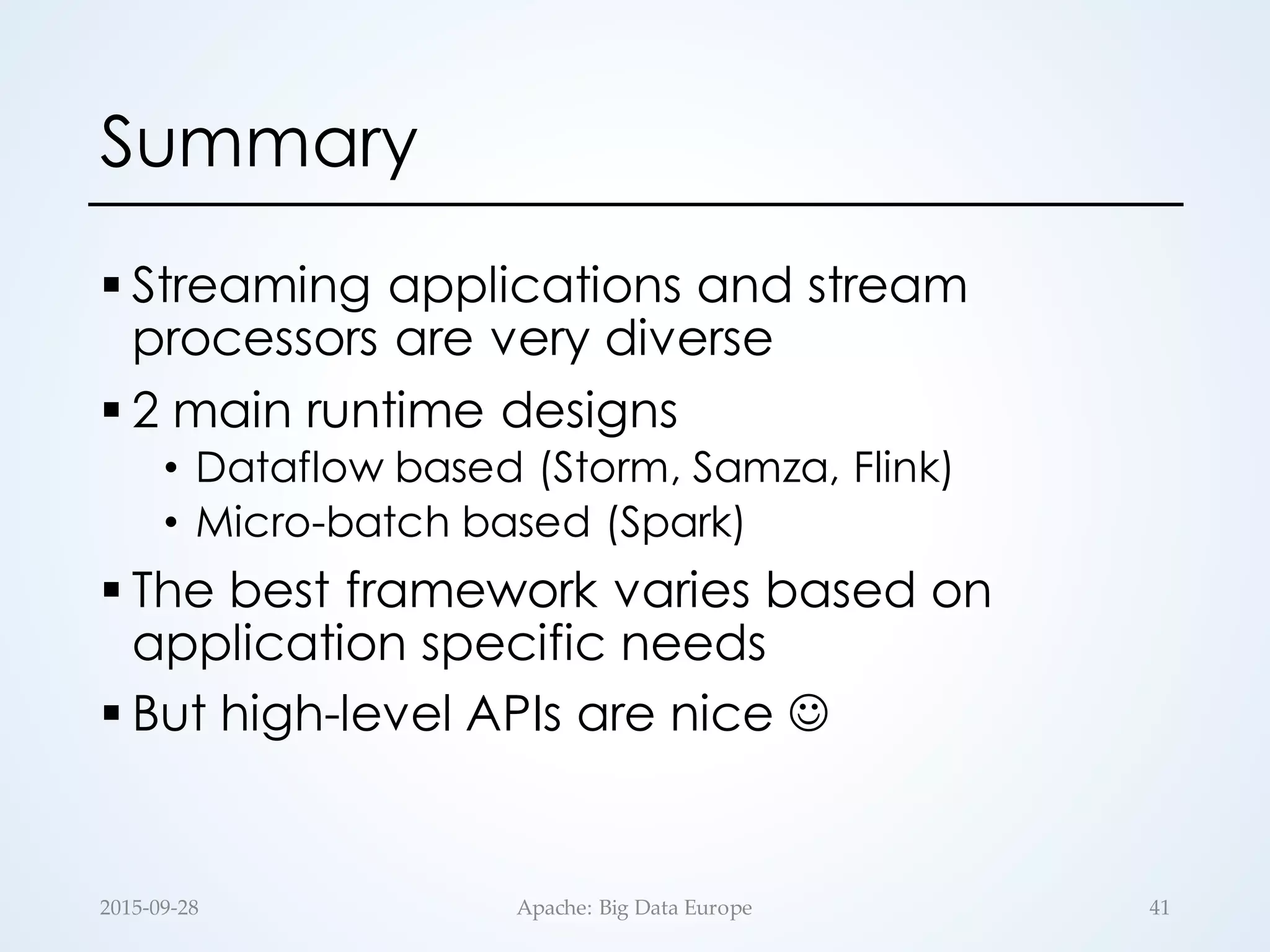

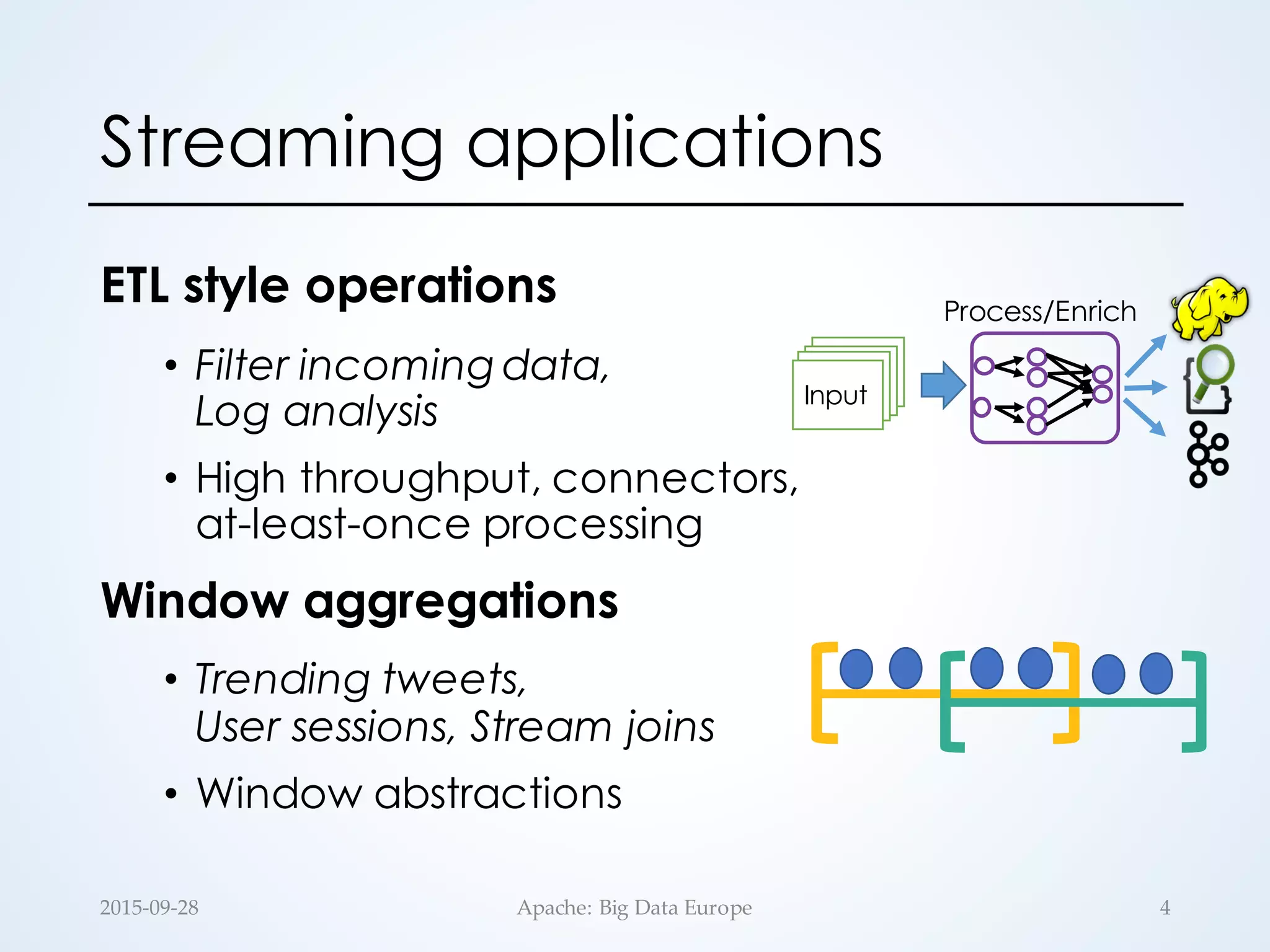

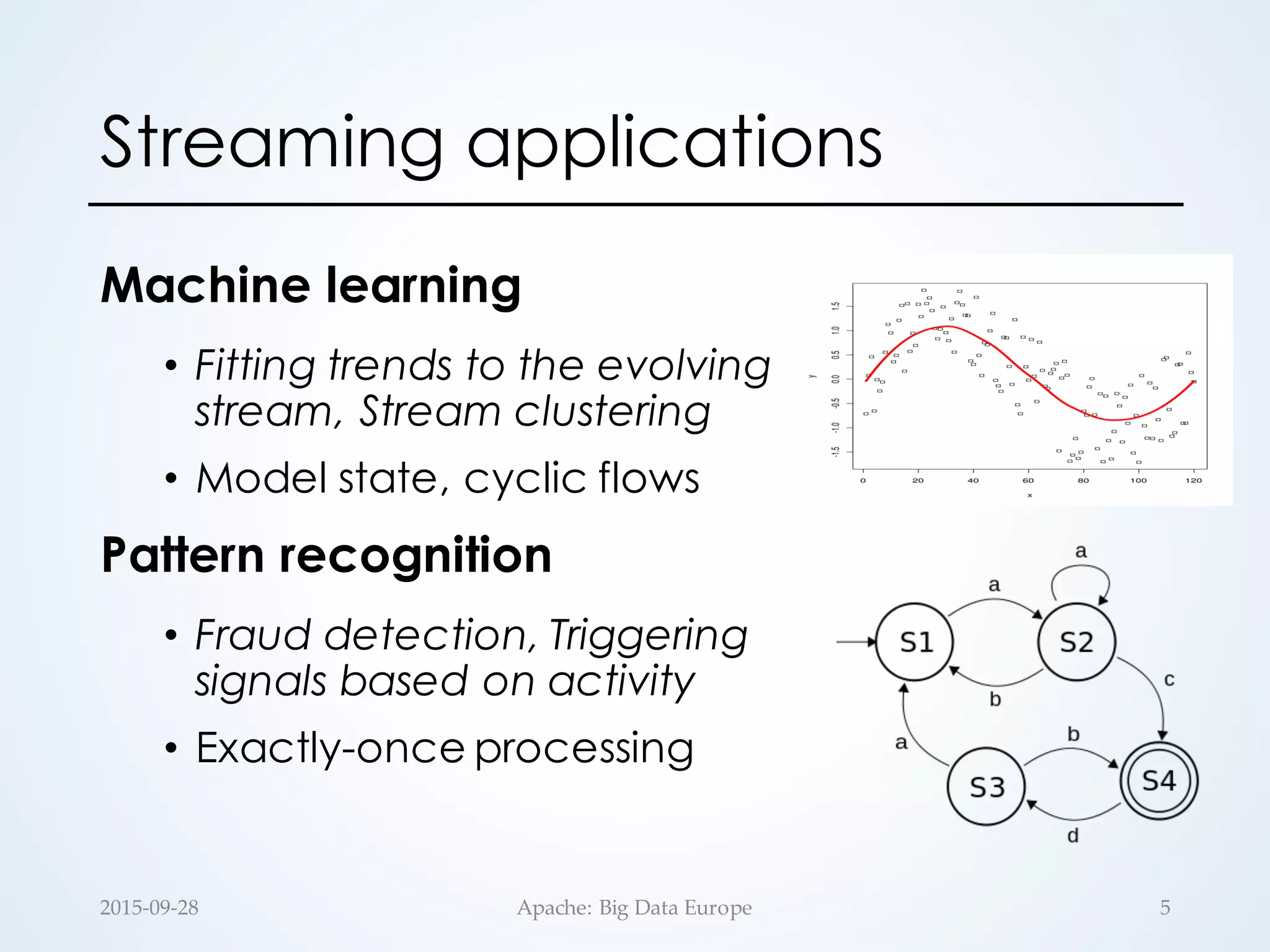

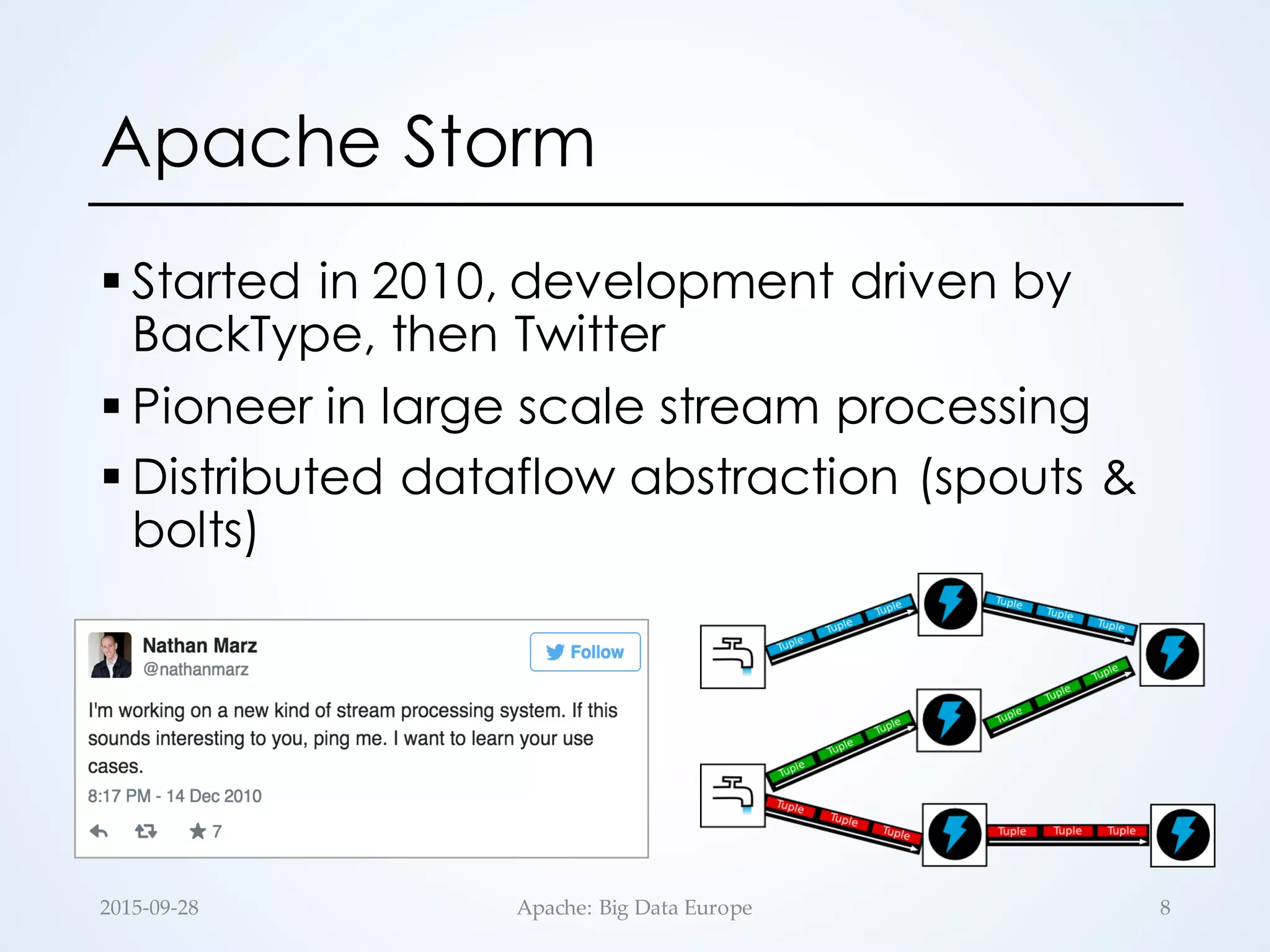

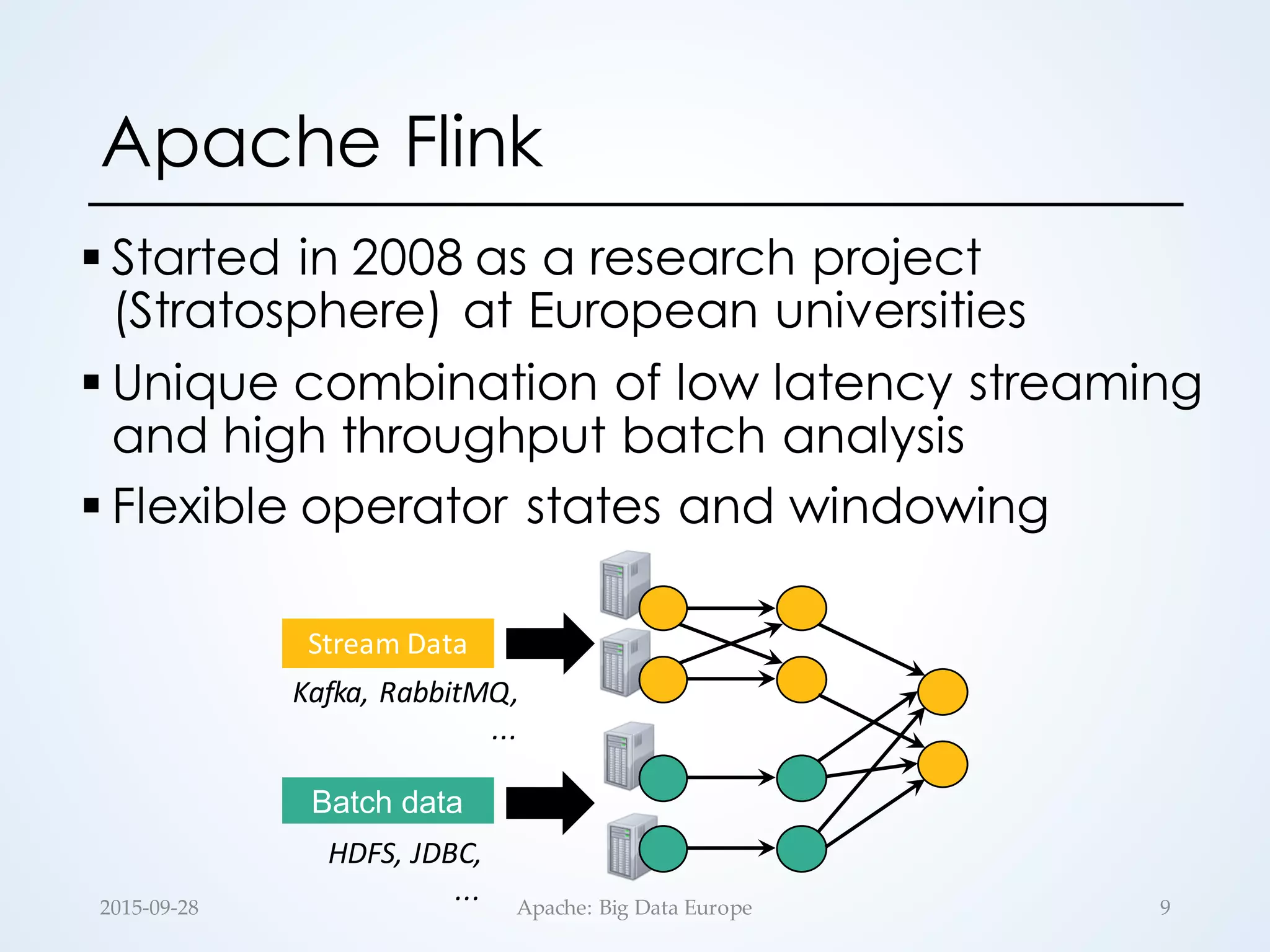

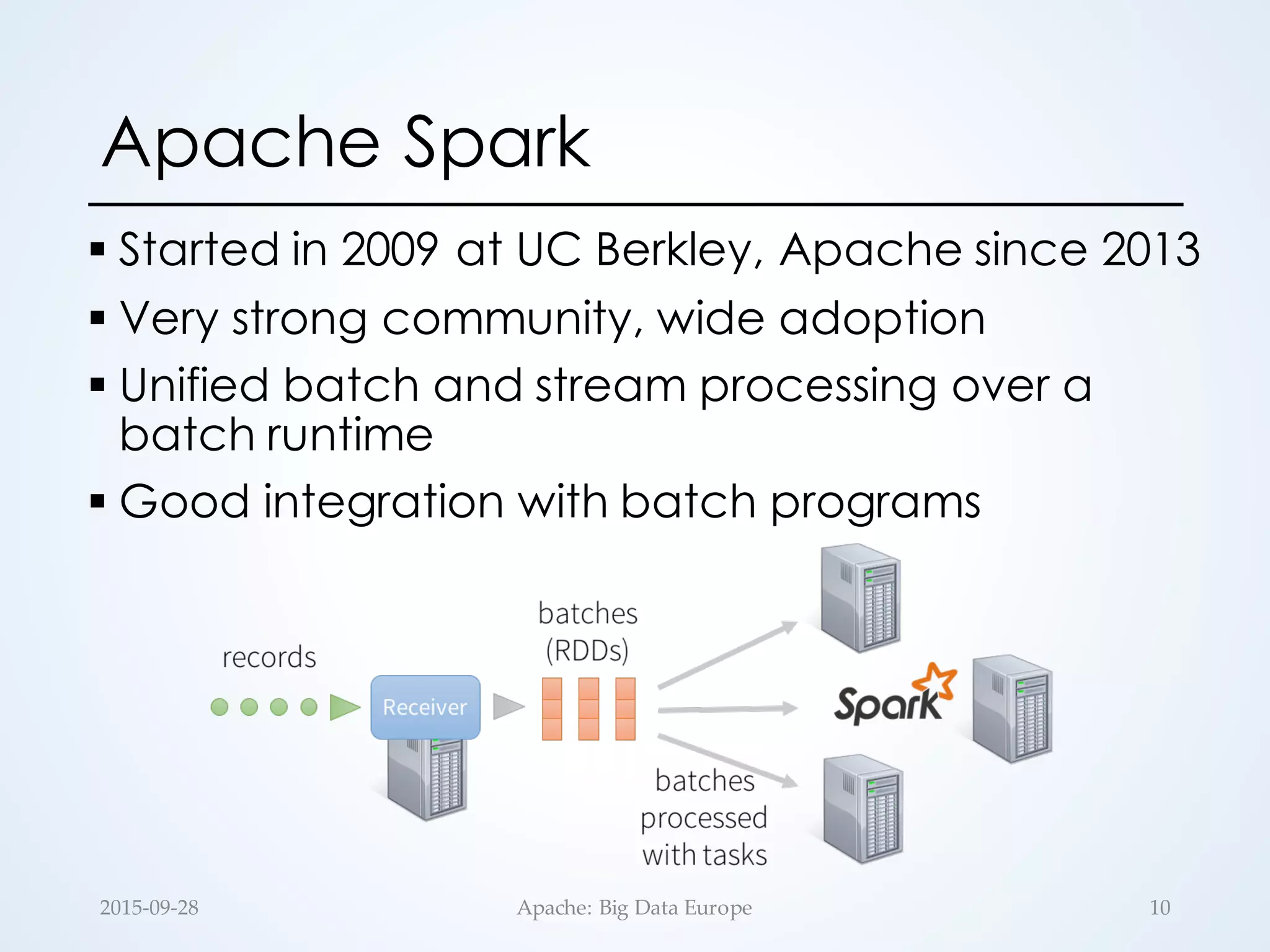

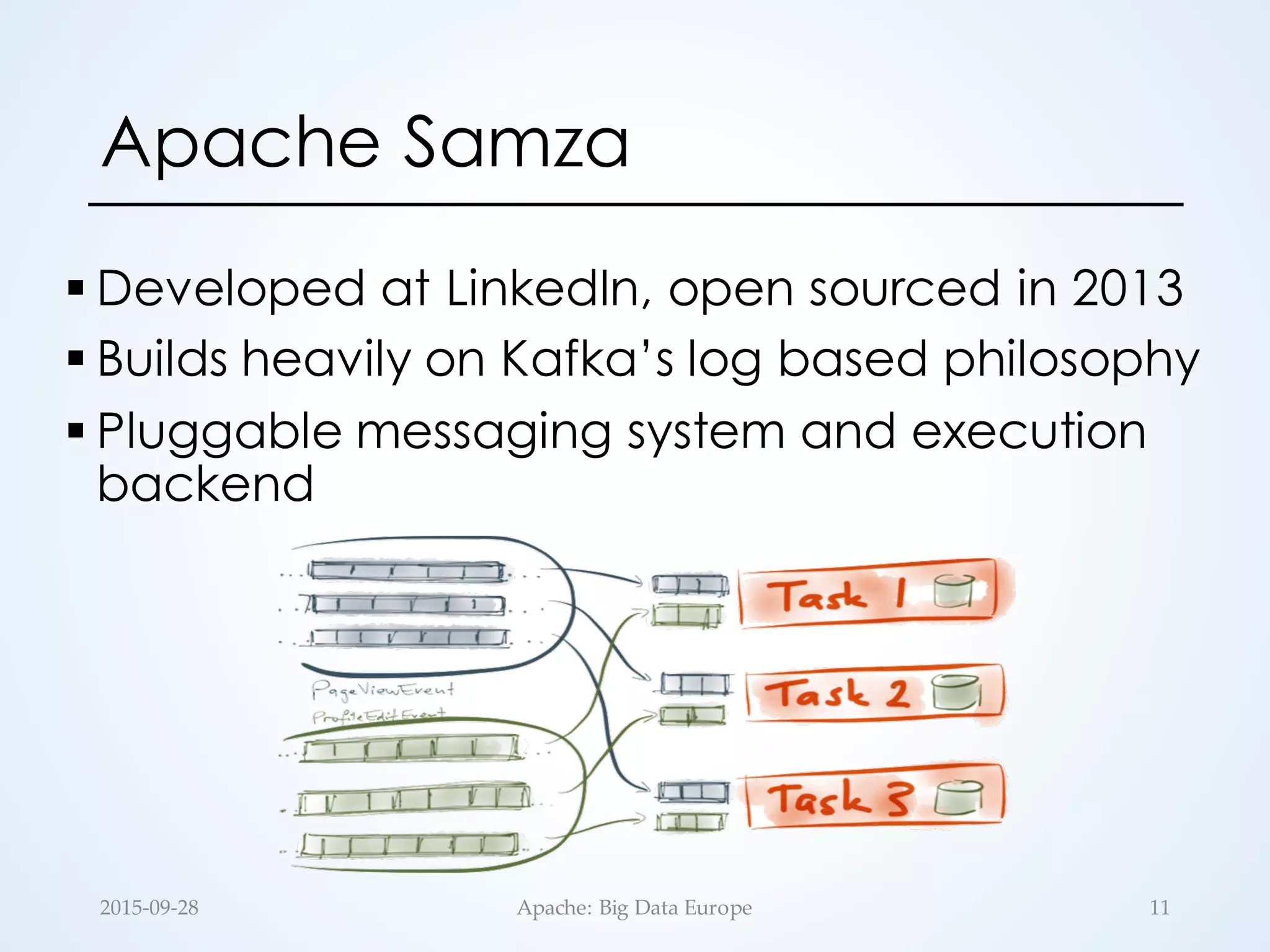

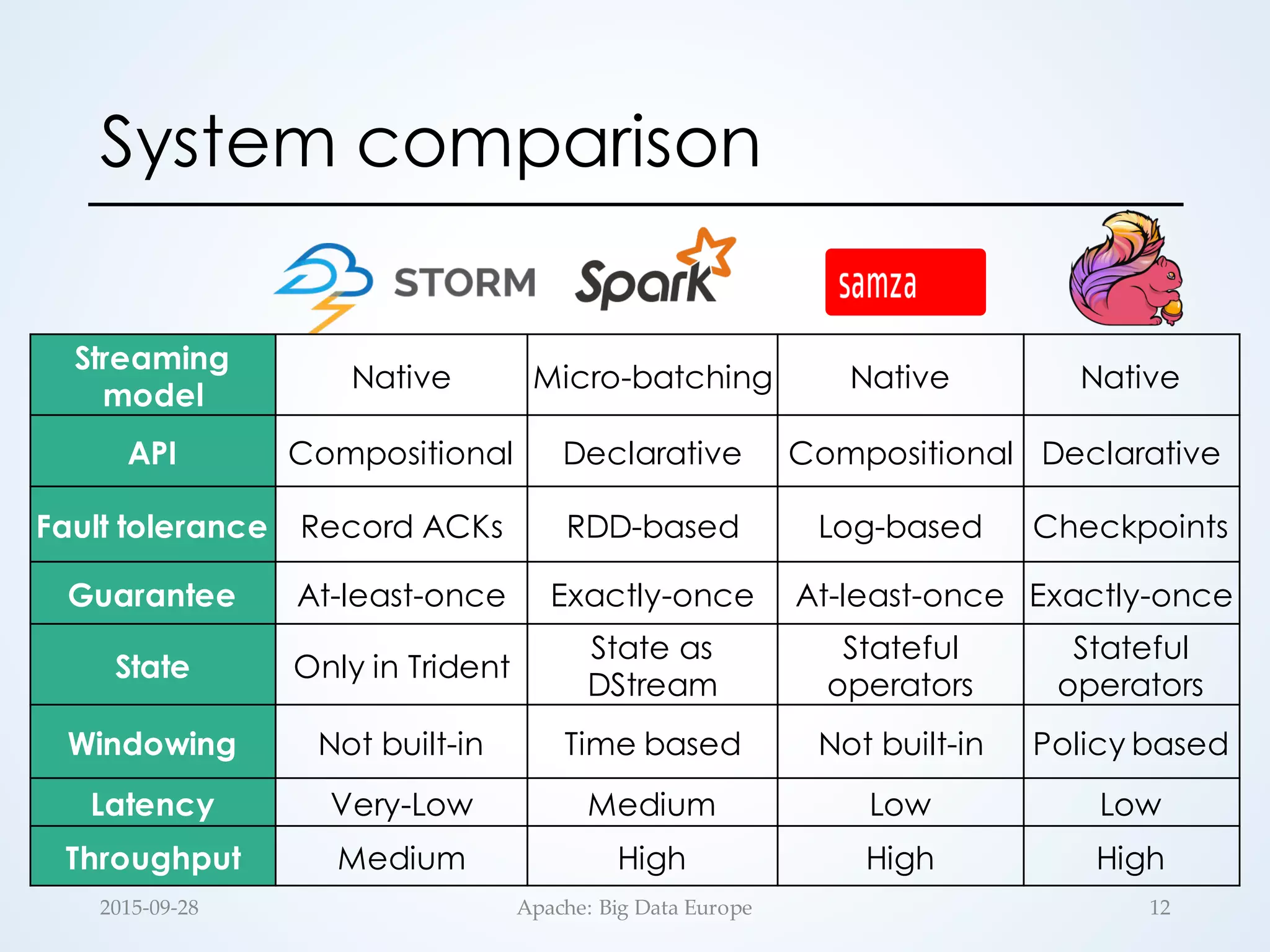

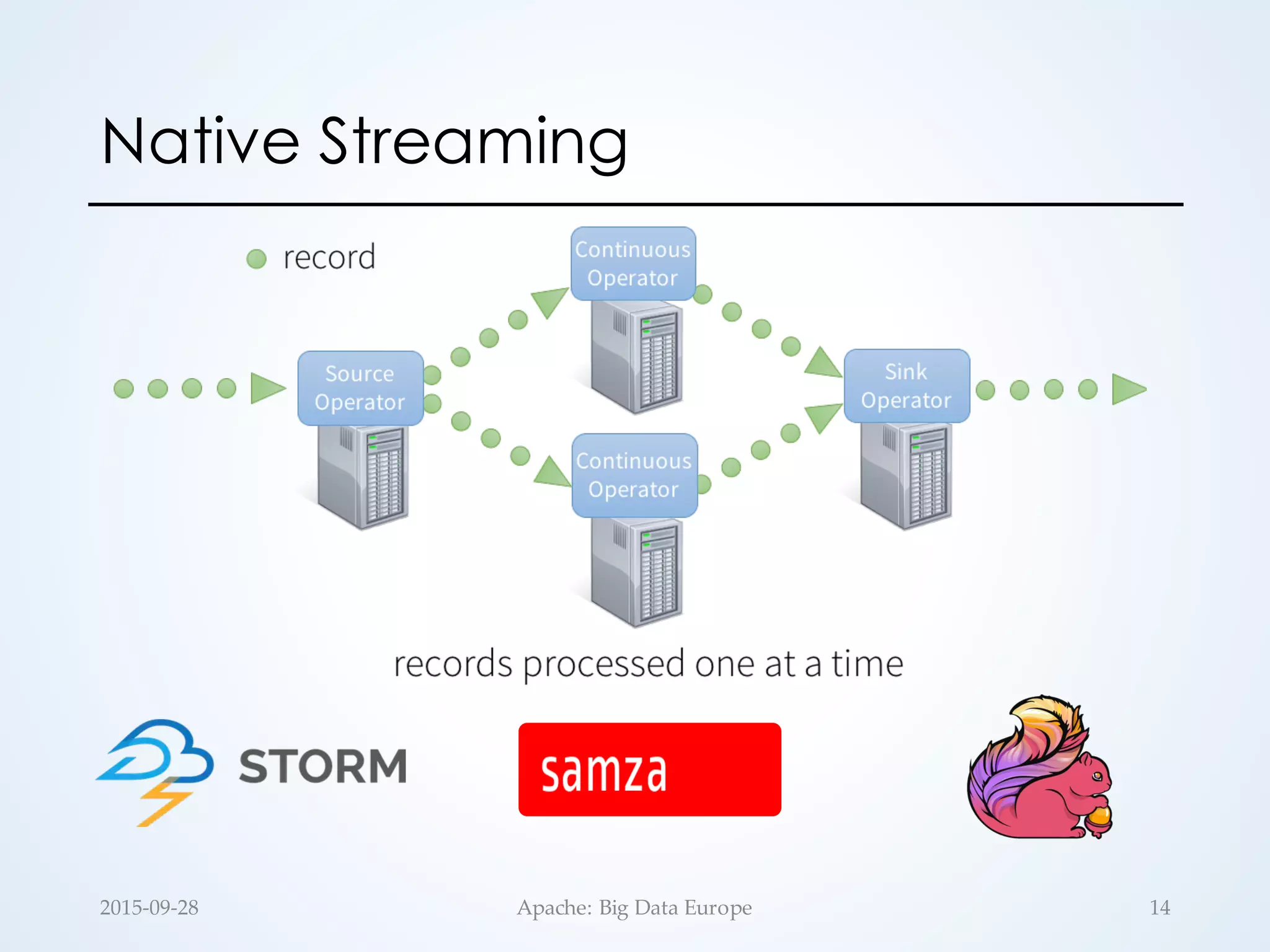

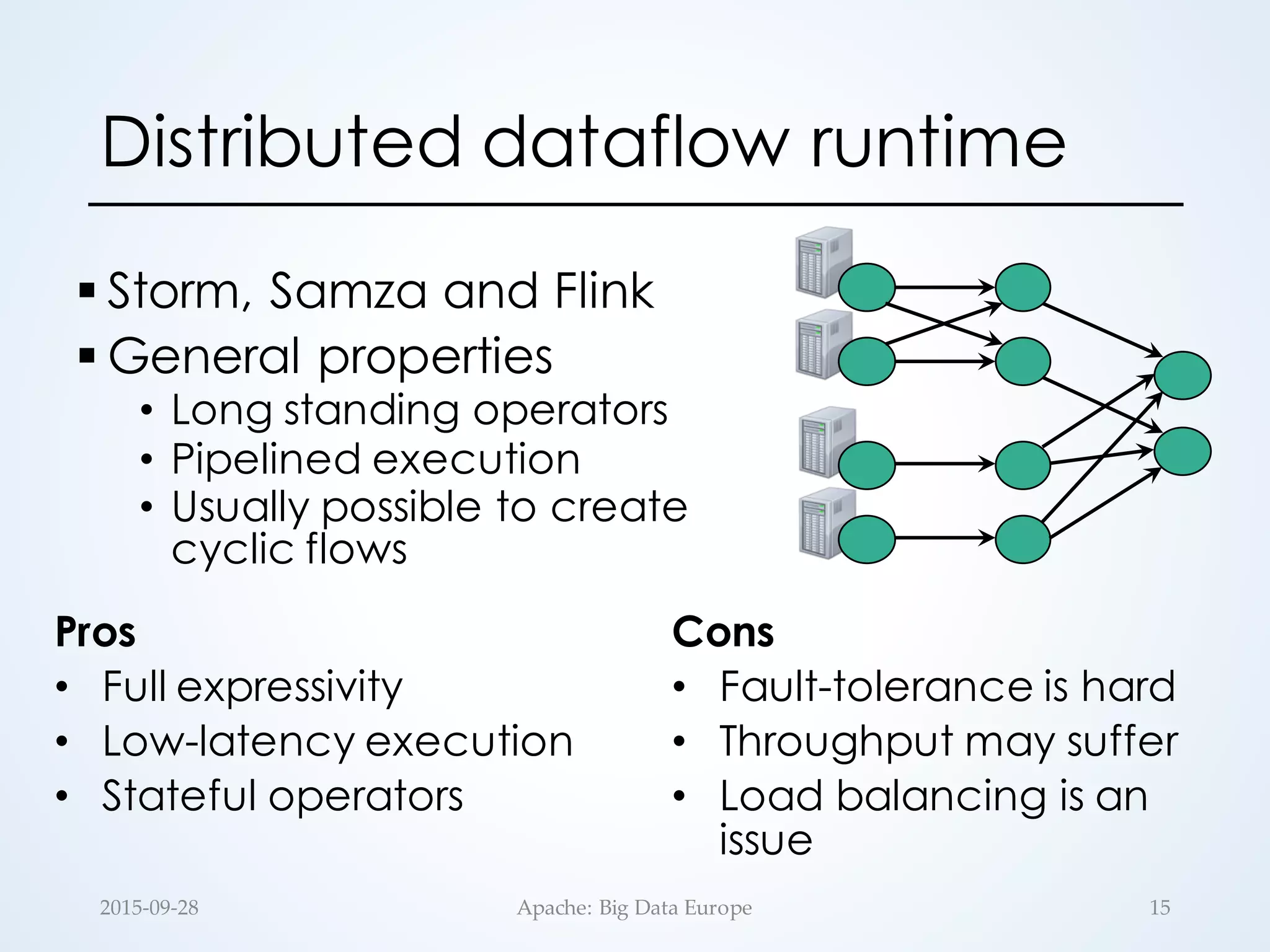

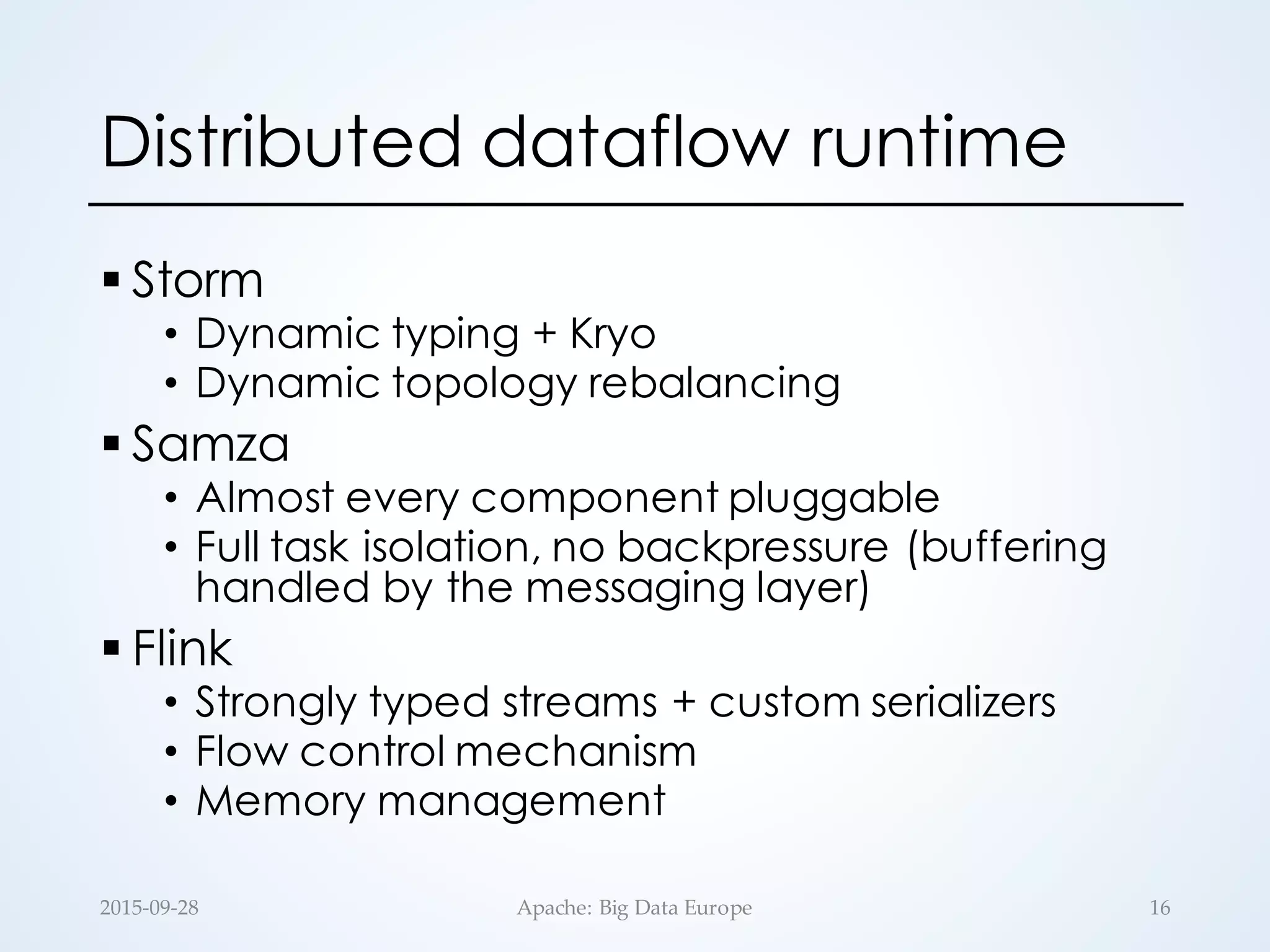

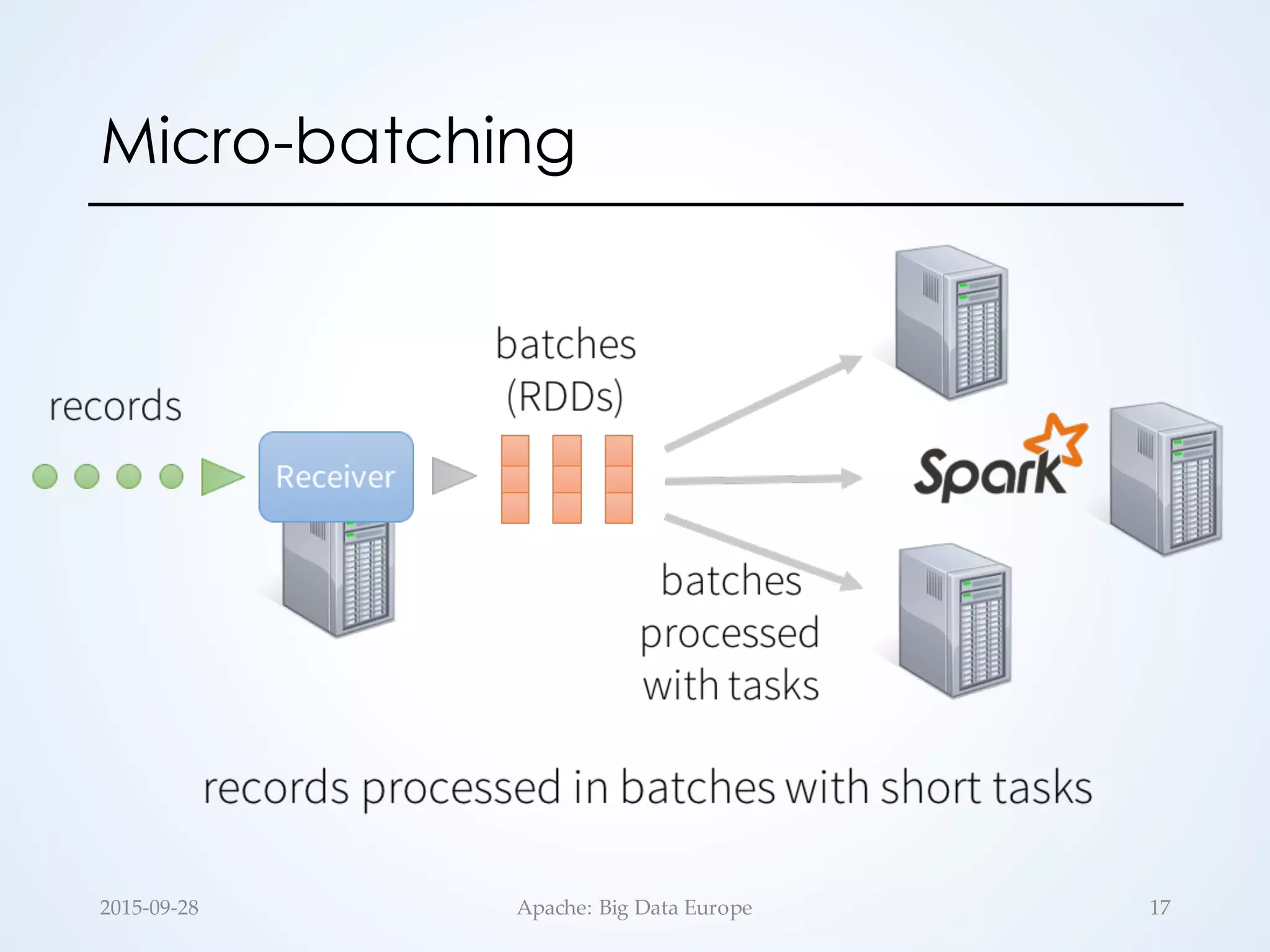

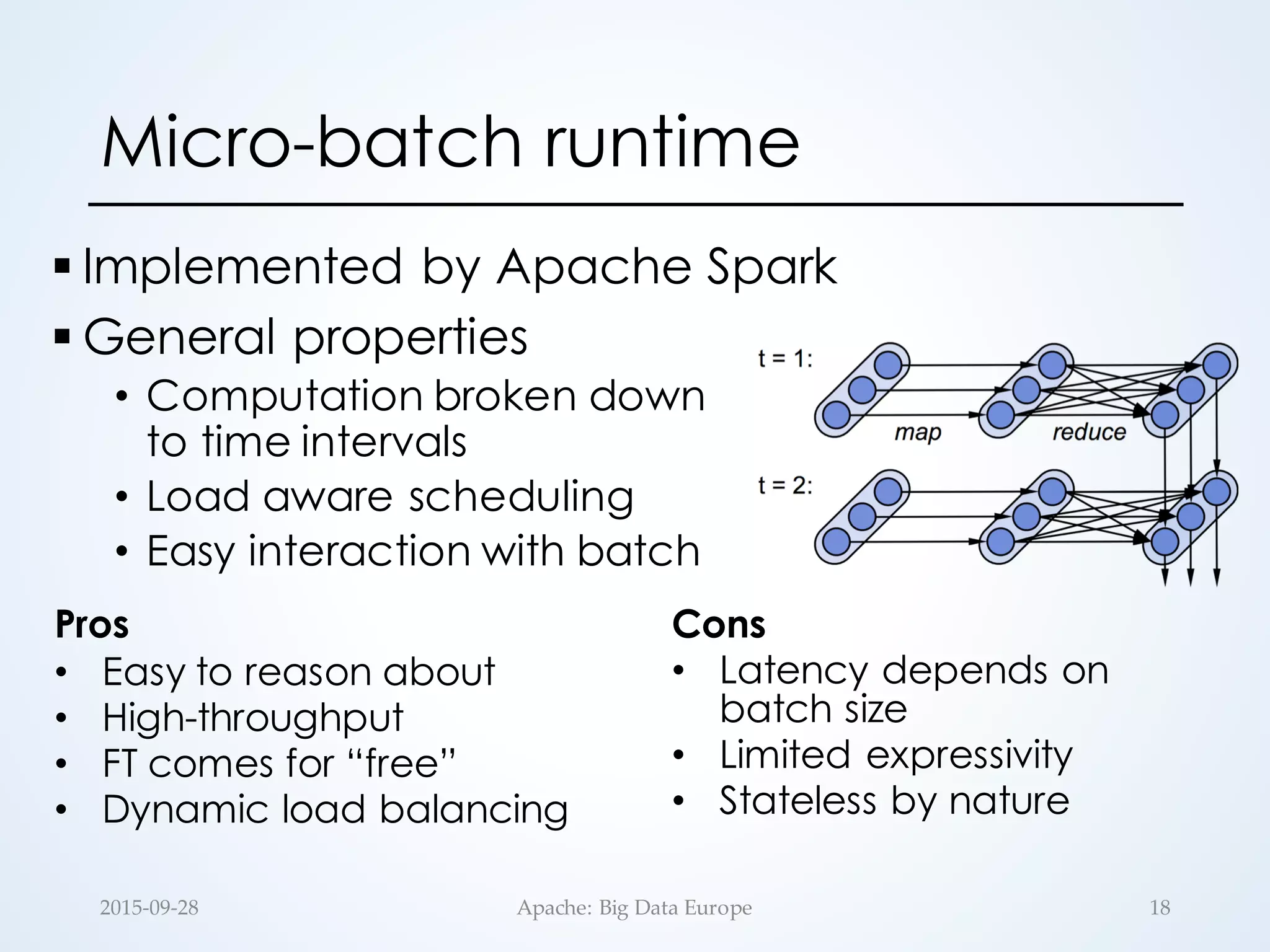

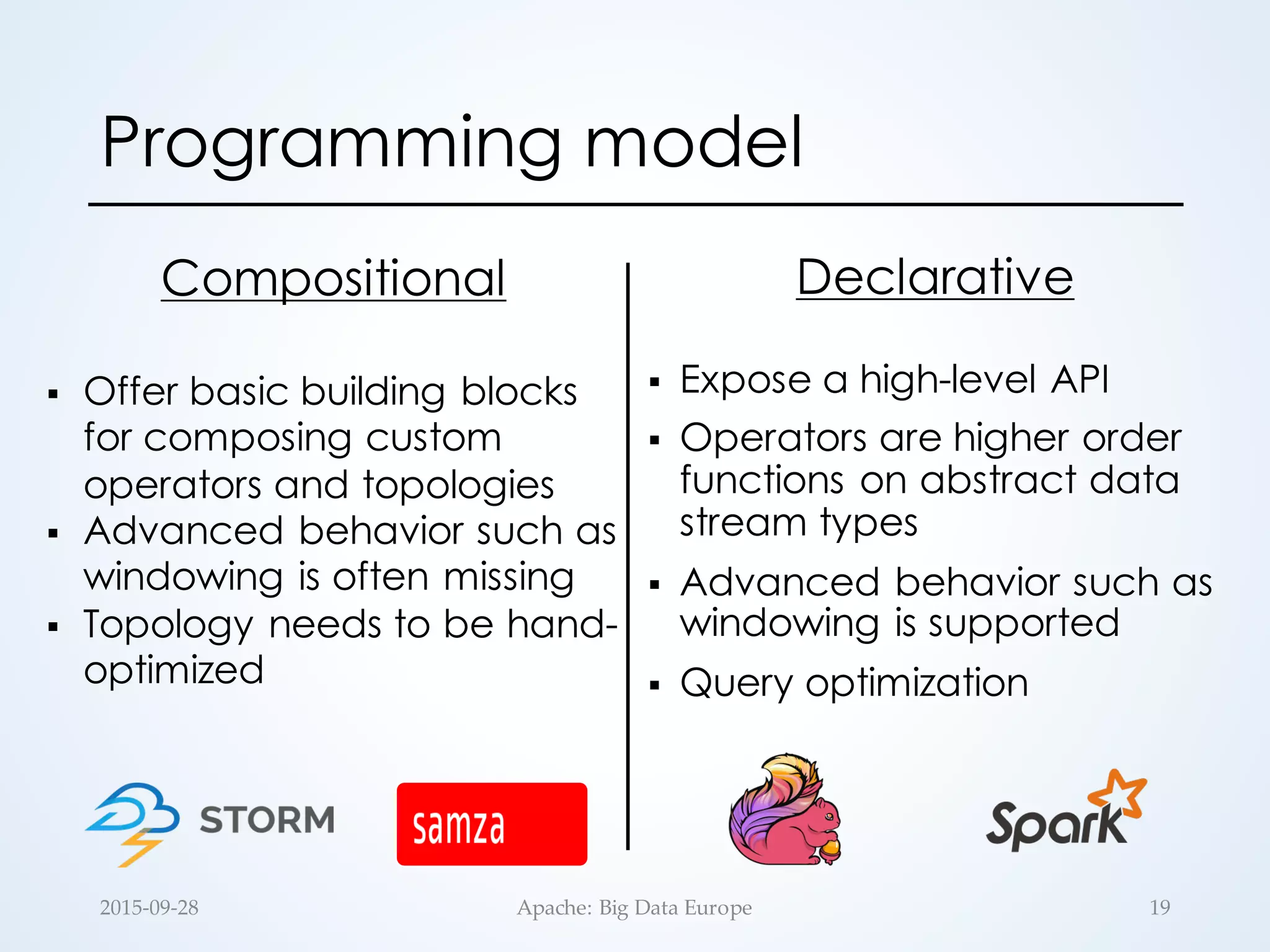

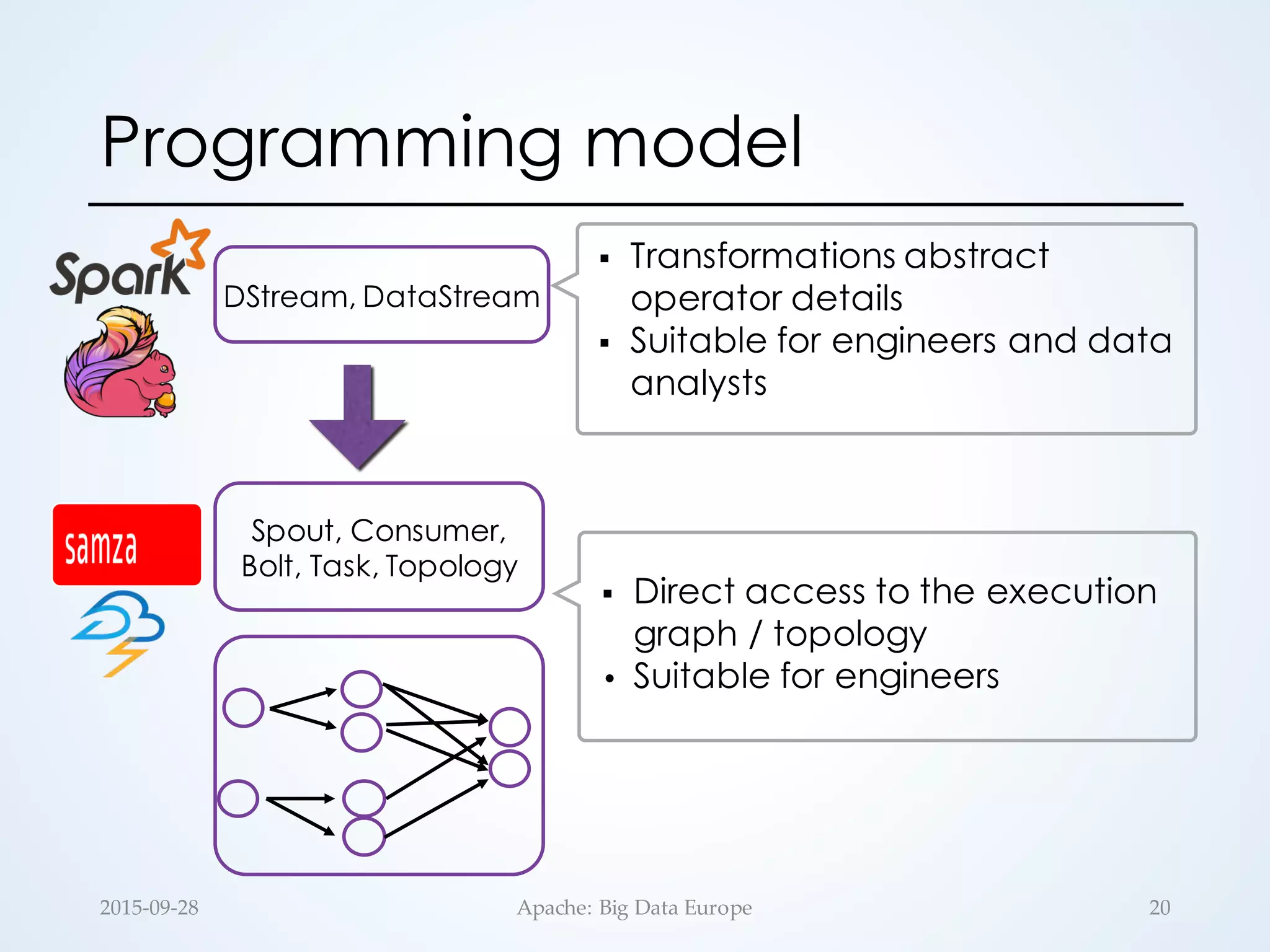

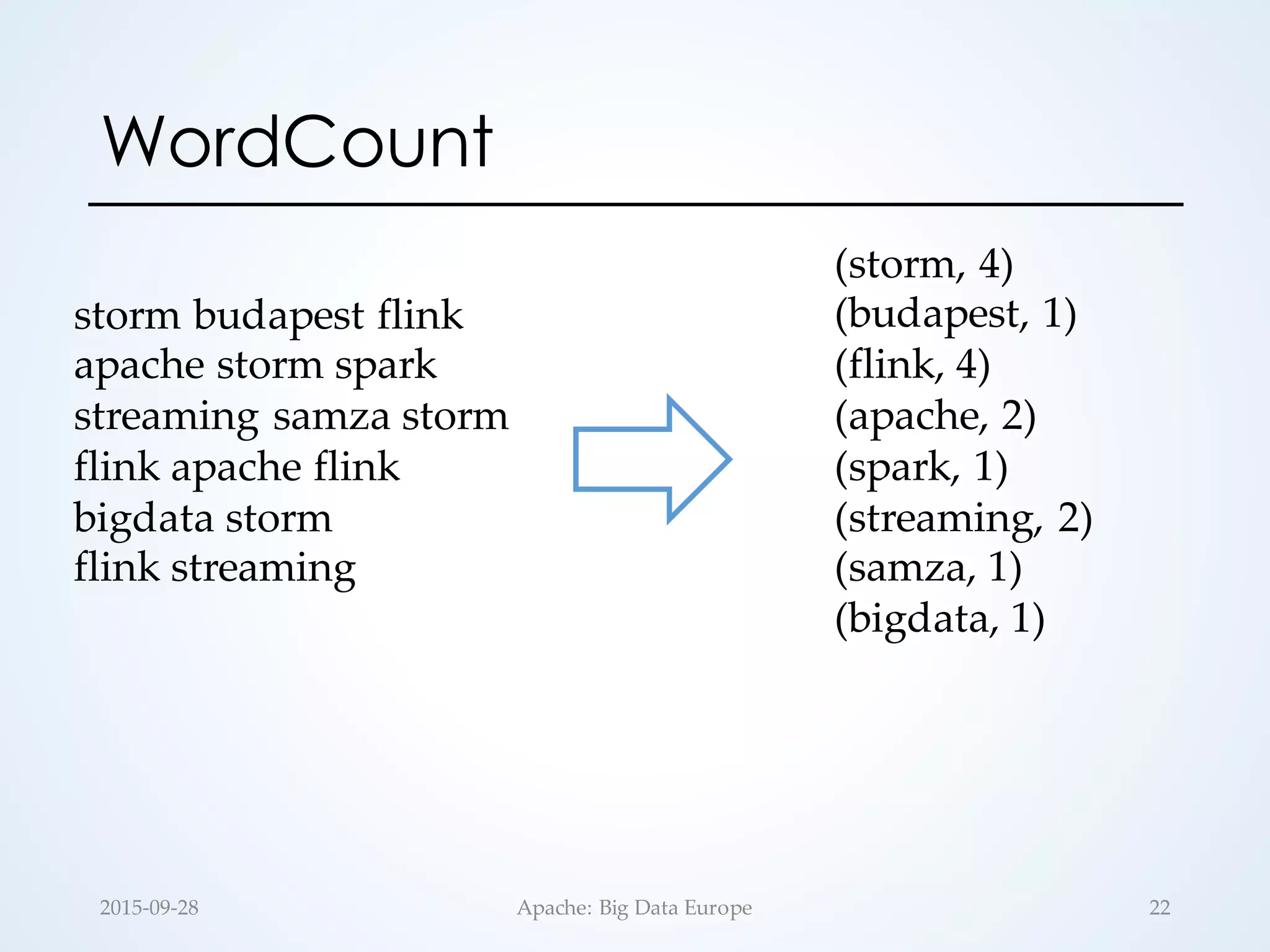

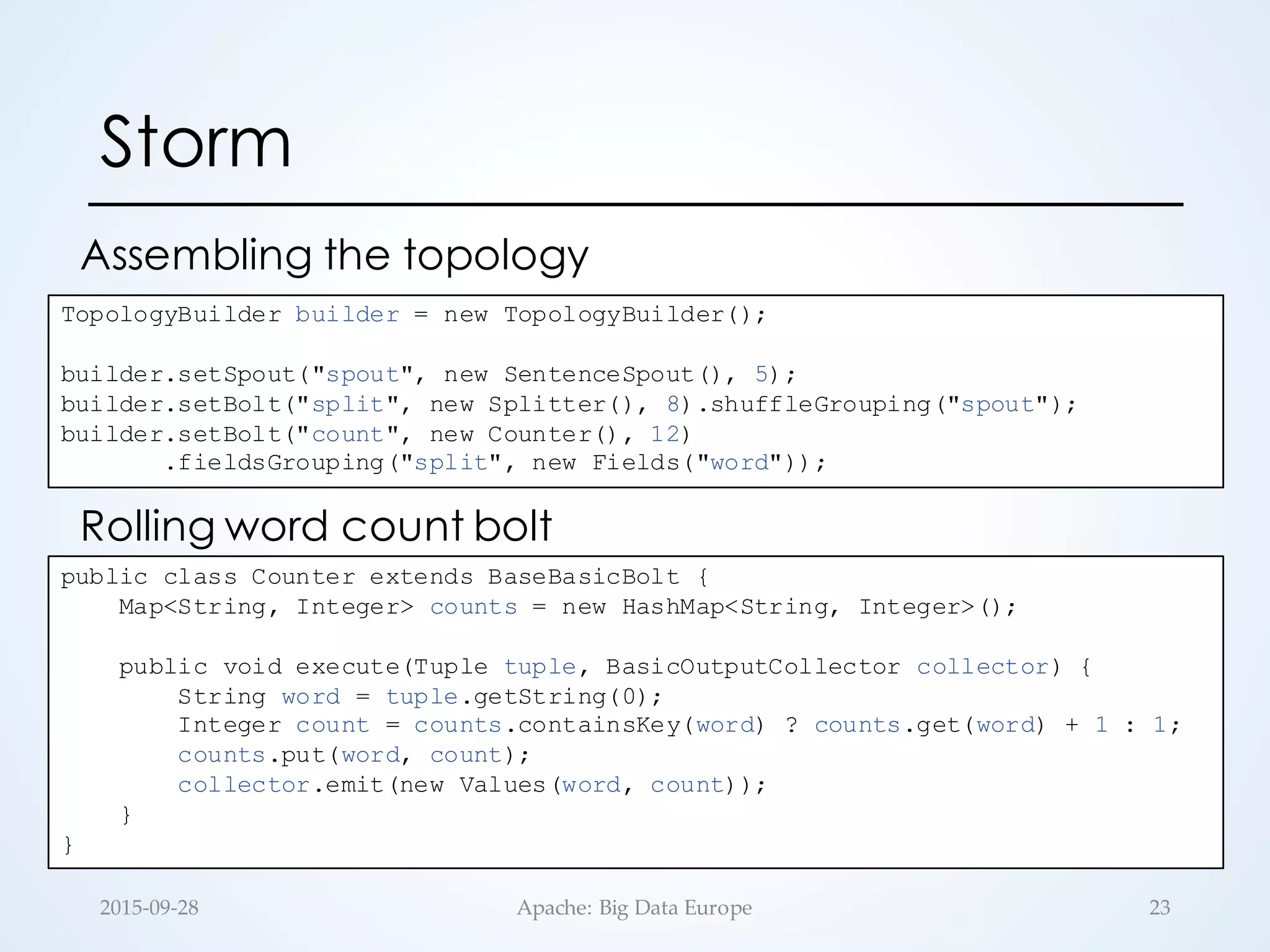

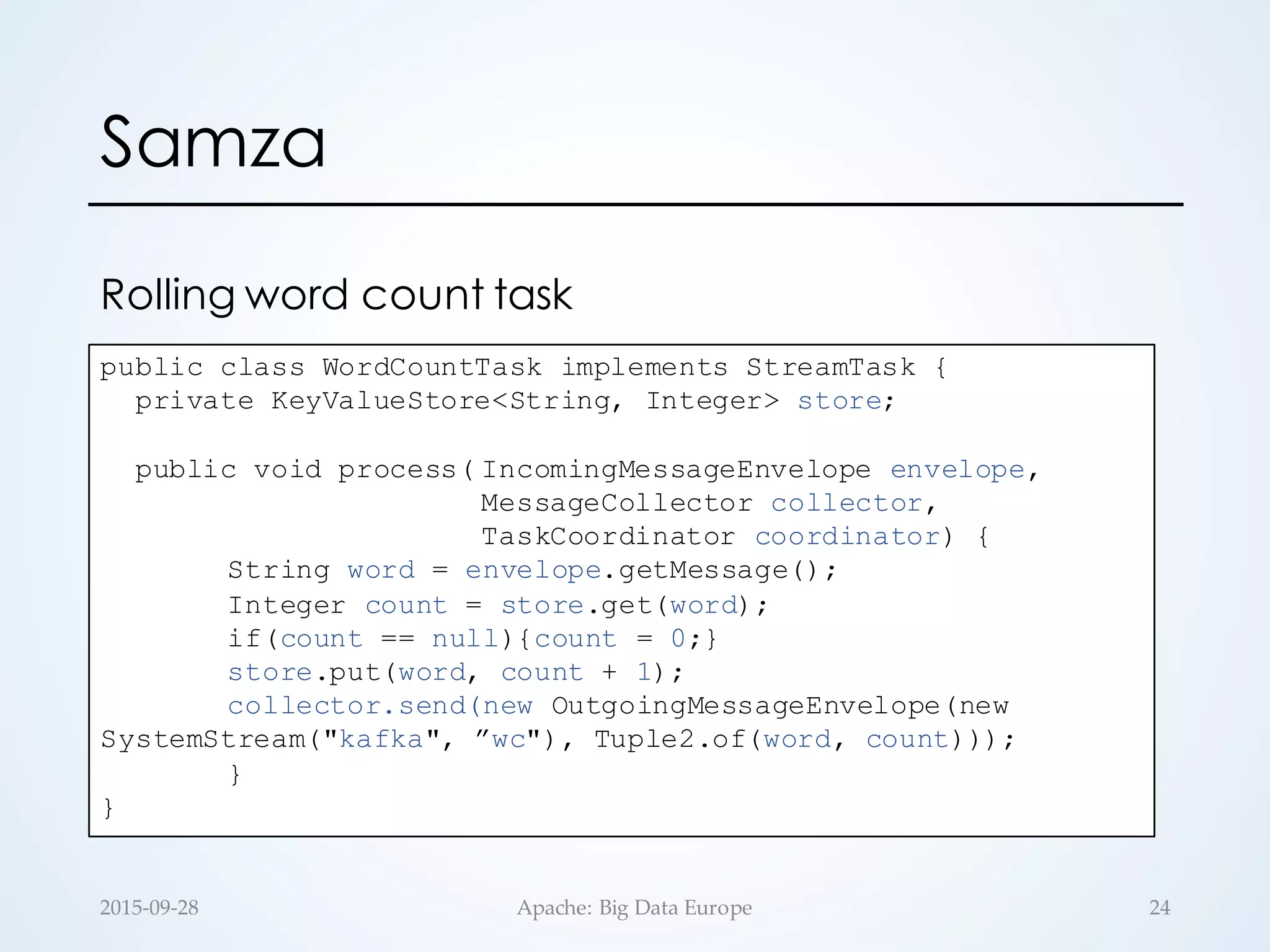

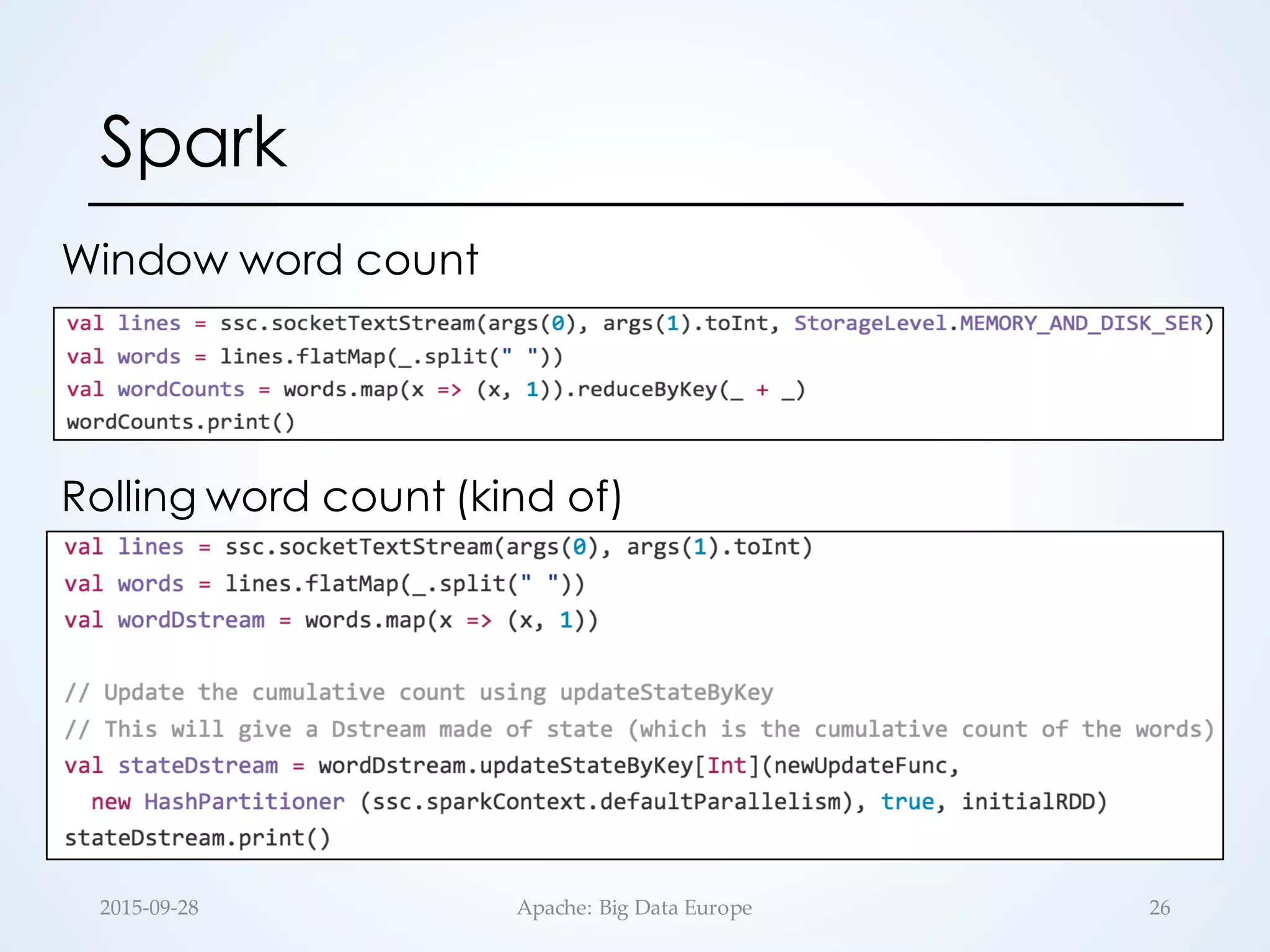

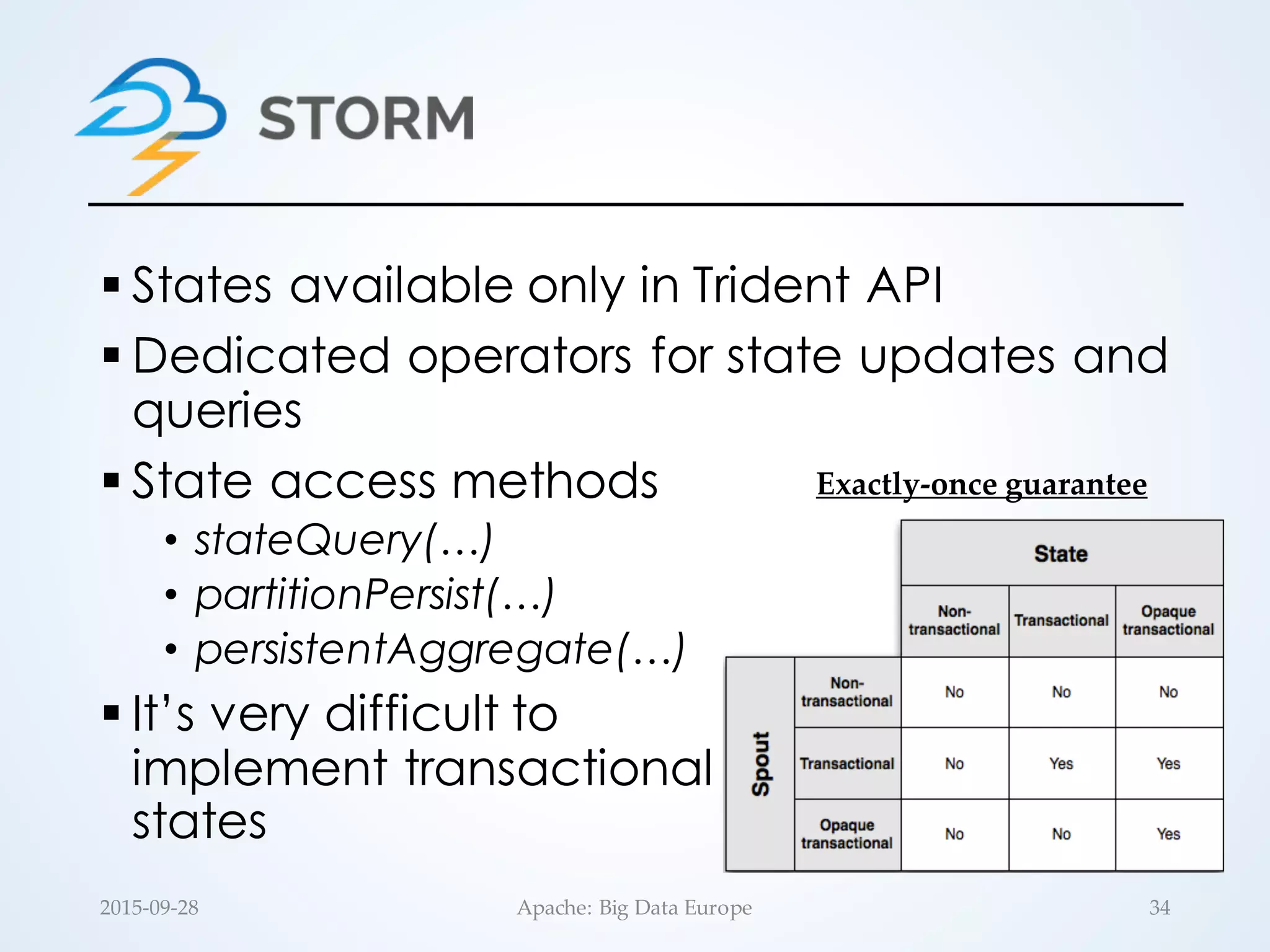

The document discusses large-scale stream processing within the Hadoop ecosystem, focusing on various open-source stream processors such as Apache Storm, Flink, Spark, and Samza. It highlights the differences in runtime architecture, programming models, fault tolerance, stateful processing, and applications of each system. Ultimately, it emphasizes the diversity of streaming applications and the need for choosing the right framework based on specific requirements.

![Flink: rolling and window word count](https://image.slidesharecdn.com/opensourcestreaming-150928093329-lva1-app6891/75/Large-Scale-Stream-Processing-in-the-Hadoop-Ecosystem-25-2048.jpg)

Flink, rolling word count:

    val lines: DataStream[String] = env.fromSocketStream(...)

    lines.flatMap { line => line.split(" ").map(word => Word(word, 1)) }
      .groupBy("word").sum("frequency")
      .print()

    case class Word(word: String, frequency: Int)

Flink, window word count:

    val lines: DataStream[String] = env.fromSocketStream(...)

    lines.flatMap { line => line.split(" ").map(word => Word(word, 1)) }
      .window(Time.of(5, SECONDS)).every(Time.of(1, SECONDS))
      .groupBy("word").sum("frequency")
      .print()

Apache: Big Data Europe, 2015-09-28
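The two Flink snippets differ only in the `window(...).every(...)` call, so it may help to see what each variant emits. The following is a minimal sketch in plain Scala collections, with no Flink dependency. The names `Event`, `rollingCounts`, and `windowCounts` are hypothetical, and the window alignment (windows ending on multiples of the slide, covering `[end - size, end)`) is an assumption made for illustration, not a statement of how Flink aligns or triggers its windows.

```scala
// Plain-Scala simulation of the two word-count variants; not Flink code.
object WordCountSemantics {
  // A word observed at a given time in seconds (hypothetical input shape).
  final case class Event(timeSec: Int, word: String)

  // Rolling count: after every event, emit the running total for that word.
  def rollingCounts(events: Seq[Event]): Seq[(String, Int)] = {
    val totals = scala.collection.mutable.Map.empty[String, Int].withDefaultValue(0)
    events.map { e =>
      totals(e.word) += 1
      (e.word, totals(e.word))
    }
  }

  // Sliding window count: at every window end (a multiple of slideSec), count
  // the words whose timestamps fall in [end - sizeSec, end).
  def windowCounts(events: Seq[Event], sizeSec: Int, slideSec: Int): Seq[(Int, Map[String, Int])] = {
    require(sizeSec > 0 && slideSec > 0, "window size and slide must be positive")
    if (events.isEmpty) Seq.empty
    else {
      val lastTime = events.map(_.timeSec).max
      (slideSec to (lastTime + slideSec) by slideSec).map { end =>
        val inWindow = events.filter(e => e.timeSec >= end - sizeSec && e.timeSec < end)
        val counts = inWindow.groupBy(_.word).map { case (w, es) => (w, es.size) }
        (end, counts)
      }
    }
  }
}
```

With a 5-second size and a 1-second slide, each event contributes to five consecutive windows. The rolling variant never forgets an event, which is why its per-word totals only grow.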

![Stateless runtime by design; state via updateStateByKey](https://image.slidesharecdn.com/opensourcestreaming-150928093329-lva1-app6891/75/Large-Scale-Stream-Processing-in-the-Hadoop-Ecosystem-35-2048.jpg)

- Stateless runtime by design
  - No continuous operators
  - UDFs are assumed to be stateless
- State can be generated as a separate stream of RDDs: `updateStateByKey(…)`

  $$f\colon\ \mathrm{Seq}[\mathit{in}_k],\ \mathit{state}_k \longrightarrow \mathit{state}'_k$$

- $f$ is scoped to a specific key
- Exactly-once semantics
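The per-key update function above can be sketched in plain Scala. Spark Streaming's `updateStateByKey` takes a function of the shape `(Seq[V], Option[S]) => Option[S]`, and `updateCount` below follows that shape. Everything else is an assumption for illustration: `applyBatch` is a hypothetical helper that mimics one micro-batch on an in-memory `Map`, and it is not part of Spark.

```scala
// Plain-Scala simulation of per-key state updates across micro-batches; not Spark code.
object UpdateStateSketch {
  // Update function f: the new values for one key plus its old state give the new state.
  // Returning None would drop the key from the state.
  def updateCount(newValues: Seq[Int], state: Option[Int]): Option[Int] =
    Some(state.getOrElse(0) + newValues.sum)

  // Hypothetical helper: apply f once per key for a single micro-batch.
  // Keys with existing state are visited even when the batch has no values for them.
  def applyBatch[K, V, S](state: Map[K, S], batch: Seq[(K, V)])(
      f: (Seq[V], Option[S]) => Option[S]): Map[K, S] = {
    val grouped: Map[K, Seq[V]] =
      batch.groupBy(_._1).map { case (k, kvs) => (k, kvs.map(_._2)) }
    val keys = state.keySet ++ grouped.keySet
    keys.flatMap { k =>
      f(grouped.getOrElse(k, Seq.empty), state.get(k)).map(s => k -> s)
    }.toMap
  }
}
```

Because `f` only ever sees the values and state of a single key, the state can be partitioned by key. The state after each batch is a new immutable value derived from the previous one, loosely mirroring the slide's point that state is produced as a separate stream rather than mutated inside an operator.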