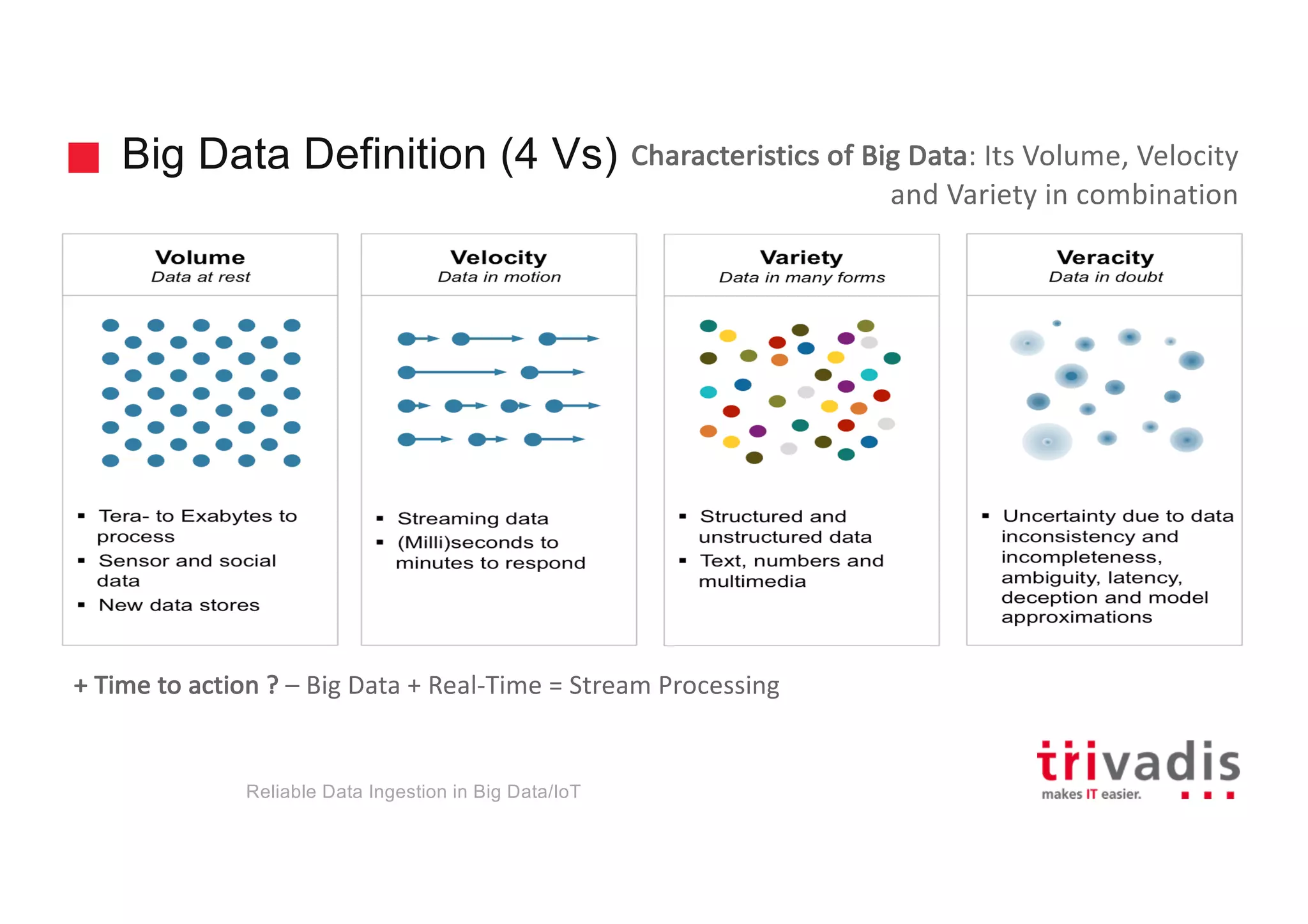

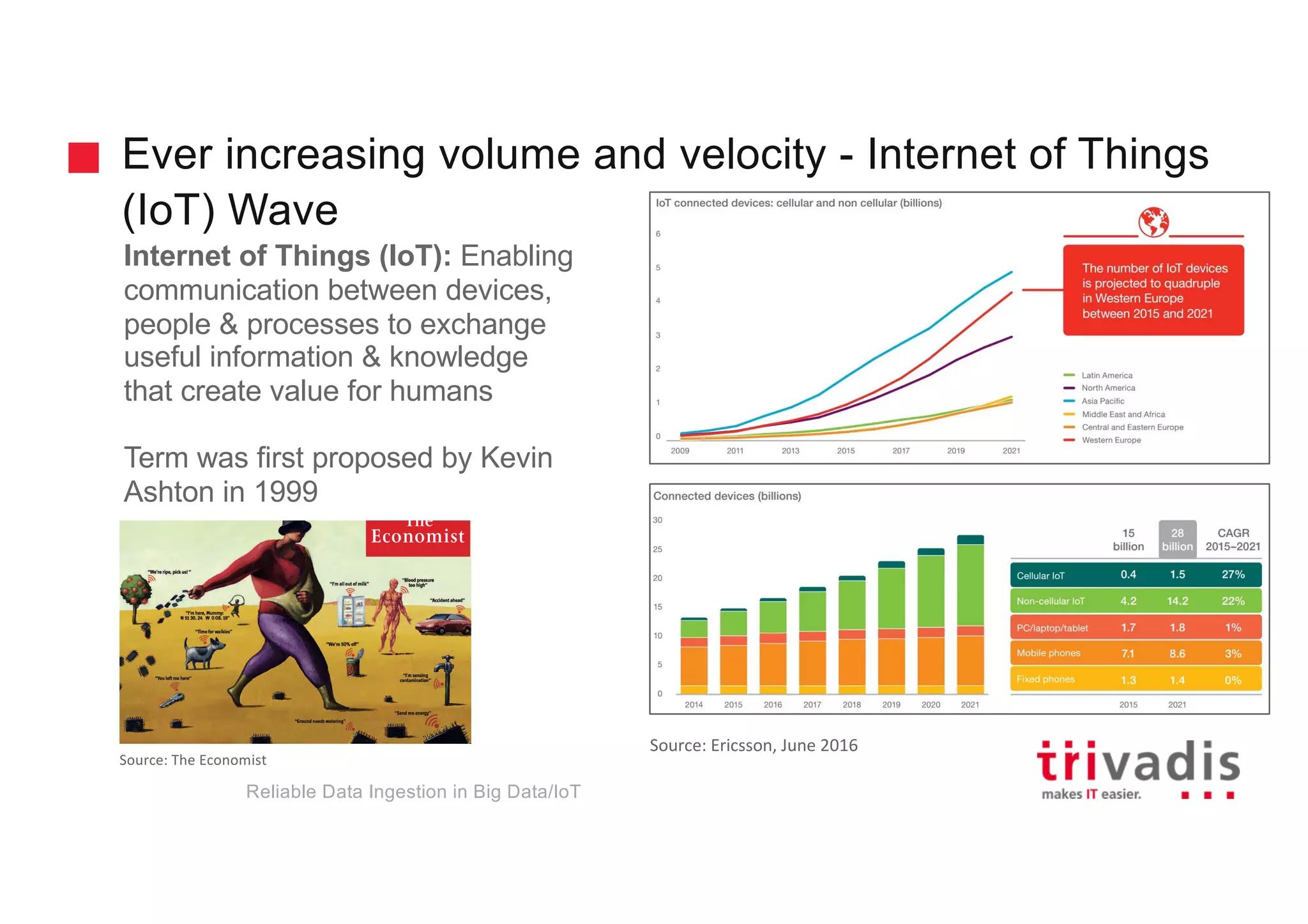

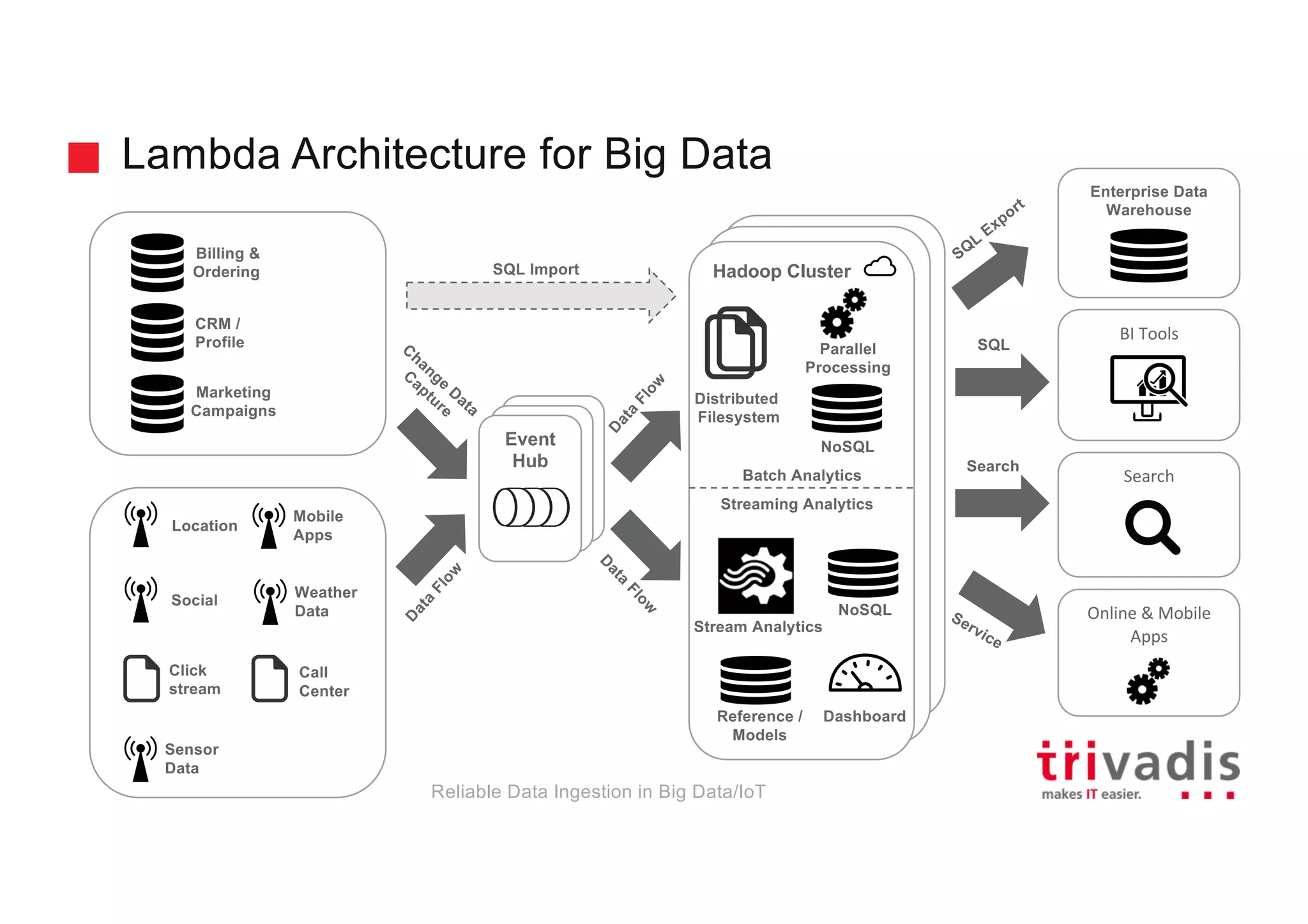

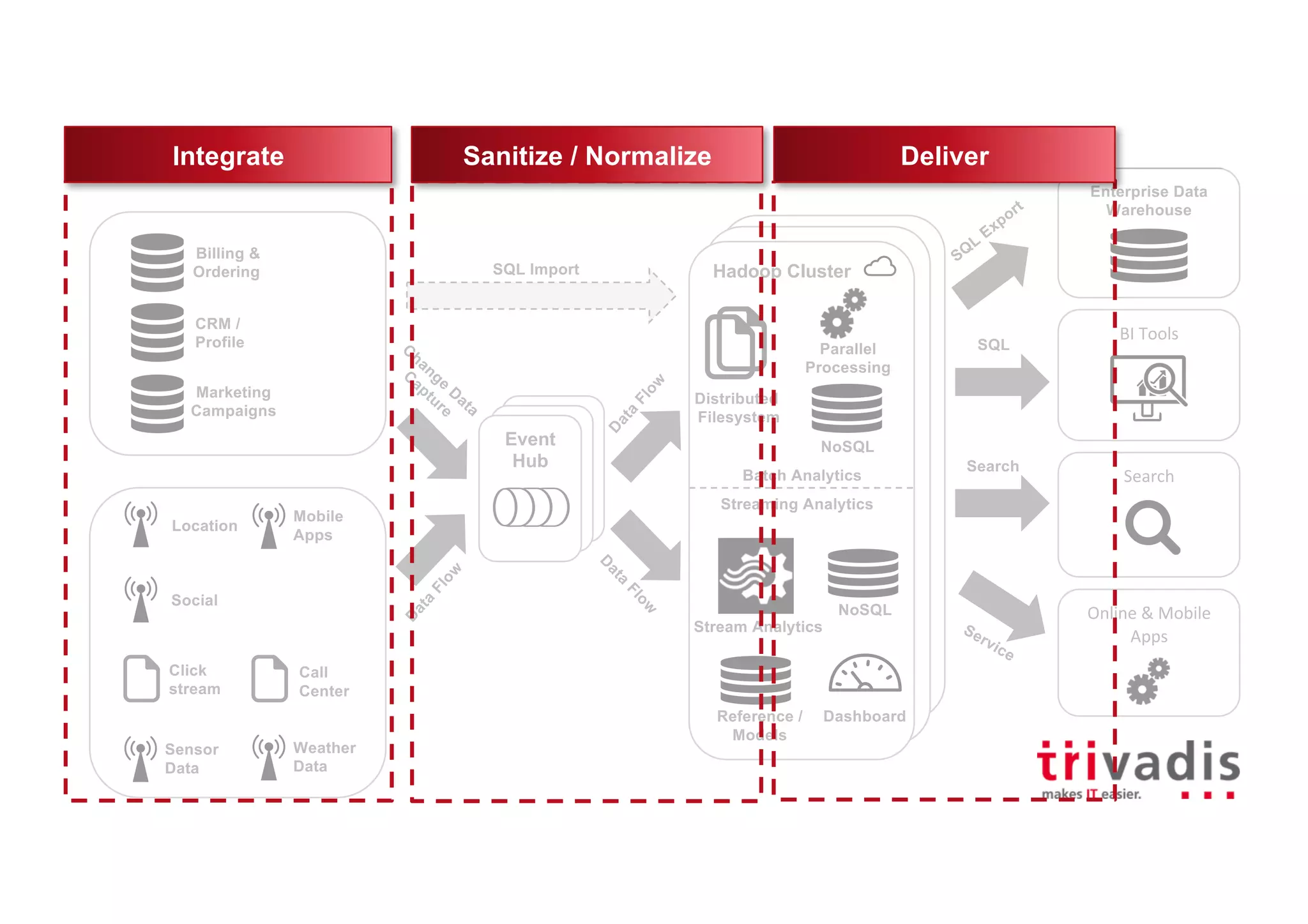

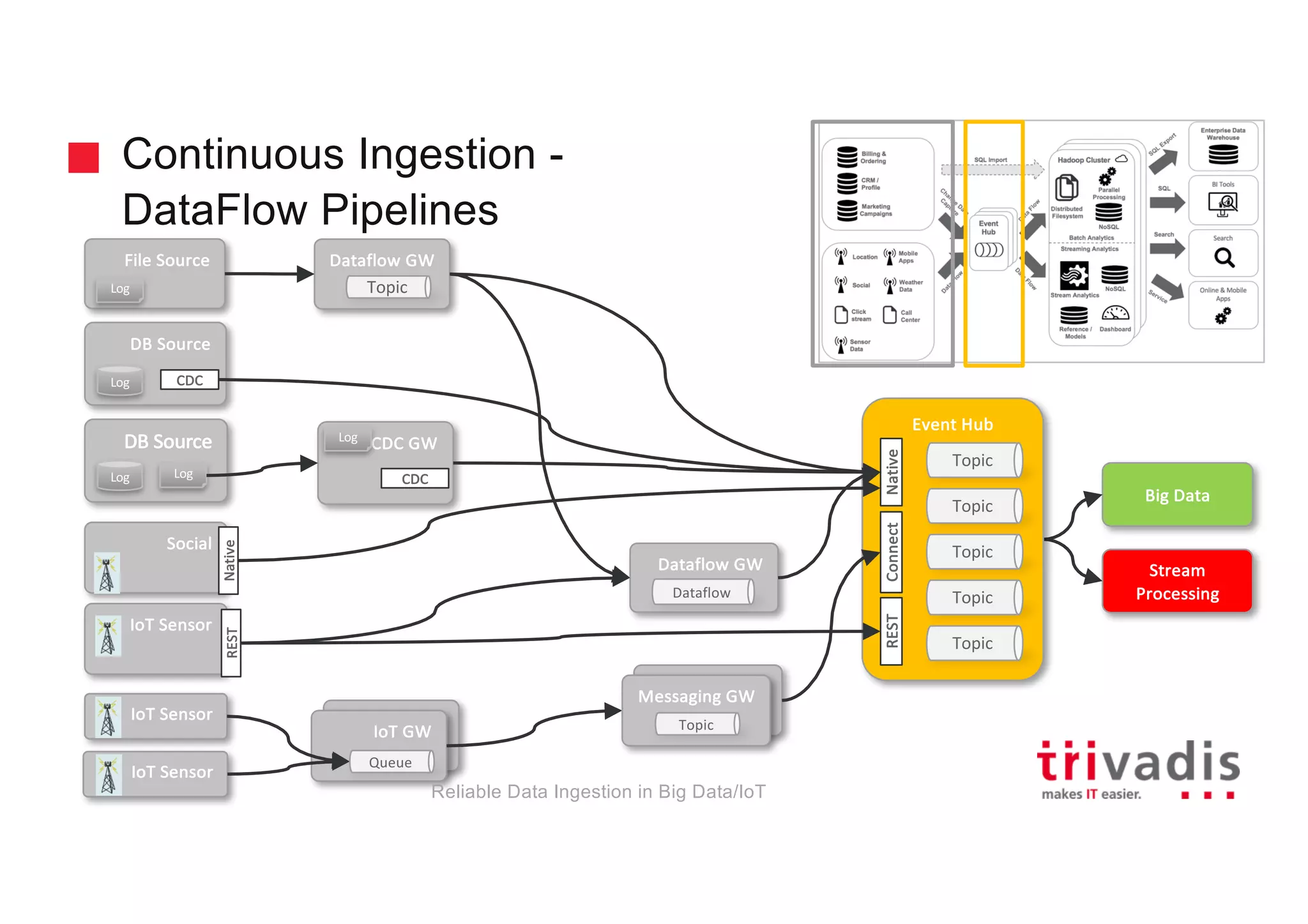

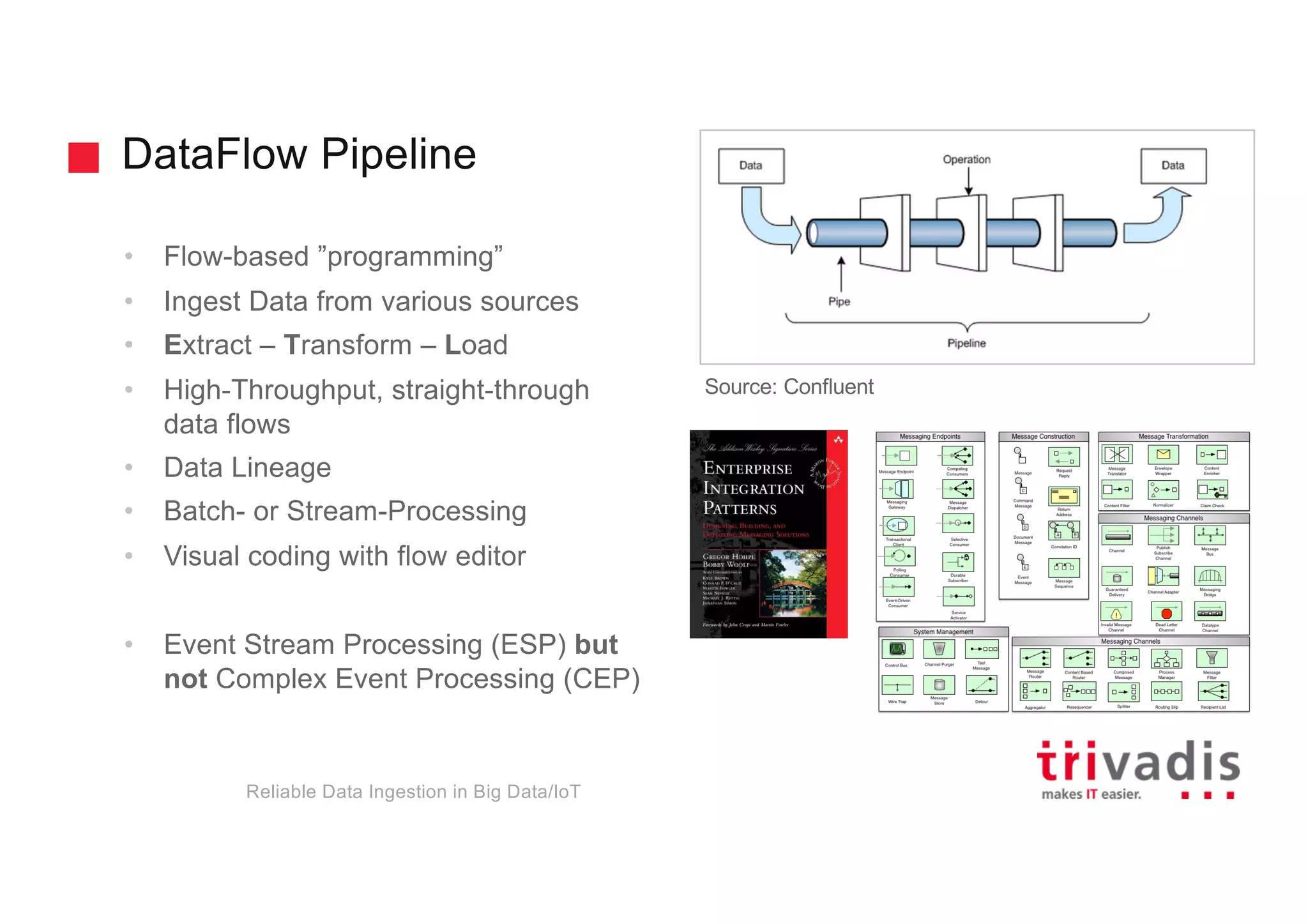

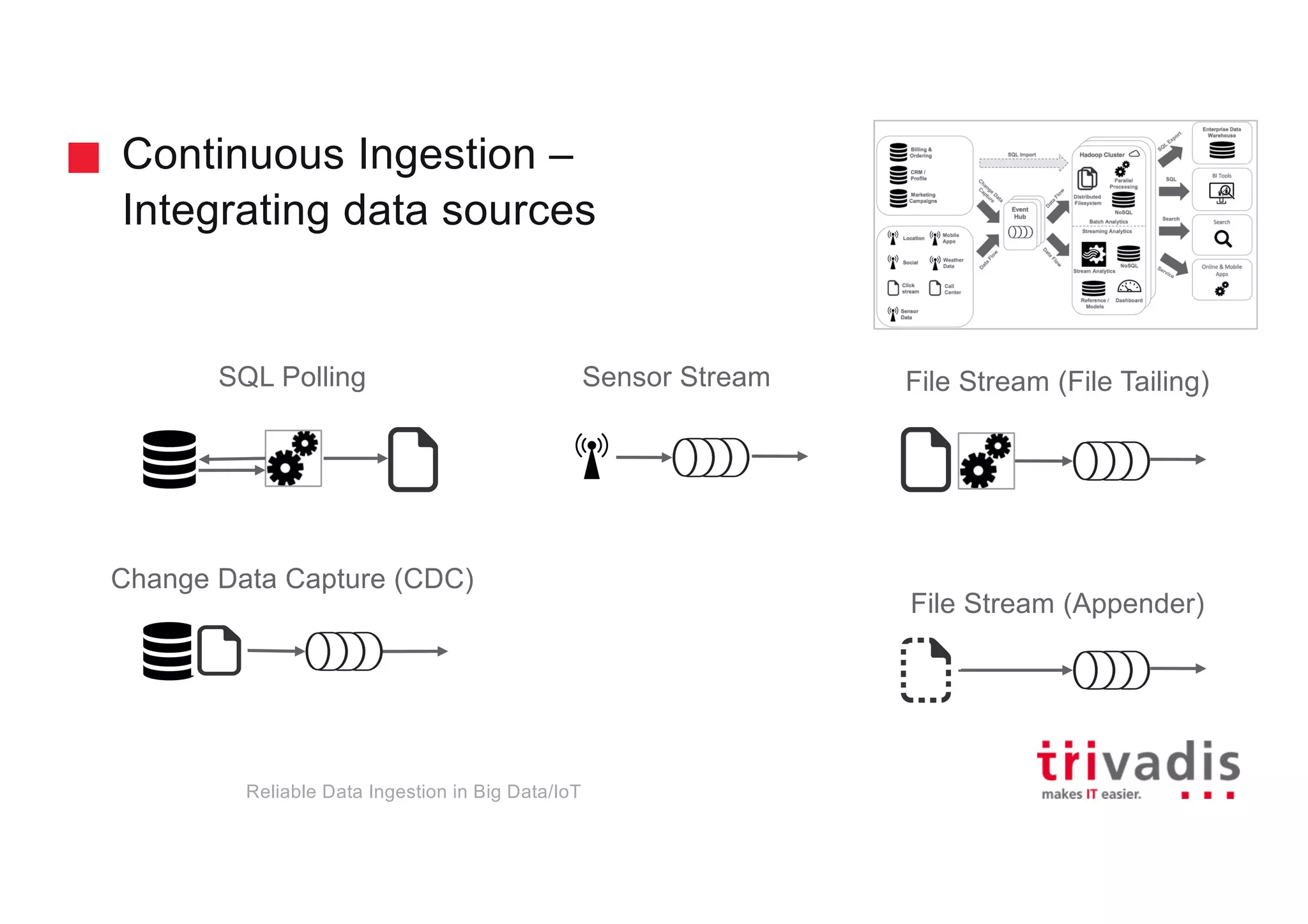

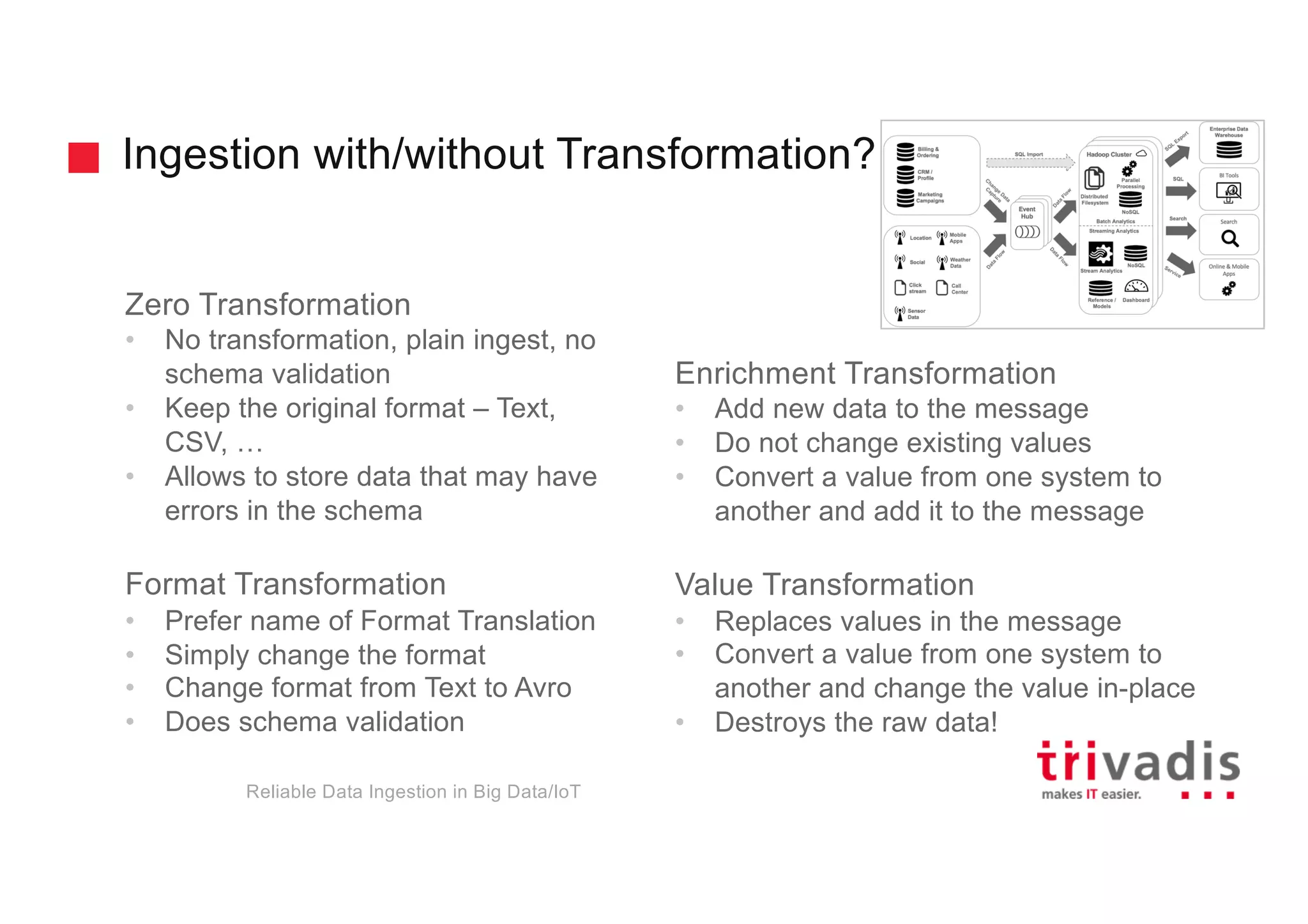

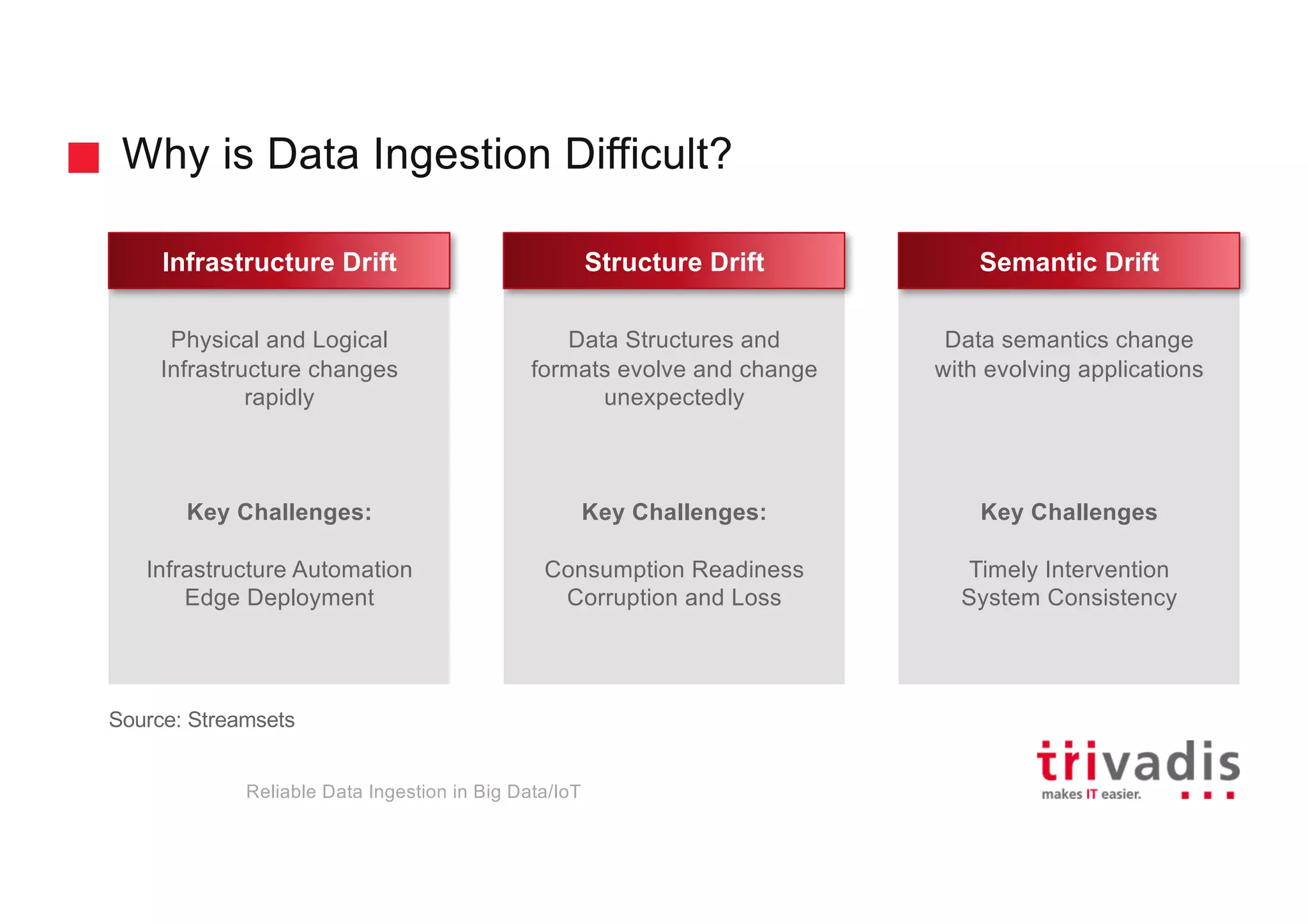

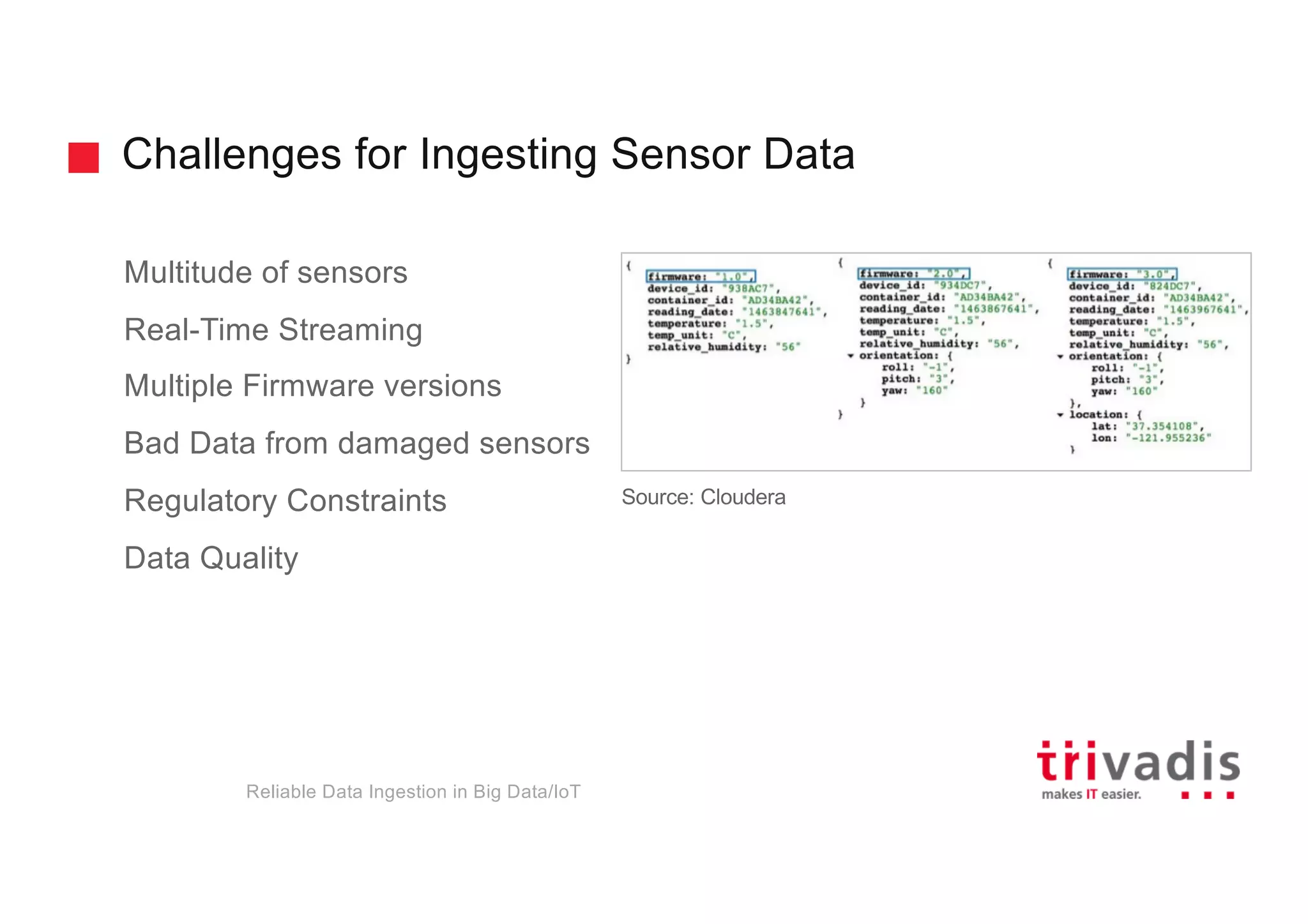

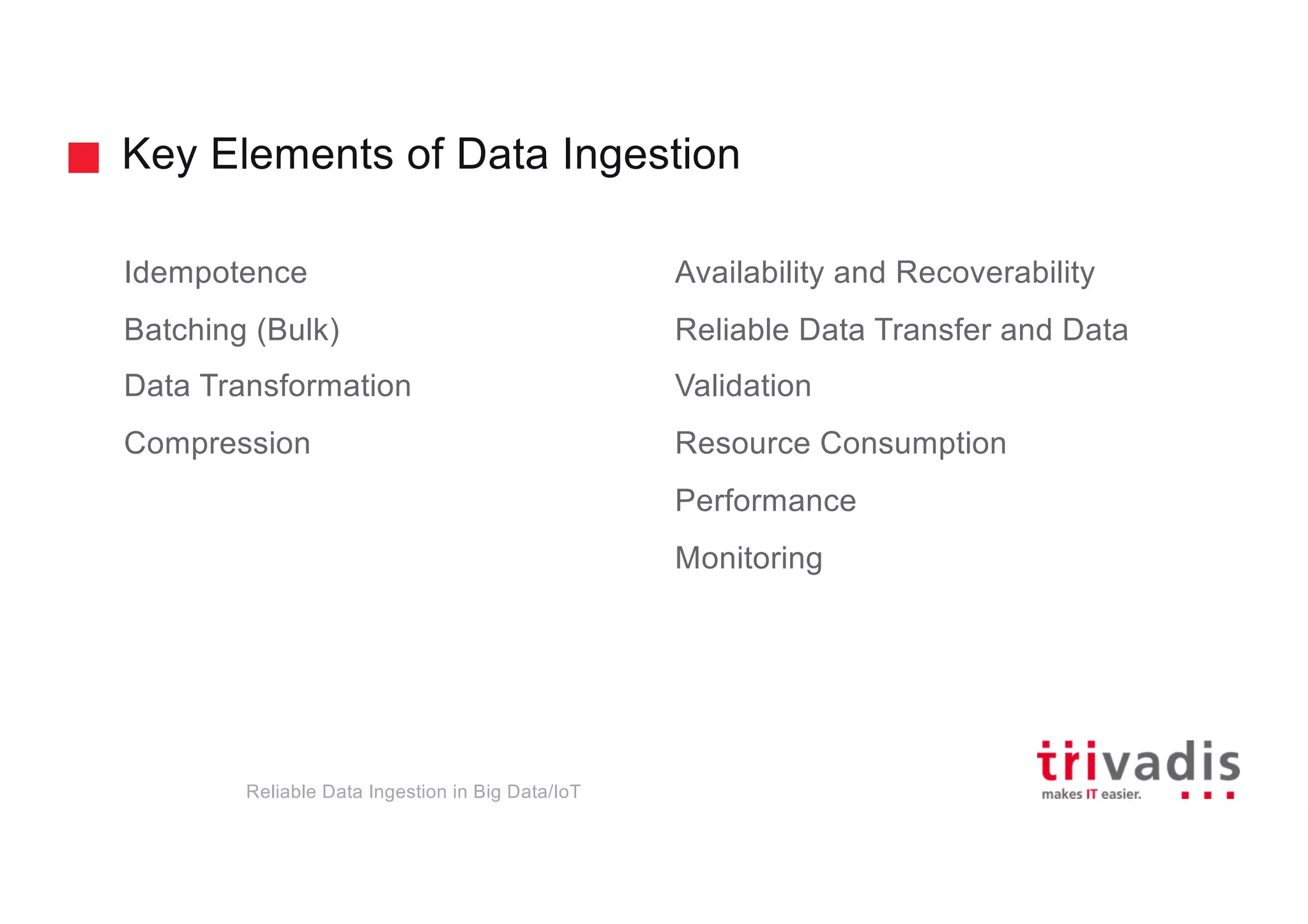

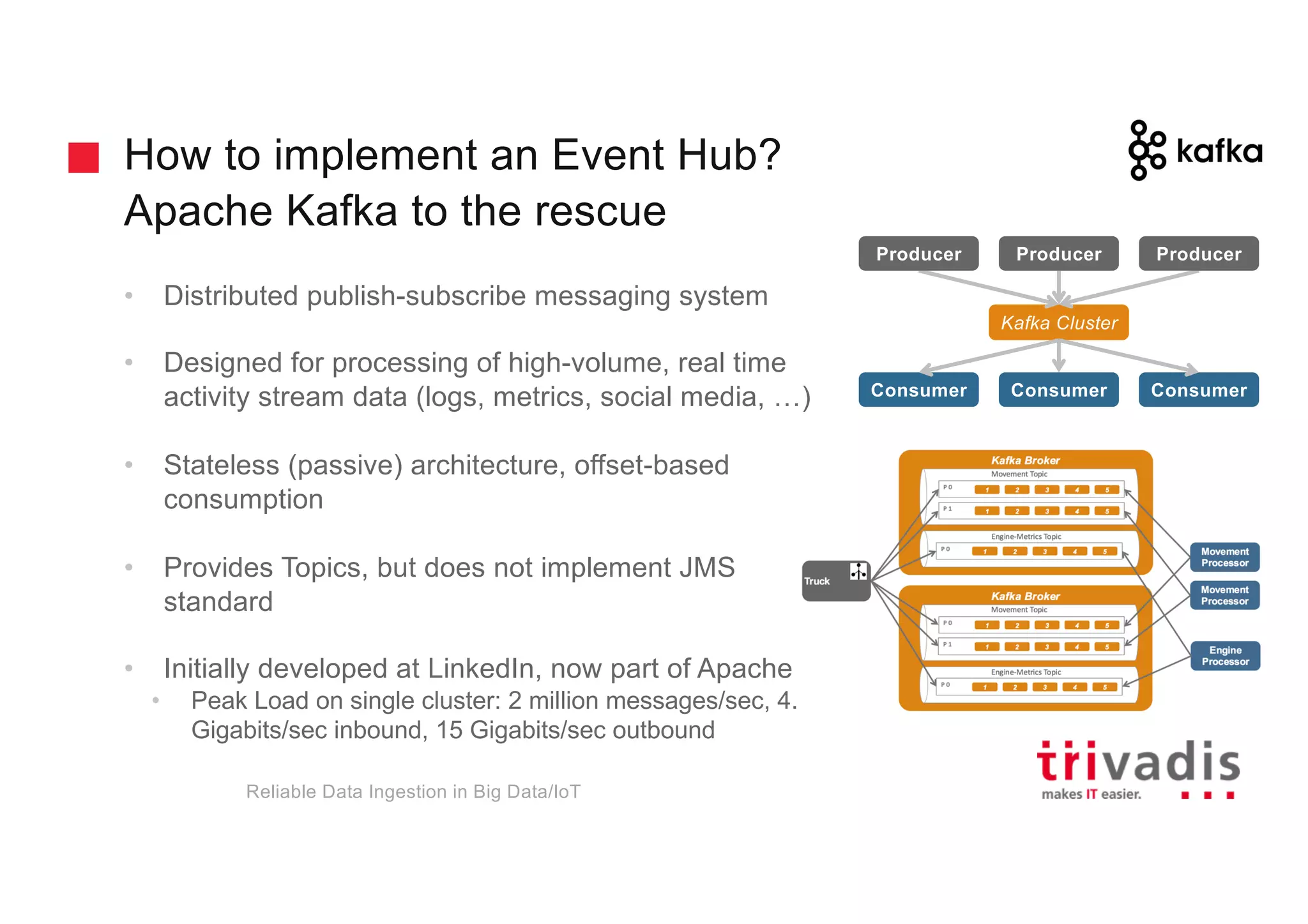

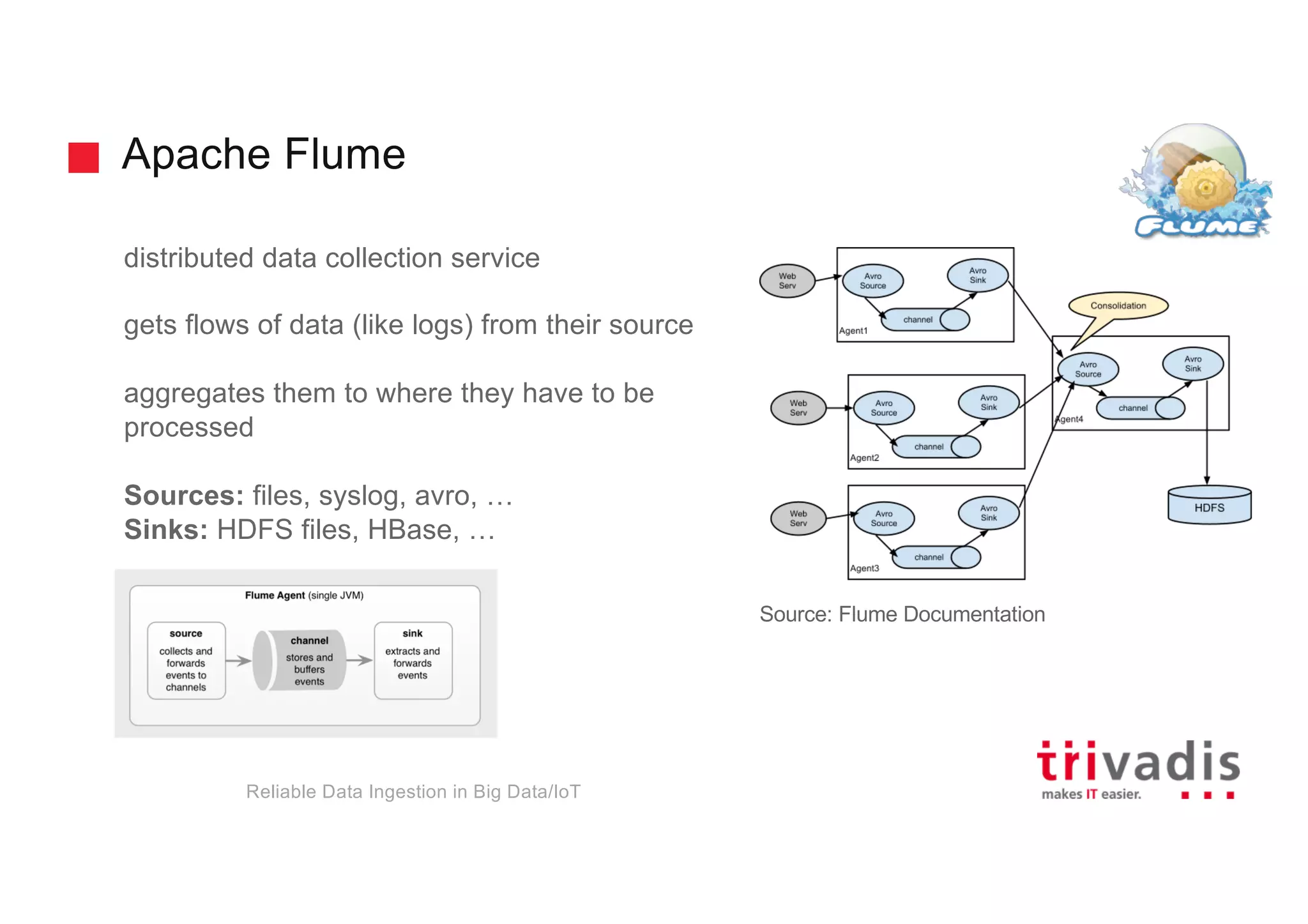

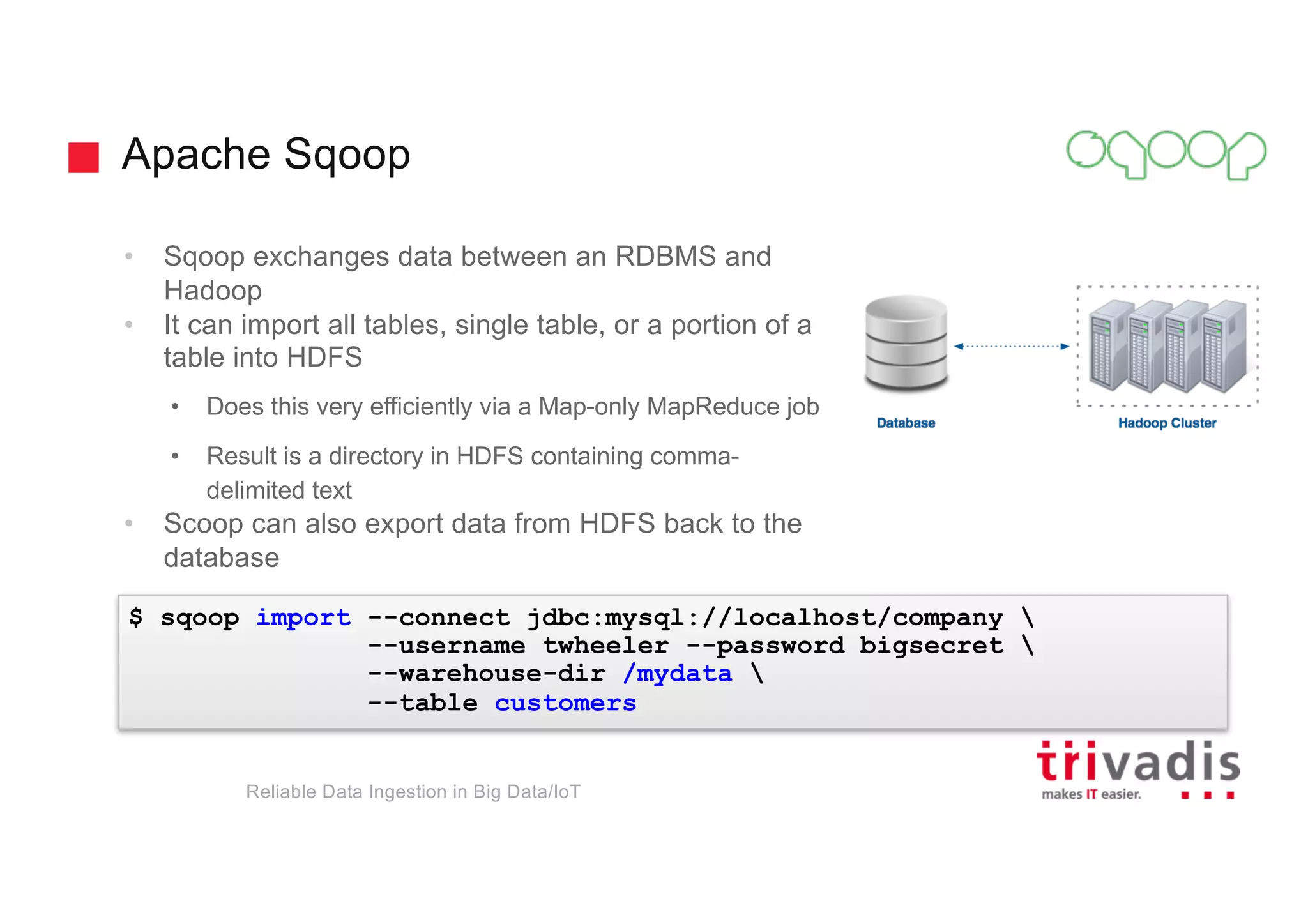

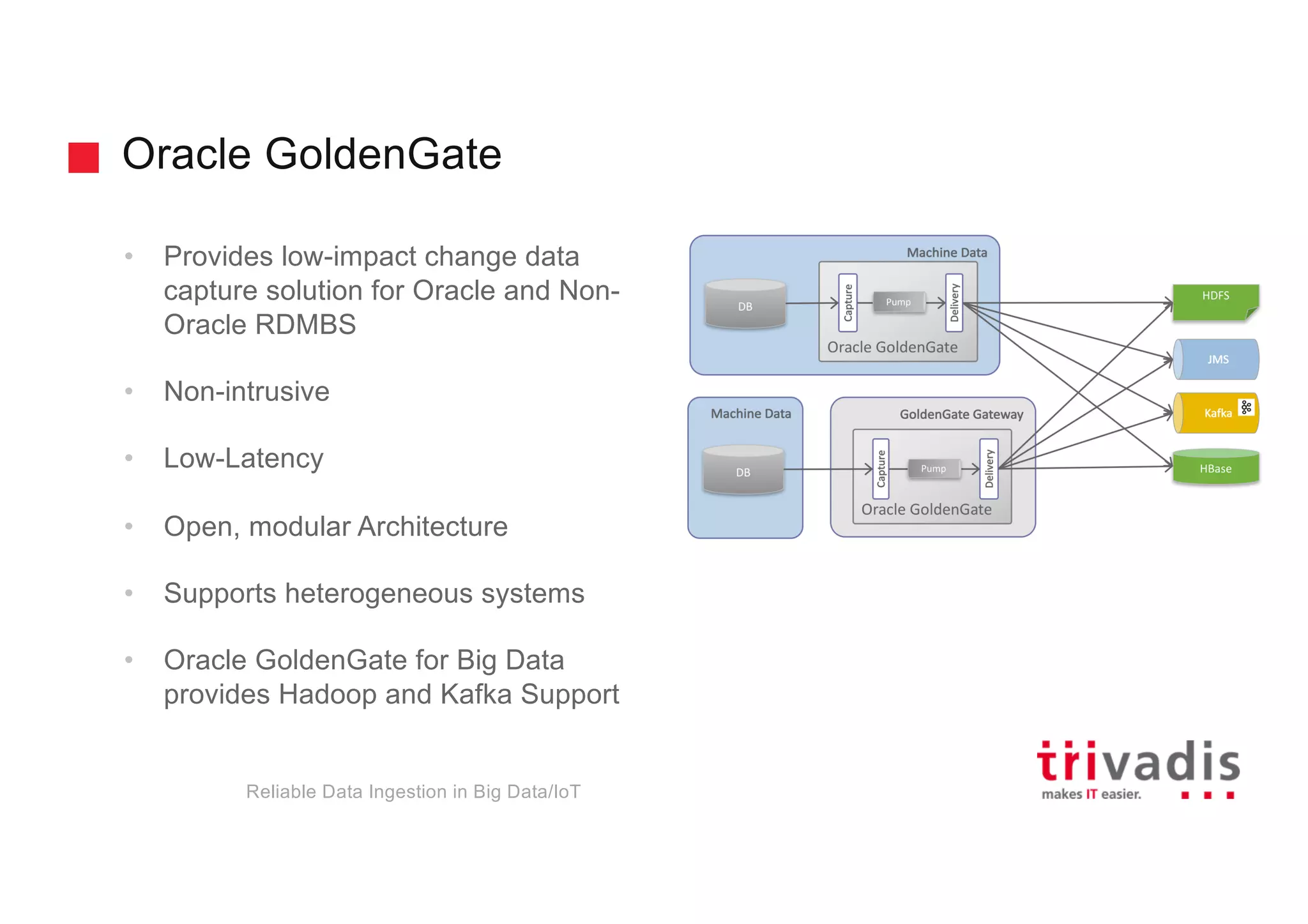

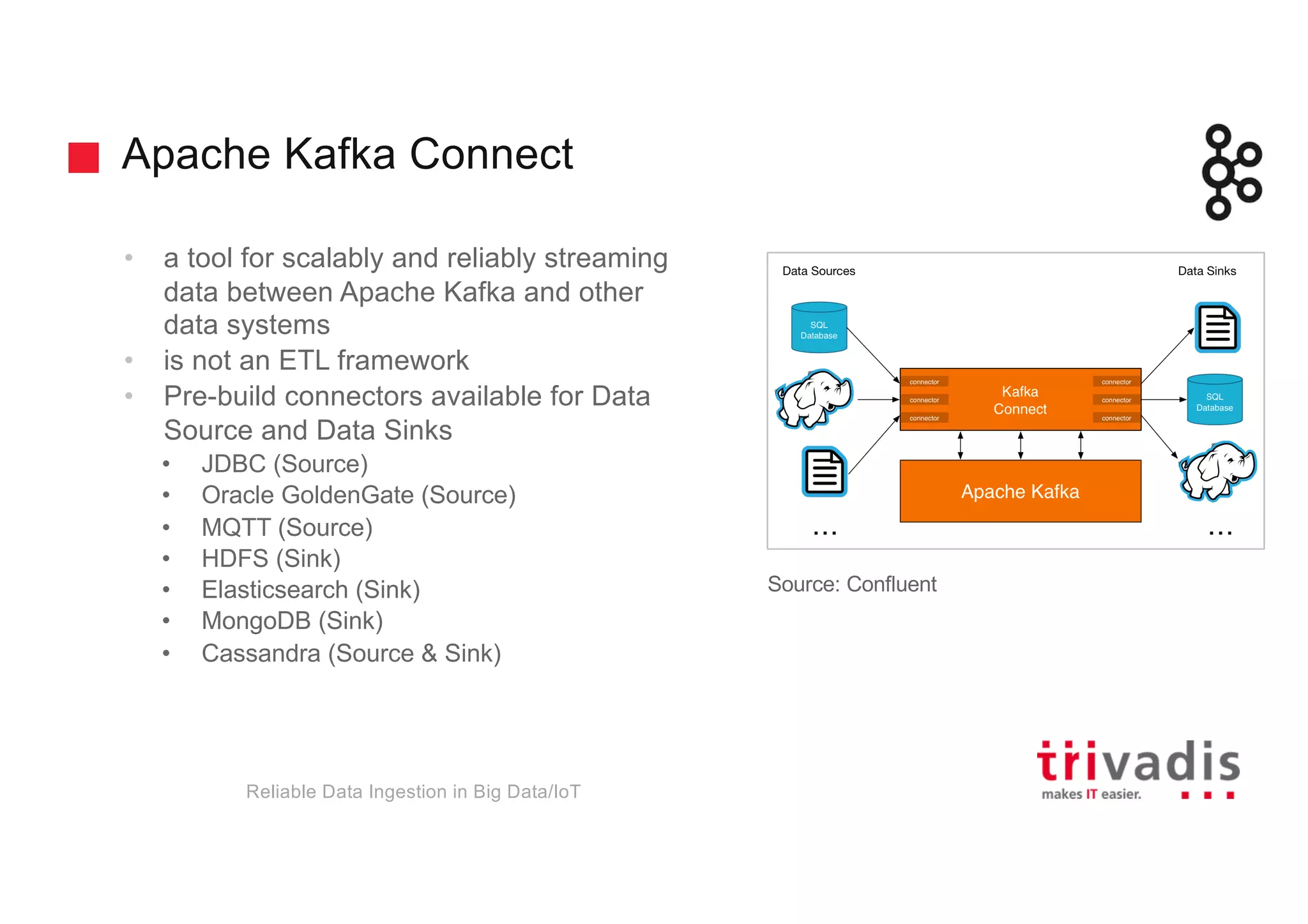

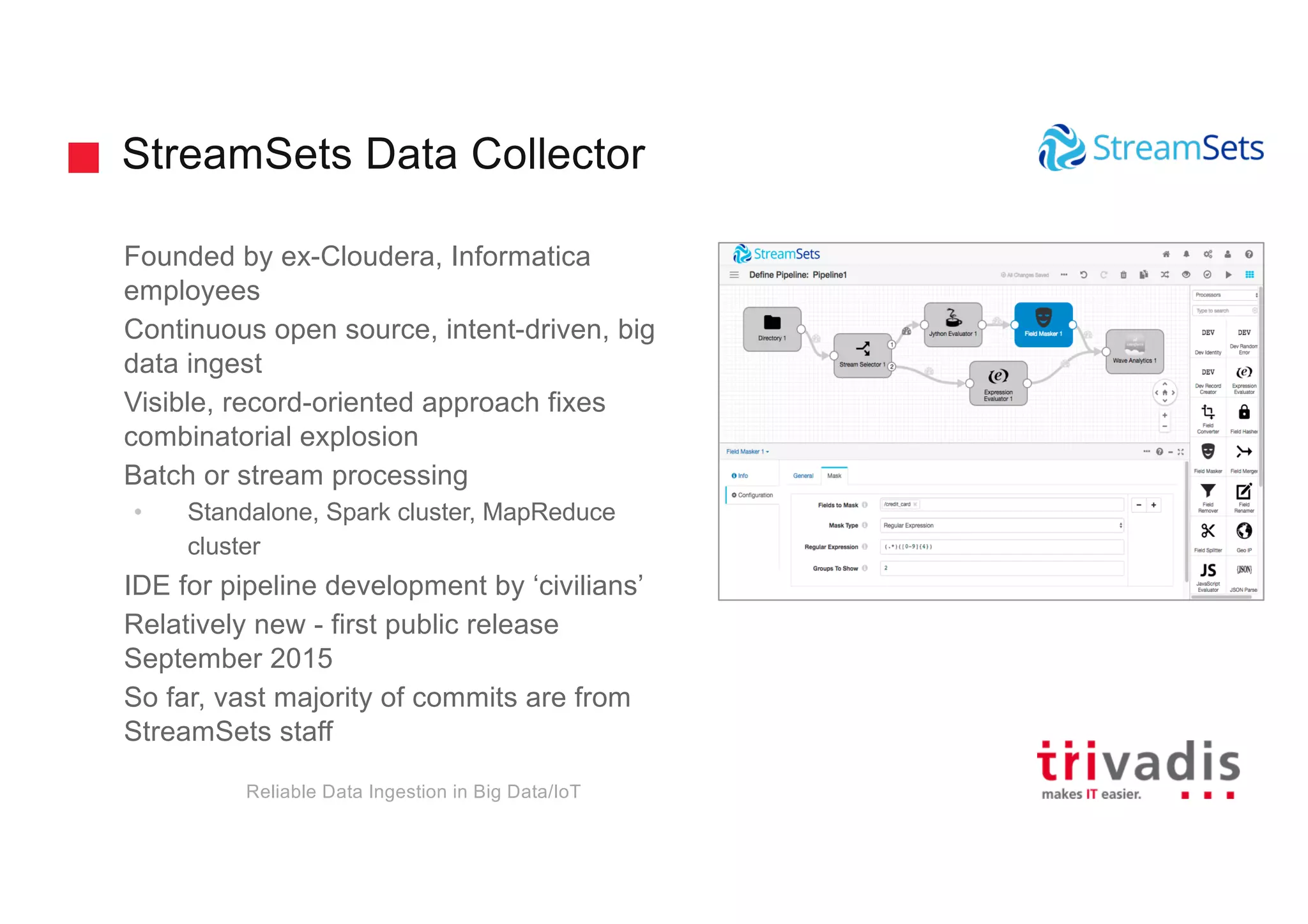

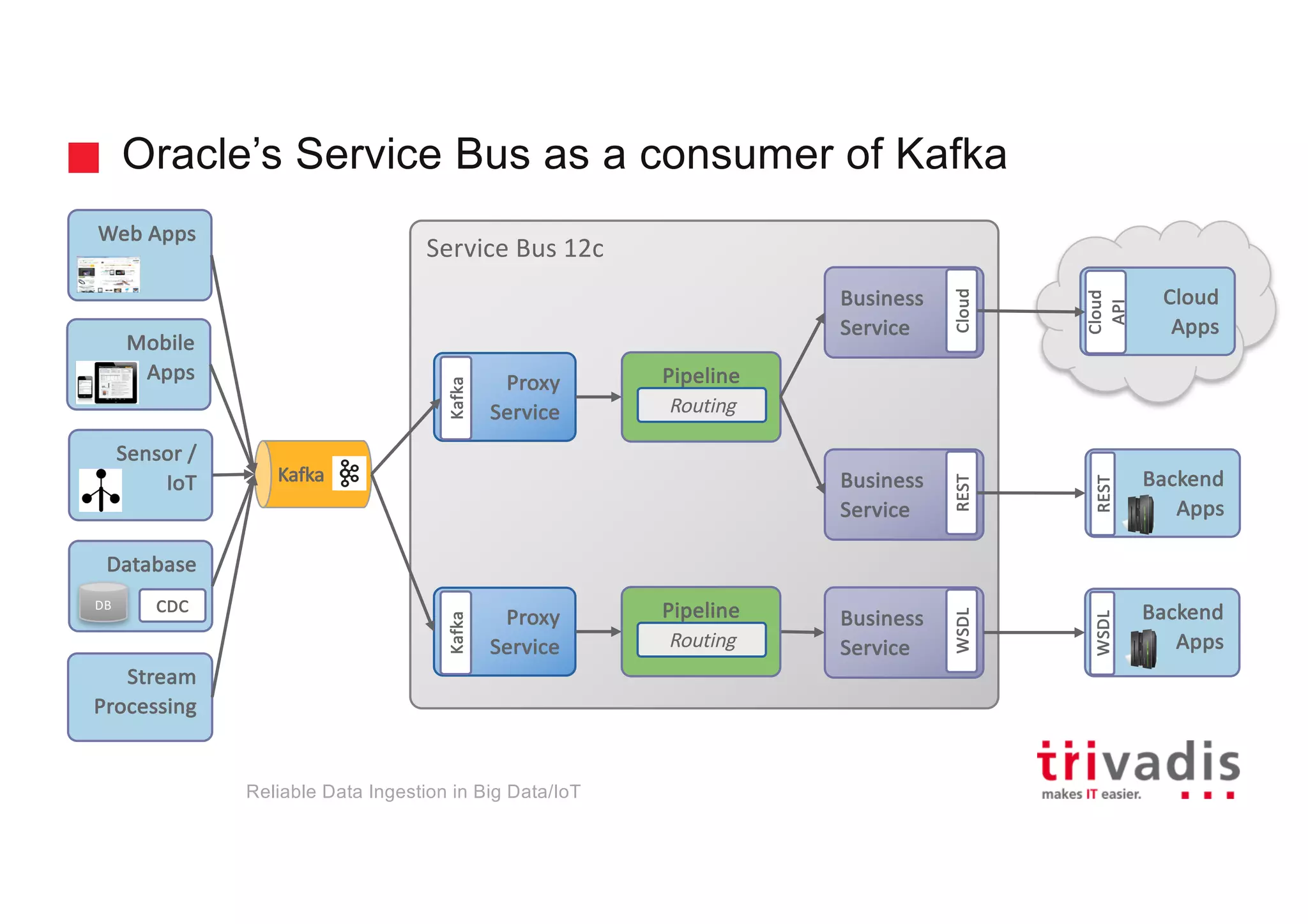

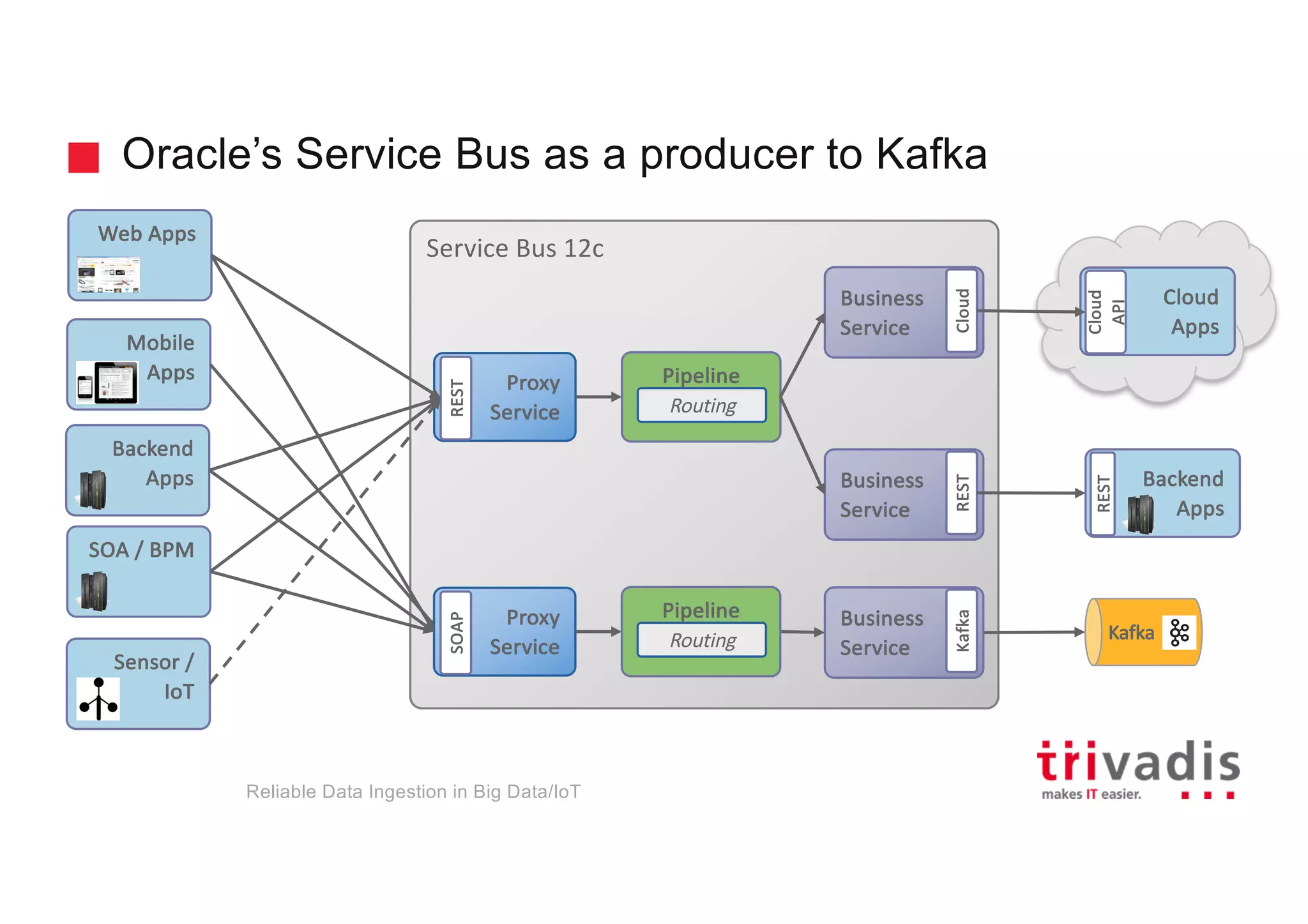

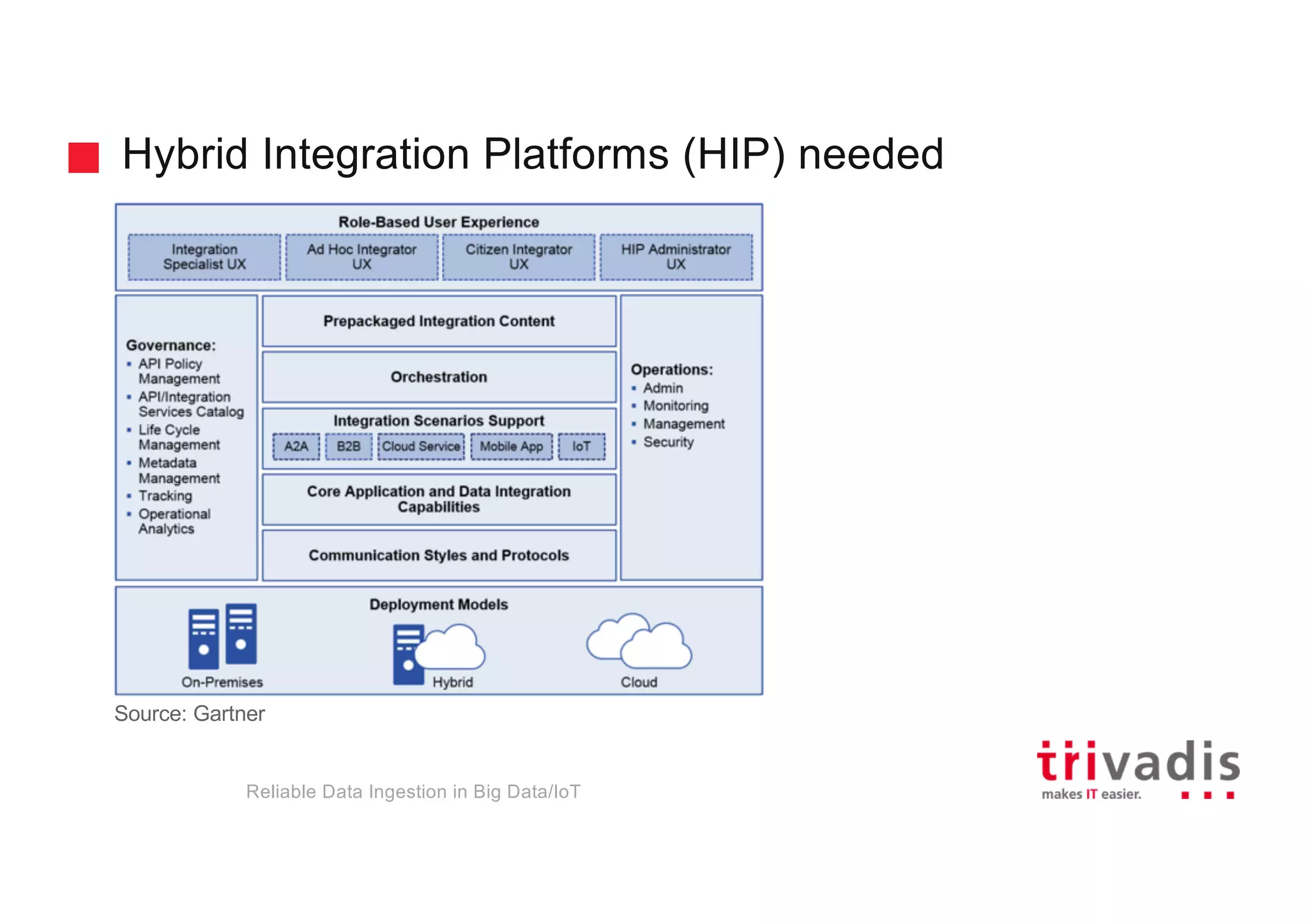

The document discusses reliable data ingestion in big data and IoT, highlighting the challenges and key elements of the process. It emphasizes the importance of using the right technology and describes the services provided by Trivadis, a leader in IT consulting and system integration. It also mentions tools such as Apache Kafka and Sqoop for data handling and integration, along with their roles in managing data flow efficiently.