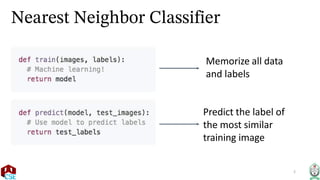

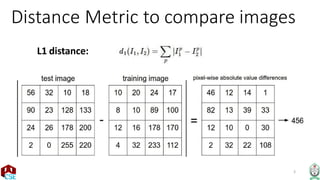

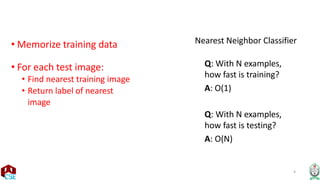

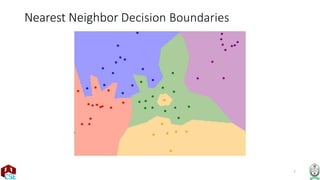

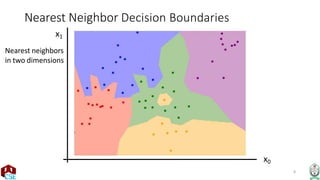

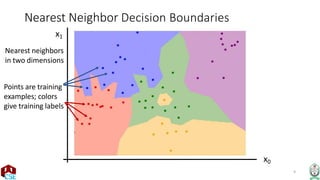

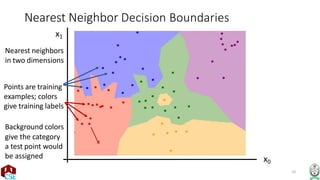

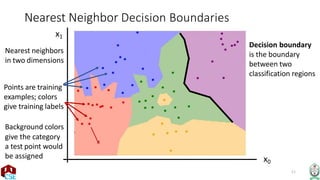

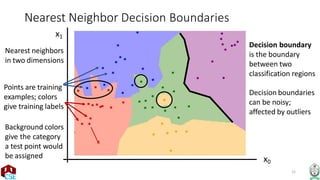

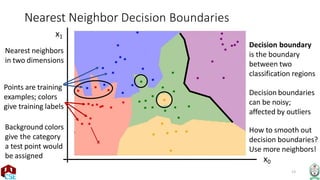

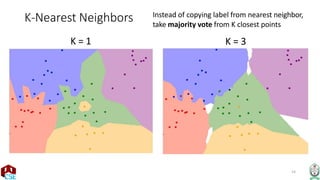

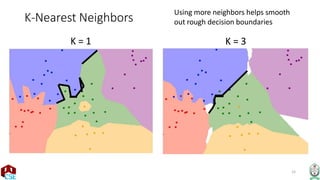

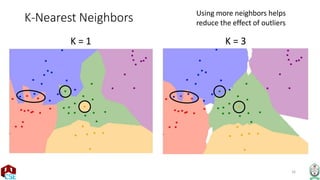

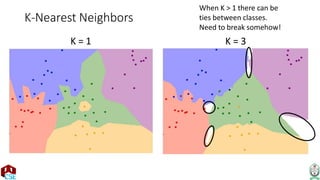

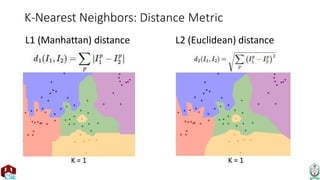

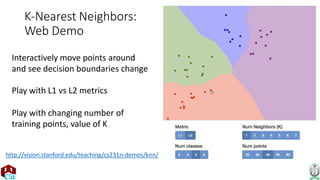

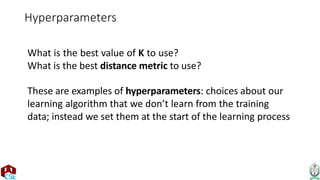

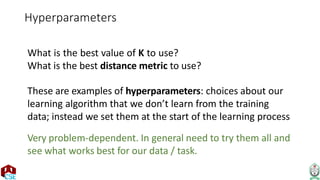

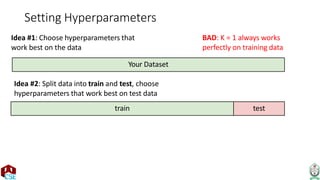

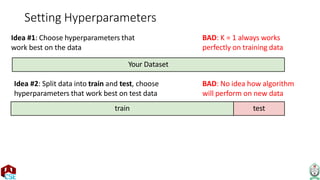

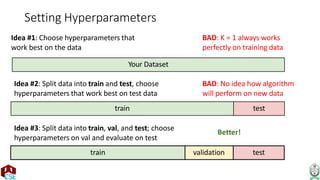

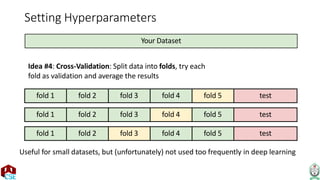

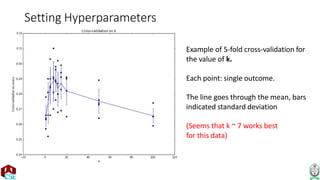

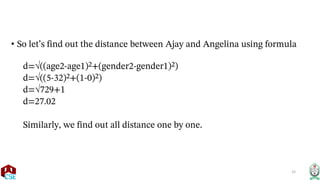

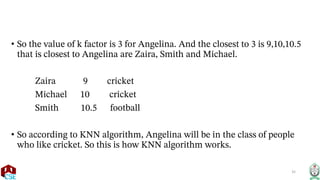

This document explains the k-nearest neighbor (k-NN) algorithm, a supervised learning method that classifies a point according to the labels of its nearest (most similar) training examples. It covers distance metrics, the choice of hyperparameters such as the number of neighbors (k), and the effect of noise on decision boundaries. It also works through examples and discusses how to select suitable hyperparameters using validation and cross-validation.
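To make the summary concrete, here is a minimal sketch of k-NN classification with k chosen by cross-validation. It is an illustration under stated assumptions, not the slides' own implementation: the helper names (`euclidean`, `knn_predict`, `cross_val_accuracy`, `make_toy_data`, `select_k`), the Euclidean metric, the candidate values of k, the fold count, and the synthetic two-cluster data with 10% flipped labels are all hypothetical choices made for this example.

```python
import math
import random
from collections import Counter


def euclidean(a, b):
    """Euclidean (L2) distance between two equal-length points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(train_X, train_y, query, k, distance=euclidean):
    """Label `query` by majority vote among its k nearest training points."""
    # Sort training indices by distance to the query and keep the k closest.
    order = sorted(range(len(train_X)), key=lambda i: distance(train_X[i], query))
    votes = Counter(train_y[i] for i in order[:k])
    # On a tied vote, most_common returns the label seen first,
    # which here is the label of the nearer neighbor.
    return votes.most_common(1)[0][0]


def cross_val_accuracy(X, y, k, folds=5, seed=0):
    """Mean k-NN accuracy over `folds` held-out splits (k-fold cross-validation)."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    scores = []
    for f in range(folds):
        held_out = idx[f::folds]  # every folds-th shuffled index forms one fold
        held_set = set(held_out)
        train = [i for i in idx if i not in held_set]
        train_X = [X[i] for i in train]
        train_y = [y[i] for i in train]
        correct = sum(knn_predict(train_X, train_y, X[i], k) == y[i] for i in held_out)
        scores.append(correct / len(held_out))
    return sum(scores) / folds


def make_toy_data(n_per_class=40, noise=0.1, seed=42):
    """Two 2-D Gaussian clusters; a fraction `noise` of labels is flipped (assumed setup)."""
    rng = random.Random(seed)
    X, y = [], []
    for label, (cx, cy) in ((0, (0.0, 0.0)), (1, (3.0, 3.0))):
        for _ in range(n_per_class):
            X.append((rng.gauss(cx, 1.0), rng.gauss(cy, 1.0)))
            y.append(1 - label if rng.random() < noise else label)
    return X, y


def select_k(X, y, candidates=(1, 3, 5, 7, 9, 15)):
    """Return the candidate k with the best cross-validated accuracy, plus all scores."""
    scores = {k: cross_val_accuracy(X, y, k) for k in candidates}
    best_k = max(scores, key=scores.get)
    return best_k, scores


X, y = make_toy_data()
best_k, scores = select_k(X, y)
for k, acc in scores.items():
    print(f"k={k:2d}  mean CV accuracy={acc:.3f}")
print("selected k:", best_k)
```

With noisy labels, k = 1 tends to follow individual mislabeled points, while a larger k averages over more neighbors and smooths the decision boundary. Cross-validation gives a way to compare these settings on held-out data rather than on the training set itself.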