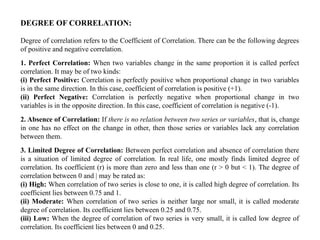

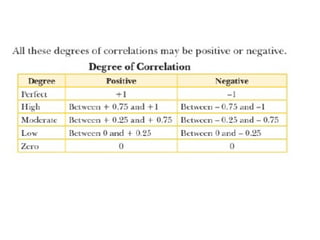

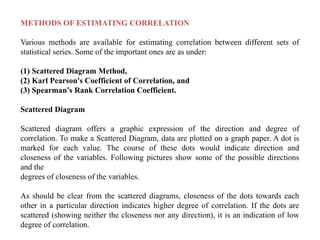

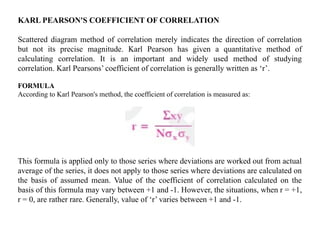

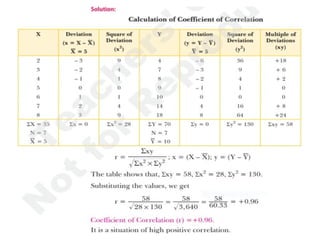

The document discusses Karl Pearson's correlation method, tracing its historical development and defining correlation as the relationship between two variables. It outlines the main types of correlation (positive, negative, linear, and non-linear) and emphasizes that correlation does not imply causation. It also explains how to compute Pearson's correlation coefficient and describes the properties of correlation coefficients.
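For reference, the standard deviation-from-the-mean form of Pearson's coefficient for $n$ paired observations $(x_i, y_i)$ is $r = \frac{\sum_i (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_i (x_i - \bar{x})^2}\,\sqrt{\sum_i (y_i - \bar{y})^2}}$. Below is a minimal Python sketch of that formula using only the standard library. The function name `pearson_r` and the sample data are hypothetical illustrations, not taken from the document's own worked examples, which may use an equivalent computational shortcut formula instead.

```python
import math
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson's correlation coefficient of paired samples (hypothetical helper)."""
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two pairs of observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Deviations of each observation from its variable's mean
    dx = [x - mean_x for x in xs]
    dy = [y - mean_y for y in ys]
    # Sum of cross-products (numerator) and sums of squared deviations
    sxy = sum(a * b for a, b in zip(dx, dy))
    sxx = sum(a * a for a in dx)
    syy = sum(b * b for b in dy)
    if sxx == 0 or syy == 0:
        # r is undefined when either variable does not vary at all
        raise ValueError("correlation is undefined for a zero-variance variable")
    return sxy / math.sqrt(sxx * syy)


# Usage: a perfectly increasing linear relationship gives r = 1.0,
# a perfectly decreasing one gives r = -1.0.
print(pearson_r([1, 2, 3], [2, 4, 6]))  # 1.0
print(pearson_r([1, 2, 3], [6, 4, 2]))  # -1.0
```

Because $r$ only captures linear association, a strong non-linear relationship can still produce a value near zero, and a value near $\pm 1$ says nothing about which variable, if either, causes the other.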