The document discusses data retention policies and the handling of confidential and sensitive data. It covers three areas:

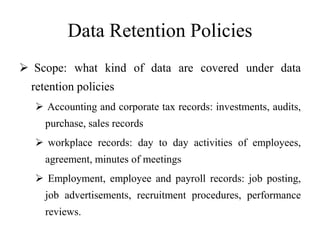

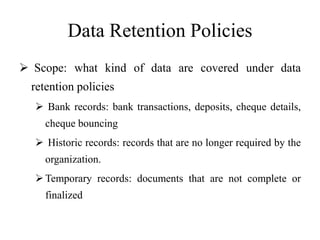

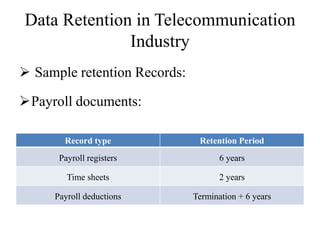

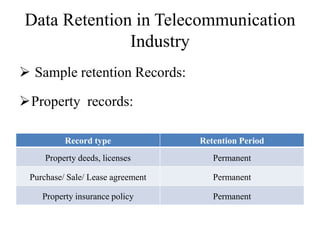

1) Data retention policies: their purpose, requirements, scope, and how they are managed. Retention periods differ by type of data.

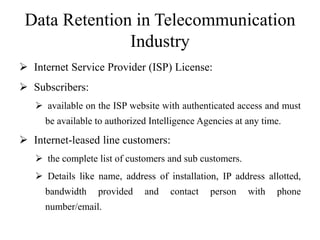

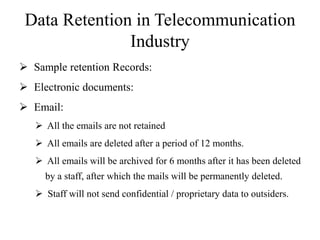

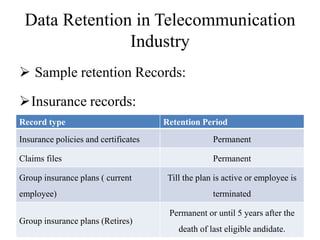

2) Laws and regulations governing data retention in India, particularly for telecommunication companies. The document outlines specific requirements for retaining call detail records, network logs, and other subscriber information.

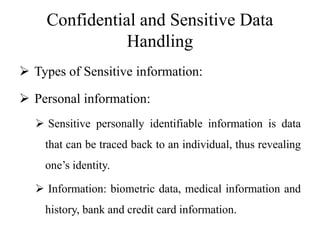

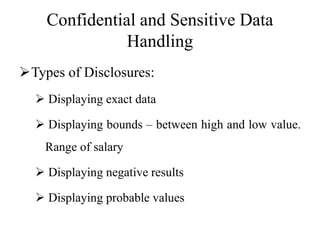

3) Types of sensitive data, including personal, business, and classified information. The document gives guidelines for handling such data through access policies, authentication, training, and other security practices.
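
The idea in item 1, that each type of data carries its own retention period, can be sketched as a simple lookup. This is only an illustration: the `RETENTION_DAYS` table, the `is_expired` helper, and every duration below are hypothetical placeholders, not values taken from the document or from any Indian regulation.

```python
from datetime import date, timedelta

# Hypothetical retention schedule. The category names echo the summary above,
# but the durations are placeholders and NOT regulatory or policy values.
RETENTION_DAYS = {
    "call_detail_record": 365,
    "network_log": 180,
    "subscriber_info": 730,
}


def is_expired(data_type: str, created_on: date, today: date) -> bool:
    """Return True once a record has outlived its retention period."""
    if data_type not in RETENTION_DAYS:
        # Refuse to guess: an unknown data type has no defined period.
        raise KeyError(f"no retention period defined for {data_type!r}")
    keep_until = created_on + timedelta(days=RETENTION_DAYS[data_type])
    return today > keep_until
```

Raising an error for an unknown data type, rather than falling back to a default, is one possible design choice: it keeps unclassified records from being deleted or retained by accident.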