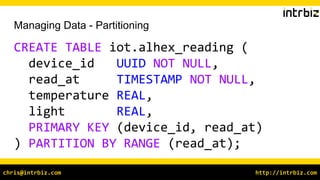

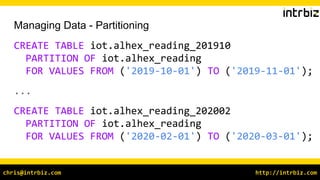

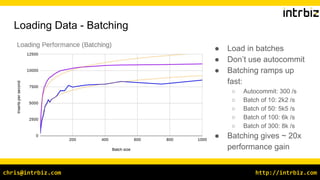

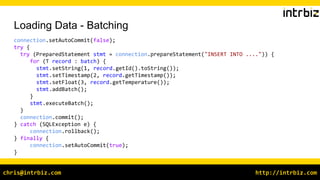

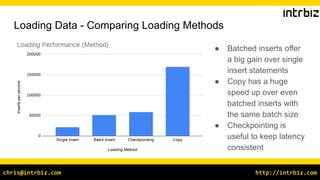

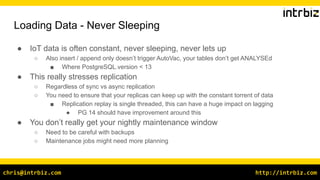

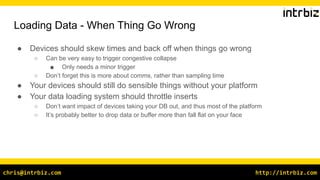

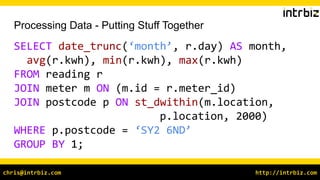

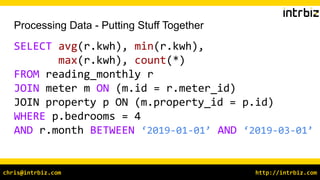

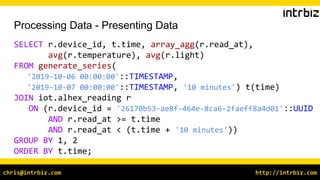

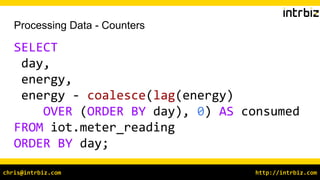

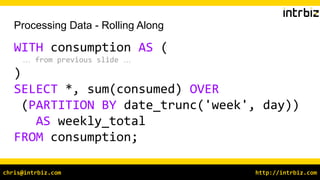

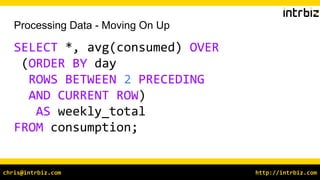

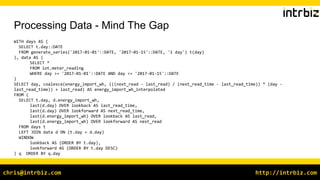

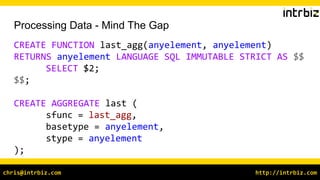

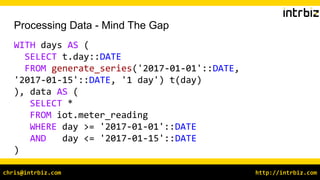

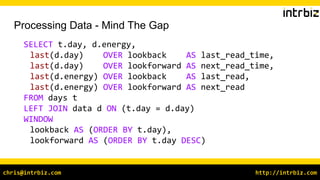

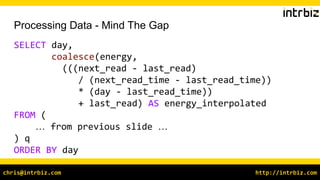

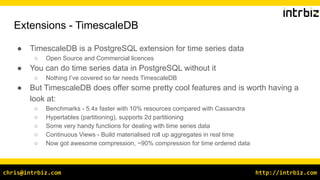

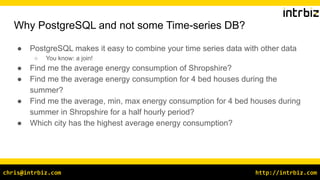

This document provides an overview of using PostgreSQL for IoT applications. Chris Ellis discusses why PostgreSQL is a good fit for IoT due to its flexibility and extensibility. He describes various ways of storing, loading, and processing IoT time series and sensor data in PostgreSQL, including partitioning, batch loading, and window functions. The document also briefly mentions the TimescaleDB extension for additional time series functionality.

![http://intrbiz.com

chris@intrbiz.com

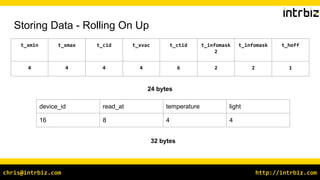

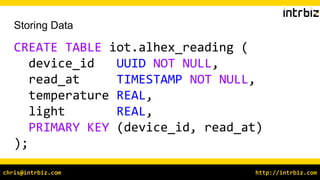

Storing Data - Rolling On Up

CREATE TABLE iot.daily_reading (

meter_id UUID NOT NULL,

read_range DATERANGE NOT NULL,

energy BIGINT,

energy_profile BIGINT[],

PRIMARY KEY (device_id, read_at)

);](https://image.slidesharecdn.com/postgresqlforiotpostgreslondon-210513103925/85/IOT-with-PostgreSQL-12-320.jpg)
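The slide shows only the rollup table, so the following is a minimal sketch of how it might be filled, not the method from the talk. It assumes `iot.daily_reading` already exists with the four columns listed on the slide. The raw table `iot.reading`, its columns, and the example date are hypothetical names introduced purely for illustration.

```sql
-- Hypothetical raw readings table (an assumption, not from the slides).
CREATE SCHEMA IF NOT EXISTS iot;

CREATE TABLE IF NOT EXISTS iot.reading (
    meter_id UUID        NOT NULL,
    read_at  TIMESTAMPTZ NOT NULL,
    energy   BIGINT,
    PRIMARY KEY (meter_id, read_at)
);

-- Roll one day of raw readings up into one row per meter.
-- The day boundary follows the session TimeZone setting,
-- because read_at::date converts using that setting.
INSERT INTO iot.daily_reading (meter_id, read_range, energy, energy_profile)
SELECT r.meter_id,
       daterange(r.read_day, r.read_day + 1, '[)'),   -- half-open one-day range
       sum(r.energy)::bigint,                         -- sum(bigint) yields numeric, so cast back
       array_agg(r.energy ORDER BY r.read_at)         -- readings in time order
FROM (
    SELECT meter_id, read_at, energy, read_at::date AS read_day
    FROM iot.reading
    WHERE read_at >= TIMESTAMPTZ '2021-05-12 00:00:00'
      AND read_at <  TIMESTAMPTZ '2021-05-13 00:00:00'
) AS r
GROUP BY r.meter_id, r.read_day;
```

Keeping the whole day in a single row with an array profile trades per-reading rows for one compact row per meter per day, which seems to be the point of the "rolling up" slide. Whether the total should be a sum or a difference of cumulative register values depends on what the raw `energy` column holds, which the slides shown here do not say.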