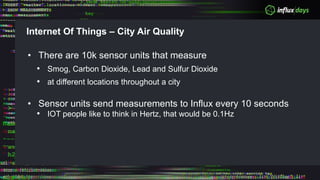

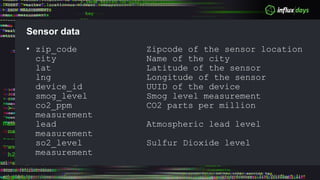

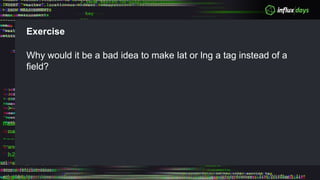

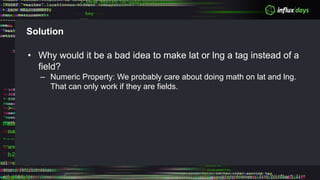

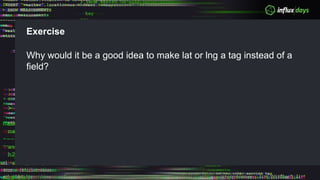

The document outlines a workshop agenda for new practitioners focusing on the TICK Stack, including sessions on installation, writing queries, architecting InfluxEnterprise, and optimizing data ingestion. It emphasizes best practices for data schema design within InfluxDB, particularly the choice between tags and fields, and provides examples from IoT applications and air quality monitoring. Key considerations include the impact of schema on queries, the importance of optimizing data ingestion, and strategies for efficient query performance.

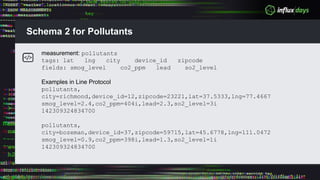

![The Line Protocol

• Self describing data

– Points are written to InfluxDB using the Line Protocol, which has the following format:

<measurement>[,<tag-key>=<tag-value>...] <field-key>=<field-value>[,<field-key>=<field-value>...] [unix-nano-timestamp]

– This provides extremely high flexibility as new metrics are identified for collection into InfluxDB. New measure to capture? Just send it to InfluxDB. It’s that easy.

cpu_load,hostname=server02,az=us_west usage_user=24.5,usage_idle=15.3 1234567890000000

Parts of the example line, left to right: Measurement, Tag Set, Field Set, Timestamp](https://image.slidesharecdn.com/influxdayslondonoptimizinginflux-180619163927/85/OPTIMIZING-THE-TICK-STACK-5-320.jpg)
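The format on the slide above can be illustrated with a short Python sketch that assembles one point into a line of Line Protocol. The function name `to_line_protocol` and its parameters are hypothetical, not part of any InfluxDB client library. The sketch assumes float field values and keys and values without spaces, commas, or equals signs, so it performs no escaping.

```python
def to_line_protocol(measurement, tags, fields, timestamp=None):
    """Format one point as a Line Protocol string.

    Illustrative only: assumes float field values and performs no
    escaping of spaces, commas, or equals signs.
    """
    if not fields:
        # A point needs at least one field; tags and timestamp are optional.
        raise ValueError("at least one field is required")
    # Tags follow the measurement, each introduced by a comma.
    tag_part = "".join(f",{key}={value}" for key, value in tags.items())
    # Fields are comma-separated and set off from the tag set by one space.
    field_part = ",".join(f"{key}={value}" for key, value in fields.items())
    line = f"{measurement}{tag_part} {field_part}"
    if timestamp is not None:
        line += f" {timestamp}"
    return line


# Rebuild the example line from the slide.
line = to_line_protocol(
    "cpu_load",
    {"hostname": "server02", "az": "us_west"},
    {"usage_user": 24.5, "usage_idle": 15.3},
    1234567890000000,
)
print(line)
# cpu_load,hostname=server02,az=us_west usage_user=24.5,usage_idle=15.3 1234567890000000
```

In practice a client library or Telegraf would normally handle escaping and type suffixes; the sketch only shows how the four parts of a line fit together.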

![What if my plugin sends data like that to InfluxDB?

Write something that sits between your plugin and InfluxDB to sanitize the data, OR use one of our write plugins:

Example - Telegraf’s Graphite input plugin: Takes input like…

sensu.metric.net.server0.eth0.rx_packets 461295119435 1444234982

sensu.metric.net.server0.eth0.tx_bytes 1093086493388480 1444234982

sensu.metric.net.server0.eth0.rx_bytes 1015633926034834 1444234982

…and parses it with the following template…

["sensu.metric.* ..measurement.host.interface.field"]

…resulting in the following points in line protocol hitting the database:

net,host=server0,interface=eth0 rx_packets=461295119435 1444234982

net,host=server0,interface=eth0 tx_bytes=1093086493388480 1444234982

net,host=server0,interface=eth0 rx_bytes=1015633926034834 1444234982](https://image.slidesharecdn.com/influxdayslondonoptimizinginflux-180619163927/85/OPTIMIZING-THE-TICK-STACK-7-320.jpg)
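To make the template mapping on this slide concrete, here is a simplified Python sketch of how the template part `..measurement.host.interface.field` lines up with the dot-separated Graphite path. It is an illustration only, not Telegraf's parser: the function `apply_template` is hypothetical, it ignores the `sensu.metric.*` filter, and it does not handle wildcard template segments or timestamp conversion.

```python
def apply_template(template, graphite_line):
    """Map one Graphite plaintext line to Line Protocol using a template.

    Simplified illustration: each template segment is matched
    position-by-position against the dot-separated metric path.
    """
    path, value, timestamp = graphite_line.split()
    measurement = None
    field = "value"  # assumed default field key when the template names none
    tags = {}
    for role, part in zip(template.split("."), path.split(".")):
        if role == "":
            continue  # empty segment: drop this part of the path
        if role == "measurement":
            measurement = part
        elif role == "field":
            field = part
        else:
            tags[role] = part  # any other name becomes a tag key
    tag_part = "".join(f",{key}={val}" for key, val in tags.items())
    return f"{measurement}{tag_part} {field}={value} {timestamp}"


print(apply_template(
    "..measurement.host.interface.field",
    "sensu.metric.net.server0.eth0.rx_packets 461295119435 1444234982",
))
# net,host=server0,interface=eth0 rx_packets=461295119435 1444234982
```

The two leading empty segments discard `sensu` and `metric`, `net` becomes the measurement, `server0` and `eth0` become the `host` and `interface` tags, and the last path element becomes the field key.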