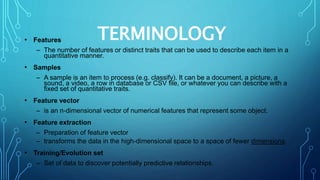

The document provides an introduction to machine learning, covering its definition, key terminology, and main techniques such as classification, clustering, and regression. It outlines the types of learning, including supervised and unsupervised learning, and discusses popular software tools used in the field. Use cases range from text summarization to fraud detection and sentiment analysis, demonstrating practical applications of machine learning across different sectors.