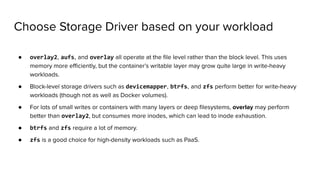

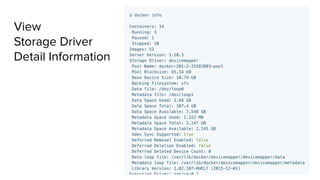

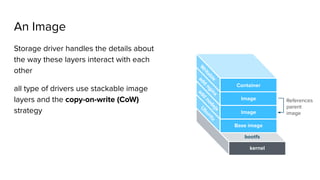

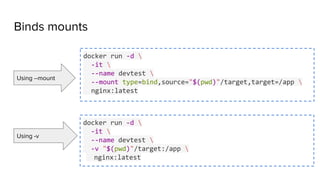

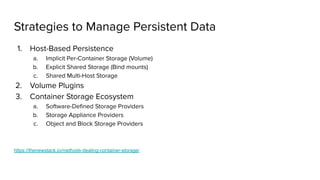

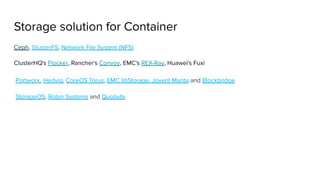

Docker stores images and containers through a pluggable storage driver interface, so the same image can be kept on disk in different ways. Common storage drivers include overlay2, aufs, devicemapper, and vfs. An image is a stack of read-only layers, and each container adds a thin writable layer on top. Data that must outlive a container is kept in volumes or bind mounts, while tmpfs mounts hold data in host memory only and are never persisted. Strategies for managing persistent container data include host-based storage, volume plugins, and container storage platforms.
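
The layering described above can be pictured with a small model. This is only a sketch, not how any storage driver is implemented: layers are plain dictionaries, the paths and contents are made up, and `start_container` is a hypothetical helper. Python's `collections.ChainMap` happens to behave like a union mount: lookups fall through from the top layer to the bottom, and writes land only in the first (writable) map.

```python
from collections import ChainMap

# Read-only image layers, listed bottom to top (illustrative contents only).
base_layer = {"/etc/os-release": "debian", "/usr/bin/node": "node v4"}
app_layer = {"/usr/src/app/package.json": '{"name": "demo"}'}
image = [base_layer, app_layer]


def start_container(image_layers):
    """Return a merged view: one fresh writable dict over the shared layers."""
    writable = {}
    # ChainMap searches its maps left to right, so the topmost layer goes first.
    return ChainMap(writable, *reversed(image_layers))


c1 = start_container(image)
c2 = start_container(image)

# The write goes to c1's writable layer; the image layers stay untouched.
c1["/etc/os-release"] = "patched"

print(c1["/etc/os-release"])          # patched
print(c2["/etc/os-release"])          # debian
print(base_layer["/etc/os-release"])  # debian
print(c1.maps[0])                     # {'/etc/os-release': 'patched'}
```

Both containers share the same image layers, which is why starting many containers from one image costs little extra disk space in this model.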

![FROM node:argon
# Create app directory
RUN mkdir -p /usr/src/app
WORKDIR /usr/src/app
# Install app dependencies
COPY package.json /usr/src/app/
RUN npm install
# Bundle app source
COPY . /usr/src/app
EXPOSE 8080
CMD [ "npm", "start" ]
Each instruction in the Dockerfile adds a layer to the image](https://image.slidesharecdn.com/dockerstorageintrodetailtagejlp12-190905233728/85/Introduction-to-Docker-storage-volume-and-image-13-320.jpg)
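
To make the instruction-to-layer mapping on this slide concrete, here is a rough sketch that walks the same Dockerfile and labels each step. It rests on an assumption that should be stated loudly: it follows the rule of thumb from Docker's best-practices guidance that only `RUN`, `COPY`, and `ADD` add filesystem content, while other instructions only record metadata. Real builders are more nuanced (for example, `WORKDIR` may create a missing directory), and the `parse_instructions` helper is hypothetical and ignores line continuations.

```python
DOCKERFILE = """\
FROM node:argon
# Create app directory
RUN mkdir -p /usr/src/app
WORKDIR /usr/src/app
# Install app dependencies
COPY package.json /usr/src/app/
RUN npm install
# Bundle app source
COPY . /usr/src/app
EXPOSE 8080
CMD [ "npm", "start" ]
"""

# Assumption: rule of thumb, not an exact description of every builder.
FILESYSTEM_INSTRUCTIONS = {"RUN", "COPY", "ADD"}


def parse_instructions(text):
    """Yield (instruction, arguments) pairs, skipping blank lines and comments."""
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        keyword, _, args = line.partition(" ")
        yield keyword.upper(), args


steps = list(parse_instructions(DOCKERFILE))
fs_steps = [step for step in steps if step[0] in FILESYSTEM_INSTRUCTIONS]

for keyword, args in steps:
    kind = "filesystem layer" if keyword in FILESYSTEM_INSTRUCTIONS else "metadata only"
    print(f"{keyword:<8} {kind:<17} {args}")
```

Running `docker history` on a built image is the practical way to check which steps actually contributed size.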

![Resources for deep dive
1. https://docs.docker.com/storage/
2. Deep dive into Docker storage drivers [Jerome Petazzoni]
   a. Video - https://www.youtube.com/watch?v=9oh_M11-foU
   b. Presentation Slides -
3. https://integratedcode.us/2016/08/30/storage-drivers-in-docker-a-deep-dive/
4. https://thenewstack.io/methods-dealing-container-storage/
5. https://blog.mobyproject.org/where-are-containerds-graph-drivers-145fc9b7255
6. https://blog.jessfraz.com/post/the-brutally-honest-guide-to-docker-graphdrivers/](https://image.slidesharecdn.com/dockerstorageintrodetailtagejlp12-190905233728/85/Introduction-to-Docker-storage-volume-and-image-25-320.jpg)

![Manifest file
{
  "schemaVersion": 2,
  "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
  "config": {
    "mediaType": "application/vnd.docker.container.image.v1+json",
    "size": 190,
    "digest": "sha256:efe184abb97e76d7d900b2e97171cc20830b6b1b0e0fe504a4ee7097a6b5c91b"
  },
  "layers": [
    {
      "mediaType": "application/vnd.docker.image.rootfs.diff.tar.gzip",
      "size": 170,
      "digest": "sha256:9964c16915b8956cb01eb77028b1fd1976287b5ec87cc1663844a0bd32933a47"
    }
  ]
}](https://image.slidesharecdn.com/dockerstorageintrodetailtagejlp12-190905233728/85/Introduction-to-Docker-storage-volume-and-image-30-320.jpg)
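
As an illustration of how a client might read this manifest, the sketch below parses the JSON from the slide, collects the blob digests it references, and sums their sizes. The helper names (`blob_digests`, `total_blob_size`, `matches_digest`) are hypothetical, and the digest check simply assumes the `algorithm:hex` form shown in the manifest; no registry is contacted.

```python
import hashlib
import json

MANIFEST_JSON = """
{
  "schemaVersion": 2,
  "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
  "config": {
    "mediaType": "application/vnd.docker.container.image.v1+json",
    "size": 190,
    "digest": "sha256:efe184abb97e76d7d900b2e97171cc20830b6b1b0e0fe504a4ee7097a6b5c91b"
  },
  "layers": [
    {
      "mediaType": "application/vnd.docker.image.rootfs.diff.tar.gzip",
      "size": 170,
      "digest": "sha256:9964c16915b8956cb01eb77028b1fd1976287b5ec87cc1663844a0bd32933a47"
    }
  ]
}
"""


def blob_digests(manifest):
    """Config digest first, then layer digests in the order the manifest lists them."""
    return [manifest["config"]["digest"]] + [layer["digest"] for layer in manifest["layers"]]


def total_blob_size(manifest):
    """Sum of the sizes (in bytes) the manifest declares for config and layers."""
    return manifest["config"]["size"] + sum(layer["size"] for layer in manifest["layers"])


def matches_digest(data, digest):
    """Check downloaded bytes against an 'algorithm:hex' digest string."""
    algorithm, _, expected_hex = digest.partition(":")
    return hashlib.new(algorithm, data).hexdigest() == expected_hex


manifest = json.loads(MANIFEST_JSON)
for digest in blob_digests(manifest):
    print(digest)
print(total_blob_size(manifest))  # 360
```

Because each blob is addressed by the hash of its content, two images that reference the same layer digest can share a single stored copy of that layer.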

![Can we merge/flatten layers into a single layer?
Only partly with docker commit: it saves the container's writable layer as one new layer on top of the existing image layers. For a truly single-layer image, pipe docker export into docker import.
docker commit <container id> <new image name>
Example: Commit a container with new CMD and EXPOSE instructions
docker commit --change='CMD ["apachectl", "-DFOREGROUND"]' -c "EXPOSE 80" c3f279d17e0a ejlp12/testimage:version4](https://image.slidesharecdn.com/dockerstorageintrodetailtagejlp12-190905233728/85/Introduction-to-Docker-storage-volume-and-image-32-320.jpg)
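
A small model may help separate two operations that are easy to conflate: committing a container, which appends its changes as one more layer, and flattening, which merges the whole stack into a single layer. This is a sketch under stated assumptions, not Docker's implementation: layers are dictionaries, `WHITEOUT` is a hypothetical marker for a deleted path, and `commit` and `flatten` are illustrative helpers.

```python
WHITEOUT = None  # hypothetical marker: the path was deleted in this layer


def commit(image_layers, writable_layer):
    """Model of a commit: the container's changes become one additional layer."""
    return image_layers + [dict(writable_layer)]


def flatten(layers):
    """Merge layers bottom to top into a single layer, applying deletions."""
    merged = {}
    for layer in layers:
        for path, content in layer.items():
            if content is WHITEOUT:
                merged.pop(path, None)  # a deletion hides the path from lower layers
            else:
                merged[path] = content  # upper layers override lower ones
    return [merged]


image = [
    {"/bin/sh": "shell", "/tmp/cache": "junk"},
    {"/usr/src/app/server.js": "v1"},
]
container_changes = {"/usr/src/app/server.js": "v2", "/tmp/cache": WHITEOUT}

committed = commit(image, container_changes)
flat = flatten(committed)

print(len(committed))  # 3
print(len(flat))       # 1
print(flat[0])         # {'/bin/sh': 'shell', '/usr/src/app/server.js': 'v2'}
```

In this model the committed image still carries the old `server.js` and the deleted cache file in its lower layers, while the flattened image keeps only the final state.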