This presentation explores Docker storage drivers: their history, how they work, and why they matter. It covers drivers such as aufs, btrfs, and Device Mapper, and explains the copy-on-write mechanisms that let many containers share the same image data efficiently. Its central argument is that without copy-on-write, Docker would be far less practical and efficient on Linux systems.
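To make the copy-on-write idea concrete, here is a toy in-memory model in Python. It is a sketch under simplifying assumptions, not how aufs, btrfs, or Device Mapper are implemented: `LayeredFS` and `WHITEOUT` are hypothetical names, layers are plain dictionaries mapping paths to contents, and copy-up works on whole files (some drivers share data at block level instead). Image layers are shared by every container and never modified. Each container gets an empty writable layer on top, reads fall through to the first layer that mentions a path, modified files are copied up before they change, and deletions are recorded as whiteouts.

```python
# Toy model of copy-on-write layering (hypothetical names; not Docker's code).
from typing import Dict, List, Optional

WHITEOUT = object()  # sentinel: "this path was deleted in an upper layer"

Layer = Dict[str, object]


class LayeredFS:
    """Shared read-only image layers plus one private writable layer on top."""

    def __init__(self, image_layers: List[Layer]) -> None:
        self.image_layers = image_layers  # index 0 is the topmost image layer
        self.rw: Layer = {}  # per-container writable layer, initially empty

    def read(self, path: str) -> Optional[bytes]:
        # Search from the top down; the first layer that mentions the path wins.
        for layer in [self.rw, *self.image_layers]:
            if path in layer:
                value = layer[path]
                # A whiteout hides any copy of the file in lower layers.
                return value if isinstance(value, bytes) else None
        return None

    def write(self, path: str, data: bytes) -> None:
        # New or replaced files go only to the writable layer.
        self.rw[path] = data

    def append(self, path: str, data: bytes) -> None:
        # Copy-up: bring the current content into the writable layer, then modify it.
        self.rw[path] = (self.read(path) or b"") + data

    def delete(self, path: str) -> None:
        # Lower layers are read-only, so deletion is recorded as a whiteout.
        self.rw[path] = WHITEOUT


# Two containers started from the same image share its layers without copying them.
base: Layer = {"/etc/motd": b"hello"}
python_layer: Layer = {"/usr/bin/python3": b"<binary>"}
image = [python_layer, base]

c1 = LayeredFS(image)
c2 = LayeredFS(image)
c1.write("/usr/lib/python3/IPython", b"<package>")  # e.g. the effect of a pip install
c2.append("/etc/motd", b" world")                   # copy-up, then modify
c2.delete("/usr/bin/python3")                       # whiteout visible in c2 only
```

Because `c1` and `c2` reference the same `image` list, starting another container in this model costs only an empty dictionary, which is the kind of efficiency the summary attributes to copy-on-write.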

If you've never seen Docker in action ...

This will help!

```
jpetazzo@tarrasque:~$ docker run -ti python bash
root@75d4bf28c8a5:/# pip install IPython
Downloading/unpacking IPython
  Downloading ipython-2.3.1-py3-none-any.whl (2.8MB): 2.8MB downloaded
Installing collected packages: IPython
Successfully installed IPython
Cleaning up...
root@75d4bf28c8a5:/# ipython
Python 3.4.2 (default, Jan 22 2015, 07:33:45)
Type "copyright", "credits" or "license" for more information.

IPython 2.3.1 -- An enhanced Interactive Python.
?         -> Introduction and overview of IPython's features.
%quickref -> Quick reference.
help      -> Python's own help system.
object?   -> Details about 'object', use 'object??' for extra details.

In [1]:
```

![Slide 7: Docker in action](https://image.slidesharecdn.com/dockerstoragedrivers-150319174627-conversion-gate01/85/Docker-storage-drivers-by-Jerome-Petazzoni-7-320.jpg)

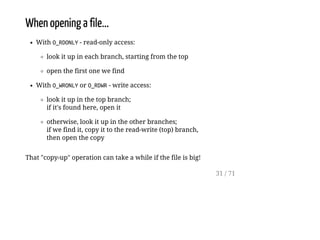

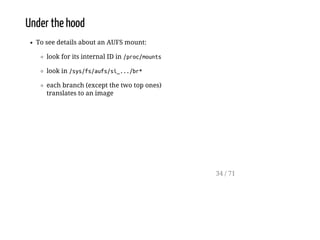

![Example

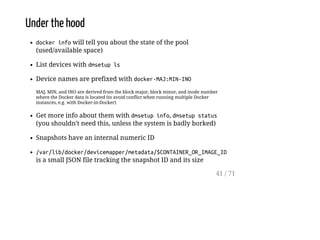

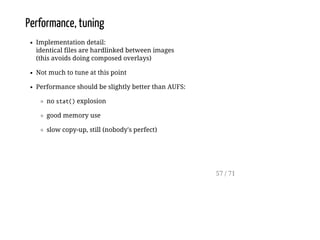

dockerhost#grepc7af/proc/mounts

none/mnt/.../c7af...a63daufsrw,relatime,si=2344a8ac4c6c6e5500

dockerhost#grep./sys/fs/aufs/si_2344a8ac4c6c6e55/br[0-9]*

/sys/fs/aufs/si_2344a8ac4c6c6e55/br0:/mnt/c7af...a63d=rw

/sys/fs/aufs/si_2344a8ac4c6c6e55/br1:/mnt/c7af...a63d-init=ro+wh

/sys/fs/aufs/si_2344a8ac4c6c6e55/br2:/mnt/b39b...a462=ro+wh

/sys/fs/aufs/si_2344a8ac4c6c6e55/br3:/mnt/615c...520e=ro+wh

/sys/fs/aufs/si_2344a8ac4c6c6e55/br4:/mnt/8373...cea2=ro+wh

/sys/fs/aufs/si_2344a8ac4c6c6e55/br5:/mnt/53f8...076f=ro+wh

/sys/fs/aufs/si_2344a8ac4c6c6e55/br6:/mnt/5111...c158=ro+wh

dockerhost#dockerinspect--format{{.Image}}c7af

b39b81afc8cae27d6fc7ea89584bad5e0ba792127597d02425eaee9f3aaaa462

dockerhost#dockerhistory-qb39b

b39b81afc8ca

615c102e2290

837339b91538

53f858aaaf03

511136ea3c5a

35 / 71](https://image.slidesharecdn.com/dockerstoragedrivers-150319174627-conversion-gate01/85/Docker-storage-drivers-by-Jerome-Petazzoni-35-320.jpg)
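The relationships in the aufs example can be cross-checked in code. The following Python sketch is illustrative only: it parses text copied from the slide (with its `...` elisions kept) rather than reading a live host, and `sysfs_dir`, `parse_branches`, `image_prefixes`, and `matches_history` are hypothetical helpers. It derives the sysfs directory from the `si=` mount option, turns each `brN:<directory>=<permission>` line into a tuple, and checks that the read-only image branches below `br0` (writable) and `br1` (`-init`) appear in the same top-to-bottom order as `docker history -q`.

```python
# Sketch: cross-check the aufs branches of container c7af against its image history.
# All inputs are copied from the slide output, "..." elisions included.
from typing import List, Tuple

PROC_MOUNTS_LINE = "none /mnt/.../c7af...a63d aufs rw,relatime,si=2344a8ac4c6c6e55 0 0"

BRANCH_LINES = """\
/sys/fs/aufs/si_2344a8ac4c6c6e55/br0:/mnt/c7af...a63d=rw
/sys/fs/aufs/si_2344a8ac4c6c6e55/br1:/mnt/c7af...a63d-init=ro+wh
/sys/fs/aufs/si_2344a8ac4c6c6e55/br2:/mnt/b39b...a462=ro+wh
/sys/fs/aufs/si_2344a8ac4c6c6e55/br3:/mnt/615c...520e=ro+wh
/sys/fs/aufs/si_2344a8ac4c6c6e55/br4:/mnt/8373...cea2=ro+wh
/sys/fs/aufs/si_2344a8ac4c6c6e55/br5:/mnt/53f8...076f=ro+wh
/sys/fs/aufs/si_2344a8ac4c6c6e55/br6:/mnt/5111...c158=ro+wh
"""

IMAGE_ID = "b39b81afc8cae27d6fc7ea89584bad5e0ba792127597d02425eaee9f3aaaa462"
HISTORY = ["b39b81afc8ca", "615c102e2290", "837339b91538", "53f858aaaf03", "511136ea3c5a"]

Branch = Tuple[int, str, str]  # (branch index, directory, permission)


def sysfs_dir(mount_line: str) -> str:
    """Map the si=... option of an aufs mount to its /sys/fs/aufs directory."""
    options = mount_line.split()[3].split(",")  # 4th field holds mount options
    si = next(opt[len("si="):] for opt in options if opt.startswith("si="))
    return f"/sys/fs/aufs/si_{si}"


def parse_branches(text: str) -> List[Branch]:
    """Parse `grep .` output lines of the form <file>:<directory>=<permission>."""
    branches = []
    for line in text.splitlines():
        filename, _, value = line.partition(":")
        index = int(filename.rsplit("/br", 1)[1])
        directory, _, perm = value.rpartition("=")
        branches.append((index, directory, perm))
    return sorted(branches)  # br0 (topmost) first


def image_prefixes(branches: List[Branch]) -> List[str]:
    """Short ID prefixes of the read-only image branches, top to bottom."""
    names = [d.rsplit("/", 1)[1] for _, d, perm in branches if perm != "rw"]
    return [n.split("...")[0] for n in names if not n.endswith("-init")]


def matches_history(prefixes: List[str], history: List[str]) -> bool:
    """True if each branch prefix matches the history entry at the same position."""
    return len(prefixes) == len(history) and all(
        h.startswith(p) for p, h in zip(prefixes, history)
    )


branches = parse_branches(BRANCH_LINES)
prefixes = image_prefixes(branches)
head, tail = branches[2][1].rsplit("/", 1)[1].split("...")  # "b39b", "a462"
print(sysfs_dir(PROC_MOUNTS_LINE))  # /sys/fs/aufs/si_2344a8ac4c6c6e55
print(prefixes, matches_history(prefixes, HISTORY))  # ['b39b', '615c', '8373', '53f8', '5111'] True
print(IMAGE_ID.startswith(head) and IMAGE_ID.endswith(tail))  # True
```

On a real host the same helpers could, in principle, be fed the contents of `/proc/mounts` and of the `br*` files, assuming the aufs driver is in use and exposes them as shown on the slide.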