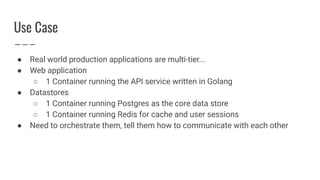

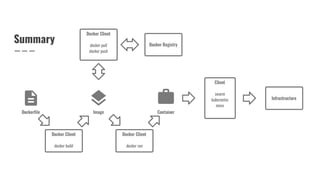

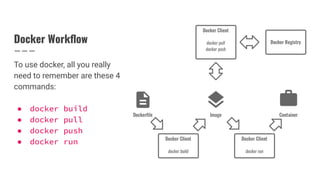

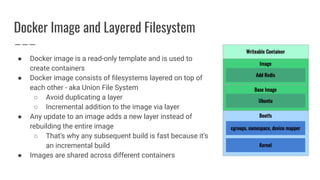

Docker is a tool for packaging applications into containers so that they run consistently across environments. Its key benefits are lightweight containers, isolation, and portability. The typical workflow is to build images (or pull pre-built ones), push them to a registry, and run containers from them. Docker uses a layered filesystem, which lets images share and cache common layers so that builds and container startup stay efficient. Multiple related containers can be run together with Docker Compose, or orchestrated with Kubernetes.
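The build, push, pull, and run cycle described above can be sketched as a small shell script. This is only an illustration under stated assumptions: the image name `myorg/myapp:1.0`, the container name `myapp`, and the port mapping are hypothetical, and a `Dockerfile` is assumed to exist in the current directory. The script defaults to a dry run that only prints each command, so it can be read and tried without a Docker daemon.

```shell
#!/bin/sh
# Sketch of the Docker workflow: build, inspect layers, push, pull, run.
# Hypothetical names (not from the slides): image myorg/myapp:1.0,
# container "myapp", host port 8080 mapped to container port 80.

DRY_RUN="${DRY_RUN:-1}"              # 1 = only print commands, 0 = execute them
IMAGE="${IMAGE:-myorg/myapp:1.0}"

# Print a command, and execute it only when DRY_RUN=0.
run() {
    printf '%s\n' "+ $*"
    if [ "$DRY_RUN" = "0" ]; then
        "$@"
    fi
}

docker_workflow() {
    # Build an image from ./Dockerfile and tag it.
    run docker build -t "$IMAGE" . &&
    # Show the layers that make up the image.
    run docker history "$IMAGE" &&
    # Publish the image to a registry (assumes a prior "docker login").
    run docker push "$IMAGE" &&
    # Fetch the image, for example on another host.
    run docker pull "$IMAGE" &&
    # Start a detached container from the image.
    run docker run -d --name myapp -p 8080:80 "$IMAGE"
}

docker_workflow
```

Running the script with `DRY_RUN=0` executes the commands for real. The steps are chained with `&&` so that a failed build stops the script before anything is pushed.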

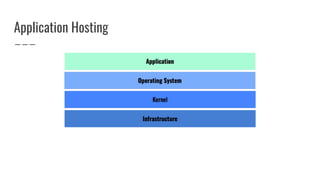

![Tip 5: Useful Docker Commands for Housekeeping](https://image.slidesharecdn.com/dockerprimerandtips-200122032935/85/Docker-primer-and-tips-37-320.jpg)

    $ docker ps -a             # List all containers, including stopped ones
    $ # Remove all exited containers
    $ docker rm $(docker ps -q -f status=exited)
    $ docker stats             # Live stream of container resource usage
    $ docker image ls          # List all local images
    $ docker rmi [image_name]  # Remove a specific image
    $ docker image prune -a    # Remove all images not used by any container
    $ # Delete all stopped containers, all unused images (not only dangling ones),
    $ # unused networks, unused volumes, and build cache
    $ docker system prune -a --volumes