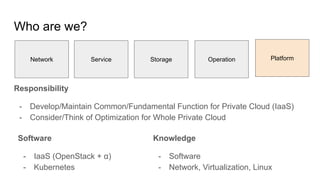

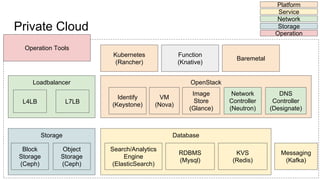

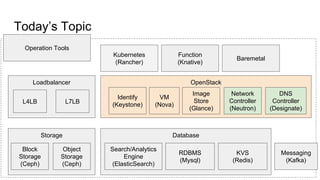

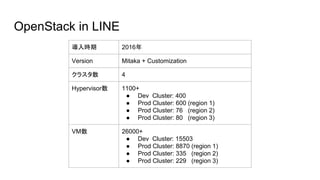

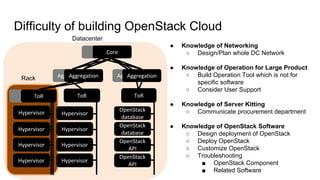

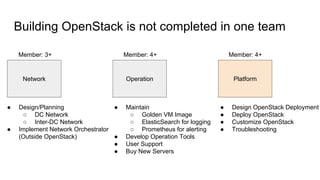

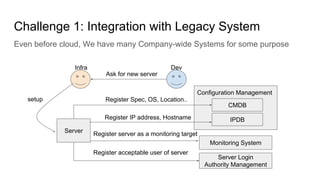

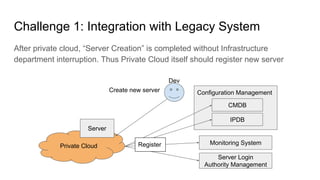

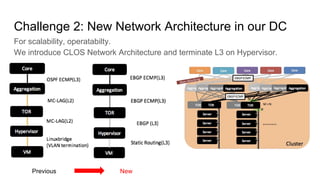

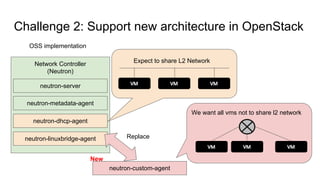

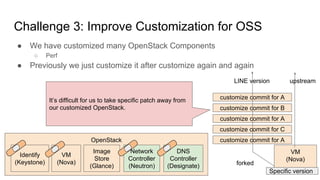

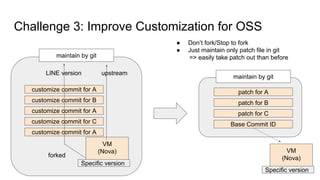

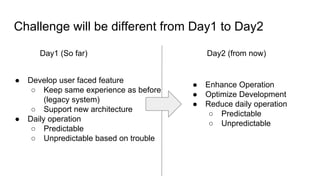

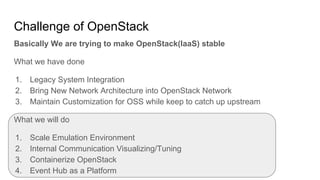

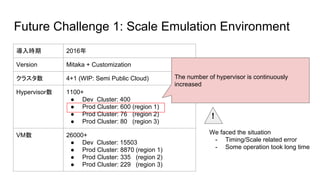

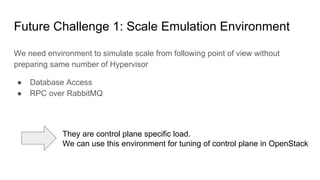

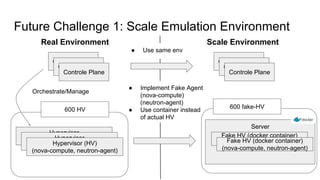

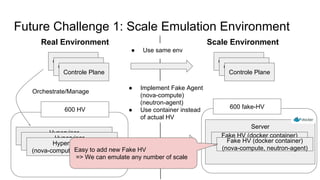

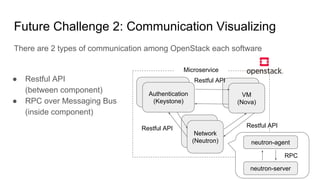

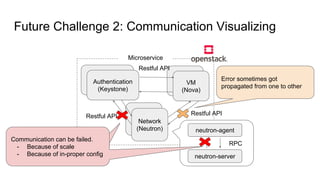

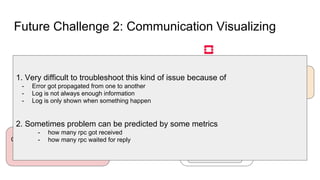

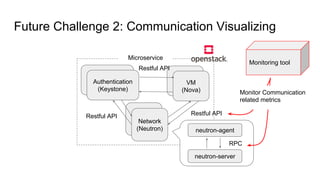

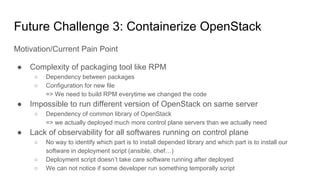

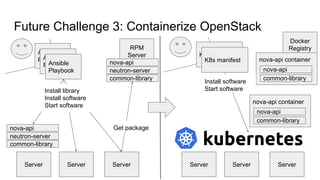

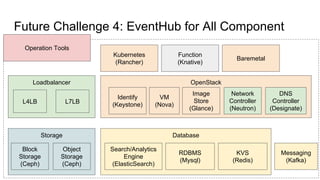

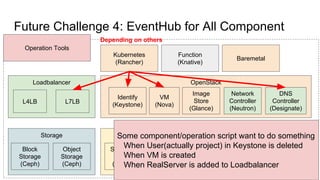

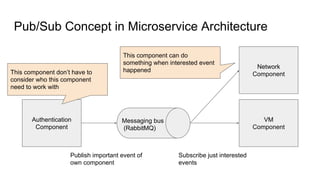

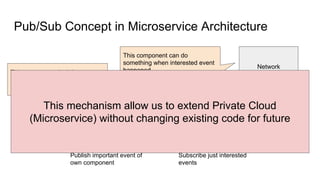

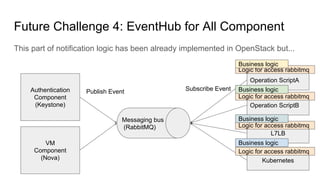

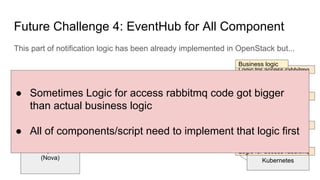

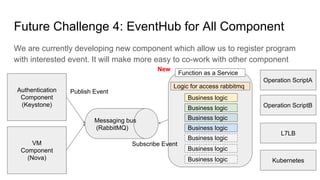

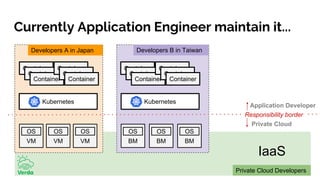

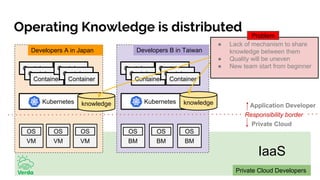

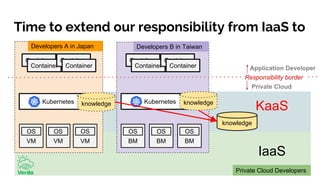

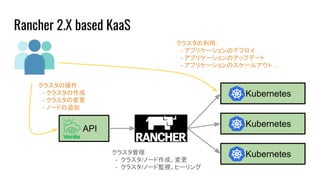

The document discusses the implementation and challenges of OpenStack in a private cloud environment, focusing on the responsibilities involved in developing and maintaining the infrastructure as a service (IaaS) layer. It highlights the various components, customization efforts, and future plans, such as scaling, enhancing operations tools, and containerizing OpenStack. The aim is to achieve a stable and efficient cloud infrastructure while addressing integration with legacy systems and a new network architecture.