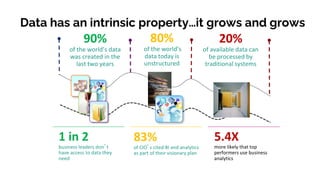

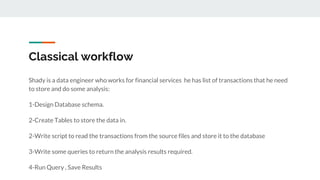

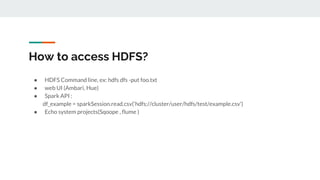

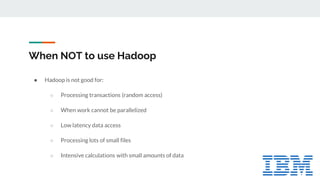

This document introduces big data and the technologies built to handle it. It defines big data as datasets too large to process with traditional methods, and attributes the field's rise to massive growth in data volume and variety. Hadoop and Spark were developed to process such data across clusters of commodity servers: Hadoop stores data in HDFS and processes it with MapReduce, while Spark improves on MapReduce through resilient distributed datasets (RDDs) and lazy evaluation. The document also outlines big data use cases and projects in radio astronomy, particle physics, and engine sensor data, and discusses when Hadoop and Spark are suitable choices.
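To make the MapReduce and lazy-evaluation ideas concrete, here is a minimal single-process sketch in plain Python. It is not Hadoop or Spark code: `map_reduce`, `LazyDataset`, `word_pairs`, and `traced_square` are hypothetical names invented for this illustration, and the sample input is made up. The first part imitates the map, shuffle, and reduce phases on a word count; the second shows how transformations can be recorded and deferred until an action asks for results, which is roughly the behaviour an RDD provides.

```python
from collections import defaultdict
from functools import reduce


def map_reduce(records, mapper, reducer):
    """Single-process imitation of the map, shuffle, and reduce phases."""
    groups = defaultdict(list)
    for record in records:
        # Map: each record yields zero or more (key, value) pairs.
        for key, value in mapper(record):
            # Shuffle: group values by key.
            groups[key].append(value)
    # Reduce: fold each key's values into a single result.
    return {key: reduce(reducer, values) for key, values in groups.items()}


class LazyDataset:
    """Toy stand-in for an RDD: transformations are recorded, not executed."""

    def __init__(self, source, steps=()):
        self.source = source
        self.steps = steps

    def map(self, fn):
        return LazyDataset(self.source, self.steps + (("map", fn),))

    def filter(self, fn):
        return LazyDataset(self.source, self.steps + (("filter", fn),))

    def collect(self):
        # Action: only now is the recorded pipeline applied to the source.
        items = iter(self.source)
        for kind, fn in self.steps:
            items = map(fn, items) if kind == "map" else filter(fn, items)
        return list(items)


def word_pairs(line):
    """Mapper for word count: emit (word, 1) for every word in the line."""
    return [(word, 1) for word in line.split()]


# Word count in the MapReduce style (illustrative input).
lines = ["big data big clusters", "data on clusters"]
counts = map_reduce(lines, word_pairs, lambda a, b: a + b)

# Lazy evaluation: record every call so we can see when work really happens.
calls = []


def traced_square(x):
    calls.append(x)
    return x * x


pipeline = LazyDataset(range(5)).map(traced_square).filter(lambda x: x % 2 == 0)
calls_before_action = len(calls)  # still 0: nothing has run yet
result = pipeline.collect()       # the action triggers the computation

print(counts)
print(calls_before_action, result)
```

In a real cluster the map and reduce phases run in parallel on many machines and the shuffle moves data over the network; the sketch keeps only the logical structure.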