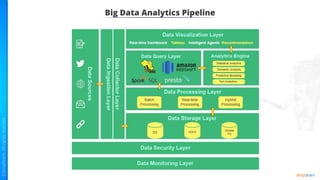

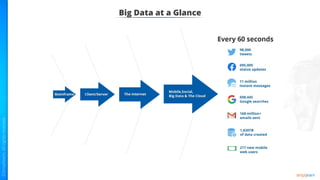

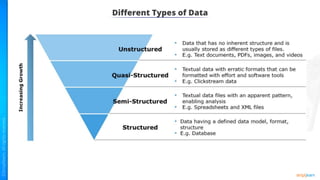

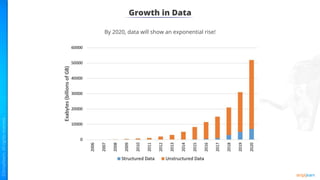

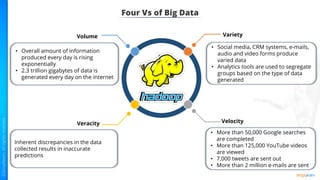

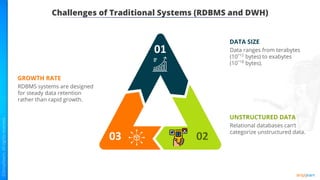

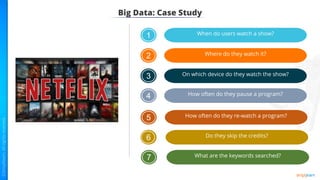

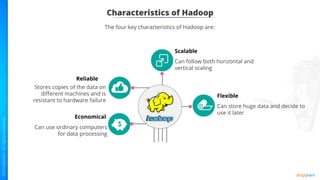

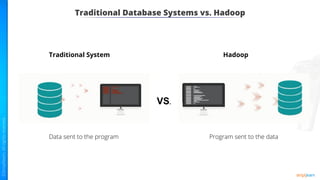

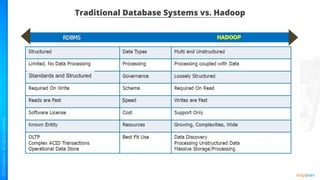

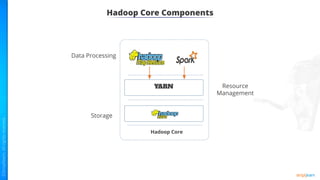

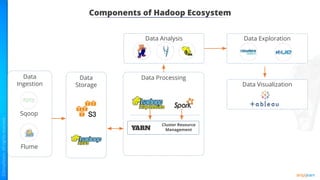

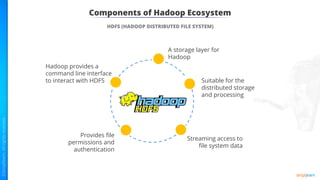

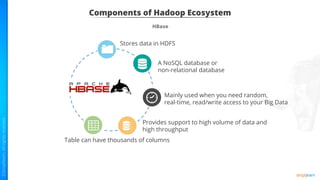

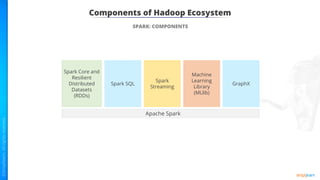

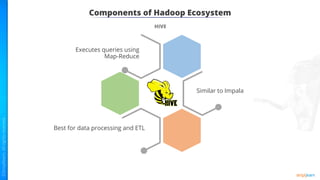

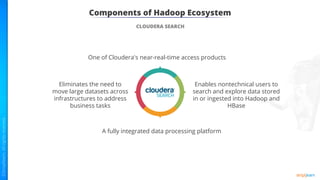

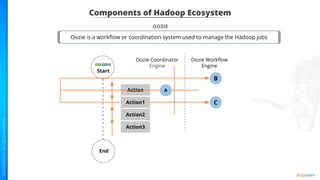

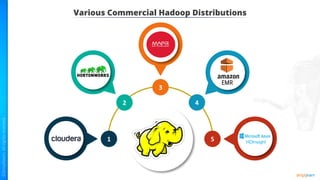

This document introduces big data and Hadoop. It first describes big data in terms of the four V's: volume, variety, velocity, and veracity. It then explains how Hadoop addresses big data challenges through its core components. Finally, it describes the components of the Hadoop ecosystem, such as HDFS, HBase, Sqoop, Flume, Spark, MapReduce, Pig, and Hive. After reading it, the reader should be able to describe big data concepts, explain how Hadoop addresses big data challenges, and describe the components of the Hadoop ecosystem.
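
As a rough illustration of the MapReduce model listed among the ecosystem components, the sketch below counts words in plain Python, entirely in memory. It does not use Hadoop or any Hadoop API. The function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) and the sample input are assumptions made for illustration only.

```python
from collections import defaultdict


def map_phase(lines):
    """Emit a (word, 1) pair for every word in every input line."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def shuffle_phase(pairs):
    """Group values by key, mimicking the step between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Sum the counts collected for each word."""
    return {word: sum(counts) for word, counts in grouped.items()}


def word_count(lines):
    """Run the three phases in order on an in-memory list of lines."""
    return reduce_phase(shuffle_phase(map_phase(lines)))


if __name__ == "__main__":
    # Hypothetical sample input, not taken from the slides.
    sample = ["big data needs big tools", "Hadoop stores big data"]
    print(word_count(sample))
```

In a real cluster the map and reduce steps would run in parallel on many machines over data stored in a distributed file system such as HDFS; this single-process version only shows the shape of the computation.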