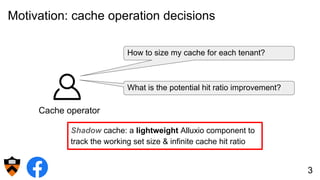

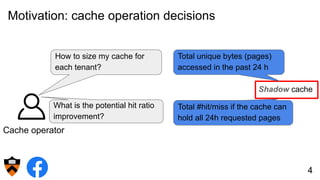

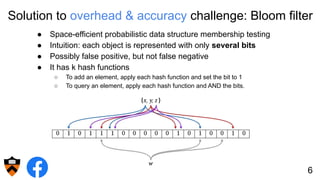

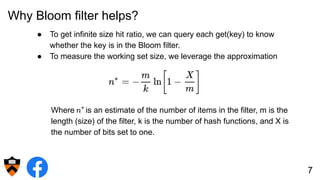

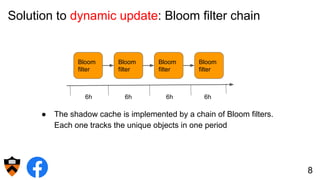

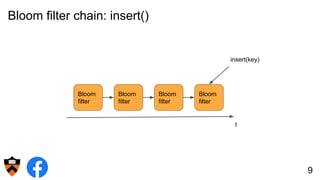

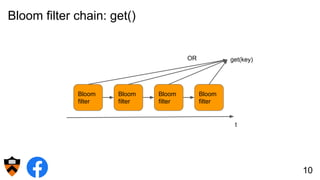

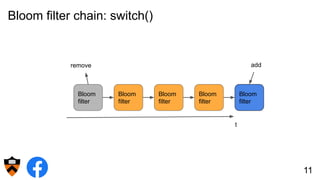

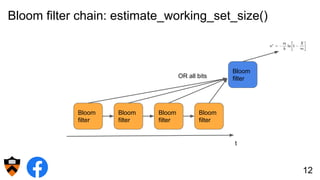

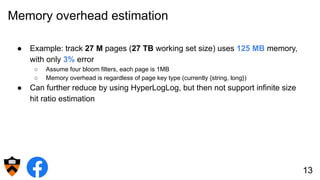

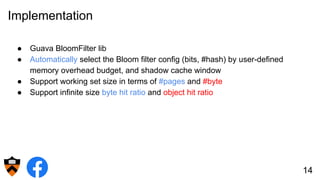

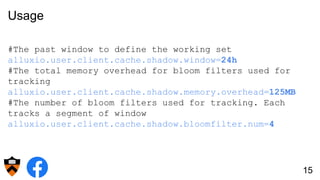

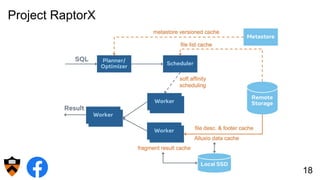

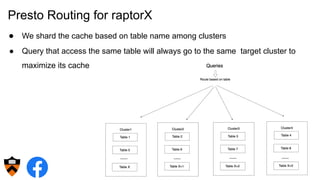

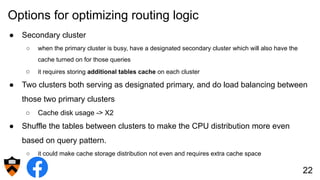

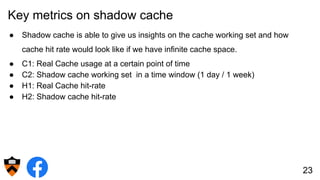

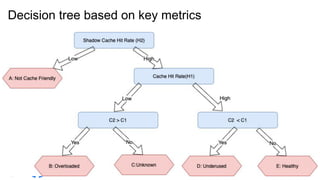

The document discusses the design and implementation of a shadow cache, a lightweight Alluxio component that tracks the working set size and the infinite cache hit ratio, that is, the hit ratio a cache of unlimited capacity would achieve. It uses a chain of Bloom filters to track recently accessed items in bounded memory, addressing challenges such as memory overhead and accuracy under dynamic updates. The project also explores optimizing cache utilization and routing algorithms in Facebook's environment to improve query performance.
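
To make the chain-of-Bloom-filters idea in the summary more concrete, here is a minimal Python sketch. It is not Alluxio's implementation, and every name in it (`BloomFilter`, `ShadowCache`, `access`, `rotate`, `working_set_items`) is hypothetical. It assumes a fixed-length chain in which each filter covers one time segment, that the caller decides when a segment ends, and that the working set is counted in distinct items rather than bytes. The item count is estimated from the number of set bits in the bitwise OR of all filters, using the standard Bloom filter cardinality estimate.

```python
import hashlib
import math


class BloomFilter:
    """Plain Bloom filter; a Python int serves as the bit array."""

    def __init__(self, num_bits: int, num_hashes: int):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = 0

    def _positions(self, key: str):
        # Double hashing: derive all bit positions from one SHA-256 digest.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1  # force an odd stride
        return [(h1 + i * h2) % self.num_bits for i in range(self.num_hashes)]

    def add(self, key: str) -> None:
        for pos in self._positions(key):
            self.bits |= 1 << pos

    def __contains__(self, key: str) -> bool:
        return all((self.bits >> pos) & 1 for pos in self._positions(key))


class ShadowCache:
    """Hypothetical shadow cache: a chain of Bloom filters, newest first."""

    def __init__(self, num_filters: int = 4, num_bits: int = 1 << 16,
                 num_hashes: int = 4):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.chain = [BloomFilter(num_bits, num_hashes)
                      for _ in range(num_filters)]
        self.reads = 0
        self.hits = 0

    def access(self, key: str) -> bool:
        """Record one read; return True if the key was seen in the window."""
        self.reads += 1
        hit = any(key in bloom for bloom in self.chain)
        if hit:
            self.hits += 1
        self.chain[0].add(key)  # always refresh the newest segment
        return hit

    def rotate(self) -> None:
        """End the current segment: drop the oldest filter, add an empty one."""
        self.chain.pop()
        self.chain.insert(0, BloomFilter(self.num_bits, self.num_hashes))

    def working_set_items(self) -> float:
        """Estimate distinct items in the window from the OR of all filters."""
        union = 0
        for bloom in self.chain:
            union |= bloom.bits
        set_bits = bin(union).count("1")
        if set_bits >= self.num_bits:
            return float("inf")  # filter saturated, estimate is meaningless
        fill = set_bits / self.num_bits
        return -(self.num_bits / self.num_hashes) * math.log(1.0 - fill)

    def hit_ratio(self) -> float:
        """Hit ratio an unbounded cache would have had over the reads seen."""
        return self.hits / self.reads if self.reads else 0.0
```

In this sketch, calling `rotate()` once per segment ages out old accesses without deleting individual keys, which a single Bloom filter cannot do. The trade-off is that memory grows with the number of filters in the chain, and false positives in any filter can slightly inflate the reported hit ratio.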