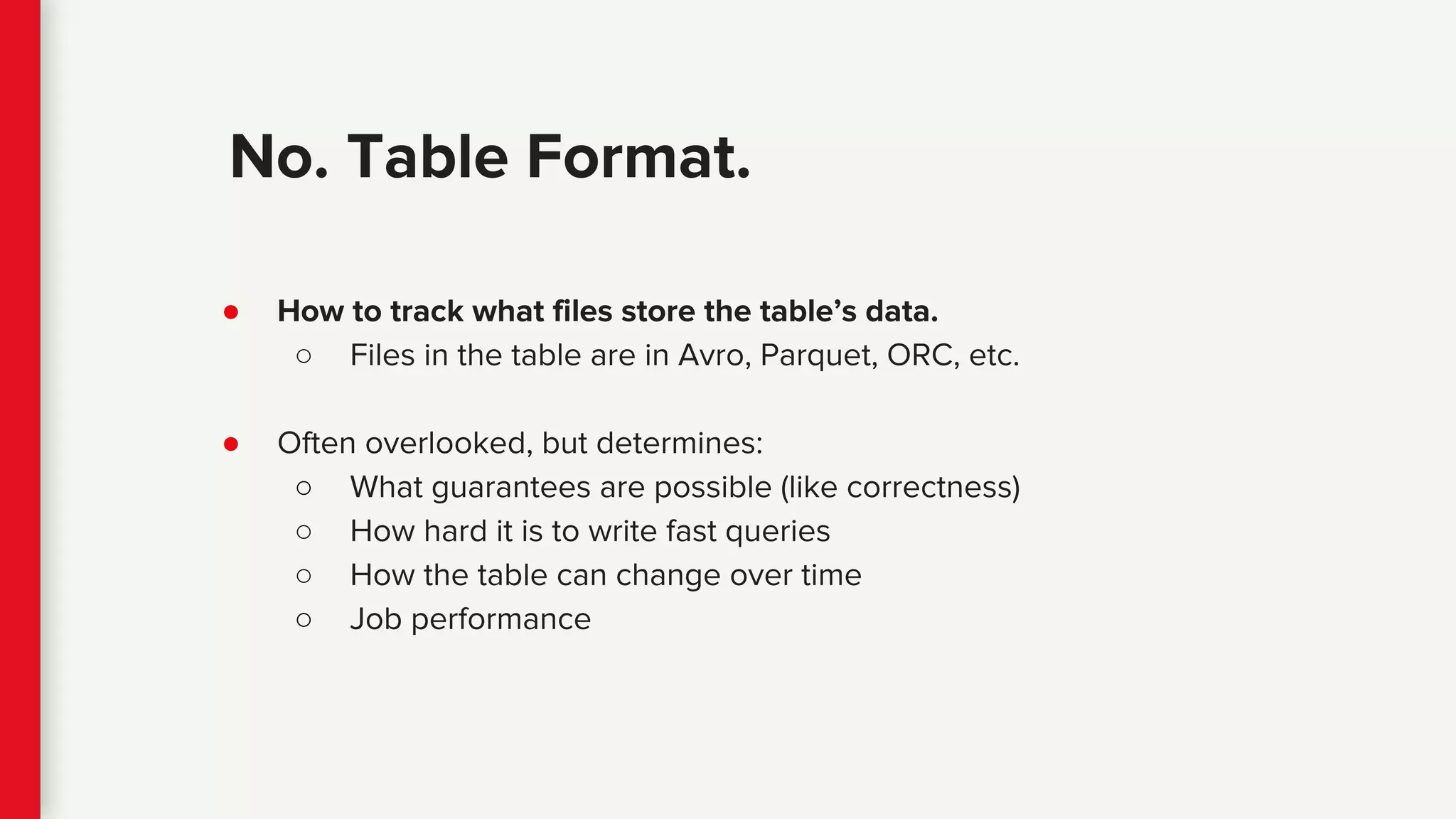

The document discusses Iceberg, a modern table format for big data, and its advantages over traditional Hive tables in performance and usability for large datasets such as those at Netflix. It covers Iceberg's snapshot isolation, atomic operations, and schema evolution, contrasting each with the limitations of Hive tables. It closes with practical guidance on getting started with Iceberg, including supported engines and community resources.

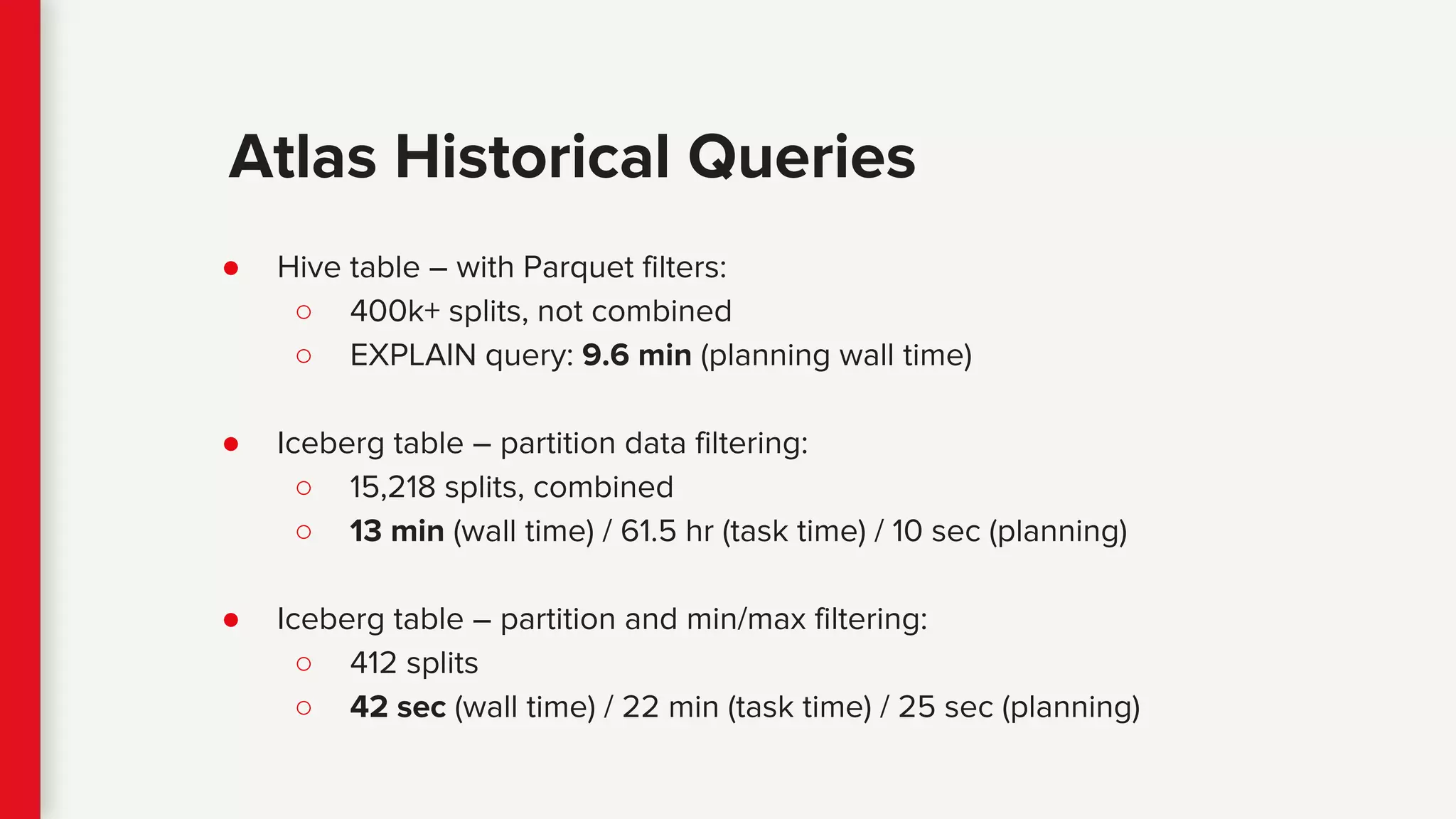

![Case Study: Netflix Atlas](https://image.slidesharecdn.com/stratany2018iceberg-180917162355/75/Iceberg-A-modern-table-format-for-big-data-Strata-NY-2018-4-2048.jpg)

- Historical Atlas data:
  - Time-series metrics from Netflix runtime systems
  - 1 month: 2.7 million files in 2,688 partitions
  - Problem: cannot process more than a few days of data
- Sample query:

      select distinct tags['type'] as type
      from iceberg.atlas
      where
        name = 'metric-name' and
        date > 20180222 and date <= 20180228
      order by type;
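To give a feel for the scale behind the Atlas figures, the following is a rough back-of-the-envelope sketch of how many partitions and files the sample query's date range might touch. The file and partition counts come from the slide. The 28-day month and the even spread of data across days are assumptions made only for illustration, and the `scan_estimate` helper is hypothetical, not part of Iceberg or Atlas.

```python
# Figures taken from the slide.
FILES_PER_MONTH = 2_700_000
PARTITIONS_PER_MONTH = 2_688

# Assumption (not stated in the source): the month has 28 days and
# partitions and files are spread evenly across those days.
DAYS_IN_MONTH = 28


def scan_estimate(days_queried: int) -> tuple[int, int]:
    """Estimate (partitions, files) touched by a query over `days_queried` days."""
    partitions_per_day = PARTITIONS_PER_MONTH / DAYS_IN_MONTH
    files_per_partition = FILES_PER_MONTH / PARTITIONS_PER_MONTH
    partitions = round(partitions_per_day * days_queried)
    files = round(partitions * files_per_partition)
    return partitions, files


# The sample predicate `date > 20180222 and date <= 20180228`
# covers 6 days (20180223 through 20180228).
partitions, files = scan_estimate(6)
print(f"partitions: {partitions}, files: {files}")
```

Under these assumptions, even a six-day query would have to consider several hundred partitions and several hundred thousand files, which is consistent with the slide's point that only a few days of data could be processed at a time.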