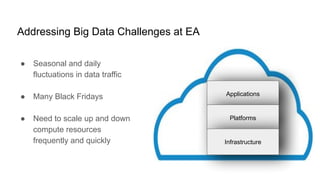

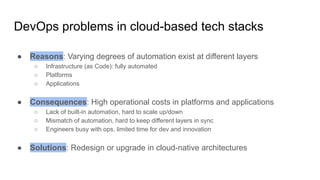

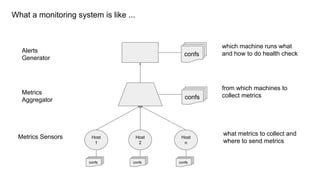

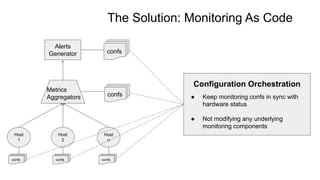

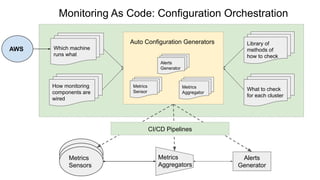

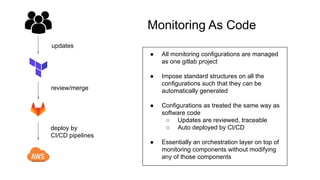

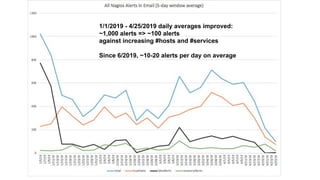

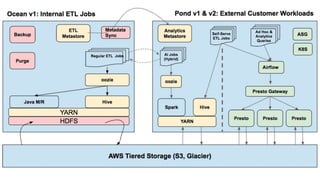

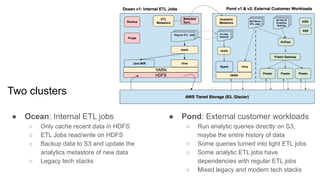

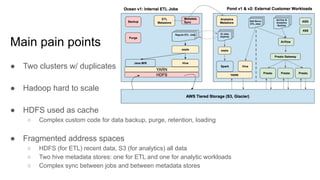

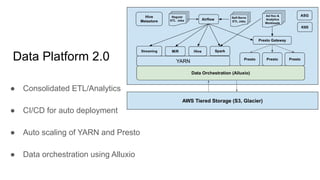

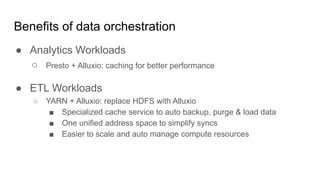

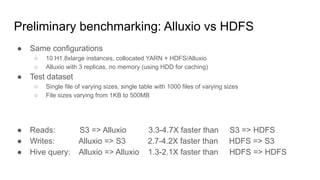

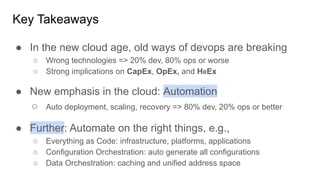

The document discusses strategies for developing and operating cloud-native data platforms and applications, highlighting challenges such as fluctuating data traffic and operational inefficiencies in DevOps. It proposes solutions such as monitoring as code and data orchestration with Alluxio to improve performance and simplify management. The key takeaways emphasize the shift toward automation in cloud computing, with the aim of more efficient development and operations workflows.