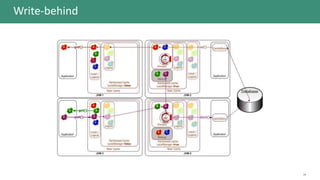

This document covers the cache-aside pattern for cloud applications and its role in reducing resource usage and improving application performance. Key topics include strategies for keeping cached data consistent with the underlying data store, related access patterns such as read-through and write-through, and the expiration policies and eviction strategies that govern how long data stays cached. It also covers when the pattern applies and presents example implementations using technologies such as Redis and Spring.
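As a rough illustration of the pattern summarized above, the following is a minimal in-memory sketch, not the implementation the document itself presents. The class name `CacheAside`, the `load` and `store` callbacks, and the `ttl_seconds` and `clock` parameters are all hypothetical names chosen for this example; a real deployment would typically put a cache such as Redis where the dictionary is.

```python
import time
from typing import Callable, Dict, Tuple


class CacheAside:
    """Hypothetical cache-aside helper backed by a plain dict with a per-entry TTL."""

    def __init__(
        self,
        load: Callable[[str], str],          # assumed: reads a value from the data store
        store: Callable[[str, str], None],   # assumed: writes a value to the data store
        ttl_seconds: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._load = load
        self._store = store
        self._ttl = ttl_seconds
        self._clock = clock
        # key -> (value, expiry timestamp)
        self._entries: Dict[str, Tuple[str, float]] = {}

    def get(self, key: str) -> str:
        """Return the cached value, loading it from the store on a miss or expiry."""
        entry = self._entries.get(key)
        if entry is not None:
            value, expires_at = entry
            if self._clock() < expires_at:
                return value              # cache hit
            del self._entries[key]        # entry expired, treat as a miss
        value = self._load(key)           # cache miss: read from the data store
        self._entries[key] = (value, self._clock() + self._ttl)
        return value

    def put(self, key: str, value: str) -> None:
        """Write to the data store first, then invalidate the cached copy."""
        self._store(key, value)
        self._entries.pop(key, None)
```

In this sketch the application code, not the cache, decides when to read from and write to the data store, which is what distinguishes cache-aside from read-through and write-through caching. Invalidating on write rather than updating the cached value is one common choice for limiting stale reads; the TTL bounds how long any remaining stale entry can survive.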