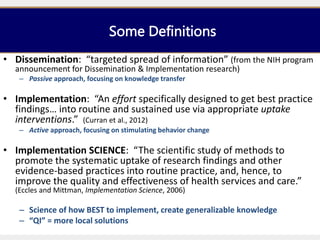

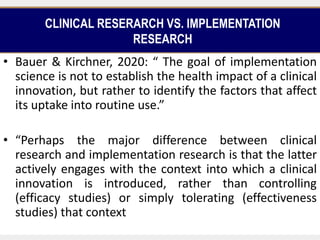

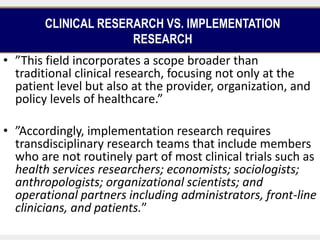

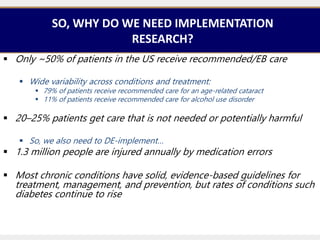

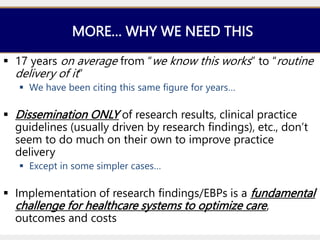

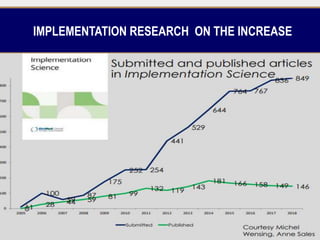

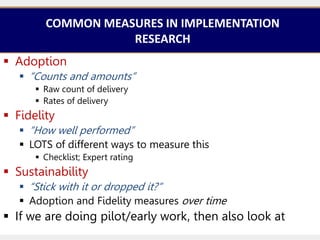

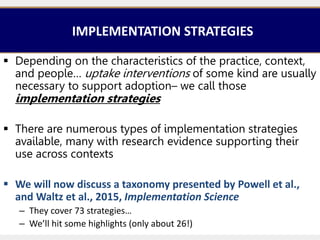

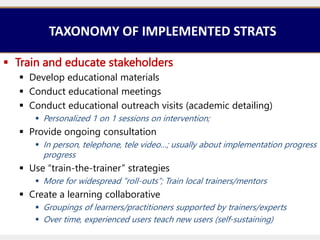

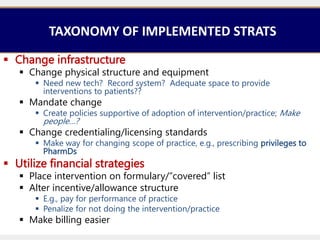

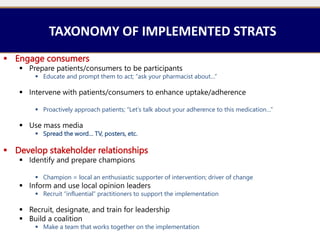

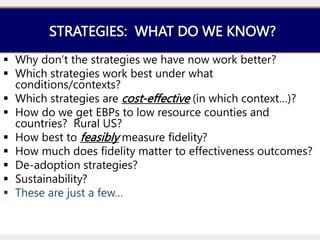

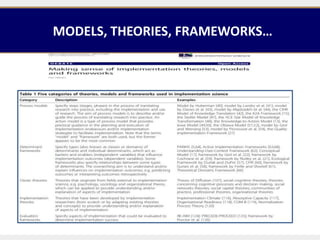

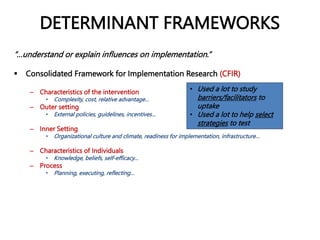

This document provides an overview of implementation research. It defines key terms such as implementation, implementation science, and dissemination, and it discusses how implementation research differs from clinical research: rather than simply establishing health impacts, implementation research aims to identify the factors that affect the uptake of evidence-based practices into routine use. The document also outlines important outcomes measured in implementation research, such as adoption, fidelity, and sustainability. It presents common implementation strategies drawn from a taxonomy, including training, reminders, and the use of champions, and briefly mentions determinant frameworks for understanding influences on implementation.