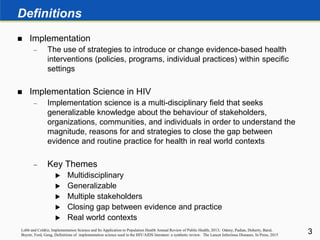

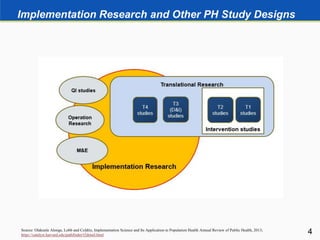

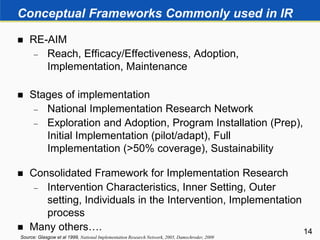

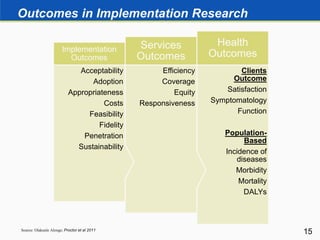

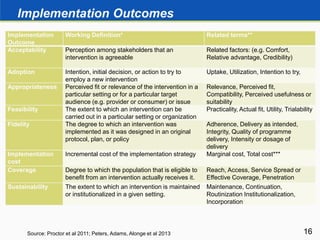

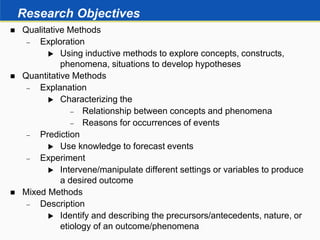

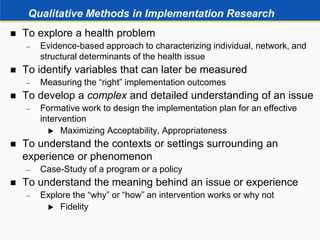

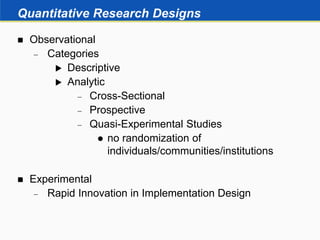

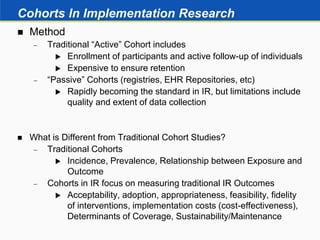

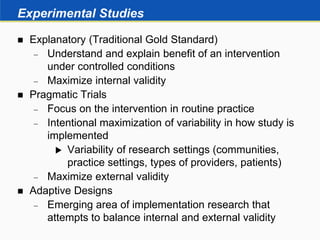

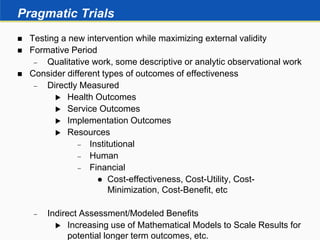

This document provides an overview of implementation research, which it defines as the use of strategies to introduce or change evidence-based health interventions in real-world contexts. Implementation research is a multidisciplinary field that seeks to understand and close the gap between evidence and practice. The document discusses the conceptual frameworks, methods, outcomes, and evidence used in implementation research, and describes the qualitative and quantitative research designs available, including descriptive, analytic, experimental, and mixed-methods approaches.