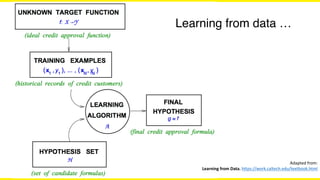

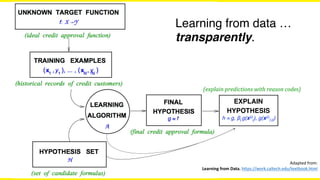

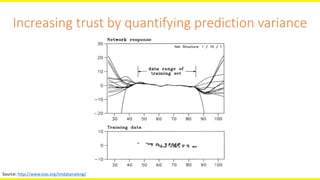

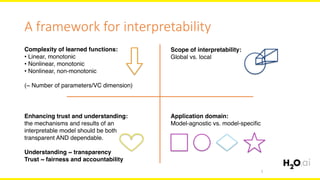

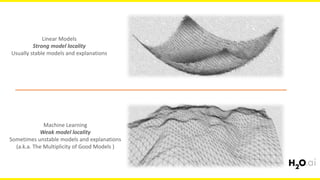

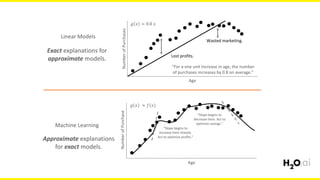

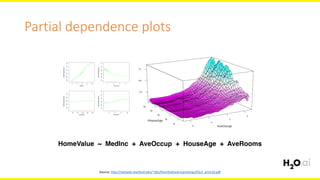

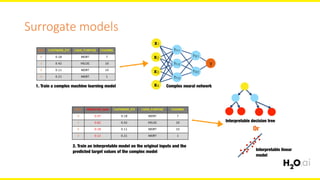

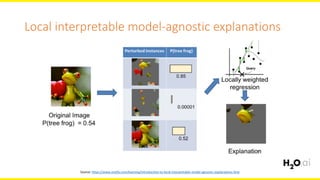

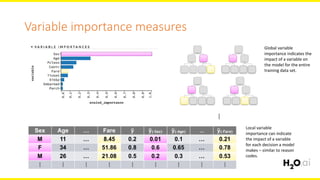

The document surveys approaches to machine learning interpretability, emphasizing the importance of transparency, trust, and fairness in model predictions. It covers the complexity of learned functions, the distinction between model-specific and model-agnostic techniques, and the interpretability challenges of linear and nonlinear models. Key concepts include global vs. local interpretability, variable importance measures, and methods for explaining predictions so that users can better understand them and make decisions.